With Vertex AI Model Garden, Google Cloud aims to provide highly efficient, cost-optimized ML workflow recipes. Model Garden currently offers more than 150 first-party, open, and third-party foundation models. Last year, Google introduced the popular open-source LLM serving stack vLLM on GPUs in Vertex AI Model Garden, and serving deployments have grown rapidly since then. Google now presents Hex-LLM, High-Efficiency LLM Serving with XLA on TPUs, in Vertex AI Model Garden.

Hex-LLM is Vertex AI’s in-house LLM serving framework, designed and optimised for Google Cloud TPU hardware, which is available as part of AI Hypercomputer. It combines state-of-the-art LLM serving techniques, such as continuous batching and paged attention, with in-house XLA/TPU-specific optimisations, making it Google’s latest high-efficiency, low-cost LLM serving solution on TPU for open-source models. Hex-LLM can now be used in Vertex AI Model Garden through the playground, one-click deployment, or a notebook. Google is eager to learn how Hex-LLM and Cloud TPUs can help your LLM serving workflows.

Design and benchmarks

Inspired by several popular open-source projects, such as vLLM and FlashAttention, Hex-LLM combines the most recent LLM serving technology with custom optimisations made specifically for XLA/TPU.

Hex-LLM’s primary optimisations consist of:

- A token-based continuous batching algorithm that maximises memory utilisation for KV caching.

- A complete rewrite of the PagedAttention kernel, optimised for XLA/TPU.

- Flexible and composable data parallelism and tensor parallelism strategies, with special weight-sharding optimisations, to run large models efficiently across multiple TPU chips.
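
To make the first of these ideas concrete, here is a minimal, illustrative sketch of token-based continuous batching in plain Python. It is not Hex-LLM’s implementation: the `Request` class, the `run_continuous_batching` function, and the `token_budget` parameter are hypothetical names, and the model itself is replaced by a counter. It only shows the scheduling pattern, in which finished sequences leave the batch after every decoding step and queued requests are admitted as soon as the KV-cache token budget allows.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical request: prompt length and number of tokens to generate."""
    name: str
    prompt_tokens: int
    max_new_tokens: int
    generated: int = 0

    @property
    def reserved_tokens(self) -> int:
        # Worst-case KV-cache footprint: the prompt plus every token it may generate.
        return self.prompt_tokens + self.max_new_tokens


def run_continuous_batching(requests, token_budget):
    """Simulate decoding steps; return the step at which each request finishes."""
    waiting = deque(requests)
    running = []
    finished_at = {}
    step = 0
    while waiting or running:
        # Admit queued requests in FIFO order while the token budget allows.
        used = sum(r.reserved_tokens for r in running)
        while waiting and used + waiting[0].reserved_tokens <= token_budget:
            req = waiting.popleft()
            used += req.reserved_tokens
            running.append(req)
        if not running:
            raise ValueError(f"{waiting[0].name} does not fit in the token budget")
        # One decoding step: every running sequence produces one token.
        step += 1
        for req in running:
            req.generated += 1
            if req.generated >= req.max_new_tokens:
                finished_at[req.name] = step
        # Retire finished sequences immediately so their budget is freed.
        running = [r for r in running if r.generated < r.max_new_tokens]
    return finished_at


if __name__ == "__main__":
    demo = [Request("a", 4, 2), Request("b", 4, 6), Request("c", 4, 3)]
    print(run_continuous_batching(demo, token_budget=20))
```

In this toy run, request `c` does not fit alongside `a` and `b` at first, but it joins the batch as soon as `a` finishes rather than waiting for `b` as well. A real server would track KV-cache pages rather than a single worst-case reservation per request.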

Furthermore, Hex-LLM supports a wide range of popular dense and sparse LLMs, such as:

- Gemma 2B and 7B

- Gemma 2 9B and 27B

- Llama 2 7B, 13B, and 70B

- Llama 3 8B and 70B

- Mistral 7B and Mixtral 8x7B

As the LLM field continues to evolve, Google is committed to bringing more advanced techniques and the latest foundation models to Hex-LLM.

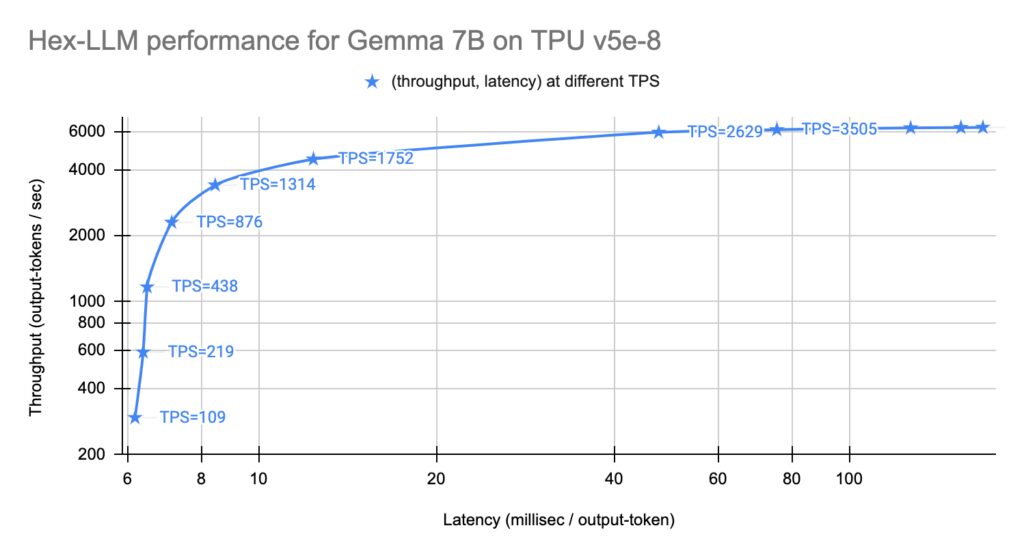

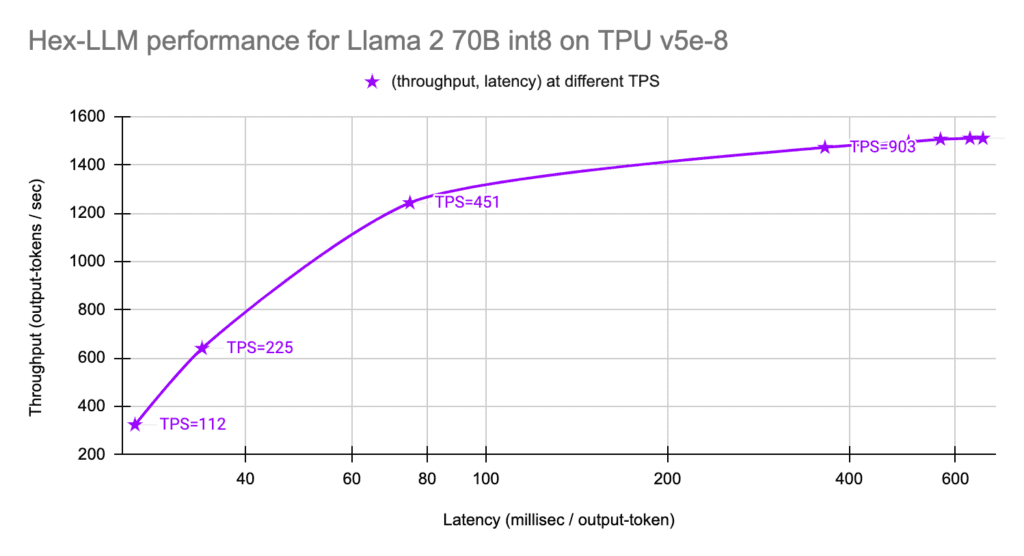

Hex-LLM delivers competitive performance, with high throughput and low latency. The metrics measured in Google’s benchmark experiments are defined as follows:

- TPS (tokens per second) is the average number of tokens an LLM server receives per second. Just as QPS (queries per second) measures the traffic of a generic server, TPS measures the traffic of an LLM server, but at a finer granularity.

- Throughput measures how many tokens the server can generate over a given period of time at a given TPS. It is a key indicator of the system’s capacity to handle many concurrent requests.

- Latency measures the average time to generate one output token at a given TPS. It estimates the end-to-end time spent on the server side for each request, including all queueing and processing time.

Note that there is usually a trade-off between high throughput and low latency. As TPS increases, both throughput and latency increase. Beyond a certain TPS, throughput saturates while latency keeps rising. For any given TPS, one can therefore measure a pair of throughput and latency values for the server, and plotting throughput against latency across different TPS levels gives an accurate picture of the LLM server’s performance.
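
These definitions can be turned into a small calculation. The sketch below assumes a hypothetical per-request log (`RequestRecord`, with arrival and completion times in seconds plus input and output token counts); none of these names come from Google’s benchmark harness. It also makes two interpretive assumptions: TPS counts the input tokens received over the measurement window, and latency is the mean over requests of end-to-end time divided by output tokens.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """Hypothetical server-side log entry for one request (times in seconds)."""
    arrival: float
    completion: float
    input_tokens: int
    output_tokens: int


def summarize(records):
    """Return (tps, throughput, latency_ms_per_output_token) for one benchmark run.

    Assumes at least one record, a non-zero measurement window, and
    at least one output token per request.
    """
    # Measurement window: first arrival to last completion.
    window = max(r.completion for r in records) - min(r.arrival for r in records)
    # Traffic: tokens received per second (assumption: input tokens only).
    tps = sum(r.input_tokens for r in records) / window
    # Throughput: tokens produced per second over the same window.
    throughput = sum(r.output_tokens for r in records) / window
    # Latency: end-to-end time (queueing + processing) per output token, averaged.
    per_request = [(r.completion - r.arrival) / r.output_tokens for r in records]
    latency_ms = 1000 * sum(per_request) / len(per_request)
    return tps, throughput, latency_ms


if __name__ == "__main__":
    run = [
        RequestRecord(arrival=0.0, completion=2.0, input_tokens=100, output_tokens=50),
        RequestRecord(arrival=1.0, completion=4.0, input_tokens=300, output_tokens=100),
    ]
    print(summarize(run))
```

For the two sample records, this gives a TPS of 100, a throughput of 37.5 output tokens per second, and a latency of about 35 ms per output token. Repeating the calculation at several traffic levels yields the points of a throughput-latency plot.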

Hex-LLM is benchmarked on a sample of the ShareGPT dataset, a widely used dataset containing prompts and outputs of varying lengths.

The accompanying charts show the performance of the Gemma 7B and Llama 2 70B (int8 weight quantised) models on eight TPU v5e chips:

- Gemma 7B model: 6 ms per output token at the lowest TPS, and 6250 output tokens per second at the highest TPS.

- Llama 2 70B int8 model: 26 ms per output token at the lowest TPS, and 1510 output tokens per second at the highest TPS.

Get started in Vertex AI Model Garden

The Hex-LLM TPU serving container is integrated into Vertex AI Model Garden. Users can access this serving technology through the playground, one-click deployment, or Colab Enterprise notebook examples for a range of models.

Vertex AI Model Garden’s playground is a pre-deployed Vertex AI Prediction endpoint integrated into the user interface. Users enter the prompt text and any optional request arguments, click the SUBMIT button, and quickly receive the model’s response. Try it out with Gemma!

The simplest method for deploying a custom Vertex Prediction endpoint with Hex-LLM is to use the model card UI for one-click deployment:

- Go to the model card page and select “DEPLOY.”

- For the model variant of interest, choose the TPU v5e machine type ct5lp-hightpu-*t for deployment, then select “DEPLOY” at the bottom to start the deployment process. You will receive two email notifications: one when the model is uploaded and another when the endpoint is ready.

For maximum flexibility, users can follow the Colab Enterprise notebook examples and deploy a Vertex Prediction endpoint with Hex-LLM using the Vertex Python SDK:

- Go to the model card page and select “OPEN NOTEBOOK.”

- Select the Vertex Serving notebook. This opens the notebook in Colab Enterprise.

- Run through the notebook to deploy with Hex-LLM and send prediction requests to the endpoint.

This approach lets users tailor the deployment to their needs. For example, they can deploy with multiple replicas to handle a large amount of expected traffic.
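
As a rough illustration of the SDK route, the sketch below uses the Vertex AI Python SDK (`google-cloud-aiplatform`) to upload a model with a serving container and deploy it with more than one replica. The project, region, display name, and container URI are placeholders and assumptions, not values from this article; the notebook on the model card supplies the real ones, along with further container settings that are omitted here. The machine type `ct5lp-hightpu-4t` is used as one example of the `ct5lp-hightpu-*t` family, and the helper names `build_deploy_kwargs` and `deploy_hex_llm` are hypothetical.

```python
def build_deploy_kwargs(machine_type: str, replicas: int) -> dict:
    """Keyword arguments for aiplatform.Model.deploy with a fixed replica count."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return {
        "machine_type": machine_type,
        # Equal min and max pins the deployment to exactly `replicas` copies.
        "min_replica_count": replicas,
        "max_replica_count": replicas,
    }


def deploy_hex_llm(project: str, location: str, container_uri: str, replicas: int = 2):
    """Upload and deploy a model; needs google-cloud-aiplatform and valid credentials."""
    # Imported lazily so the helper above can be used without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    model = aiplatform.Model.upload(
        display_name="hex-llm-example",  # placeholder name (assumption)
        serving_container_image_uri=container_uri,  # placeholder; take it from the notebook
    )
    # Model.deploy creates a new endpoint when none is passed in.
    return model.deploy(**build_deploy_kwargs("ct5lp-hightpu-4t", replicas))


if __name__ == "__main__":
    # Only inspects the arguments; no cloud call is made here.
    print(build_deploy_kwargs("ct5lp-hightpu-4t", replicas=2))
```

The endpoint returned by `deploy_hex_llm` can then receive requests through its `predict(instances=[...])` method; the exact instance schema depends on the serving container and is documented in the notebook.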