Build apps quickly with Gradio-powered generative AI agents

In the era of low-code and no-code application development, AI has become the go-to technology for quickly extending, powering, and modernizing apps. The technology landscape keeps changing, creating new opportunities to connect and engage with customers and to build insights into the experiences apps deliver. As a result, leading companies are racing to develop new apps more quickly.

Whether the goal is to adopt generative AI technologies or to keep a competitive edge, AI-infused application development is increasingly becoming a must to succeed in today’s market.

In this blog post, we go over how to integrate Gradio, an open-source frontend framework, with Vertex AI Conversation. Vertex AI Conversation lets developers use conversational AI technologies to easily build proof-of-concept apps for generative AI.

Organizations can use these two technologies to deliver a proof of concept (PoC) with an engaging, low-lift generative AI experience that impresses customers and motivates development teams.

Chatbots powered by generative AI can deliver insightful, relevant interactions by learning from your business’s unstructured data. The Gradio frontend framework offers a simple way to build custom, interactive apps that let developers quickly share and showcase machine learning models.

Vertex AI Conversation

A key feature of the Gradio framework is the ability to build demo apps on top of your models with an intuitive web interface, so that anyone can use them and give your organization immediate feedback.

Integrating a Gradio app with a generative AI agent built on Vertex AI Conversation unlocks key capabilities that you can customize and tailor to your specific needs and user feedback. Because the app is programmable, you can quickly demonstrate your chatbot’s capabilities and build deep personalization and contextualization into customer conversations.

Gradio

Given the rapid growth of generative AI, businesses want a user-friendly interface for trying out their machine learning models, APIs, or data science workflows. Chatbots are a common use of large language models (LLMs). Because conversing with an LLM feels intuitive and natural, businesses are turning to conversational interfaces such as chatbots and voice bots. Voice bots are growing in popularity because speaking is often easier than typing.

Gradio is an open-source Python framework for sharing your machine learning model, API, or data science workflow with customers or colleagues. With it, you can quickly build interfaces such as chatbots, voice bots, and even full web apps. Gradio lets you build and share quick demos with just a few lines of Python code.
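As a rough illustration of the "few lines of Python" claim, the sketch below wires a placeholder reply function into Gradio's `ChatInterface`. The names `echo_reply` and `build_demo` are illustrative and not from the original setup; a real app would call an agent instead of echoing the message.

```python
def echo_reply(message, history):
    """Placeholder chat handler.

    Gradio's ChatInterface calls this with the new user message and the
    chat history so far; the returned string is shown as the bot's reply.
    """
    return f"You said: {message}"


def build_demo():
    """Build the chat UI around the placeholder handler."""
    # Imported here so the handler above can be tested without Gradio installed.
    import gradio as gr  # requires `pip install gradio`

    return gr.ChatInterface(fn=echo_reply, title="Demo chatbot")
```

Calling `build_demo().launch()` starts a local web server and prints the URL to open in a browser. Passing `share=True` to `launch()` asks Gradio for a temporary public link, which is one way to show the demo to colleagues.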

Introducing a Vertex AI Conversation-integrated Gradio application

The data ingestion tools of Vertex AI Conversation parse your content to create a virtual agent powered by LLMs. Your agent can then generate conversations using your company’s data, providing a relevant and personalized interaction with end users. With the Gradio framework, showcasing your application is simpler than ever thanks to seamless deployment through a web browser.

What it does

With Gradio, you can build chatbots that answer user questions from a variety of data sources. To do this, you can build a middleware layer that uses Vertex AI Conversation to process user input and produce an agent response. The agent can then search for answers in a document repository, such as your company’s knowledge base.

When the agent finds an answer, it can summarize it and present it to the user in the Gradio app. The agent can also provide links to the sources of the answer so that the user can learn more.
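One way to present a summary together with its source links is to render both as a single Markdown message. The sketch below is only illustrative: the `format_answer` name and the `(title, url)` shape of `sources` are assumptions, and it presumes the chat component renders Markdown links.

```python
def format_answer(summary, sources):
    """Render a summary plus source links as one Markdown chat message.

    `sources` is a list of (title, url) pairs. That shape is an assumption
    made for this sketch, not something the agent is known to return.
    """
    lines = [summary.strip()]
    if sources:
        lines.append("")  # blank line between the summary and the link list
        lines.append("Sources:")
        for title, url in sources:
            lines.append(f"- [{title}]({url})")
    return "\n".join(lines)
```

When no sources are available, the function returns the summary alone, so the chat message never ends with an empty "Sources:" heading.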

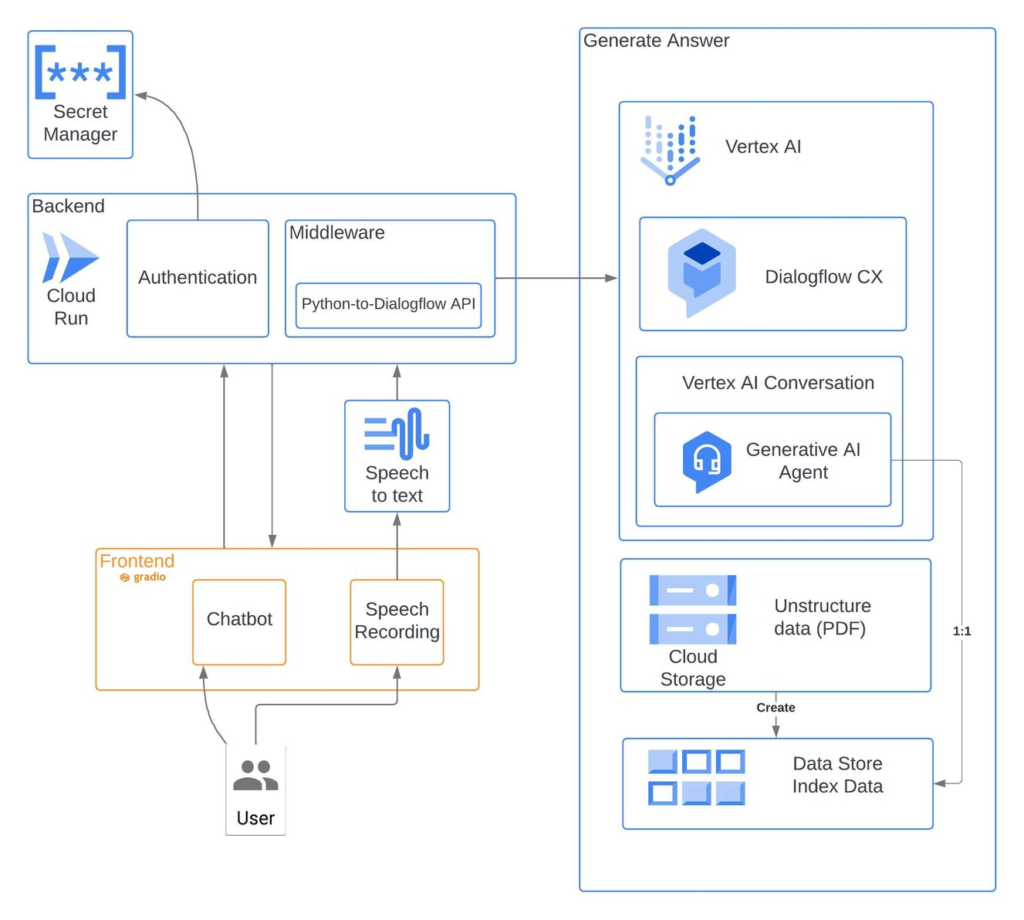

Here is a more detailed breakdown of each step:

- The chatbot receives a query from the user.

- The middleware uses the Dialogflow API to send the query to the generative AI agent.

- The generative AI agent searches the data store for answers.

- If the agent finds an answer, it summarizes it and provides links to the sources.

- The middleware receives the summary and links through the Dialogflow API and passes them to the Gradio app.

- The Gradio app shows the user a summary and links.
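The middleware step in the list above can be sketched with the Dialogflow CX Python client (`google-cloud-dialogflow-cx`). This is a minimal sketch under stated assumptions, not the original implementation: the `ask_agent` and `collect_text` names are illustrative, the project, location, and agent IDs are placeholders you supply, and only plain-text replies are handled. How source links arrive in the response depends on how the agent is configured, so they are left out here.

```python
import uuid


def collect_text(response_messages):
    """Join the text parts of Dialogflow CX response messages into one reply."""
    parts = []
    for message in response_messages:
        text = getattr(message, "text", None)
        if text is not None:
            # Each text message carries a list of strings.
            parts.extend(text.text)
    return "\n".join(p for p in parts if p)


def ask_agent(query, project_id, location, agent_id,
              session_id=None, language_code="en"):
    """Send one user query to a Dialogflow CX agent and return its text reply."""
    # Imported here so collect_text can be tested without the client library.
    from google.cloud import dialogflowcx_v3 as cx

    # Regional agents need a regional endpoint; "global" uses the default one.
    options = None
    if location != "global":
        options = {"api_endpoint": f"{location}-dialogflow.googleapis.com"}
    client = cx.SessionsClient(client_options=options)

    # A fresh session ID starts a new conversation; reuse one to keep context.
    session = (
        f"projects/{project_id}/locations/{location}"
        f"/agents/{agent_id}/sessions/{session_id or uuid.uuid4()}"
    )
    request = cx.DetectIntentRequest(
        session=session,
        query_input=cx.QueryInput(
            text=cx.TextInput(text=query),
            language_code=language_code,
        ),
    )
    response = client.detect_intent(request=request)
    return collect_text(response.query_result.response_messages)
```

Keeping the response parsing in `collect_text` separates the network call from the formatting logic, so the parsing can be checked with simple stand-in objects before any cloud credentials are set up.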

The high-level architecture shown in the diagram above can serve as a basic building block for an MVP with core functionality.

The components of the chatbot architecture are described below.

Backend authentication:

Confirms the identity of the user.

Middleware:

Orchestrates all requests and responses to provide answers.

Answer generation:

Produces replies from a virtual agent based on enterprise data. The underlying components and products are listed below.

Vertex AI Conversation:

Creates an intelligent agent that can understand and respond to natural language.

Dialogflow CX:

Manages conversations through Dialogflow.

Cloud storage:

Stores the enterprise data.

Data Store:

An index repository that Vertex AI Conversation creates automatically to index enterprise data so that Dialogflow can query it.

Speech to Text:

Converts the user’s voice recordings into text, which is then passed to answer generation.

Gradio frontend chatbot:

Provides a voice-enabled chatbot that understands both keyboard input and voice messages. The bot’s user interface is built with the Gradio framework.

Speech Recording:

Lets users send voice messages.
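Because the frontend accepts both typed and spoken input, the app needs one place that decides which input becomes the query. The sketch below shows one possible approach; `resolve_query` is a hypothetical helper, and `transcribe` stands in for whatever speech-to-text call the backend uses (for instance, a function that takes the file path of a recording and returns its transcript).

```python
def resolve_query(text, audio_path, transcribe):
    """Return the user's query from typed text or, failing that, a recording.

    `transcribe` is any callable that maps an audio file path to text.
    It is a stand-in for a speech-to-text service, not a real API.
    """
    # Typed input wins when both are present, which avoids a transcription call.
    if text and text.strip():
        return text.strip()
    if audio_path:
        return transcribe(audio_path).strip()
    # Nothing was typed or recorded.
    return ""
```

Preferring typed text over audio is a design choice made for this sketch; an app could just as reasonably prefer the most recent input.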
