Introducing Willow, Google’s cutting-edge quantum chip.

Google’s new chip demonstrates error correction and performance that pave the way to a practical, large-scale quantum computer.

Today Google is presenting Willow, its newest quantum chip. Willow’s state-of-the-art performance across several metrics enables two major achievements.

- First, Willow can reduce errors exponentially as we scale up to more qubits. This solves a key challenge in quantum error correction that the field has pursued for nearly three decades.

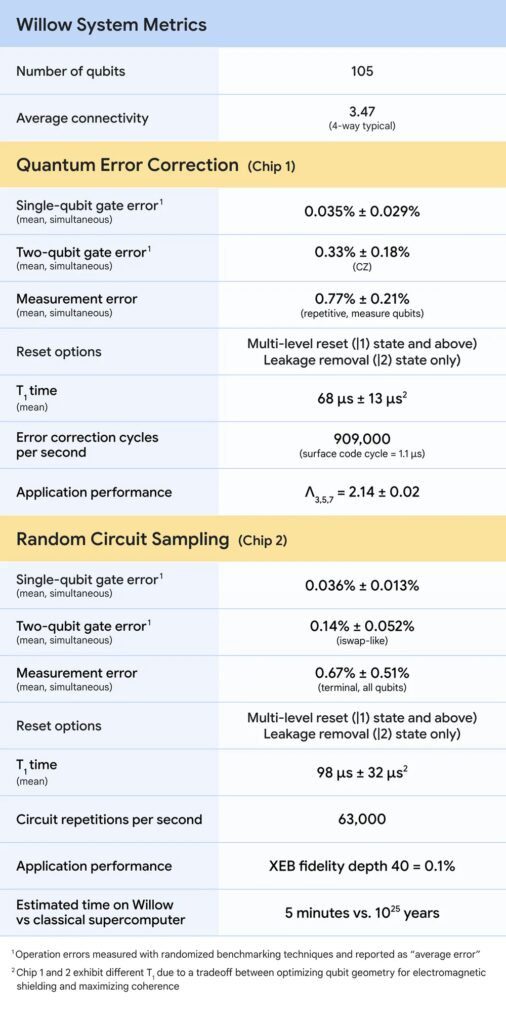

- Second, Willow performed a standard benchmark computation in under five minutes that would take one of today’s fastest supercomputers 10 septillion (that is, 10^25) years, a span that vastly exceeds the age of the Universe.

The Willow chip is a major milestone on a journey that began more than a decade ago. When Google Quantum AI was founded in 2012, the vision was to build a useful, large-scale quantum computer that could harness quantum mechanics, the “operating system” of nature as we currently understand it, to advance scientific discovery, develop helpful applications, and tackle some of society’s most pressing challenges. Willow moves the team significantly along the long-term roadmap toward commercially relevant applications that it has mapped out as part of Google Research.

Below-threshold exponential quantum error correction!

Errors are one of the greatest challenges in quantum computing. Qubits, the units of computation in quantum computers, tend to exchange information rapidly with their environment, which makes it difficult to protect the information needed to complete a computation. Typically, the more qubits you use, the more errors occur, and the system starts to behave classically.

Google’s findings, published today in Nature, show that the more qubits Willow uses, the more it reduces errors, and the more quantum the system becomes. The team tested ever-larger arrays of physical qubits, scaling up from a grid of 3×3 encoded qubits to a grid of 5×5 and then to a grid of 7×7, and each time, using its latest advances in quantum error correction, it cut the error rate in half. In other words, it achieved an exponential reduction in the error rate.
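The halving described above is often summarized by a suppression factor Λ = ε_d / ε_(d+2), where ε_d is the logical error rate per error-correction cycle at code distance d (3 for the 3×3 grid, 5 for 5×5, 7 for 7×7). The sketch below projects that trend forward, assuming Λ stays fixed at 2 as larger grids are added. The starting error rate is a hypothetical placeholder, not a measured Willow figure, and real devices need not keep Λ constant.

```python
# Minimal sketch: project logical error rates under exponential suppression.
# Assumption: the error rate is divided by a constant factor LAMBDA each time
# the code distance d grows by 2 (3x3 -> 5x5 -> 7x7 -> ...).

LAMBDA = 2.0  # "cut the error rate in half" at each step, per the text


def projected_logical_error(eps_d3: float, distance: int, lam: float = LAMBDA) -> float:
    """Per-cycle logical error rate at an odd code distance >= 3."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    steps = (distance - 3) // 2  # number of "grow the grid by 2" steps from d=3
    return eps_d3 / lam ** steps


if __name__ == "__main__":
    eps_d3 = 3e-3  # hypothetical starting rate at d=3, not a measured Willow value
    for d in (3, 5, 7, 9, 11):
        rate = projected_logical_error(eps_d3, d)
        print(f"{d}x{d} grid (d={d}): ~{rate:.2e} errors per cycle")
```

Each larger grid needs more physical qubits, but under this assumption it halves the error rate again; that compounding in the code distance is what “exponential” refers to here.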

This ability to drive errors down while scaling up the number of qubits is known in the field as “below threshold.” Demonstrating below-threshold operation is the test of real progress in error correction, and it has been an outstanding challenge ever since Peter Shor introduced quantum error correction in 1995.
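Threshold behavior is easiest to see in a toy model. The sketch below uses a classical repetition code rather than the surface code used on Willow: one bit is copied d times, each copy flips independently with probability p, and a majority vote recovers it. For this toy noise model the threshold is p = 0.5; below it, adding copies drives the logical error down, and above it, adding copies makes things worse. Real quantum codes must handle more kinds of errors and have much lower thresholds, so only the qualitative behavior carries over.

```python
# Toy illustration of "below threshold" using a classical repetition code
# (an assumption for illustration; Willow uses a quantum surface code).
from math import comb


def repetition_logical_error(p: float, d: int) -> float:
    """Probability that majority vote over d independent copies decodes wrongly,
    when each copy flips with probability p. d must be odd."""
    if d < 1 or d % 2 == 0:
        raise ValueError("d must be a positive odd integer")
    majority = d // 2 + 1  # decoding fails once at least this many copies flip
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(majority, d + 1))


if __name__ == "__main__":
    for p in (0.1, 0.6):  # one physical error rate below the 0.5 threshold, one above
        rates = [repetition_logical_error(p, d) for d in (3, 5, 7)]
        trend = "shrinks" if rates[-1] < rates[0] else "grows"
        listing = ", ".join(f"d={d}: {r:.4f}" for d, r in zip((3, 5, 7), rates))
        print(f"p={p}: {listing} -> logical error {trend}")
```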

Willow’s error-correction result also includes several scientific “firsts.” For example, it is one of the first compelling demonstrations of real-time error correction on a superconducting quantum system. Real-time correction is crucial for any useful computation: if errors cannot be corrected quickly enough, they ruin the calculation before it finishes. In addition, the encoded arrays of qubits have longer lifetimes than the individual physical qubits that make them up, a clear sign that error correction is improving the system overall. This is known as a “beyond breakeven” demonstration.
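Why speed matters can be shown with a simple queue model: the syndrome data from each error-correction cycle must be decoded before corrections can be applied, so if decoding one cycle takes longer than running one, unprocessed data piles up without bound. The sketch below assumes a fixed cycle time and a fixed per-cycle decoding time; the numbers in the example are hypothetical, not Willow’s actual timings, and real decoders can also pipeline or parallelize their work.

```python
# Minimal queue model of real-time decoding (all timings are hypothetical).
def decoder_backlog(num_cycles: int, cycle_time_us: float, decode_time_us: float) -> float:
    """Cycles of syndrome data still waiting to be decoded after num_cycles,
    assuming one cycle of data arrives every cycle_time_us and the decoder
    needs decode_time_us to process each cycle's worth of data."""
    throughput = cycle_time_us / decode_time_us  # cycles decoded per cycle elapsed
    backlog = 0.0
    for _ in range(num_cycles):
        backlog += 1.0                            # new syndrome data arrives
        backlog = max(0.0, backlog - throughput)  # decoder works through the queue
    return backlog


if __name__ == "__main__":
    for decode_time in (0.5, 1.0, 2.0):  # microseconds per cycle, hypothetical
        left = decoder_backlog(100_000, cycle_time_us=1.0, decode_time_us=decode_time)
        print(f"decode {decode_time} us/cycle -> backlog after 1e5 cycles: {left:,.0f}")
```

When decoding keeps pace, the backlog stays at zero; when it is slower, the backlog grows linearly with the length of the computation, which is why correction has to happen in real time.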

As the first system below threshold, Willow is the most convincing prototype of a scalable logical qubit built to date. It is a strong sign that useful, very large quantum computers can indeed be built. Willow brings us closer to running practical, commercially relevant algorithms that cannot be replicated on conventional computers.

Willow: Quantum Computing’s Leap Beyond Time and Space

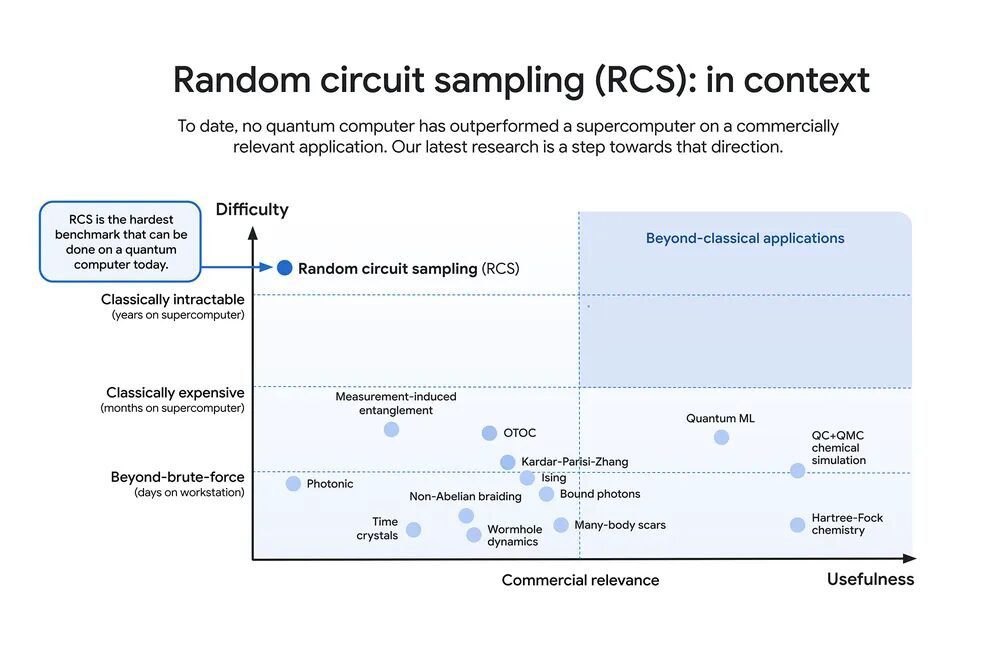

Google used the random circuit sampling (RCS) benchmark to measure Willow’s performance. Pioneered by the team and now widely used as a standard in the field, RCS is the classically hardest benchmark that can be run on a quantum computer today. It serves as an entry point for quantum computing: it checks whether a quantum computer is doing something that could not be done on a classical computer.
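One way to score an RCS experiment is linear cross-entropy benchmarking (XEB), the fidelity estimator used in Google’s 2019 Sycamore experiment: F_XEB = 2^n · mean(P(x_i)) - 1, where n is the number of qubits, x_i are the measured bitstrings, and P is the ideal output probability obtained by classical simulation. The sketch below assumes this estimator and replaces a real random circuit with a random state whose output probabilities follow the Porter-Thomas distribution, which deep random circuits are expected to approach. A perfect sampler should score near 1, and a sampler that outputs uniformly random bitstrings (a fully noisy device) near 0. It illustrates the scoring only; computing P for large n is exactly the part that becomes infeasible classically.

```python
# Sketch of linear cross-entropy benchmarking (XEB) on a toy "random circuit".
# Assumptions: a Haar-random state stands in for the circuit's output state,
# and ideal probabilities are available (feasible only for small n).
import random


def porter_thomas_probs(n_qubits: int, rng: random.Random) -> list[float]:
    """Output probabilities of a random state: |amplitude|^2 with complex Gaussian
    amplitudes, normalized. These follow the Porter-Thomas distribution."""
    dim = 2 ** n_qubits
    weights = [rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2 for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]


def linear_xeb(samples: list[int], probs: list[float]) -> float:
    """F_XEB = 2^n * mean(P(x_i)) - 1, with 2^n = len(probs)."""
    return len(probs) * sum(probs[x] for x in samples) / len(samples) - 1.0


if __name__ == "__main__":
    rng = random.Random(2024)
    n = 10
    probs = porter_thomas_probs(n, rng)
    outcomes = range(2 ** n)
    ideal = rng.choices(outcomes, weights=probs, k=20_000)  # noiseless sampler
    noisy = [rng.randrange(2 ** n) for _ in range(20_000)]  # fully depolarized device
    print(f"ideal sampler XEB:   {linear_xeb(ideal, probs):.2f}")  # expected near 1
    print(f"uniform sampler XEB: {linear_xeb(noisy, probs):.2f}")  # expected near 0
```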

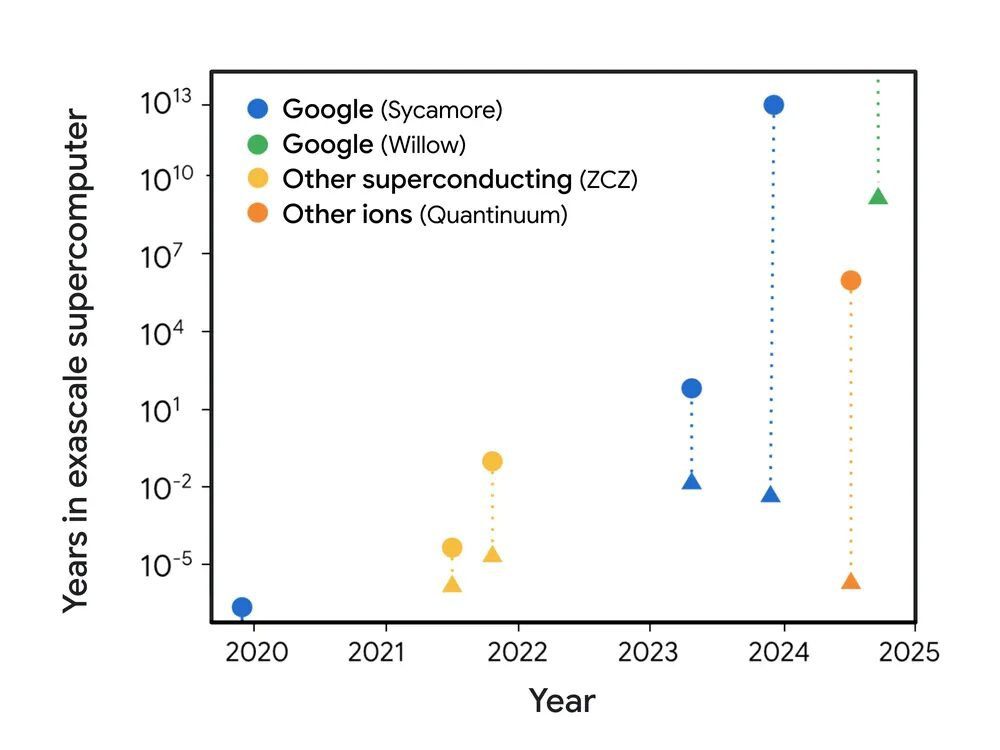

Any team building a quantum computer should first check whether it can beat classical computers on RCS; if not, there is strong reason to be skeptical that it can tackle more complex quantum tasks. Google has used this benchmark consistently to assess progress from one chip generation to the next, reporting Sycamore results in October 2019 and again in October 2024.

Willow’s performance on this benchmark is astonishing: it completed a computation in under five minutes that would take one of today’s fastest supercomputers 10^25, or 10 septillion, years. Written out, that is 10,000,000,000,000,000,000,000,000 years. This staggering number exceeds known timescales in physics and far exceeds the age of the Universe. According to Google, it lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea, first proposed by David Deutsch, that we live in a multiverse.
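The figures in this paragraph can be checked with a few lines of arithmetic. The sketch below assumes a 365.25-day year, uses five minutes as the quantum run time, and takes the age of the Universe as about 13.8 billion years, a widely cited estimate that is not stated in this text.

```python
# Back-of-the-envelope check of the quoted figures.
# Assumptions: a 365.25-day year, a 5-minute quantum run, and an age of the
# Universe of ~13.8 billion years (an outside estimate, not from this text).
CLASSICAL_YEARS = 10**25
QUANTUM_MINUTES = 5
MINUTES_PER_YEAR = 365.25 * 24 * 60
AGE_OF_UNIVERSE_YEARS = 13.8e9

written_out = f"{CLASSICAL_YEARS:,}"  # the number with digit grouping
time_ratio = CLASSICAL_YEARS * MINUTES_PER_YEAR / QUANTUM_MINUTES
universe_multiple = CLASSICAL_YEARS / AGE_OF_UNIVERSE_YEARS

if __name__ == "__main__":
    print(written_out)  # 10,000,000,000,000,000,000,000,000
    print(f"classical/quantum time ratio: ~{time_ratio:.1e}")
    print(f"multiple of the age of the Universe: ~{universe_multiple:.1e}")
```

Under these assumptions, the classical estimate is roughly 10^30 times longer than the quantum run and hundreds of trillions of times the age of the Universe.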

Google will keep improving, but these latest results for Willow, shown in the plot below, are its best to date.

Google made conservative assumptions when estimating Willow’s advantage over Frontier, one of the most powerful classical supercomputers in the world. For instance, it generously assumed that Frontier would have full access to secondary storage, such as hard drives, with no bandwidth overhead, an unrealistic provision for a real system. The rapidly growing gap shows that quantum processors are pulling away from classical ones at a double exponential rate and will continue to vastly outperform classical computers as they scale up. Of course, classical computers are expected to keep improving on this benchmark, as happened after Google announced the first beyond-classical computation in 2019.
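To make “double exponential” concrete: an exponential trend multiplies by a fixed factor at each step, while a double-exponential trend squares its value at each step (with base 2, 2^(2^(k+1)) = (2^(2^k))^2). The sketch below is purely illustrative; the abstract step index and the base of 2 are assumptions and do not model any measured Willow or Frontier data.

```python
# Illustrative only: how fast a double-exponential trend outruns an exponential one.
# The abstract "step" and the base of 2 are assumptions, not measured data.
def exponential(step: int, base: int = 2) -> int:
    return base ** step


def double_exponential(step: int, base: int = 2) -> int:
    return base ** (base ** step)


if __name__ == "__main__":
    for step in range(1, 6):
        print(f"step {step}: exponential={exponential(step):>3}  "
              f"double exponential={double_exponential(step):,}")
```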

Cutting-edge performance

Willow was fabricated in Google’s new, state-of-the-art fabrication facility in Santa Barbara, one of only a few facilities in the world built specifically for this purpose. System engineering is key to designing and fabricating quantum chips: all components of a chip, such as single- and two-qubit gates, qubit reset, and readout, have to be engineered and integrated well at the same time. If any component lags, or if two components do not work well together, system performance suffers. Maximizing system performance therefore informs every part of the process, from chip architecture and fabrication to gate development and calibration. The reported results assess quantum computing systems holistically rather than one factor at a time.
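The point that one lagging component drags down the whole system can be illustrated with a simple digital error model, a common first-order approximation in which every operation fails independently and a circuit succeeds only if none of them fails: F ≈ Π_op (1 - e_op)^(N_op), where e_op is the error rate of an operation type and N_op is how many times it appears. The operation counts and error rates below are hypothetical, chosen only to show the effect, and are not Willow specifications.

```python
# Digital error model sketch: a circuit succeeds only if no operation fails.
# All counts and error rates are hypothetical, not Willow specifications.
from math import prod


def estimated_fidelity(error_rates: dict[str, float], counts: dict[str, int]) -> float:
    """Product of per-operation success probabilities, treating errors as independent."""
    return prod((1.0 - error_rates[op]) ** n for op, n in counts.items())


counts = {"single_qubit_gate": 200, "two_qubit_gate": 80, "reset": 10, "readout": 10}
balanced = {"single_qubit_gate": 0.001, "two_qubit_gate": 0.003, "reset": 0.002, "readout": 0.01}
weak_readout = dict(balanced, readout=0.05)  # same chip, but one component lags

if __name__ == "__main__":
    print(f"balanced components: {estimated_fidelity(balanced, counts):.2f}")
    print(f"weak readout:        {estimated_fidelity(weak_readout, counts):.2f}")
```

Raising only the readout error, while everything else stays the same, pulls the estimated circuit fidelity down noticeably, which is why every component has to be engineered together.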

Google focuses on quality, not just quantity, because producing more qubits does not help if they are not of high enough quality. With 105 qubits, Willow now has best-in-class performance on both system benchmarks discussed above: quantum error correction and random circuit sampling. Such algorithmic benchmarks are the best way to measure overall chip performance.

Other, more focused performance metrics are also important. For example, Willow’s T1 times, which measure how long qubits can retain an excitation (the key quantum computational resource), are now approaching 100 µs (microseconds). This is an impressive improvement of about five times over the previous generation of chips. Google also provides a table of key specifications for evaluating quantum hardware and comparing it across platforms.
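T1 describes energy relaxation: under the usual simple model, the probability that a qubit prepared in its excited state is still excited after time t is exp(-t / T1). The sketch below compares a T1 of 100 µs with 20 µs, where 20 µs is only an assumption implied by the “about five times” improvement, not a published figure for the previous chip generation.

```python
# Minimal sketch of exponential energy relaxation, assuming P(t) = exp(-t / T1).
import math


def excitation_survival(t_us: float, t1_us: float) -> float:
    """Probability that an excited qubit has not relaxed after t_us microseconds."""
    return math.exp(-t_us / t1_us)


if __name__ == "__main__":
    for t1 in (20.0, 100.0):  # 20 us is a hypothetical earlier value; ~100 us per the text
        p = excitation_survival(10.0, t1)
        print(f"T1 = {t1:5.1f} us -> still excited after 10 us: {p:.3f}")
```

A longer T1 leaves more time for gates and error-correction cycles before the excitation decays.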

What is next for Willow?

The next challenge for the field is to demonstrate a first “useful, beyond-classical” computation on today’s quantum chips that is relevant to a real-world application. Google is optimistic that the Willow generation of chips can help achieve this goal. So far, there have been two separate types of experiments. On one hand, the team has run the RCS benchmark, which measures performance against classical computers but has no known real-world applications. On the other hand, it has performed scientifically interesting simulations of quantum systems, which have led to new scientific discoveries but are still within reach of classical computers. The goal is to do both at the same time: to step into the realm of algorithms that are beyond the reach of classical computers and that are useful for real-world, commercially relevant problems.

Training and optimizing specific learning architectures, modeling systems where quantum effects are significant, and gathering training data that is unavailable to classical machines will all be made possible by quantum computation. This includes advancing the development of fusion and new energy sources, creating more effective batteries for electric vehicles, and assisting in the discovery of new medications. Many of these revolutionary uses of the future will not be possible on traditional computers; quantum computing will be the key to unlocking them.