Test, tune, succeed: RAG retrieval optimization

Retrieval-augmented generation (RAG) supplies large language models (LLMs) with real-time, proprietary, and specialized data. This makes LLMs more accurate, relevant, and contextually aware, which reduces hallucinations and builds trust in AI applications.

RAG’s principle is simple: find relevant information and feed it to the LLM. Its implementation, however, is challenging, and if done wrong it can damage user trust in your AI. A lack of thorough evaluation is often to blame: RAG systems that are not carefully examined can suffer ‘silent failures’ that compromise their reliability and trustworthiness.

In this blog article, Google shares best practices for detecting faults in RAG systems and fixing them with a transparent, automated evaluation framework.

Step 1: Develop a testing framework

A RAG system is tested by executing queries against the tool and assessing the results. Rapid testing and iteration require choosing a set of success criteria and calculating them rigorously, automatically, and repeatably. Guidelines are below:

- Collect high-quality test questions.

- Your test collection should cover a wide range of facts and incorporate real-world language and question complexity.

- Pro tip: Consult stakeholders and end users to verify dataset quality and relevance.

- Create a ‘golden’ dataset of desired outputs for evaluation.

- Some metrics can be calculated without a reference dataset, but a collection of known-good outcomes lets us create more complex evaluation metrics.

- Change only one variable between tests.

- Many RAG pipeline elements can affect assessment scores, so altering them one at a time ensures that any change in scores can be attributed to a single feature.

- Likewise, keep the assessment questions, reference answers, and system-wide parameters and settings fixed between test runs.
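The one-variable rule can be enforced mechanically before a test run starts. The following is a minimal sketch, assuming pipeline settings are kept in a flat dictionary; the setting names (`chunk_size`, `top_k`, `embedding_model`) and both helper functions are hypothetical and not part of any library.

```python
from typing import Any

_MISSING = object()  # sentinel so a missing key never equals a real value


def changed_keys(baseline: dict[str, Any], candidate: dict[str, Any]) -> list[str]:
    """Return the sorted names of settings whose values differ between two configs."""
    all_keys = set(baseline) | set(candidate)
    return sorted(
        key
        for key in all_keys
        if baseline.get(key, _MISSING) != candidate.get(key, _MISSING)
    )


def assert_single_change(baseline: dict[str, Any], candidate: dict[str, Any]) -> str:
    """Raise ValueError unless exactly one setting differs; return its name."""
    diff = changed_keys(baseline, candidate)
    if len(diff) != 1:
        raise ValueError(f"expected exactly one changed setting, found {diff}")
    return diff[0]


# Hypothetical pipeline settings, used only for illustration.
baseline = {"chunk_size": 400, "top_k": 5, "embedding_model": "model-a"}
candidate = {"chunk_size": 600, "top_k": 5, "embedding_model": "model-a"}
print(assert_single_change(baseline, candidate))  # prints "chunk_size"
```

Running a guard like this at the start of each test run makes it harder to change two settings at once by accident.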

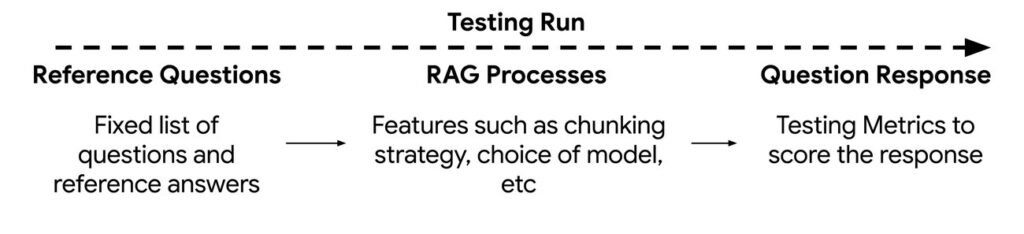

In practice: pick one part of the RAG system, run the battery of tests, modify that feature, run the same battery again, and observe how the test results change. Once you determine that a feature cannot be improved further, freeze its configuration and move on to another component of the process.

Three parts make up this testing framework:

- Reference questions and answers

- The queries to assess. Reference answers may be included depending on the metrics.

- RAG methods

- Changing and evaluating retrieval and summarisation methods

- Results of questions

- Evaluation results graded using the testing framework
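The three parts above map naturally onto a small harness. The sketch below is one possible shape under stated assumptions: `EvalCase`, `Result`, `run_suite`, `toy_pipeline`, and `exact_match` are all hypothetical names, and the pipeline is a stand-in for a real RAG system.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class EvalCase:
    """Part 1: a reference question, optionally with a reference answer."""
    question: str
    reference_answer: Optional[str] = None  # only needed by reference-based metrics


@dataclass
class Result:
    """Part 3: the output for one question plus its scores."""
    question: str
    answer: str
    scores: dict[str, float]


# Part 2: the RAG process under test maps a question to an answer.
Pipeline = Callable[[str], str]
Metric = Callable[[EvalCase, str], float]


def run_suite(
    cases: list[EvalCase], pipeline: Pipeline, metrics: dict[str, Metric]
) -> list[Result]:
    """Run every case through the pipeline and score each answer with every metric."""
    results = []
    for case in cases:
        answer = pipeline(case.question)
        scores = {name: metric(case, answer) for name, metric in metrics.items()}
        results.append(Result(case.question, answer, scores))
    return results


def toy_pipeline(question: str) -> str:
    """Stand-in for a real RAG pipeline; answers one hard-coded question."""
    return "Paris" if "France" in question else "unknown"


def exact_match(case: EvalCase, answer: str) -> float:
    """Score 1.0 when the answer equals the reference, ignoring case and padding."""
    if case.reference_answer is None:
        return 0.0
    return float(answer.strip().lower() == case.reference_answer.strip().lower())


cases = [
    EvalCase("What is the capital of France?", "Paris"),
    EvalCase("What is the capital of Peru?", "Lima"),
]
results = run_suite(cases, toy_pipeline, {"exact_match": exact_match})
for result in results:
    print(result.question, result.scores)
```

Keeping the cases immutable (`frozen=True`) is a small design choice that supports the rule of never changing questions or reference answers between runs.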


Selecting relevant metrics

Finding the right metrics for your system takes trial and error. Predefined testing frameworks speed up the process by providing prebuilt metrics that can be customized to your use case. This lets you quickly produce baseline scores for evaluating and refining your RAG system. From this baseline, you can improve retrieval and generation capabilities and measure the results.

Common frameworks for RAG evaluations include:

Ragas

Ragas is an open-source tool for evaluating RAG systems. It measures factual correctness, answer relevancy, and how well the retrieved content matches the question. Ragas can also generate test data, helping developers improve the accuracy and usefulness of their RAG systems.

Vertex AI Gen AI evaluation service

The Vertex AI Gen AI evaluation service lets users compare generative models or applications against defined metrics. It supports model selection, prompt engineering, and fine-tuning, and lets users define metrics, prepare data, run evaluations, and review results. The service uses model-based and computation-based assessment methods and works with Google’s, third-party, and open models in several languages.

Examples of metrics

Model-based metrics: These metrics use a proprietary Google model as a judge to assess the output of a candidate model against specific criteria.

- Pointwise metrics: The judge model scores the candidate model’s output (0–5) to indicate how well it conforms to the evaluation criteria. A higher score indicates a closer match.

- Pairwise metrics: The judge model compares the responses of two models and picks the better one. This method is often used to compare a candidate model against a baseline model.
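The two judge-based modes above produce different kinds of data, so they are summarized differently across a test set. The sketch below assumes the judge's outputs have already been collected; the function names are hypothetical, and counting a tie as half a win is an illustrative choice rather than something the evaluation service prescribes.

```python
def pointwise_mean(scores: list[int]) -> float:
    """Average pointwise judge scores, each expected to lie in the 0-5 range."""
    if not scores:
        raise ValueError("no scores to average")
    for score in scores:
        if not 0 <= score <= 5:
            raise ValueError(f"score {score} is outside the 0-5 range")
    return sum(scores) / len(scores)


def pairwise_win_rate(verdicts: list[str]) -> float:
    """Share of comparisons won by the candidate; a tie counts as half a win."""
    if not verdicts:
        raise ValueError("no verdicts to summarize")
    points = {"candidate": 1.0, "tie": 0.5, "baseline": 0.0}
    return sum(points[verdict] for verdict in verdicts) / len(verdicts)


# Made-up judge outputs for four test questions, for illustration only.
print(pointwise_mean([4, 5, 3, 4]))  # prints 4.0
print(pairwise_win_rate(["candidate", "baseline", "candidate", "tie"]))  # prints 0.625
```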

Computation-based metrics: These metrics compare model output to a reference using mathematical calculations. ROUGE and BLEU are popular.
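To make the idea of a computation-based metric concrete, here is a simplified ROUGE-L sketch: it scores a response by the longest common subsequence (LCS) of words it shares with the reference. It assumes lowercase whitespace tokenization with no stemming, so its scores will not exactly match those of established ROUGE packages. BLEU is not shown.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-L: F1 of LCS-based precision and recall."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(cand_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# LCS is 3 tokens, so precision is 3/3 and recall is 3/6.
print(round(rouge_l_f1("the cat sat", "the cat sat on the mat"), 3))  # prints 0.667
```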

Opinionated tiger team actions

- Create “golden” question inputs with stakeholders. These questions should appropriately reflect the RAG system’s key use cases. To ensure thorough testing, include simple, complex, multi-part, and misspelt questions.

- Use the Vertex AI generative AI evaluation framework. This framework lets developers quickly implement many test metrics and conduct model performance tests with little setup. It has a fast feedback loop for quick improvements.

- Assess the RAG retrieval system pointwise.

- Calculate model scores using these criteria:

- Response groundedness: How well the generated text matches source document facts.

- Verbosity: Response length and level of detail. Verbosity can help with explanation, but it can also make the actual answer harder to find. This metric may be adjusted for your use case.

- Instruction following: How reliably and fully the system follows instructions to produce text that is relevant and matches the user’s intent.

- Question-answering quality in relation to instructions: RAG’s capacity to generate detailed, coherent text that answers a user’s question.

- Archive findings in Vertex AI Experiments for easy comparisons.
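The scoring-and-archiving loop in this list can be prototyped locally before wiring it to a managed service. The sketch below is a local stand-in, not the Vertex AI Experiments API: it averages each pointwise metric over all test questions and serializes the run together with its configuration. The function name, metric keys, and config keys are hypothetical.

```python
import json
from statistics import fmean


def summarize_run(run_name: str, config: dict, rows: list[dict[str, float]]) -> dict:
    """Average each metric over all test questions and attach the run's config."""
    metric_names = sorted({name for row in rows for name in row})
    metrics = {
        name: fmean(row[name] for row in rows if name in row)
        for name in metric_names
    }
    return {"run": run_name, "config": config, "metrics": metrics}


# Made-up pointwise scores (0-5) for two test questions, for illustration only.
rows = [
    {"groundedness": 5, "verbosity": 3, "instruction_following": 4},
    {"groundedness": 3, "verbosity": 4, "instruction_following": 4},
]
record = summarize_run("baseline", {"chunk_size": 400, "top_k": 5}, rows)

# Serialize so the run can be stored and compared with later runs.
archived = json.dumps(record, indent=2, sort_keys=True)
print(archived)
```

Storing the configuration next to the scores is what makes later comparisons meaningful, whichever experiment tracker ends up holding the records.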

Step 2: Root cause analysis and iterative testing

The goal of setting up a repeatable testing methodology is to understand the root causes of issues. RAG rests on two components: the retrieval accuracy of your nearest neighbor matches, and the context you give the LLM that generates your responses.

Identifying and isolating these components helps you pinpoint problem areas and form testable hypotheses, which can then be run as experiments in Vertex AI using the Gen AI evaluation framework.

In a root cause analysis exercise, the user performs a baseline test run, modifies one of the RAG components, and runs the tests again. The change to that component shows up in the metric scores. The purpose of this phase is to alter the components carefully, one at a time, and document each change so that every metric score can be maximized. Resist the temptation to make several changes between test runs, because doing so can mask the impact of any single change and whether it actually improved your RAG system.
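Comparing a baseline run with a single-change variant comes down to per-metric differences. The following is a minimal sketch with hypothetical function names and made-up scores; the `tolerance` parameter is an assumption added to absorb noise between runs.

```python
def metric_deltas(baseline: dict[str, float], variant: dict[str, float]) -> dict[str, float]:
    """Return variant minus baseline for every metric present in both runs."""
    shared = sorted(set(baseline) & set(variant))
    return {name: variant[name] - baseline[name] for name in shared}


def regressions(deltas: dict[str, float], tolerance: float = 0.0) -> list[str]:
    """List metrics that dropped by more than the given tolerance."""
    return [name for name, delta in deltas.items() if delta < -tolerance]


# Made-up mean scores for a baseline and a variant that changed one component.
baseline_scores = {"groundedness": 3.5, "verbosity": 4.0}
variant_scores = {"groundedness": 4.0, "verbosity": 3.75}

deltas = metric_deltas(baseline_scores, variant_scores)
print(deltas)               # prints {'groundedness': 0.5, 'verbosity': -0.25}
print(regressions(deltas))  # prints ['verbosity']
```

Because only one component changed between the two runs, any delta reported here can be attributed to that component.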

RAG experiment examples

Example RAG components for testing:

- What is the optimal number of nearest-neighbour chunks to pass to the LLM to improve answer generation?

- How does the choice of embedding model affect retrieval accuracy?

- How do chunking methods affect quality? Consider altering chunk size or overlap or pre-processing chunks with a language model to summarise or paraphrase.

- Comparing Model A vs. Model B or Prompt A vs. Prompt B helps developers optimize models and prompts for specific use cases by fine-tuning prompt design or model configurations.

- What happens when you add title, author, and tags to documents for easier retrieval?
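The first question in this list, how many neighbours to retrieve, can be explored with a small sweep over k. The sketch below uses toy two-dimensional vectors in place of real embeddings and measures how often the known-relevant chunk appears in the top k results. All names and vectors are hypothetical.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k(query: list[float], chunks: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k chunks most similar to the query."""
    ranked = sorted(chunks, key=lambda cid: cosine(query, chunks[cid]), reverse=True)
    return ranked[:k]


def hit_rate_at_k(
    queries: list[tuple[list[float], str]], chunks: dict[str, list[float]], k: int
) -> float:
    """Fraction of queries whose relevant chunk id appears in the top k results."""
    hits = sum(1 for vec, relevant in queries if relevant in top_k(vec, chunks, k))
    return hits / len(queries)


# Toy vectors standing in for real embeddings, for illustration only.
chunks = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
queries = [([1.0, 0.1], "a"), ([0.9, 1.0], "b")]

for k in (1, 2):
    print(k, hit_rate_at_k(queries, chunks, k))
```

A larger k raises the chance of including the relevant chunk but also adds more context for the LLM to sift through, which is why the value is worth testing rather than guessing.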

Opinionated tiger team actions

- Test model A vs. model B for simple, measurable generation tasks.

- Test chunking strategies for retrieval within a single embedding model (chunk sizes of 400, 600, and 1200, plus full document text).

- Test pre-processing of long chunks to summarise them into smaller chunks.

- Test what data is passed to the LLM as context. Do we pass only the matched chunks, or do we use them as keys to look up the source document and pass the complete document text to the LLM, taking advantage of long context windows?
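A chunking experiment like the one listed above needs a chunker whose size can be varied while everything else stays fixed. The sketch below assumes sizes are measured in characters, which the list does not specify, and uses a simple sliding window; `chunk_text` is a hypothetical helper.

```python
def chunk_text(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into windows of `size` characters sharing `overlap` characters."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reached the end of the text
        start += step
    return chunks


# A placeholder 3000-character document, for illustration only.
document = "x" * 3000

for size in (400, 600, 1200):
    print(size, len(chunk_text(document, size)))

# The "full document text" variant is a single chunk.
print("full", len(chunk_text(document, len(document))))
```

Each chunk size would then be embedded with the same model and scored with the same test battery, so that chunk size is the only variable that changes.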

Step 3: Human assessment

Quantitative metrics from your testing methodology are important, but qualitative user feedback is too. Scalability and rapid iteration are improved by automated testing technologies, but they cannot replace human judgment in assuring quality. Human testers can assess tone, intelligibility, and ambiguity. Qualitative and quantitative testing give a more complete picture of RAG system performance.

After optimizing evaluation metrics through the automated testing framework, run human tests to confirm a baseline level of answer quality. Human evaluation of responses can be folded into your broader user testing, such as performance and UX testing. As in the earlier experiments, human testers can either focus on specific parts of the system by following structured steps, or evaluate the entire application and provide qualitative feedback.

Because human testing is time-consuming and repetitive, you need users who are engaged and willing to provide useful feedback.

Opinionated tiger team actions

- Identify the key personas among the RAG system’s target users.

- Recruit a representative sample of people who match these personas to get realistic feedback.

- If feasible, test with technical and non-technical users.

- If feasible, sit with the user to ask follow-up questions and clarify their responses.

Conclusion

Google Cloud’s Gen AI evaluation service lets you use both prebuilt and custom evaluation methods to improve your RAG system.