Overview of Cloud Data Fusion

Google Cloud Data Fusion is a fully managed, cloud-native enterprise data integration service for building and managing data pipelines quickly. You can create scalable data integration solutions through the Cloud Data Fusion web interface. It lets you connect to many data sources, transform the data, and deliver it to multiple destination systems, without having to manage the underlying infrastructure.

Get started with Cloud Data Fusion

You can start exploring Cloud Data Fusion within minutes.

- Create a Cloud Data Fusion instance: Start by creating an instance of Cloud Data Fusion.

- Pricing: Understand Cloud Data Fusion costs before you begin.

- Concepts: Become familiar with the key terms used in Cloud Data Fusion.

- Quickstart: Build your first pipeline to gain an understanding of Cloud Data Fusion.

Explore Cloud Data Fusion

The following sections explain the key components of Cloud Data Fusion.

Tenant project

The services needed to build and orchestrate Cloud Data Fusion pipelines and to store pipeline metadata run in a tenant project, inside a tenancy unit. A separate tenant project is created for each customer project in which Cloud Data Fusion instances are provisioned. The tenant project inherits all networking and firewall configurations from the customer project.

Cloud Data Fusion: Console

The Cloud Data Fusion console, also called the control plane, is a web interface plus a set of API operations that manage the Cloud Data Fusion instance itself: creating, updating, restarting, and deleting instances.
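The control-plane operations are exposed through the Cloud Data Fusion REST API at `datafusion.googleapis.com`. The minimal sketch below only builds (and does not send) a request that lists the instances in one location. The project ID, region, and token are placeholders, and obtaining a real OAuth access token (for example with `gcloud auth print-access-token`) is left out.

```python
import urllib.request

# Control-plane (console) API for Cloud Data Fusion instances.
DATAFUSION_API = "https://datafusion.googleapis.com/v1"


def build_list_instances_request(project: str, location: str, token: str) -> urllib.request.Request:
    """Build, but do not send, a GET request that lists the instances in one location."""
    url = f"{DATAFUSION_API}/projects/{project}/locations/{location}/instances"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder project, region, and token; send with urllib.request.urlopen(req).
req = build_list_instances_request("my-project", "us-central1", "ACCESS_TOKEN")
print(req.get_method(), req.full_url)
```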

Cloud Data Fusion: Studio

Cloud Data Fusion Studio, also called the data plane, handles the development, execution, and administration of pipelines and related artifacts. These operations are available through a set of REST APIs and web interface functions.
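Unlike the control plane, data-plane (Studio) calls go to the instance's own API endpoint (the `apiEndpoint` field of the instance) and use the CDAP-style REST paths under `/v3/namespaces/...`. As a sketch, the request below lists the deployed applications (pipelines) in a namespace; the endpoint URL and token are hypothetical placeholders, and the request is built but not sent.

```python
import urllib.request


def build_list_pipelines_request(api_endpoint: str, namespace: str, token: str) -> urllib.request.Request:
    """Build, but do not send, a GET request listing the applications (deployed pipelines) in a namespace.

    api_endpoint is the instance's own API endpoint, not the control-plane
    host datafusion.googleapis.com.
    """
    url = f"{api_endpoint.rstrip('/')}/v3/namespaces/{namespace}/apps"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


# Hypothetical endpoint and token, for illustration only.
req = build_list_pipelines_request("https://my-instance.example.com/api/", "default", "ACCESS_TOKEN")
print(req.get_method(), req.full_url)
```

Because the namespace is part of the path, every Studio request is scoped to a single namespace, which is what the namespace-level controls described later build on.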

Three ways to limit access to your Cloud Data Fusion instance and pipelines

Cloud Data Fusion is a fully managed, cloud-native enterprise data integration solution that lets users easily create and manage secure data pipelines. Limiting access is one of the main ways it meets its security objectives. This post covers three ways to control access in Cloud Data Fusion:

- Using IAM for instance-level access control

- Role-based access control (RBAC) for namespace-level access control

- Using namespace service accounts to isolate access

All three methods rely on a few key concepts:

Namespaces

In a Cloud Data Fusion instance, a namespace is a logical grouping of applications, data, and related metadata. You can think of namespaces as partitions of an instance.

Service accounts

A service account is a special kind of Google account intended for applications and services rather than people. Applications running on Google Cloud resources use a service account to authenticate when they call Google Cloud APIs and services.

Cloud Data Fusion uses two service accounts:

Design service account: Usually referred to as the Cloud Data Fusion API Service Agent, this account accesses customer resources during the pipeline design phase (for example, for pipeline validation, Wrangler, and preview).

Execution service account: Also known as the Compute Engine service account, this account is used by Dataproc to run the pipeline.
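Both accounts have predictable email addresses derived from the project number, which is useful when granting them IAM roles. The sketch below assumes the execution account is still the default Compute Engine service account (a pipeline can be configured to use a different one); the project number is hypothetical.

```python
def data_fusion_service_accounts(project_number: str) -> dict:
    """Return the default design and execution service account emails for a project."""
    return {
        # Design service account: the Cloud Data Fusion API Service Agent.
        "design": f"service-{project_number}@gcp-sa-datafusion.iam.gserviceaccount.com",
        # Execution service account: the default Compute Engine service account used by Dataproc.
        "execution": f"{project_number}-compute@developer.gserviceaccount.com",
    }


# Hypothetical project number.
accounts = data_fusion_service_accounts("123456789012")
for purpose, email in accounts.items():
    print(f"{purpose}: {email}")
```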

Let’s now look in more detail at the three levels at which access can be controlled in Cloud Data Fusion.

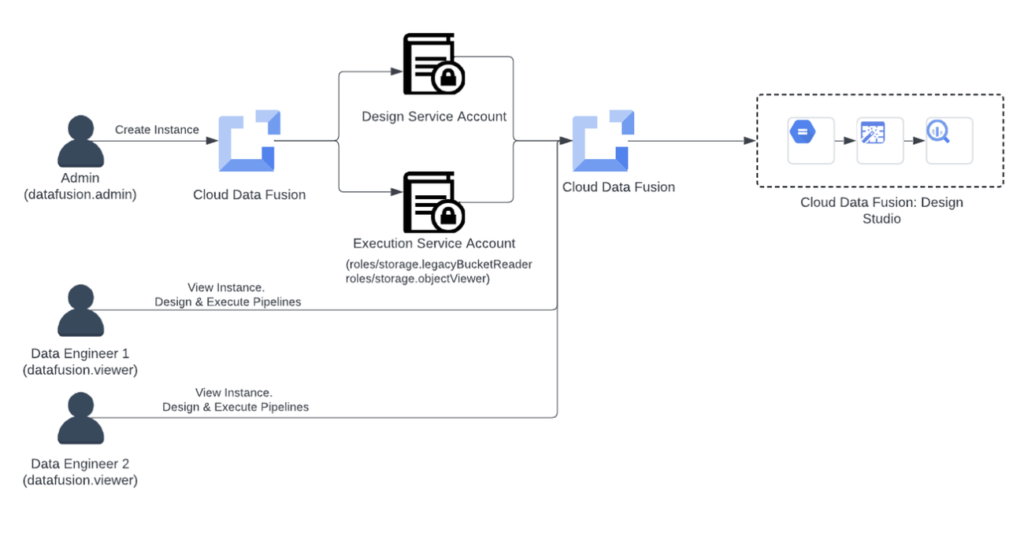

Scenario 1: IAM-based instance-level access control

Cloud Data Fusion is integrated with Google Cloud Identity and Access Management (IAM) and provides two predefined roles, admin and viewer. Following the IAM principle of least privilege, grant the admin role only to users who need to manage (create and delete) instances. Users who need access to an instance but do not need to manage it should be given the viewer role.

Imagine that Acme Inc. uses Cloud Data Fusion to run pipelines for its finance department. Acme Inc. wants to start with a basic access control structure and uses IAM to manage access as follows:

Assigning IAM roles to users:

- Acme Inc. identifies the data engineers and administrators in the finance division.

- The admin is granted the “roles/datafusion.admin” role so that they can create, modify, or delete Cloud Data Fusion instances.

- The admin then grants data engineers the “roles/datafusion.viewer” role, which lets them view the instance and create and run pipelines.

- Although data engineers have full access to Cloud Data Fusion Studio, they cannot upgrade, modify, or delete the Cloud Data Fusion instance.
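An IAM policy is essentially a list of bindings, each pairing one role with a list of members. The sketch below models the role assignments above locally as plain dictionaries in that `bindings`/`role`/`members` shape; it does not call any Google API, and the user emails are hypothetical. In practice the same bindings would be applied with the Cloud console or `gcloud projects add-iam-policy-binding`.

```python
import json


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Add member to the binding for role, creating the binding if needed (idempotent)."""
    bindings = policy.setdefault("bindings", [])
    for binding in bindings:
        if binding["role"] == role:
            if member not in binding["members"]:
                binding["members"].append(member)
            return policy
    bindings.append({"role": role, "members": [member]})
    return policy


# Hypothetical principals in Acme Inc.'s finance division.
policy = {"bindings": []}
add_binding(policy, "roles/datafusion.admin", "user:finance-admin@acme.example")
add_binding(policy, "roles/datafusion.viewer", "user:engineer-1@acme.example")
add_binding(policy, "roles/datafusion.viewer", "user:engineer-2@acme.example")
add_binding(policy, "roles/datafusion.viewer", "user:engineer-1@acme.example")  # no duplicate added
print(json.dumps(policy, indent=2))
```

Only one member holds the admin role, which is the least-privilege split the scenario describes.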

Assigning IAM roles to the service account:

- Acme Inc.’s data engineers want to create a pipeline that reads data from Cloud Storage and loads it into BigQuery.

- To allow the pipeline to read from Cloud Storage and run BigQuery jobs, the administrator grants the following roles to the Cloud Data Fusion execution service account:

  - roles/storage.legacyBucketReader

  - roles/storage.objectViewer

  - roles/bigquery.jobUser

- Once these roles are assigned, data engineers can use these services to ingest data through their pipelines.
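A quick way to reason about this step is to compare the roles the pipeline needs with the roles the execution service account actually holds. The sketch below does that over a locally modelled policy; the service account email and the partially configured policy are hypothetical. For simplicity it treats all bindings as one flat policy, whereas in practice the Cloud Storage roles may be granted on a bucket and the BigQuery role on the project.

```python
# Roles the text lists for a Cloud Storage -> BigQuery pipeline.
REQUIRED_ROLES = {
    "roles/storage.legacyBucketReader",
    "roles/storage.objectViewer",
    "roles/bigquery.jobUser",
}


def roles_for_member(policy: dict, member: str) -> set:
    """Collect every role whose binding lists the member."""
    return {b["role"] for b in policy.get("bindings", []) if member in b.get("members", [])}


def missing_roles(policy: dict, member: str, required: set) -> set:
    """Return the required roles that the member does not yet hold."""
    return set(required) - roles_for_member(policy, member)


# Hypothetical execution service account and a partially configured policy.
execution_sa = "serviceAccount:123456789012-compute@developer.gserviceaccount.com"
policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": [execution_sa]},
        {"role": "roles/bigquery.jobUser", "members": [execution_sa]},
    ]
}
print(sorted(missing_roles(policy, execution_sa, REQUIRED_ROLES)))
```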

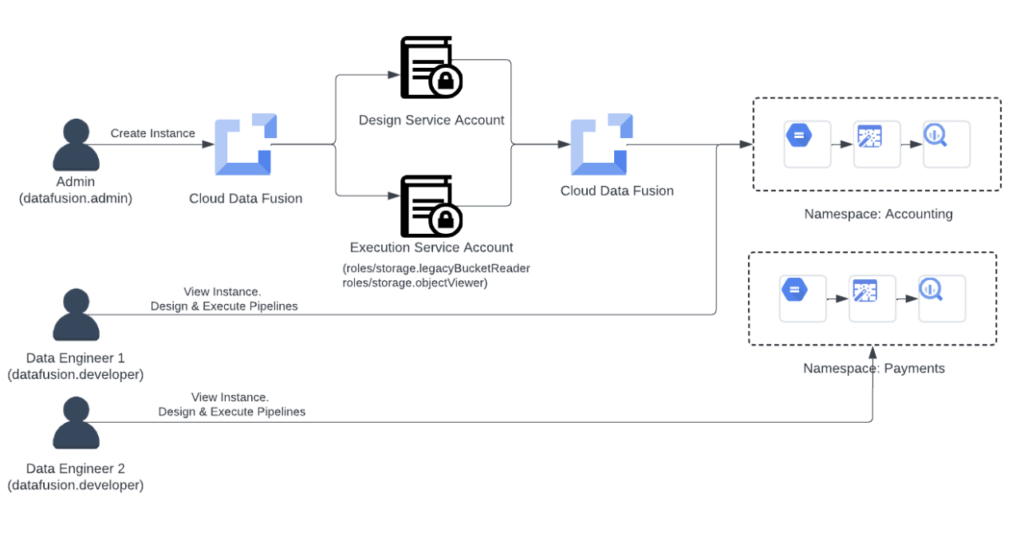

Scenario 2: RBAC-based namespace-level access control

RBAC lets you control fine-grained user permissions for your data pipelines in Cloud Data Fusion. It helps you manage who can access which resources, what they can do with them, and which parts of an instance they can access. At the namespace level, RBAC offers the following roles that can be assigned to a user:

- datafusion.viewer: View pipelines.

- datafusion.operator: Run deployed pipelines.

- datafusion.developer: Develop, preview, and deploy pipelines.

- datafusion.editor: Full access to all resources.

You can also create custom roles.
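One way to picture these four roles is as increasing levels of privilege within a namespace. The sketch below assumes each role includes everything the roles before it allow, which matches the one-line descriptions above but is a simplification; the action names are hypothetical and not Cloud Data Fusion permission identifiers.

```python
from enum import IntEnum


class NamespaceRole(IntEnum):
    """Namespace roles from the list above, ordered by how much they allow (assumed cumulative)."""
    VIEWER = 1     # datafusion.viewer: view pipelines
    OPERATOR = 2   # datafusion.operator: run deployed pipelines
    DEVELOPER = 3  # datafusion.developer: develop, preview, and deploy pipelines
    EDITOR = 4     # datafusion.editor: full access to all resources


# Hypothetical action names mapped to the least-privileged role that allows them.
MIN_ROLE = {
    "view": NamespaceRole.VIEWER,
    "run": NamespaceRole.OPERATOR,
    "preview": NamespaceRole.DEVELOPER,
    "deploy": NamespaceRole.DEVELOPER,
    "manage_all": NamespaceRole.EDITOR,
}


def is_allowed(role: NamespaceRole, action: str) -> bool:
    """True if the role is at least the minimum role required for the action."""
    return role >= MIN_ROLE[action]


print(is_allowed(NamespaceRole.OPERATOR, "run"), is_allowed(NamespaceRole.OPERATOR, "deploy"))
```

Custom roles would not fit a single ordered scale like this; they would need an explicit set of permissions per role instead.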

Acme Inc. now wants to separate the accounting and payments teams within the finance department’s use of Cloud Data Fusion. It needs to ensure that each team’s data engineers can work with pipelines only in their own team’s namespace: accounting engineers in the “Accounting” namespace and payments engineers in the “Payments” namespace. Acme Inc. uses RBAC as follows:

Assigning roles to users (principals) at the namespace level:

- Acme Inc.’s Cloud Data Fusion administrator identifies a data engineer (DE1) in the accounting division.

- In the permissions section of the Cloud Data Fusion console, the administrator grants DE1 access to the “Accounting” namespace.

- The administrator assigns DE1 the datafusion.developer role, which lets them create, preview, and deploy pipelines in the “Accounting” namespace.

- Similarly, the administrator grants a data engineer in the payments department (DE2) the datafusion.developer role in the “Payments” namespace.
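The key property of these assignments is that a grant is scoped to a single namespace. The sketch below models the Acme Inc. grants as a lookup keyed by (principal, namespace); the principals, namespaces, and helper names are hypothetical illustrations, not a Cloud Data Fusion API.

```python
# Hypothetical grants from the Acme Inc. example: (principal, namespace) -> namespace role.
GRANTS = {
    ("DE1", "Accounting"): "datafusion.developer",
    ("DE2", "Payments"): "datafusion.developer",
}

# Roles that, per the role list above, allow creating, previewing, and deploying pipelines.
DEPLOY_ROLES = {"datafusion.developer", "datafusion.editor"}


def role_in_namespace(principal: str, namespace: str):
    """Return the principal's role in the namespace, or None if they have no grant there."""
    return GRANTS.get((principal, namespace))


def can_deploy(principal: str, namespace: str) -> bool:
    """Grants are scoped per namespace, so a role in one namespace says nothing about another."""
    return role_in_namespace(principal, namespace) in DEPLOY_ROLES


for principal in ("DE1", "DE2"):
    for namespace in ("Accounting", "Payments"):
        print(principal, namespace, can_deploy(principal, namespace))
```

DE1 can deploy only in “Accounting” and DE2 only in “Payments”, even though both hold the same role name.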

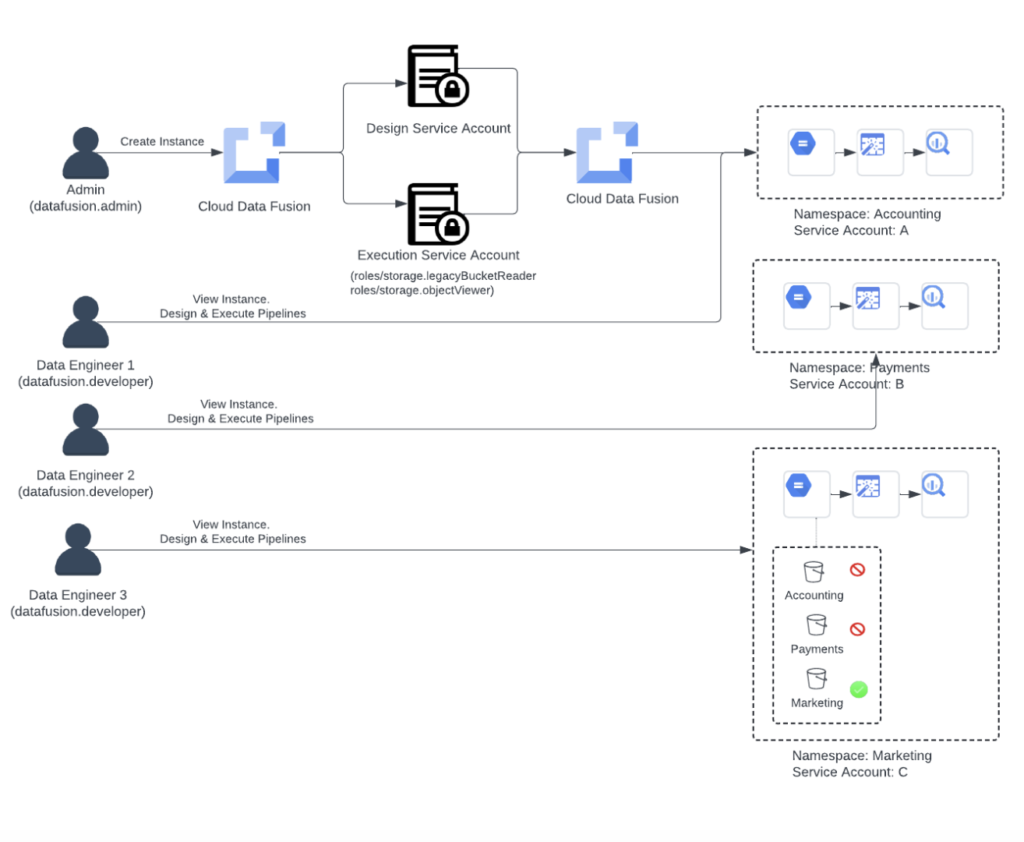

Scenario 3: Use namespace service accounts to isolate access

Namespace-level service accounts let you further restrict access to Google Cloud resources within each namespace. Namespace service accounts isolate only pipeline design-time operations, such as Wrangler, pipeline preview, and connections.

Consider the Acme Inc. case again.

The marketing division now wants to use Cloud Data Fusion more extensively. Acme Inc. creates a namespace just for the “Marketing” department so that its data engineers, although they can run pipelines in that namespace, cannot view other departments’ data while designing pipelines. Acme Inc. uses namespace service accounts as follows:

Assigning roles to users (principals) at the namespace level:

- Acme Inc.’s Cloud Data Fusion administrator identifies a data engineer (DE3) in the “Marketing” division.

- In the permissions section of the Cloud Data Fusion console, the administrator grants DE3 access to the “Marketing” namespace.

- The administrator assigns DE3 the datafusion.developer role, which lets them create, preview, and deploy pipelines in the “Marketing” namespace.

- DE3 wants to build a pipeline that ingests data from Cloud Storage into BigQuery, the same use case as the other departments.

- When DE3 creates a new connection in the “Marketing” namespace, they can see other departments’ storage buckets.

Assigning service accounts at the namespace level:

- The administrator configures a service account for each of the “Accounting,” “Payments,” and “Marketing” namespaces and assigns it to that namespace.

- In Cloud Storage, the administrator grants the “roles/storage.objectUser” role to each namespace’s service account on that department’s buckets.

- DE3 creates a Cloud Storage connection in Cloud Data Fusion and opens the bucket list. DE3 can still see the list of buckets, but can access only the “Marketing” department’s buckets.
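The outcome of this scenario can be modelled as two separate questions: which buckets appear in the list, and which buckets the namespace’s own service account may use. In the sketch below, the service account emails, bucket names, and per-bucket grants are hypothetical, and bucket visibility is hard-coded to mirror the behaviour the text describes rather than derived from real IAM rules.

```python
# Hypothetical namespace service accounts, one per department namespace.
NAMESPACE_SA = {
    "Accounting": "serviceAccount:ns-accounting@acme-project.iam.gserviceaccount.com",
    "Payments": "serviceAccount:ns-payments@acme-project.iam.gserviceaccount.com",
    "Marketing": "serviceAccount:ns-marketing@acme-project.iam.gserviceaccount.com",
}

# Hypothetical buckets and the members holding roles/storage.objectUser on each.
BUCKET_OBJECT_USERS = {
    "acme-accounting-data": {NAMESPACE_SA["Accounting"]},
    "acme-payments-data": {NAMESPACE_SA["Payments"]},
    "acme-marketing-data": {NAMESPACE_SA["Marketing"]},
}


def visible_buckets() -> list:
    """Per the text, engineers can still see the full bucket list."""
    return sorted(BUCKET_OBJECT_USERS)


def accessible_buckets(namespace: str) -> list:
    """Buckets whose objects the namespace's own service account may use at design time."""
    sa = NAMESPACE_SA[namespace]
    return sorted(bucket for bucket, members in BUCKET_OBJECT_USERS.items() if sa in members)


print("visible:", visible_buckets())
print("accessible from Marketing:", accessible_buckets("Marketing"))
```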

Prevent unauthorised access and alteration of data

Cloud Data Fusion provides strong capabilities for reducing the risk of unintended data modification or unauthorised access in ETL workloads. By designing roles thoughtfully and using namespaces strategically, you can build a customised, secure data environment that meets your organisation’s needs.

Cloud Data Fusion pricing

Cloud Data Fusion pricing has two main components:

Design cost: This is determined by the number of hours a Cloud Data Fusion instance is running, regardless of how many pipelines you build or run. Cloud Data Fusion has three editions, Developer, Basic, and Enterprise, each with its own hourly rate. A free tier lets each customer use the first 120 hours per month at no charge. Based on the hourly rates, approximate monthly costs are:

- Developer: ~$250

- Basic: ~$1100

- Enterprise: ~$3000
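The design cost is simple arithmetic: billable instance-hours multiplied by the edition’s hourly rate. The hourly rates in the sketch below are assumptions (not from this article), so check the current pricing page. With these rates, a full month of about 730 hours gives roughly $255 (Developer), $1314 (Basic), and $3066 (Enterprise). The ~$1100 Basic figure above matches deducting the 120 free hours (610 h × $1.80 = $1098).

```python
HOURS_PER_MONTH = 730  # roughly 24 * 365 / 12

# Assumed list prices in USD per instance-hour; not from the article, check current pricing.
HOURLY_RATE = {"Developer": 0.35, "Basic": 1.80, "Enterprise": 4.20}


def monthly_design_cost(edition: str, hours: float = HOURS_PER_MONTH, free_hours: float = 0.0) -> float:
    """Design cost = billable instance-hours * hourly rate, after subtracting any free hours."""
    billable_hours = max(hours - free_hours, 0.0)
    return billable_hours * HOURLY_RATE[edition]


for edition in HOURLY_RATE:
    print(f"{edition}: ${monthly_design_cost(edition):,.2f}")
print(f"Basic, 120 free hours: ${monthly_design_cost('Basic', free_hours=120):,.2f}")
```

Because billing follows running hours, stopping or deleting instances you are not using is the main lever on this part of the bill.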

Processing cost: This is determined by the Dataproc clusters your pipelines run on. Dataproc is another Google Cloud service, which provides clusters for data processing. What you pay depends on how many virtual CPUs (vCPUs) you use and how long your jobs run. The Dataproc fee is about $0.01 per vCPU per hour, billed by the second, on top of the cost of the underlying Compute Engine resources.
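To estimate the Dataproc portion of a pipeline run, multiply the cluster’s vCPU count by its running time in hours and by the per-vCPU-hour fee. This sketch assumes a fee of $0.010 per vCPU per hour with per-second billing and a 1-minute minimum. It covers only the Dataproc fee, not the Compute Engine VMs and disks, and the cluster size and runtime are hypothetical.

```python
# Assumed Dataproc fee in USD per vCPU-hour; Compute Engine resources are billed separately.
DATAPROC_RATE_PER_VCPU_HOUR = 0.010
MIN_BILLED_SECONDS = 60  # assumed 1-minute minimum per cluster run


def dataproc_fee(vcpus: int, runtime_seconds: float) -> float:
    """Dataproc fee only: vCPUs * billed hours * rate, with per-second billing."""
    billed_seconds = max(runtime_seconds, MIN_BILLED_SECONDS)
    return vcpus * (billed_seconds / 3600) * DATAPROC_RATE_PER_VCPU_HOUR


# Hypothetical pipeline run: a 12-vCPU cluster busy for 30 minutes.
print(f"${dataproc_fee(12, 30 * 60):.4f}")
```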