Direct Preference Optimization Explained

An improved technique for reinforcement learning with human feedback

Contrasting training pairs that have large reward differences reduces spurious correlations and can improve the performance of direct preference optimization by as much as 20% to 40%.

Reinforcement learning with human feedback (RLHF) is the standard method for aligning large language models (LLMs) with human preferences, such as the preferences for nontoxic language and factually accurate responses. Direct preference optimization, recently one of the most popular RLHF methods, has the LLM choose between two output options, one of which human annotators have labeled as preferred.

However, with direct preference optimization (DPO) and other similar direct-alignment algorithms, LLMs run the risk of learning spurious correlations from the data. In toxicity datasets, for instance, the serious, thoughtful responses are commonly longer than the offensive ones. During RLHF, an LLM could thus learn to prefer longer responses to shorter ones, which may not be preferable in general.

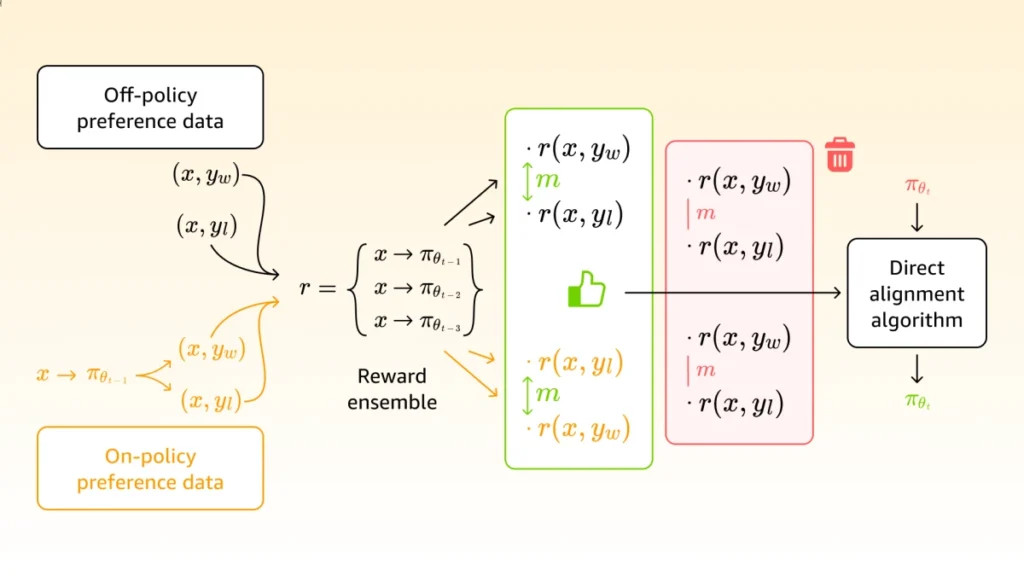

At this year’s International Conference on Learning Representations (ICLR), Amazon Science presented SeRA (self-reviewing and alignment), a method for limiting such spurious correlations. First, after an initial round of RLHF on human-annotated data, it uses the LLM itself to generate additional training examples. Next, it uses the LLM’s output probabilities to assess the strength of preference in each training pair, keeping only the pairs in which the preferred response is strongly preferred.

To evaluate the method, Amazon Science compares a SeRA-trained model with three baseline models on four benchmark datasets. For each test input, the output of the SeRA-trained model is compared with the output of each baseline, and an off-the-shelf LLM judges which response is better. In these pairwise comparisons, the SeRA-trained model consistently outperforms all three baselines, by as much as 20% to 40%.

Direct preference optimization

Reinforcement learning is a trial-and-error method in which an agent interacts with an environment and receives larger or smaller rewards depending on the actions it takes. Over time, the agent tries to learn a policy that maximizes its cumulative reward.

In classical reinforcement learning, that interaction with the environment can be literal: a robot, for instance, might receive a large reward for successfully navigating to a designated location and a negative reward for running into a wall. In RLHF, however, the reward depends on how well an LLM’s output matches a paradigm case specified by a human.

In conventional RLHF, the reward is computed by a separate model, which is itself trained on human-annotated data. This approach is slow, however, and does not scale well. DPO needs no separate reward model: the LLM receives the reward if it chooses the human-preferred output and not if it doesn’t.
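As a rough illustration of the reward just described, the per-pair objective from the standard DPO formulation can be sketched in plain Python. The function and argument names are illustrative. `beta` is the usual DPO temperature, with an assumed default of 0.1, and the log-probabilities are assumed to be summed over response tokens and supplied by the caller. The baseline log-probabilities come from a frozen reference copy of the LLM, not from a separately trained reward model.

```python
import math

def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Per-pair DPO loss computed from sequence log-probabilities."""
    # Implicit rewards: scaled log-ratio of the policy to the frozen reference.
    reward_chosen = beta * (policy_logp_chosen - ref_logp_chosen)
    reward_rejected = beta * (policy_logp_rejected - ref_logp_rejected)
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)), written in a numerically stable softplus form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# The loss is lower when the policy favors the human-preferred response.
loss_good = dpo_loss(-5.0, -10.0, -7.0, -7.0)
loss_bad = dpo_loss(-10.0, -5.0, -7.0, -7.0)
```

The loss depends only on which response the annotators preferred, not on how strongly they preferred it.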

DPO’s drawback is that it treats all training pairs equally: the reward is the same whether the preferred output is strongly preferred or only mildly preferred. This increases the chances that the model will learn spurious correlations.

If, for instance, choosing strongly toxic responses incurred a greater penalty than choosing mildly toxic responses, the model could infer that toxicity, and not response length, was the relevant feature of the training examples. DPO irons out those differences; SeRA reintroduces them.

SeRA

With SeRA, the first step is to perform conventional DPO, using a dataset of human-annotated example pairs. After this first pass through the data, the LLM has learned something about the types of outputs that humans prefer.

Next, the updated model is used to generate a new set of training examples. For each generated response pair, each response is assigned a preference score, based on the updated model’s probability of generating that response. Only pairs in which the preferred response scores significantly higher than the non-preferred response are retained.
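The selection step can be sketched as a simple margin filter. This is a minimal illustration, not the published implementation: the `ScoredPair` container, the `min_margin` threshold, and its default value are all hypothetical, and the preference scores are assumed to have been computed beforehand from the updated model’s output probabilities.

```python
from dataclasses import dataclass

@dataclass
class ScoredPair:
    prompt: str
    chosen: str
    rejected: str
    score_chosen: float    # assumed: preference score of the preferred response
    score_rejected: float  # assumed: preference score of the other response

def margin(pair):
    """How much higher the preferred response scores than the other one."""
    return pair.score_chosen - pair.score_rejected

def keep_strong_pairs(pairs, min_margin=1.0):
    """Retain only pairs whose preference margin reaches the threshold."""
    return [p for p in pairs if margin(p) >= min_margin]

pairs = [
    ScoredPair("q1", "polite reply", "rude reply", 2.5, -1.0),   # margin 3.5
    ScoredPair("q2", "long reply", "short reply", 0.3, 0.1),     # margin 0.2
    ScoredPair("q3", "helpful reply", "evasive reply", 1.0, 1.5) # margin -0.5
]
strong = keep_strong_pairs(pairs, min_margin=1.0)
```

With these made-up scores, only the first pair survives; the weakly preferred pair and the pair whose scores contradict the label are dropped.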

The same metric is then used to filter the original, human-annotated dataset. Filtered samples from the original dataset are combined with filtered samples from the newly generated dataset, and DPO is performed again. This process repeats, with the generated samples making up a larger and larger fraction of the dataset, until model performance converges.
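One way to picture the changing data mix is the sketch below. The linear schedule, its `step` and `cap` values, and both function names are assumptions made for illustration; the text above says only that the generated share grows from round to round.

```python
import random

def generated_share_schedule(round_index, step=0.2, cap=0.8):
    """Hypothetical schedule: the generated share grows linearly, then plateaus."""
    return min(cap, step * round_index)

def build_round_dataset(filtered_human, filtered_generated,
                        generated_share, size, seed=0):
    """Sample one round's training set from the two filtered pools."""
    rng = random.Random(seed)
    # Never request more samples than a pool actually contains.
    n_generated = min(round(size * generated_share), len(filtered_generated))
    n_human = min(size - n_generated, len(filtered_human))
    return (rng.sample(filtered_human, n_human)
            + rng.sample(filtered_generated, n_generated))

human_pool = [f"h{i}" for i in range(10)]
generated_pool = [f"g{i}" for i in range(10)]
round_data = build_round_dataset(human_pool, generated_pool,
                                 generated_share=0.3, size=10)
```

In a full training loop, each round would presumably regenerate and refilter both pools with the latest model before sampling, stopping once a held-out metric stops improving.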

The approach rests on the assumption that if a dataset is designed to represent some contrast but also contains spurious correlations, then the intended contrast (between toxic and nontoxic data, for instance) will be significantly greater than the unintended contrast (between long and short responses, for instance).

This assumption held for the four benchmark datasets Amazon used to evaluate its method, and the researchers consider it reasonable for other spurious correlations as well. There may be cases in which it does not hold, however, so it is important to monitor the model’s convergence behavior when applying SeRA.

Although Amazon used DPO in its experiments, its paper also shows how to generalize the method to other direct-alignment algorithms. Finally, using model-generated data to train a model carries the risk of a feedback loop, in which the model overamplifies some aspect of the initial dataset. To keep the characteristic features of the training data consistent, the model’s reward in each pass through the data is therefore based not only on the current iteration but on past iterations as well.
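A simple way to combine the current iteration with past ones is a weighted average of the preference margins they assign to a pair. The sketch below is only an assumption about what such a combination could look like: the function name, the equal default weighting, and the use of a plain mean over past iterations are all illustrative choices, not details taken from the paper.

```python
def ensemble_margin(current_margin, previous_margins, current_weight=0.5):
    """Blend the current model's margin with the mean margin of past iterations."""
    if not previous_margins:
        # First pass: there is no history to blend with.
        return current_margin
    past_mean = sum(previous_margins) / len(previous_margins)
    return current_weight * current_margin + (1.0 - current_weight) * past_mean

# A margin that jumps in the latest iteration is pulled back toward its history.
blended = ensemble_margin(4.0, [1.0, 3.0])
```

Damping the latest estimate this way limits how far any single round of self-generated data can shift the training signal.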