Introduction

CLIP is a neural network that learns visual concepts from natural-language supervision. It is pretrained on the task of matching images with their captions, training an image encoder and a text encoder jointly. Because of this design, Contrastive Language-Image Pretraining can be applied to a range of visual classification benchmarks simply by being given the names of the visual categories to recognize. This demonstrates “zero-shot” capabilities similar to those of the GPT-2 and GPT-3 models.

The Contrastive Language-Image Pretraining (CLIP) architecture is a key component of contemporary computer vision. It can be used to train embedding models for image and video classification, image similarity calculations, retrieval-augmented generation (RAG), and other applications.

The CLIP architecture, released by OpenAI, has been used to train several public checkpoints on large datasets. Since OpenAI published the first model using the CLIP architecture, other companies, including Apple and Meta AI, have trained their own CLIP models. However, these models are usually trained for general-purpose use rather than customized for a particular use case.

What is CLIP?

Contrastive Language-Image Pretraining (CLIP) is a multimodal vision model architecture created by OpenAI. CLIP can compute embeddings for both text and images. CLIP models are trained on image-text pairs: the pairs are used to train an embedding model that learns how the contents of an image relate to its text caption.
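To make the idea of a shared text-image embedding space concrete, here is a minimal numpy sketch. It assumes the image and text encoders have already produced feature vectors. The random features and the projection matrices `W_img` and `W_txt` are hypothetical stand-ins, not CLIP's trained weights. Each modality is projected into the same space and L2-normalized, so the dot product between an image embedding and a caption embedding is their cosine similarity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical encoder outputs: 3 images (512-d features) and 3 captions (256-d features)
image_features = rng.normal(size=(3, 512))
text_features = rng.normal(size=(3, 256))

# Hypothetical learned projections into a shared 128-d embedding space
W_img = rng.normal(size=(512, 128))
W_txt = rng.normal(size=(256, 128))


def embed(features: np.ndarray, projection: np.ndarray) -> np.ndarray:
    """Project features into the shared space and L2-normalize each row."""
    z = features @ projection
    return z / np.linalg.norm(z, axis=1, keepdims=True)


image_emb = embed(image_features, W_img)
text_emb = embed(text_features, W_txt)

# similarity[i, j] = cosine similarity between image i and caption j
similarity = image_emb @ text_emb.T
print(similarity.shape)  # (3, 3)
```

With untrained random projections these scores carry no meaning; training is what pulls each image toward its own caption in this shared space.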

CLIP models

Many enterprise applications can benefit from CLIP models. For instance, they can help with:

- Sorting pictures of components on production lines

- Sorting videos in a media collection

- Moderating image content in real time and at scale

- Deduplicating images before training large models

- And more
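Deduplicating images before training can be sketched as a near-duplicate search over CLIP image embeddings. The sketch below assumes the embeddings have already been computed and L2-normalized (random vectors stand in for them here). The helper name `deduplicate` is hypothetical, and the 0.95 cosine-similarity threshold is an arbitrary illustrative choice that would need tuning on real data. The function greedily keeps an image only if it is not too similar to any image already kept.

```python
import numpy as np


def deduplicate(embeddings: np.ndarray, threshold: float = 0.95) -> list[int]:
    """Return indices of images to keep, greedily dropping near-duplicates.

    Assumes each row of `embeddings` is an L2-normalized image embedding,
    so the dot product between two rows equals their cosine similarity.
    """
    kept: list[int] = []
    for i, emb in enumerate(embeddings):
        # Keep image i only if its similarity to every kept image is below the threshold
        if all(float(emb @ embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


rng = np.random.default_rng(0)
base = rng.normal(size=(4, 64))
# Append a slightly perturbed copy of image 1, i.e. a near-duplicate at index 4
data = np.vstack([base, base[1] + 0.01 * rng.normal(size=64)])
data /= np.linalg.norm(data, axis=1, keepdims=True)

print(deduplicate(data))  # [0, 1, 2, 3]
```

The pairwise loop is quadratic in the number of images; for large collections, an approximate nearest-neighbor index would be a more practical way to find candidate duplicates.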

Depending on the hardware, CLIP models can run at several frames per second. Models like CLIP run best on hardware designed for AI workloads, such as the Intel Gaudi 2 accelerator. For instance, researchers found that a single Intel Gaudi 2 accelerator could compute 66,211 CLIP embeddings in 20 minutes. This speed is sufficient for many real-time applications, and adding more accelerators can increase throughput further.
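For a sense of scale, the reported figure converts to a per-second rate as follows. This is plain arithmetic on the numbers quoted above; it does not account for batch size, preprocessing, or model size, which are not specified here.

$$
\frac{66{,}211\ \text{embeddings}}{20\ \text{min} \times 60\ \text{s/min}}
= \frac{66{,}211\ \text{embeddings}}{1{,}200\ \text{s}}
\approx 55.2\ \text{embeddings/s}
$$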

While OpenAI’s checkpoint and other off-the-shelf CLIP models are effective for many use cases, they are less suited to highly specialized tasks or to tasks involving proprietary enterprise data, which general-purpose models could not have been trained on.

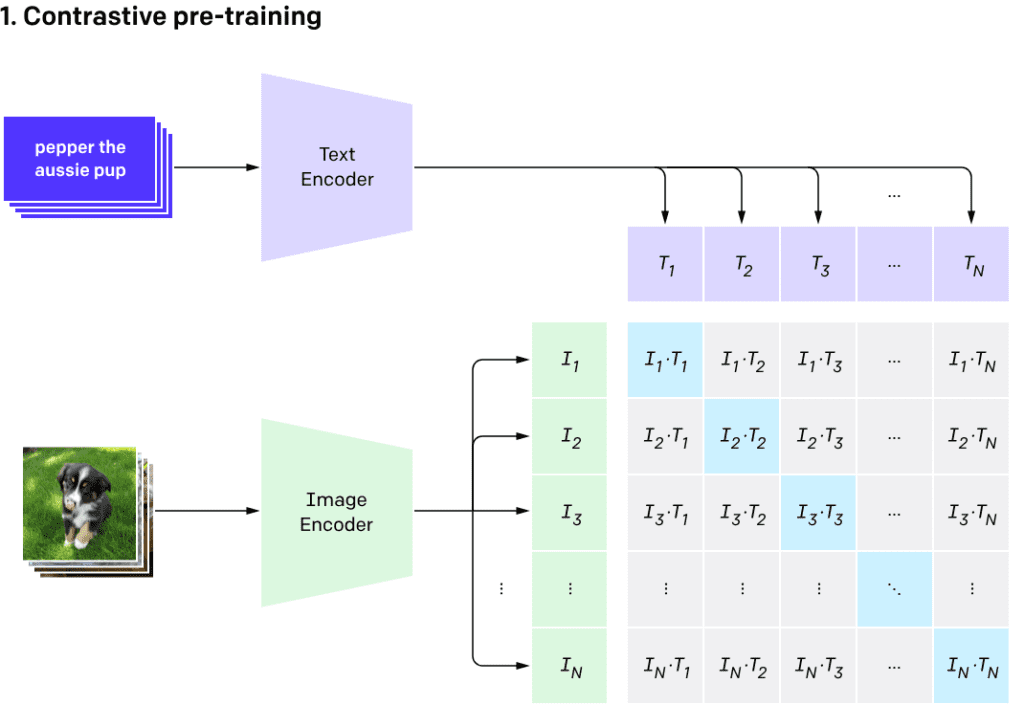

Contrastive Pre-training

Given a batch of image-text pairs, Contrastive Language-Image Pretraining computes the dense cosine similarity matrix between every possible (image, text) pairing in the batch. The main idea is to increase the similarity of the correct pairs (shown in blue in the figure) and decrease the similarity of the incorrect pairs (shown in grey). This is done by optimizing a symmetric cross-entropy loss over these similarity scores.

Put simply, the goal is to increase the similarity between each image and its matching caption while decreasing its similarity to all other captions in the batch. The same logic applies to each caption: increase its similarity to its matching image and decrease its similarity to all other images.
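The symmetric loss described above can be sketched in numpy as follows. It follows the structure of the pseudocode in the CLIP paper: normalize both sets of embeddings, compute the N×N cosine similarity matrix scaled by a temperature, then average an image-to-text cross-entropy (over rows) and a text-to-image cross-entropy (over columns), where the correct match for row i is column i. The fixed temperature of 0.07 is an assumption for illustration; CLIP learns this scale during training. The embeddings are random stand-ins.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean cross-entropy of a row-wise softmax over `logits` against integer targets."""
    # Subtract each row's max for numerical stability before exponentiating
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(targets)), targets].mean())


def clip_loss(image_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric contrastive loss over a batch of N matched (image, text) pairs."""
    image_emb = l2_normalize(image_emb)
    text_emb = l2_normalize(text_emb)
    # logits[i, j] = scaled cosine similarity between image i and caption j
    logits = image_emb @ text_emb.T / temperature
    targets = np.arange(logits.shape[0])  # pair i is the correct match for row i
    loss_images = cross_entropy(logits, targets)   # each image should pick its caption
    loss_texts = cross_entropy(logits.T, targets)  # each caption should pick its image
    return (loss_images + loss_texts) / 2


rng = np.random.default_rng(0)
images = rng.normal(size=(4, 32))
aligned_texts = images + 0.05 * rng.normal(size=(4, 32))  # captions close to their own images
shuffled_texts = aligned_texts[[1, 2, 3, 0]]              # captions paired with the wrong images

print(clip_loss(images, aligned_texts) < clip_loss(images, shuffled_texts))  # True
```

Correctly aligned pairs produce a low loss, while mismatched pairs produce a high one, which is exactly the pressure that pulls matching images and captions together during training.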

Text Encoder And Image Encoder

Because CLIP uses separate encoders for text and images, users are free to choose the encoders they want. For example, they can use a different text encoder or replace the default image encoder, such as a Vision Transformer, with an alternative like ResNet, which makes experimentation easier. Naturally, changing either encoder changes the embedding distribution, so the model must be retrained.
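This modularity can be sketched as a dual encoder that accepts any image encoder and any text encoder as callables. Everything here is hypothetical: `DualEncoder`, `make_toy_encoder`, and the feature sizes are illustrative stand-ins, not CLIP's actual classes or dimensions. Each encoder only needs to return a feature vector, and a per-modality projection maps those features into the shared embedding space. Swapping an encoder changes its features (and often their dimension), which is why a new projection, and in practice a retrained model, is needed.

```python
from dataclasses import dataclass
from typing import Callable

import numpy as np

# An encoder maps a batch of raw inputs to a (batch, feature_dim) array
Encoder = Callable[[np.ndarray], np.ndarray]


def make_toy_encoder(input_dim: int, feature_dim: int, seed: int) -> Encoder:
    """Return a random linear map standing in for a real backbone such as a ViT or ResNet."""
    weights = np.random.default_rng(seed).normal(size=(input_dim, feature_dim))
    return lambda x: x.reshape(len(x), -1) @ weights


@dataclass
class DualEncoder:
    """Hypothetical CLIP-style model with interchangeable encoders."""
    image_encoder: Encoder
    text_encoder: Encoder
    image_projection: np.ndarray  # (image_feature_dim, embed_dim)
    text_projection: np.ndarray   # (text_feature_dim, embed_dim)

    def _embed(self, features: np.ndarray, projection: np.ndarray) -> np.ndarray:
        z = features @ projection
        return z / np.linalg.norm(z, axis=1, keepdims=True)

    def encode_images(self, images: np.ndarray) -> np.ndarray:
        return self._embed(self.image_encoder(images), self.image_projection)

    def encode_texts(self, texts: np.ndarray) -> np.ndarray:
        return self._embed(self.text_encoder(texts), self.text_projection)


embed_dim = 64
rng = np.random.default_rng(0)
images = rng.normal(size=(2, 8, 8, 3))  # toy "images", flattened to 192 values each
texts = rng.normal(size=(2, 16))        # toy "token" features

text_encoder = make_toy_encoder(16, 512, seed=1)

# Original configuration: a "ViT-like" image encoder producing 768-d features
model_a = DualEncoder(
    image_encoder=make_toy_encoder(192, 768, seed=2),
    text_encoder=text_encoder,
    image_projection=rng.normal(size=(768, embed_dim)),
    text_projection=rng.normal(size=(512, embed_dim)),
)

# Swapped configuration: a "ResNet-like" image encoder with 2048-d features needs a new projection
model_b = DualEncoder(
    image_encoder=make_toy_encoder(192, 2048, seed=3),
    text_encoder=text_encoder,
    image_projection=rng.normal(size=(2048, embed_dim)),
    text_projection=model_a.text_projection,
)

print(model_a.encode_images(images).shape, model_b.encode_images(images).shape)  # (2, 64) (2, 64)
```

Both configurations output embeddings of the same shape, but the swapped encoder's embeddings follow a different distribution, so text and image embeddings would only line up again after training.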

Contrastive Language-Image Pretraining Use cases

Contrastive Language-Image Pretraining may be used for many different purposes. Some noteworthy use cases are as follows:

- Zero-shot image classification

- Similarity search

- Diffusion model conditioning
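Similarity search reduces to ranking stored embeddings by their cosine similarity to a query embedding. The sketch below assumes an index of L2-normalized image embeddings and a query embedding already produced by a CLIP model; random vectors stand in for both, and `top_k_search` is a hypothetical helper name. Because CLIP places text and images in the same space, the query could be either a text embedding or an image embedding.

```python
import numpy as np


def top_k_search(query: np.ndarray, index: np.ndarray, k: int = 3) -> list[tuple[int, float]]:
    """Return (row, cosine similarity) for the k index rows most similar to `query`.

    Assumes `query` and every row of `index` are L2-normalized.
    """
    scores = index @ query
    # argsort is ascending, so reverse it for descending order and keep the first k
    best = np.argsort(scores)[::-1][:k]
    return [(int(i), float(scores[i])) for i in best]


rng = np.random.default_rng(0)
index = rng.normal(size=(100, 64))
index /= np.linalg.norm(index, axis=1, keepdims=True)

# A query that is a slightly noisy version of stored item 42 should retrieve it first
query = index[42] + 0.01 * rng.normal(size=64)
query /= np.linalg.norm(query)

results = top_k_search(query, index, k=3)
print(results[0][0])  # 42
```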

Usage

In real-world applications, the input is usually an image and a set of predefined classes. The following Python example shows how to run CLIP with the transformers library. In this case, the goal is to zero-shot classify the image at the URL below as either a cat or a dog.


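```python
import requests
import torch
from PIL import Image
from transformers import CLIPModel, CLIPProcessor

# Load the pretrained CLIP ViT-B/32 checkpoint and its matching preprocessor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Download a sample image from the COCO 2017 validation set
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Tokenize the candidate captions and preprocess the image in a single call
inputs = processor(
    text=["a photo of a cat", "a photo of a dog"],
    images=image,
    return_tensors="pt",
    padding=True,
)

# Inference only, so gradients are not needed
with torch.no_grad():
    outputs = model(**inputs)

# logits_per_image has shape (num_images, num_texts): scaled image-text similarity scores
logits_per_image = outputs.logits_per_image
# Softmax over the candidate captions gives a probability for each label
probs = logits_per_image.softmax(dim=1)
print(probs)
```

Running this code produced the following probabilities: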

- “a photo of a cat”: 99.49%

- “a photo of a dog”: 0.51%
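Conceptually, `logits_per_image` is the cosine similarity between the image embedding and each caption embedding, multiplied by a learned logit scale, and the softmax turns those scores into probabilities over the candidate labels. The numpy sketch below reproduces that final step with made-up embeddings, chosen so that the cat prompt wins. The three-dimensional vectors are hypothetical, and the fixed scale of 100 is an assumption (approximately the value learned by OpenAI's released checkpoints).

```python
import numpy as np

labels = ["cat", "dog"]
# Zero-shot prompts are built from the class names with a text template
prompts = [f"a photo of a {label}" for label in labels]


def normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


# Hypothetical embeddings: in practice these come from CLIP's image and text encoders
image_emb = normalize(np.array([0.9, 0.1, 0.4]))
text_emb = normalize(np.array([[0.8, 0.2, 0.5],    # "a photo of a cat"
                               [0.1, 0.9, 0.3]]))  # "a photo of a dog"

logit_scale = 100.0  # assumed value; CLIP learns this scale during training
logits = logit_scale * (text_emb @ image_emb)  # one score per prompt

# Softmax over the candidate prompts (shifted by the max for numerical stability)
probs = np.exp(logits - logits.max())
probs /= probs.sum()

for prompt, p in zip(prompts, probs):
    print(f"{prompt}: {p:.2%}")
```

Adding a new class only requires adding another prompt; no retraining is involved, which is what makes this classification “zero-shot”.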

Limitations

Contrastive Language-Image Pretraining is good at zero-shot classification, but it is unlikely to beat a customized, specialized model. Furthermore, it generalizes poorly to data that differs substantially from what it saw during training. The original CLIP paper also shows that the choice of categories affects CLIP’s performance and biases, as seen in experiments on the FairFace dataset. Accuracy differed notably between tasks: racial classification accuracy was about 93%, while gender classification accuracy exceeded 96%.

Conclusion

Contrastive Language-Image Pretraining (CLIP) models are embedding models that can be used for a variety of tasks, including dataset deduplication, semantic search, zero-shot image and video classification, image-based retrieval-augmented generation (RAG), and more.

OpenAI’s CLIP model has transformed the multimodal field. What sets CLIP apart is its zero-shot ability to classify images into categories it was not explicitly trained on. This capacity for generalization comes from its training approach, which teaches it to match images with their text descriptions.