Dataproc Serverless: Faster, easier, and smarter. New features further improve the speed, ease of use, and intelligence of Dataproc Serverless.

Elevate your Spark experience with:

Native query execution: Take advantage of the new native query execution in the Premium tier to see significant speed improvements.

Seamless monitoring with Spark UI: Monitor job progress in real time with a built-in Spark UI that is available by default for all Spark batches and sessions.

Streamlined investigation: Troubleshoot batch jobs from a single “Investigate” page that automatically filters logs by errors and highlights the most important metrics.

Proactive autotuning and assisted troubleshooting with Gemini: Let Gemini reduce failures and tune performance by analyzing historical patterns. Use Gemini-powered insights and recommendations to resolve problems quickly.

Accelerate your Spark jobs with native query execution

By enabling native query execution, you can significantly improve the performance of your Spark batch jobs in the Premium tier on Dataproc Serverless runtimes 2.2.26+ or 1.2.26+, without any changes to your application.
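As a rough illustration of the version requirement, the following Python sketch checks whether a runtime version string meets the stated minimums (2.2.26+ or 1.2.26+). The function name and the reading that each minimum applies only within its own major.minor line are assumptions made for this example; this is not a Dataproc API.

```python
# Minimum patch level per runtime line, taken from the versions stated in the text.
MIN_PATCH = {(2, 2): 26, (1, 2): 26}


def supports_native_query_execution(runtime_version: str) -> bool:
    """Return True if a 'major.minor.patch' version meets the stated minimum.

    Assumption: the '+' refers to the patch level within the 1.2 and 2.2
    runtime lines only; any other line is treated as not qualifying.
    """
    try:
        major, minor, patch = (int(part) for part in runtime_version.split("."))
    except ValueError:
        # Not a plain major.minor.patch string (for example "2.2" or "latest").
        return False
    min_patch = MIN_PATCH.get((major, minor))
    return min_patch is not None and patch >= min_patch
```

A check like this could run in a deployment script before native query execution is switched on for a batch.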

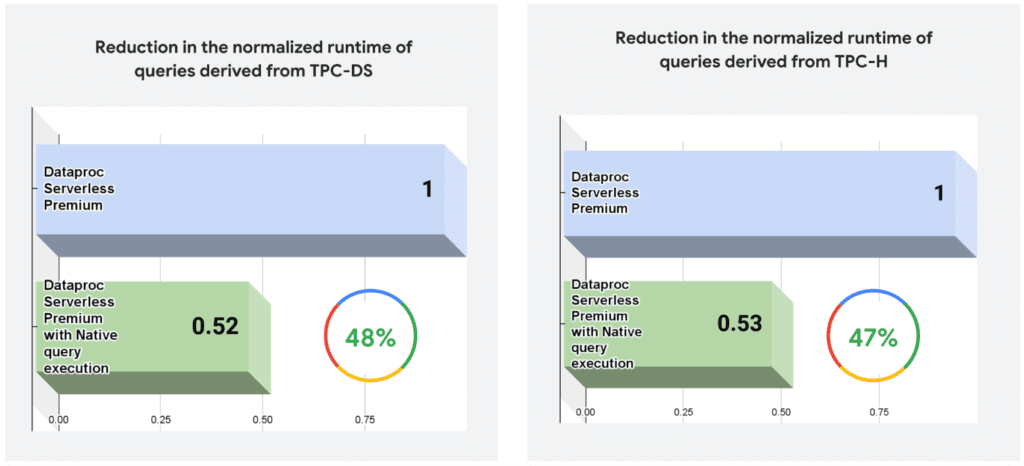

In experiments with queries derived from the TPC-DS and TPC-H benchmarks, this new feature in the Dataproc Serverless Premium tier improved query performance by around 47%.

The performance results are based on 1 TB of Parquet data in GCS and on queries derived from the TPC-DS and TPC-H standards. Because these runs do not meet all the requirements of the TPC-DS and TPC-H specifications, they are not comparable to published TPC-DS and TPC-H results.

Use the native query execution qualification tool to get started right away. It makes it easy to find batch jobs that qualify and to estimate the potential performance gains. Once you have identified the batch jobs that are suitable for native query execution, you can enable it to speed up those jobs and potentially reduce costs.
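Enabling the feature for a batch comes down to passing a few Spark properties at submission time. The sketch below assembles a `gcloud dataproc batches submit pyspark` command in Python without running it. The three property names are assumptions based on the Dataproc Serverless documentation and should be verified against the current docs; the bucket path, region, and helper names are hypothetical.

```python
import shlex


def native_execution_properties() -> dict:
    # Assumed property names; confirm them in the Dataproc Serverless docs.
    return {
        "spark.dataproc.runtimeEngine": "native",
        "spark.dataproc.driver.compute.tier": "premium",
        "spark.dataproc.executor.compute.tier": "premium",
    }


def build_submit_command(main_file, region, version, extra_properties=None):
    """Build (but do not run) a gcloud command that submits a PySpark batch."""
    props = {**native_execution_properties(), **(extra_properties or {})}
    prop_flag = ",".join(f"{key}={value}" for key, value in props.items())
    return [
        "gcloud", "dataproc", "batches", "submit", "pyspark", main_file,
        f"--region={region}",
        f"--version={version}",
        f"--properties={prop_flag}",
    ]


if __name__ == "__main__":
    # Hypothetical job file and region, for illustration only.
    cmd = build_submit_command("gs://my-bucket/job.py", "us-central1", "2.2")
    print(shlex.join(cmd))
```

Returning the command as a list keeps it safe to hand to `subprocess.run` later, and printing it first makes it easy to review before anything is submitted.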

Seamless monitoring with Spark UI

Are you tired of struggling to set up and manage persistent history server (PHS) clusters just to debug your Spark batches? Wouldn’t it be easier to view the Spark UI in real time without paying for a history server?

Until recently, monitoring and debugging Spark jobs in Dataproc Serverless required setting up and maintaining a separate Spark persistent history server. Crucially, the history server had to be configured for every batch run; otherwise, the batch job could not be analyzed in the open-source UI. In addition, the open-source UI was slow when switching between applications.

That feedback has been heard. Dataproc Serverless now offers a fully managed Spark UI, which simplifies monitoring and troubleshooting.

In both the Standard and Premium tiers of Dataproc Serverless, the Spark UI is built in and available immediately for every batch job and session at no extra cost. Just submit your job, and you can start analyzing its performance in the Spark UI in real time.

Here is why you will love the Serverless Spark UI:

| | Traditional approach | The new Dataproc Serverless Spark UI |
|---|---|---|
| Effort | Create and manage a Spark history server cluster. Configure each batch job to use the cluster. | No cluster setup or management required. Spark UI is available by default for all your batches without any extra configuration. The UI can be accessed directly from the Batch / Session details page in the Google Cloud console. |
| Latency | UI performance can degrade with increased load. Requires active resource management. | Enjoy a responsive UI that automatically scales to handle even the most demanding workloads. |
| Availability | The UI is only available as long as the history server cluster is running. | Access your Spark UI for 90 days after your batch job is submitted. |
| Data freshness | Wait for a stage to complete to see that its events are in the UI. | View regularly updated data without waiting for the stage to complete. |
| Functionality | Basic UI based on open-source Spark. | Enhanced UI with ongoing improvements based on user feedback. |
| Cost | Ongoing cost for the PHS cluster. | No additional charge. |

Accessing the Spark UI

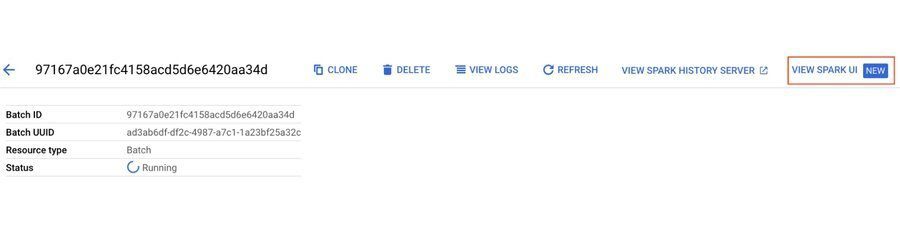

The “VIEW SPARK UI” link is located in the upper right corner.

The new Spark UI offers the same powerful functionality as the open-source Spark History Server, with detailed insights into your Spark job performance. Easily browse running and completed applications, explore jobs, stages, and tasks, and analyze SQL queries to understand how your application executes. Use the detailed execution information to quickly diagnose problems and identify bottlenecks.

For deeper analysis, the ‘Executors’ page provides direct links to the relevant logs in Cloud Logging, so you can immediately investigate issues related to specific executors.

If you have previously set up a Persistent Spark History Server, you can still access it by clicking the “VIEW SPARK HISTORY SERVER” link.

Streamlined investigation (Preview)

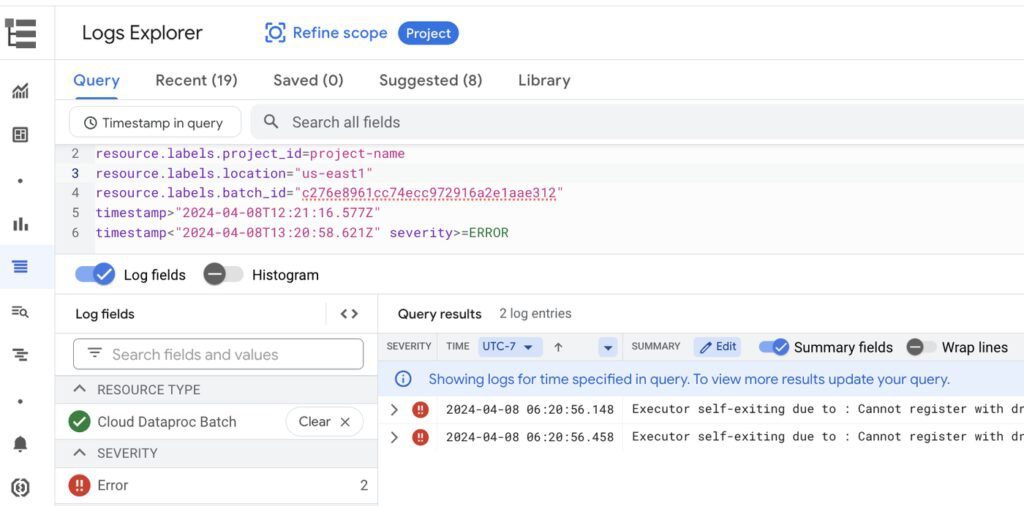

The new “Investigate” option on the Batch details page gives you instant diagnostic highlights collected in one place.

The “Metrics highlights” section automatically displays the key metrics, giving you a comprehensive view of the health of your batch job. If you need more metrics, you can create a custom dashboard.

Below the metrics highlights, a “Job Logs” widget shows the logs filtered by errors, so you can quickly identify and fix issues.
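A comparable error-only view can be approximated directly in Cloud Logging. The Python sketch below builds a Logging query filter string for one batch; it is not how the “Job Logs” widget is implemented. The resource type `cloud_dataproc_batch` and the `batch_id` label are assumptions to verify against the Cloud Logging documentation, and the function name is hypothetical.

```python
def batch_error_log_filter(batch_id: str, min_severity: str = "ERROR") -> str:
    """Build a Cloud Logging filter for one batch's logs at or above a severity.

    Assumption: batch logs use resource type "cloud_dataproc_batch" and
    carry the batch ID in the "batch_id" resource label.
    """
    clauses = [
        'resource.type="cloud_dataproc_batch"',
        f'resource.labels.batch_id="{batch_id}"',
        f"severity>={min_severity}",
    ]
    return " AND ".join(clauses)


if __name__ == "__main__":
    # Hypothetical batch ID, for illustration only.
    print(batch_error_log_filter("my-batch-123"))
```

The resulting string can be pasted into the Logs Explorer query box or passed as the filter argument to a logging client.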

Proactive autotuning and assisted troubleshooting with Gemini (Preview)

Finally, Gemini in BigQuery can help reduce the complexity of tuning hundreds of Spark properties when you submit your batch job configurations. If a job fails or runs slowly, Gemini can spare you from wading through gigabytes of logs to debug it.

Enhance performance: Gemini can automatically tune the Spark configurations of your Dataproc Serverless batch jobs for optimal reliability and performance.

Simplify troubleshooting: Quickly diagnose and resolve problems with slow or failed jobs by clicking “Ask Gemini” for AI-powered analysis and guidance.