Graph Neural Network (GNN) Training Accelerated on Intel CPUs with Hybrid Partitioning and Fused Sampling

Highlights

- A novel graph sampling technique called “fused sampling,” developed by Intel Labs and AIA, can speed up the training of Graph Neural Networks (GNNs) on CPUs by up to 2x. The Deep Graph Library (DGL), one of the most widely used libraries for GNN training, now includes the new sampling procedure.

- Using a novel graph partitioning technique called “hybrid partitioning,” Intel Labs has significantly accelerated the distributed training of Graph Neural Networks (GNNs) on large graphs. Epoch times on popular graph benchmarks dropped by as much as 30%.

- On 16 two-socket machines, each with two 4th Gen Intel Xeon Scalable Processors (Sapphire Rapids), the combination of fused sampling and hybrid partitioning set a new CPU record for training GNNs on the well-known ogbn-papers100M benchmark, with a total FP32 training time of just 1.6 minutes.

Graph Neural Networks (GNNs) have achieved state-of-the-art performance on a range of graph-related tasks, including link prediction in recommendation graphs, physical property prediction for molecular graphs, and high-level semantic feature prediction for nodes in citation graphs and social networks. In many of these fields, graphs can contain millions of nodes and billions of edges.

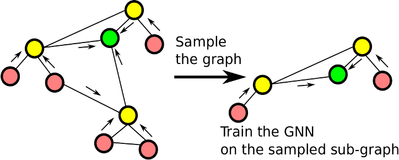

Training on the whole graph at once can quickly exhaust memory. Sampling-based training is one of the most widely used techniques for training GNNs on large graphs: in each training iteration, we randomly sample a small portion of the graph (small enough to fit in available memory) and train the GNN on that sample. However, as the figure above illustrates, the time spent on graph sampling in each iteration can easily exceed the time for the forward and backward passes of the GNN.
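To make the idea of sampling-based training concrete, here is a minimal pure-Python sketch of layer-wise neighbor sampling. It is an illustration only, not DGL's implementation: the helper names (`sample_neighbors`, `sample_minibatch`), the toy adjacency dictionary, and the fanout values are all hypothetical.

```python
import random

def sample_neighbors(adj, seeds, fanout, rng):
    """Pick at most `fanout` random neighbors for every seed node."""
    edges = []
    for dst in seeds:
        neighbors = adj.get(dst, [])
        k = min(fanout, len(neighbors))
        for src in rng.sample(neighbors, k):
            edges.append((src, dst))
    return edges

def sample_minibatch(adj, seeds, fanouts, rng):
    """Build one sampled edge list per GNN layer, starting at the seed nodes."""
    blocks = []
    frontier = list(seeds)
    for fanout in fanouts:
        edges = sample_neighbors(adj, frontier, fanout, rng)
        blocks.append(edges)
        # The next layer needs the current frontier plus every newly sampled source.
        frontier = sorted(set(frontier) | {src for src, _ in edges})
    return blocks, frontier

# Toy graph (hypothetical): node -> list of neighbors.
adj = {0: [1, 2, 3], 1: [0, 4], 2: [0, 4, 5], 3: [0], 4: [1, 2], 5: [2]}
rng = random.Random(0)
blocks, input_nodes = sample_minibatch(adj, seeds=[0], fanouts=[2, 2], rng=rng)
print(len(blocks), "layers sampled;", len(input_nodes), "input nodes needed")
```

Only the features of `input_nodes` have to be loaded to train on this mini-batch, which is why the sample can fit in memory even when the full graph cannot.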

To speed up sampling-based training, the graph is often partitioned across multiple machines, as seen in the figure. Each machine is responsible for producing its own graph samples and using them to train the GNN model. Because the graph topology is split across the machines, each machine must communicate with the others to build a graph sample. This communication cost grows as the graph samples get larger, and the sample size usually grows as the GNN model gains layers.
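A quick back-of-the-envelope calculation shows why deeper models produce larger samples. The sketch below computes an upper bound on the number of nodes in one sample, assuming a fixed per-layer fanout and ignoring duplicate neighbors. The function name, the batch size of 1,000, and the fanout of 10 are hypothetical values chosen for illustration.

```python
def max_sampled_nodes(batch_size, fanouts):
    """Upper bound on nodes in one sample (no duplicate neighbors assumed)."""
    total = batch_size
    frontier = batch_size
    for fanout in fanouts:
        frontier *= fanout   # each frontier node contributes up to `fanout` neighbors
        total += frontier
    return total

for num_layers in (1, 2, 3):
    bound = max_sampled_nodes(1000, [10] * num_layers)
    print(num_layers, "layer(s): up to", bound, "nodes")
```

Under these assumptions the bound grows from 11,000 nodes for one layer to 111,000 for two and 1,111,000 for three. Real samples are smaller because neighbors overlap, but the multiplicative trend is the same.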

Below, we outline two complementary techniques that address the high CPU sampling overhead in popular machine learning libraries and the significant communication cost of distributed sampling-based training.

1. Fused Sampling

Graph sampling must run in every training iteration, so it is essential to make it as fast as possible. Popular GNN libraries such as DGL provide a typical sampling pipeline made up of several stages, each producing intermediate tensors that must be written to memory and then read back.
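The contrast between a multi-stage pipeline and a fused one can be sketched as follows. This is not DGL's code or Intel's kernel. It assumes, based on the name, that fused sampling merges the stages into a single pass so the intermediates are never materialized. The stage breakdown (sample, collect unique nodes, relabel) and the function names are hypothetical, and seed nodes are assumed to be unique.

```python
import random

def staged_sample(adj, seeds, fanout, rng):
    # Stage 1: sample neighbors and materialize the full edge list.
    sampled = [(src, dst) for dst in seeds
               for src in rng.sample(adj[dst], min(fanout, len(adj[dst])))]
    # Stage 2: re-read the edge list to collect unique node IDs.
    nodes = list(seeds)
    seen = set(seeds)
    for src, _ in sampled:
        if src not in seen:
            seen.add(src)
            nodes.append(src)
    # Stage 3: re-read the edge list again to relabel it with local IDs.
    local = {node: i for i, node in enumerate(nodes)}
    edges = [(local[src], local[dst]) for src, dst in sampled]
    return nodes, edges

def fused_sample(adj, seeds, fanout, rng):
    # Single pass: sample, deduplicate, and relabel each edge as it is drawn.
    nodes = list(seeds)
    local = {node: i for i, node in enumerate(nodes)}
    edges = []
    for dst in seeds:
        neighbors = adj[dst]
        for src in rng.sample(neighbors, min(fanout, len(neighbors))):
            if src not in local:
                local[src] = len(nodes)
                nodes.append(src)
            edges.append((local[src], local[dst]))
    return nodes, edges

# Toy graph (hypothetical): node -> list of neighbors.
adj = {0: [1, 2, 3], 1: [0, 4], 2: [0, 4, 5], 3: [0], 4: [1, 2], 5: [2]}
staged = staged_sample(adj, [0, 2], 2, random.Random(7))
fused = fused_sample(adj, [0, 2], 2, random.Random(7))
print("same result:", staged == fused)
```

Both functions return the same relabeled sample for the same random seed. The fused version simply avoids writing the global edge list and then reading it back twice.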

2. Hybrid Partitioning

When a graph is too large to fit in the memory of a single training machine, it is usually partitioned across multiple machines. Each machine requests and receives the graph data it needs to train the GNN model through inter-machine communication. We have observed that the features attached to the graph nodes usually account for most of the graph representation's size, often more than 90% of the memory required to represent the graph.
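The following rough calculation illustrates how node features can dominate the memory footprint. All numbers are hypothetical and do not describe any benchmark in this article: a graph with 1 million nodes, 10 million edges, 256 FP32 features per node, and a CSR-style topology stored with 64-bit indices.

```python
def graph_memory_bytes(num_nodes, num_edges, feat_dim,
                       feat_bytes=4, index_bytes=8):
    """Estimate memory for node features and for a CSR-style topology."""
    features = num_nodes * feat_dim * feat_bytes
    # CSR layout: one offset per node (plus one) and one column index per edge.
    topology = (num_nodes + 1) * index_bytes + num_edges * index_bytes
    return features, topology

features, topology = graph_memory_bytes(1_000_000, 10_000_000, 256)
share = features / (features + topology)
print(f"features: {features / 1e6:.0f} MB, topology: {topology / 1e6:.0f} MB, "
      f"feature share: {share:.1%}")
```

With these assumed sizes the features take about 1,024 MB against about 88 MB for the topology, a share of roughly 92%. The exact ratio depends on the feature width, index width, and average degree of the graph.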

Motivated by this observation, we developed a novel partitioning technique called hybrid partitioning, which partitions the graph only by its node features while replicating the relatively small graph topology information (the graph’s adjacency matrix) across all training machines. Because the machines only need to exchange node features, this significantly reduces the number of communication rounds in distributed sampling-based GNN training.
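A minimal single-process sketch of this idea is shown below. It simulates the feature shards as plain dictionaries and assumes a simple modulo assignment of nodes to machines. Both the assignment rule and the helper names (`partition_features`, `gather_features`) are hypothetical and are not taken from DGL or from Intel's implementation. Because every machine is assumed to hold the full adjacency structure, sampling itself needs no communication, and only the feature lookup crosses machine boundaries.

```python
def partition_features(features, num_machines):
    """Shard node features by node ID (hypothetical modulo assignment)."""
    shards = [dict() for _ in range(num_machines)]
    for node, feat in features.items():
        shards[node % num_machines][node] = feat
    return shards

def gather_features(shards, needed_nodes, my_rank):
    """Fetch features for sampled nodes, batching requests per owner machine."""
    num_machines = len(shards)
    requests = {}
    for node in needed_nodes:
        requests.setdefault(node % num_machines, []).append(node)
    gathered = {}
    remote_messages = 0
    for owner, nodes in requests.items():
        if owner != my_rank:
            remote_messages += 1  # one batched request per remote owner
        for node in nodes:
            gathered[node] = shards[owner][node]
    return gathered, remote_messages

# Toy setup (hypothetical): 6 nodes with 2-dimensional features on 2 machines.
features = {node: [float(node)] * 2 for node in range(6)}
shards = partition_features(features, num_machines=2)

# Nodes picked by a local sampling step on machine 0, using its topology replica.
sampled_nodes = [0, 1, 2, 4]
gathered, remote_messages = gather_features(shards, sampled_nodes, my_rank=0)
print(len(gathered), "feature rows gathered with", remote_messages, "remote request(s)")
```

In this toy run, machine 0 owns nodes 0, 2, and 4, so the only remote traffic is a single batched request to machine 1 for node 1.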

Combining fused sampling with hybrid partitioning significantly reduced epoch times for distributed sampling-based GNN training. Hybrid partitioning improves performance even on its own; combined with fused sampling, it improves epoch times by more than 2x. Using both techniques, we obtain a record-breaking total FP32 training time of under 1.6 minutes on 16 two-socket machines.
