This practical guide to synthetic data generation with Gretel and BigQuery DataFrames looks at how combining the two simplifies synthetic data production while preserving data privacy. In short, BigQuery DataFrames is a Python client for BigQuery that offers pandas-compatible APIs, with the computation pushed down to BigQuery.

Gretel provides an extensive toolkit for creating synthetic data using state-of-the-art machine learning methods, including large language models (LLMs). The integration enables a seamless workflow: users can move data from BigQuery to Gretel and write the generated results back to BigQuery.

This tutorial covers the technical details of generating synthetic data to drive AI/ML innovation, along with tips for maintaining high data quality, protecting privacy, and complying with privacy regulations. In Part 1, we de-identify the data from a BigQuery patient records table, and in Part 2, we generate synthetic data to be saved back to BigQuery.

Setting the stage: Installation and configuration

You can get started using BigQuery Studio as the notebook runtime, which comes with BigFrames already installed. We assume you have a Google Cloud project set up and are familiar with pandas.

- Step 1: Install BigQuery DataFrames and the Gretel Python client.

- Step 2: Initialize the Gretel SDK and BigFrames: You will need a Gretel API key to use Gretel's services. You can get one from the Gretel console.
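One way to keep the API key out of the notebook itself is to read it from an environment variable. The sketch below is a minimal illustration of that idea, not part of the Gretel SDK: the helper name `load_gretel_api_key` is hypothetical, and the variable name `GRETEL_API_KEY` is an assumption you should replace with whatever your environment or secret manager provides.

```python
import os


def load_gretel_api_key(env=None, var_name="GRETEL_API_KEY"):
    """Return the API key stored in an environment variable.

    Hypothetical helper: `var_name` is an assumed variable name, not
    something this guide or the Gretel SDK prescribes.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message instead of a later auth error.
        raise RuntimeError(f"Set {var_name} before starting the notebook session.")
    return key
```

The returned value could then be passed to the Gretel client when it is initialized, so the key never appears in the notebook source.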

Part 1: De-identifying and processing data with Gretel Transform v2

De-identifying personally identifiable information (PII) is an essential first step in data anonymization before creating synthetic data. For this and other data processing tasks, Gretel Transform v2 (Tv2) offers a powerful and scalable framework.

Tv2 handles large datasets efficiently by combining named entity recognition (NER) capabilities with advanced transformation logic. Beyond PII de-identification, Tv2 can also be used for preprocessing, formatting, and data cleaning, which makes it a flexible tool in the data preparation pipeline. See the Gretel Transform v2 documentation to learn more.

Step 1: Convert your BigQuery table into a BigFrames DataFrame.

The table below shows a subset of the DataFrame that we will transform. We hash the patient_id column and generate new first and last names based on the value of the sex column.

| patient_id | first_name | last_name | sex | race |
| --- | --- | --- | --- | --- |
| pmc-6545753-1 | Antonio | Fernandez | Male | Hispanic |
| pmc-6192350-1 | Ana | Silva | Female | Other |
| pmc-6332555-4 | Lina | Chan | Female | Asian |
| pmc-6089485-1 | Omar | Hassan | Male | Black or African American |
| pmc-6100673-1 | Aisha | Khan | Female | Asian |

Step 2: Transform the data with Gretel.
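In the actual workflow, Gretel Transform v2 performs this step on the BigFrames DataFrame. To make the intended effect concrete, here is a small local sketch in plain Python of the two rules described above: hash the patient_id and pick replacement names that match the sex column. It is an illustration under stated assumptions, not Gretel's implementation. The function `deidentify`, the name pools, the choice of SHA-256, and the 10-character truncation are all assumptions made for this example.

```python
import hashlib
import random

# Hypothetical name pools for illustration only; a real fake-data
# generator draws from far larger lists.
FEMALE_FIRST_NAMES = ["Christine", "Sarah", "Stacy"]
MALE_FIRST_NAMES = ["John", "Russell"]
LAST_NAMES = ["Hampton", "Carlson", "Moore", "Zhang", "Wilkinson"]


def deidentify(record, rng):
    """Return a copy of `record` with a hashed ID and replacement names."""
    out = dict(record)
    # One-way hash of the identifier, shortened to 10 hex characters
    # (hash function and length are assumptions for this sketch).
    digest = hashlib.sha256(record["patient_id"].encode("utf-8")).hexdigest()
    out["patient_id"] = digest[:10]
    # Pick a first name consistent with the value of the sex column.
    pool = FEMALE_FIRST_NAMES if record["sex"] == "Female" else MALE_FIRST_NAMES
    out["first_name"] = rng.choice(pool)
    out["last_name"] = rng.choice(LAST_NAMES)
    return out


rng = random.Random(0)  # fixed seed so the sketch is repeatable
patient = {
    "patient_id": "pmc-6545753-1",
    "first_name": "Antonio",
    "last_name": "Fernandez",
    "sex": "Male",
    "race": "Hispanic",
}
masked = deidentify(patient, rng)
```

Because the hash is deterministic, the same patient_id always maps to the same masked ID, which keeps multiple rows for one patient linked after de-identification.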

Step 3: Explore the de-identified data.

```python
# Take a look at the newly transformed BigFrames DataFrame
transformed_df = transform_results.transformed_df
transformed_df.peek()
```

A comparison of the original and de-identified data may be seen below.

Original:

| patient_id | first_name | last_name | sex | race |
| --- | --- | --- | --- | --- |
| pmc-6545753-1 | Antonio | Fernandez | Male | Hispanic |
| pmc-6192350-1 | Ana | Silva | Female | Other |
| pmc-6332555-4 | Lina | Chan | Female | Asian |
| pmc-6089485-1 | Omar | Hassan | Male | Black or African American |
| pmc-6100673-1 | Aisha | Khan | Female | Asian |

De-identified:

| patient_id | first_name | last_name | sex | race |
| --- | --- | --- | --- | --- |
| 389b63f369 | John | Hampton | Male | Hispanic |
| eff31024e6 | Christine | Carlson | Female | Other |
| 8af37475b6 | Sarah | Moore | Female | Asian |
| 7bd5f08fb8 | Russell | Zhang | Male | Black or African American |
| 1628622e23 | Stacy | Wilkinson | Female | Asian |
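A comparison like this can also be checked programmatically. The sketch below uses the rows from the two tables and a hypothetical helper, `check_deidentification`, to confirm that identifiers and names changed while sex and race were left intact. It assumes both lists hold the same patients in the same order, which is true of the tables above but may not hold for a real export.

```python
# Rows copied from the comparison tables:
# (patient_id, first_name, last_name, sex, race)
ORIGINAL = [
    ("pmc-6545753-1", "Antonio", "Fernandez", "Male", "Hispanic"),
    ("pmc-6192350-1", "Ana", "Silva", "Female", "Other"),
    ("pmc-6332555-4", "Lina", "Chan", "Female", "Asian"),
    ("pmc-6089485-1", "Omar", "Hassan", "Male", "Black or African American"),
    ("pmc-6100673-1", "Aisha", "Khan", "Female", "Asian"),
]
DEIDENTIFIED = [
    ("389b63f369", "John", "Hampton", "Male", "Hispanic"),
    ("eff31024e6", "Christine", "Carlson", "Female", "Other"),
    ("8af37475b6", "Sarah", "Moore", "Female", "Asian"),
    ("7bd5f08fb8", "Russell", "Zhang", "Male", "Black or African American"),
    ("1628622e23", "Stacy", "Wilkinson", "Female", "Asian"),
]


def check_deidentification(original, deidentified):
    """Return a list of problems; an empty list means every check passed."""
    if len(original) != len(deidentified):
        return ["row counts differ"]
    problems = []
    for i, (before, after) in enumerate(zip(original, deidentified)):
        if before[0] == after[0]:
            problems.append(f"row {i}: patient_id unchanged")
        if before[1:3] == after[1:3]:
            problems.append(f"row {i}: name unchanged")
        if before[3:] != after[3:]:
            problems.append(f"row {i}: sex or race altered")
    return problems


problems = check_deidentification(ORIGINAL, DEIDENTIFIED)
```

For the rows shown, `problems` comes back empty: every patient_id and name differs from the original, and the sex and race columns are untouched.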

Part 2: Generating synthetic data with Navigator Fine Tuning (LLM-based)

Gretel Navigator Fine Tuning (NavFT) fine-tunes pre-trained models on your datasets to generate high-quality, domain-specific synthetic data. Key features include:

- Handles multiple data modalities, including numeric, categorical, free text, time series, and JSON.

- Preserves complex relationships across data types and rows.

- Can introduce meaningful new patterns, which may improve performance on ML/AI tasks.

- Balances data utility with privacy protection.

By utilizing the advantages of domain-specific pre-trained models, NavFT expands on Gretel Navigator’s capabilities and makes it possible to create synthetic data that captures the subtleties of your particular data, such as the distributions and correlations for numeric, categorical, and other column types.

In this example, we fine-tune a Gretel model on the de-identified data from Part 1.

Step 1: Fine-tune a model:

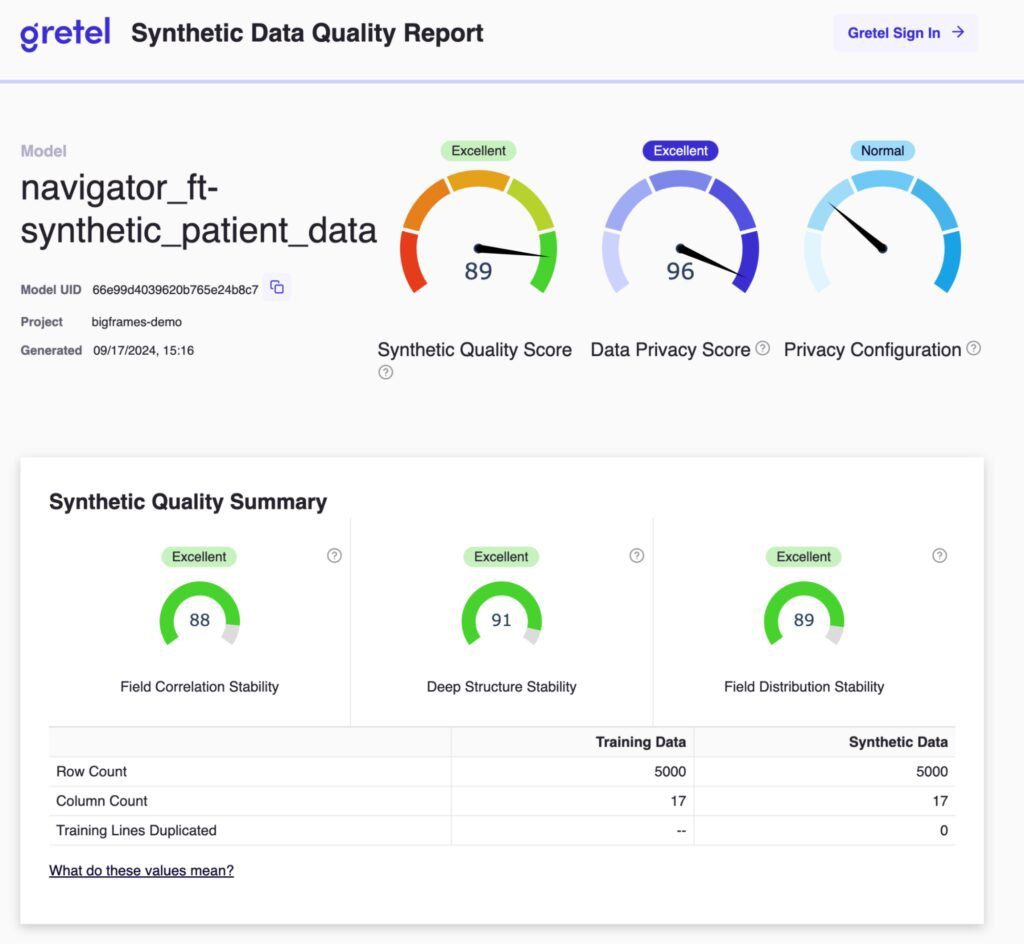

# Display the full report within this notebook

train_results.report.display_in_notebook()

Step 2: Retrieve the Quality Report for Gretel Synthetic Data:

Step 3: Generate synthetic data from the fine-tuned model, assess the privacy and quality of the data, and then write the results back to a BigQuery table.
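Gretel's own report covers quality and privacy in depth. As a complementary sanity check before writing results back, one simple thing to look at is whether any synthetic row is an exact copy of a training row. The sketch below is one hedged way to do that on rows pulled into plain Python dictionaries. The function `exact_copy_rate` is hypothetical and is not part of Gretel or BigFrames.

```python
def exact_copy_rate(training_rows, synthetic_rows, columns):
    """Fraction of synthetic rows that match some training row on `columns`.

    Rows are dictionaries; `columns` lists the keys to compare.
    """
    if not synthetic_rows:
        return 0.0
    # Index the training data once so each lookup is a set membership test.
    seen = {tuple(row[c] for c in columns) for row in training_rows}
    copies = sum(
        1 for row in synthetic_rows if tuple(row[c] for c in columns) in seen
    )
    return copies / len(synthetic_rows)
```

A rate near zero is what you would hope to see. A high rate suggests the model is reproducing training records and is worth investigating before the table is shared. An exact-match check is a coarse signal only and does not replace the privacy metrics in the quality report.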

A few things to note about the synthetic data:

- The different modalities (free text, JSON structures) are preserved and fully synthetic, while remaining semantically correct.

- Because of the group-by/order-by hyperparameters used during fine-tuning, the records are grouped by patient during generation.
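To illustrate what grouping and ordering mean for the records, the following sketch groups rows by one column and sorts each group by another. It is a plain-Python illustration of the concept, not how Gretel applies these hyperparameters internally. The helper `group_and_order`, the `event_date` and `note` columns, and the sample records are all made up for this example.

```python
from collections import defaultdict


def group_and_order(records, group_by, order_by):
    """Group records by one key and sort each group by another."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_by]].append(record)
    return {
        key: sorted(rows, key=lambda r: r[order_by])
        for key, rows in groups.items()
    }


# Made-up records; ISO-formatted date strings sort chronologically.
events = [
    {"patient_id": "A", "event_date": "2023-03-02", "note": "follow-up"},
    {"patient_id": "B", "event_date": "2023-01-15", "note": "admission"},
    {"patient_id": "A", "event_date": "2023-01-10", "note": "admission"},
]
grouped = group_and_order(events, group_by="patient_id", order_by="event_date")
```

Here patient "A" ends up with two records in date order and patient "B" with one. Keeping each patient's records together and in sequence is what lets the generated data preserve per-patient histories.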

How to use BigQuery with Gretel

This technical guide offers a starting point for generating and using synthetic data with Gretel and BigQuery DataFrames. By exploring the Gretel documentation and working through these examples, you can use synthetic data to improve your data science, analytics, and AI development workflows while maintaining data privacy and compliance.