Introducing AMD OLMo: AMD's First Fully Open 1B Language Models

Introduction

Recent discussions have focused on the rapid development of artificial intelligence technology, especially large language models (LLMs). From ChatGPT to GPT-4 and Llama, these models have shown impressive capabilities in natural language processing, generation, understanding, and reasoning. In keeping with AMD's tradition of sharing code and models to foster community progress, we are excited to announce AMD OLMo, our first series of fully open 1 billion parameter language models.

Why Build Your Own Language Models

By pre-training and fine-tuning your own LLM, you can incorporate domain-specific knowledge and better align the model with particular use cases. This approach lets businesses tailor the model's architecture and training process to their specific needs, striking a balance between scalability and specialization that off-the-shelf models may not offer. As demand for customized AI solutions continues to grow, the ability to pre-train LLMs opens up new opportunities for innovation and product differentiation across industries.

AMD OLMo is a series of 1 billion parameter language models (LMs) trained in-house from scratch on a cluster of AMD Instinct MI250 GPUs using trillions of tokens. In keeping with the goal of promoting accessible AI research, AMD has released the checkpoints for its first series of AMD OLMo models and open-sourced all training details.

This initiative enables a broad community of researchers, developers, and users to study, use, and train state-of-the-art large language models. By demonstrating the capabilities of AMD Instinct GPUs on demanding AI workloads, AMD aims to show its ability to run large-scale, multi-node LM training jobs with trillions of tokens while achieving better reasoning and instruction-following performance than other fully open LMs of similar size.

Furthermore, the community can use AMD Ryzen AI Software to run these models on AMD Ryzen AI PCs with Neural Processing Units (NPUs), enabling easier local access with privacy protection, efficient AI inference, and lower power consumption.

Unveiling AMD OLMo Language Models

AMD OLMo is a set of 1 billion parameter language models pre-trained on 1.3 trillion tokens using 16 nodes, each with four (4) AMD Instinct MI250 GPUs. We are releasing three (3) checkpoints corresponding to the different training stages, along with comprehensive reproduction instructions:

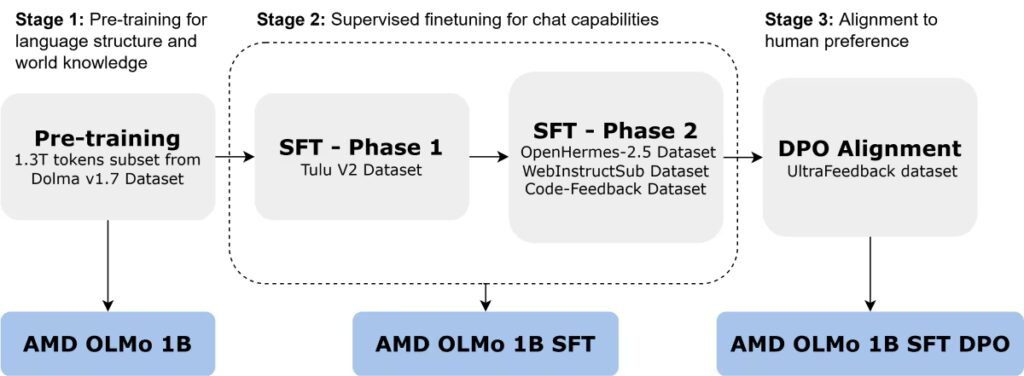

- AMD OLMo 1B: Pre-trained on 1.3 trillion tokens from a subset of Dolma v1.7.

- AMD OLMo 1B SFT: AMD OLMo 1B after supervised fine-tuning (SFT), first on the Tulu V2 dataset and then, in a second phase, on the OpenHermes-2.5, WebInstructSub, and Code-Feedback datasets.

- AMD OLMo 1B SFT DPO: The SFT model aligned with human preferences using Direct Preference Optimization (DPO) on the UltraFeedback dataset.

With a few notable exceptions, AMD OLMo 1B follows the model architecture and training configuration of the fully open-source 1 billion parameter version of OLMo. We pre-train on less than half the tokens used for OLMo-1B (effectively halving the pre-training compute budget while maintaining comparable performance). To improve general reasoning, instruction-following, and chat capabilities, we also perform post-training consisting of two-phase SFT and DPO alignment (OLMo-1B does not carry out any post-training steps).
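As a rough illustration of the compute figures above, the sketch below applies the widely used C ≈ 6·N·D approximation for training FLOPs (N parameters, D tokens). The heuristic itself, the nominal N = 1e9, the reading of "16 nodes with four GPUs" as four GPUs per node, and the even split of tokens across GPUs are all assumptions for illustration, not figures reported by AMD.

```python
def pretrain_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training FLOPs with the C ~= 6 * N * D heuristic
    (about 2 FLOPs per parameter per token forward, 4 backward)."""
    return 6.0 * n_params * n_tokens


def compute_ratio(n_params: float, tokens_a: float, tokens_b: float) -> float:
    """Compute of run A relative to run B for the same model size.
    Under 6 * N * D this reduces to tokens_a / tokens_b."""
    return pretrain_flops(n_params, tokens_a) / pretrain_flops(n_params, tokens_b)


# Figures from the text: 16 nodes, 4 MI250 GPUs per node (assumed), 1.3T tokens.
NODES = 16
GPUS_PER_NODE = 4
TOKENS = 1.3e12
# Nominal "1B" size; the exact parameter count is an assumption here.
N_PARAMS = 1.0e9

total_gpus = NODES * GPUS_PER_NODE
# Assumes tokens are spread evenly across GPUs (pure data parallelism).
tokens_per_gpu = TOKENS / total_gpus
flops = pretrain_flops(N_PARAMS, TOKENS)

print(f"GPUs: {total_gpus}")
print(f"Tokens per GPU: {tokens_per_gpu:.2e}")
print(f"Approx. pre-training FLOPs: {flops:.2e}")
```

Under these assumptions the run uses 64 GPUs, about 2.0e10 tokens per GPU, and roughly 7.8e21 FLOPs. Because the estimate is linear in D at fixed N, training a same-sized model on less than half the tokens implies less than half the pre-training compute.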

For the two-phase SFT, we build a data mix of diverse, high-quality, publicly available instruction datasets. Overall, our training recipe produces a series of models that outperform other comparable fully open-source models trained on publicly available data across a range of benchmarks.

AMD OLMo

AMD OLMo models are decoder-only transformer language models trained with next-token prediction. Here is the model card, which lists the key model architecture and training hyperparameter details.
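To make the training objective concrete, here is a minimal, dependency-free sketch of the next-token prediction loss that decoder-only LMs are typically trained with: the logits produced at each position are scored against the following token. It assumes the model's per-position logits are already computed (the causal attention mask inside the transformer is what makes position t depend only on tokens up to t); the function names are hypothetical.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over a vocabulary-sized vector."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_token_loss(logits: Sequence[Sequence[float]], token_ids: Sequence[int]) -> float:
    """Mean cross-entropy of a causal (decoder-only) LM.

    logits[t] is the model's prediction after reading token_ids[0..t];
    it is scored against the next token, token_ids[t + 1]. The last
    position has no next token, so it is dropped.
    """
    if len(logits) != len(token_ids):
        raise ValueError("need one logit vector per input token")
    losses = []
    for t in range(len(token_ids) - 1):
        target = token_ids[t + 1]  # targets are the inputs shifted left by one
        losses.append(-log_softmax(logits[t])[target])
    return sum(losses) / len(losses)


# Toy example: vocabulary of 3 tokens, sequence [0, 2, 1].
# Uniform logits give loss log(3) regardless of the targets.
uniform = [[0.0, 0.0, 0.0]] * 3
print(next_token_loss(uniform, [0, 2, 1]))  # ~1.0986 (= log 3)
```

Training drives this loss down by making the model assign high probability to each actual next token in the corpus.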

Data and Training Recipe

As shown in Figure 1, we trained the AMD OLMo series of models in three stages.

Stage 1: Pre-training

The pre-training stage trains the model on a large corpus of general-purpose text so that it learns language structure and acquires broad world knowledge through next-token prediction. We selected 1.3 trillion tokens from the openly available Dolma v1.7 dataset.

Stage 2: Supervised Fine-tuning (SFT)

Next, we fine-tuned the pre-trained model on instruction datasets to give it instruction-following capabilities. This stage consists of two phases:

Phase 1: We first fine-tune the model on TuluV2, a publicly available, high-quality instruction dataset of 0.66 billion tokens.

Phase 2: We then fine-tune the model on a considerably larger instruction dataset, OpenHermes 2.5, to further enhance its instruction-following capabilities. This phase also uses the Code-Feedback and WebInstructSub datasets to improve performance on coding, science, and mathematical problem solving. Together, these datasets contain roughly 7 billion tokens.

Across the two phases, we ran multiple fine-tuning experiments with different dataset orderings and found the sequence above to be the most beneficial. Phase 1 uses a relatively small but high-quality dataset to lay a solid foundation, and Phase 2 uses a larger, more diverse dataset mix to further enhance the model's capabilities.
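The dataset ordering described above amounts to sequential fine-tuning, where the second SFT phase starts from the weights produced by the first. The sketch below captures that schedule as plain data plus a loop. `SFTPhase`, `run_schedule`, and the `train_fn` callback are hypothetical names, and the actual training step (optimizer, loss masking, hyperparameters) is deliberately left out because the text does not specify it.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, TypeVar

M = TypeVar("M")


@dataclass(frozen=True)
class SFTPhase:
    name: str
    datasets: Tuple[str, ...]
    approx_tokens: float  # approximate token count stated in the text


# Dataset order and token counts follow the text; the data structure is illustrative.
SFT_SCHEDULE: List[SFTPhase] = [
    # First phase: small, high-quality foundation.
    SFTPhase("phase1", ("TuluV2",), 0.66e9),
    # Second phase: larger, more diverse mix (about 7B tokens in total).
    SFTPhase("phase2", ("OpenHermes-2.5", "Code-Feedback", "WebInstructSub"), 7.0e9),
]


def run_schedule(schedule: List[SFTPhase], train_fn: Callable[[M, SFTPhase], M], model: M) -> M:
    """Apply phases in order; each phase starts from the previous phase's output."""
    for phase in schedule:
        model = train_fn(model, phase)
    return model


# Usage with a stand-in train_fn that just records which phases ran.
trace = run_schedule(SFT_SCHEDULE, lambda m, p: m + [p.name], [])
print(trace)  # ['phase1', 'phase2']
print(SFT_SCHEDULE[1].approx_tokens / SFT_SCHEDULE[0].approx_tokens)
```

Keeping the schedule as data makes it easy to try other orderings, which is the kind of experiment the text describes.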

Stage 3: Alignment

Finally, we further fine-tune our SFT model with Direct Preference Optimization (DPO) on UltraFeedback, a large-scale, fine-grained, and diverse preference dataset. This improves model alignment and produces outputs more consistent with human preferences and values.
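For reference, DPO trains the policy directly on preference pairs (a preferred and a rejected response to the same prompt) without a separate reward model. The sketch below computes the standard per-pair DPO loss, assuming the frozen reference model is the SFT checkpoint and using a hypothetical β = 0.1; neither the β value nor the exact training setup is stated in the text.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair:

        -log sigmoid(beta * [(logp_pi(y_w) - logp_ref(y_w)) - (logp_pi(y_l) - logp_ref(y_l))])

    Each *_logp is the summed log-probability of a full response given the
    prompt, under the trainable policy or the frozen reference model.
    beta=0.1 is a hypothetical default, not a documented AMD OLMo setting.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


# At initialization the policy equals the reference, so the loss is log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Raising the preferred response's likelihood relative to the rejected one lowers the loss.
print(dpo_loss(-9.0, -13.0, -10.0, -12.0))
```

The loss falls below log 2 only when the policy shifts probability toward the preferred response more than the reference does, which is how DPO nudges the SFT model toward human preferences.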

Results

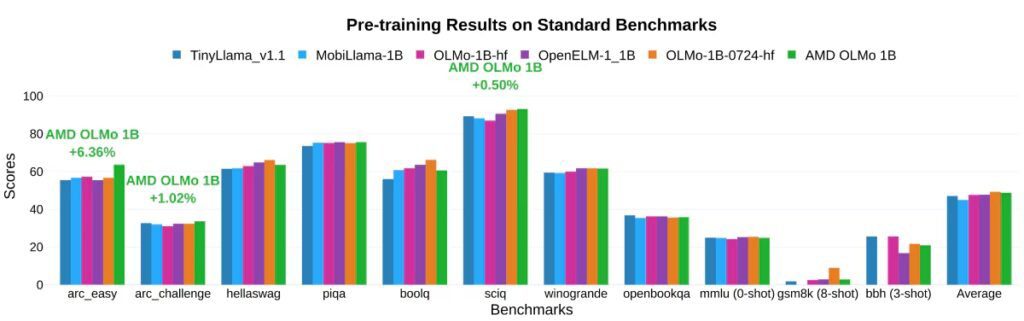

We compare AMD OLMo models with other fully open-source models of similar scale that have publicly released their training code, model weights, and data. The pre-trained baselines used for comparison are TinyLLaMA-v1.1 (1.1B), MobiLLaMA-1B (1.2B), OLMo-1B-hf (1.2B), OLMo-1B-0724-hf (1.2B), and OpenELM-1_1B (1.1B).

To measure general reasoning ability, we compare the pre-trained models on a variety of established benchmarks, using the Language Model Evaluation Harness to evaluate common sense reasoning, multi-task understanding, and responsible AI benchmarks. Of the 11 benchmarks, GSM8k is evaluated in an 8-shot setting, BBH in a 3-shot setting, and the rest in a zero-shot setting.
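The few-shot settings above can be captured as a small lookup that groups tasks by shot count and produces one Language Model Evaluation Harness (`lm_eval`) command per group, since `--num_fewshot` applies to every task in a single run. The harness task names (`arc_easy`, `gsm8k`, `bbh`, ...), the helper names, and the Hugging Face model ID are assumptions for illustration; only the rule "GSM8k 8-shot, BBH 3-shot, everything else zero-shot" comes from the text.

```python
from collections import defaultdict
from typing import Dict, List

# Shot counts stated in the text; every other benchmark is evaluated zero-shot.
FEWSHOT_OVERRIDES: Dict[str, int] = {"gsm8k": 8, "bbh": 3}


def num_fewshot(task: str) -> int:
    return FEWSHOT_OVERRIDES.get(task, 0)


def lm_eval_commands(model_id: str, tasks: List[str]) -> List[List[str]]:
    """Group tasks by shot count and build one lm_eval CLI invocation per group."""
    by_shots: Dict[int, List[str]] = defaultdict(list)
    for task in tasks:
        by_shots[num_fewshot(task)].append(task)
    return [
        ["lm_eval", "--model", "hf",
         "--model_args", f"pretrained={model_id}",
         "--tasks", ",".join(group),
         "--num_fewshot", str(shots)]
        for shots, group in sorted(by_shots.items())
    ]


# Assumed model ID and a subset of task names, for illustration only.
for cmd in lm_eval_commands("amd/AMD-OLMo-1B", ["arc_easy", "arc_challenge", "sciq", "gsm8k", "bbh"]):
    print(" ".join(cmd))
```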

With AMD OLMo 1B:

- Its average score across general reasoning tasks (48.77%) is better than that of all other baseline models and comparable to the latest OLMo-1B-0724-hf model (49.3%), while using less than half of that model's pre-training compute budget.

- Accuracy improvements on ARC-Easy (+6.36%), ARC-Challenge (+1.02%), and SciQ (+0.50%) benchmarks compared to the next best models.

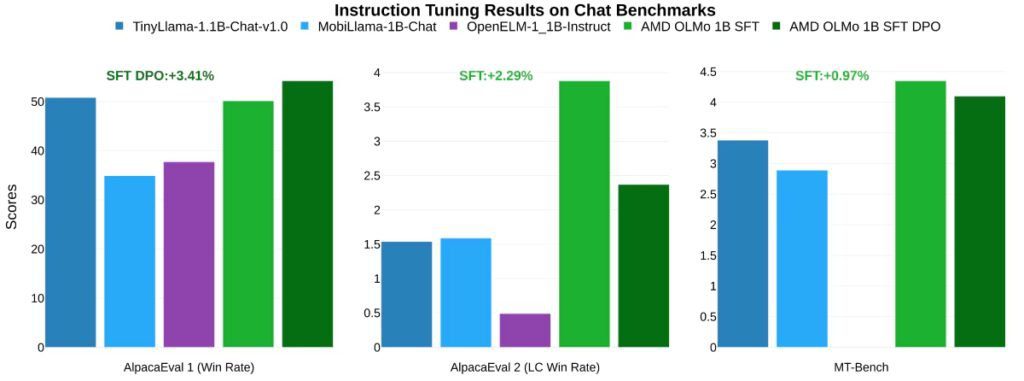

To evaluate chat capabilities, we used TinyLlama-1.1B-Chat-v1.0, MobiLlama-1B-Chat, and OpenELM-1_1B-Instruct, the instruction-tuned chat counterparts of the pre-trained baselines. In addition to the Language Model Evaluation Harness for common sense reasoning, multi-task understanding, and responsible AI benchmarks, we used AlpacaEval to assess instruction following and MT-Bench to assess multi-turn conversation.

Comparing our fine-tuned and aligned models with the instruction-tuned baselines:

- Two-phase SFT from the pre-trained checkpoint improved accuracy on almost all benchmarks, including MMLU by +5.09% and GSM8k by +15.32%.

- AMD OLMo 1B SFT reaches 18.2% on GSM8k, significantly better (+15.39%) than the next best baseline model (TinyLlama-1.1B-Chat-v1.0 at 2.81%).

- The SFT model's average accuracy across standard benchmarks is at least +2.65% higher than that of the baseline chat models, and alignment (DPO) improves it by a further +0.46%.

- The SFT model also outperforms the next best model on the chat benchmarks AlpacaEval 2 (+2.29%) and MT-Bench (+0.97%).

- Alignment training enables the AMD OLMo 1B SFT DPO model to perform on par with other chat baselines on responsible AI evaluation benchmarks.
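The gains reported above read most naturally as absolute percentage-point differences: for GSM8k, 18.2 - 2.81 = 15.39, which matches the stated +15.39%. The short sketch below contrasts that reading with a relative improvement computed from the same two reported numbers; the helper names are hypothetical.

```python
def pp_gap(a: float, b: float) -> float:
    """Absolute difference between two accuracies (in %), in percentage points."""
    return round(a - b, 2)


def relative_gain(a: float, b: float) -> float:
    """How many times larger accuracy a is than accuracy b."""
    return round(a / b, 2)


# GSM8k accuracies (%) reported above.
sft_gsm8k = 18.2          # AMD OLMo 1B SFT
tinyllama_gsm8k = 2.81    # TinyLlama-1.1B-Chat-v1.0

print(pp_gap(sft_gsm8k, tinyllama_gsm8k))         # 15.39, matching the reported +15.39%
print(relative_gain(sft_gsm8k, tinyllama_gsm8k))  # 6.48, a relative (multiplicative) figure
```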

AMD OLMo models can also run inference on AMD Ryzen AI PCs with Neural Processing Units (NPUs). AMD Ryzen AI Software makes it easy for developers to run generative AI models locally. Deploying such models on edge devices offers a secure and sustainable solution by optimizing energy efficiency, protecting data privacy, and enabling a wide range of AI applications.

Conclusion

Using an end-to-end training pipeline that runs on AMD Instinct GPUs and includes a pre-training stage with 1.3 trillion tokens (half the pre-training compute budget of OLMo-1B), a two-phase supervised fine-tuning stage, and a DPO-based human preference alignment stage, AMD OLMo models achieve general reasoning and chat performance on par with or better than other fully open models of similar size, while performing comparably on responsible AI benchmarks.

We also deployed the language model on AMD Ryzen AI PCs with NPUs, which can help enable a wide range of edge use cases. The main goal of publicly releasing the data, weights, training recipes, and code is to help developers reproduce this work and innovate further. AMD remains committed to delivering a steady stream of new AI models to the open-source community and looks forward to the advances that will come from our collaborative efforts.