Introducing AMD Nitro Diffusion: One-Step Diffusion Models

Stable Diffusion 2.1 Models

Recent generative AI research has transformed image synthesis and visual content creation, with significant gains in quality and flexibility. Alongside VAEs and GANs, diffusion models have become especially popular image generators thanks to their strong results in text-to-image synthesis, image-to-image translation, and image inpainting. Together, these developments open up new opportunities in domains ranging from scientific visualization to entertainment, while also pushing the limits of creative and practical applications.

Simplifying Diffusion Models to Produce Images More Effectively

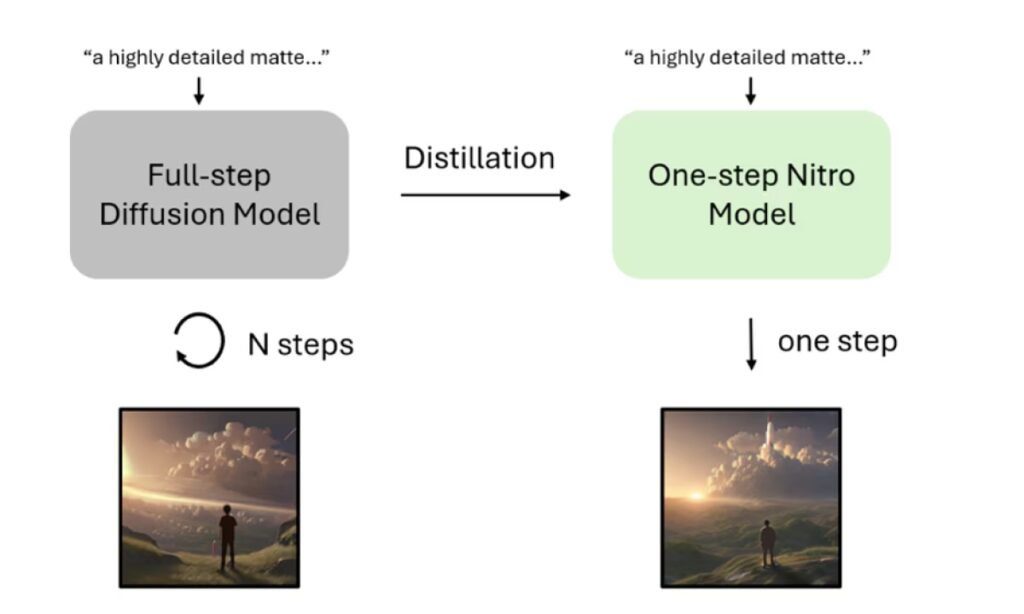

Despite their effectiveness, diffusion models are very resource-intensive at inference time, both because of their large architectures, which can contain billions of parameters, and because of their iterative denoising procedure. Popular diffusion models such as Stable Diffusion typically need about 20–50 steps to produce a single image. As the accompanying figure shows, distillation can produce a model that generates a comparable image in just 1–8 steps. Because each step entails a full forward pass through a large network, reducing the number of steps cuts inference latency by 60–95% and considerably lowers model FLOPs.
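To make the step-count arithmetic concrete, here is a minimal sketch. It assumes that every denoising step costs one full forward pass of the same network and ignores the text encoder, the VAE, and classifier-free guidance; the function name is hypothetical. Under those assumptions the relative saving depends only on the two step counts.

```python
def relative_saving(base_steps: int, distilled_steps: int) -> float:
    """Fraction of denoiser forward passes saved, assuming equal cost per step."""
    if base_steps <= 0 or distilled_steps <= 0:
        raise ValueError("step counts must be positive")
    return 1.0 - distilled_steps / base_steps


# Step counts taken from the ranges quoted in the text (20-50 and 1-8).
for base, distilled in [(20, 8), (20, 1), (50, 8), (50, 1)]:
    saving = relative_saving(base, distilled)
    print(f"{base} -> {distilled} steps: {saving:.0%} fewer denoiser passes")
```

Going from 20 steps to 8 steps or to 1 step gives savings of 60% and 95% respectively, which is consistent with the latency range quoted above.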

AMD Nitro Diffusion Models

AMD is releasing two single-step diffusion models: Stable Diffusion 2.1 Nitro, distilled from the well-known Stable Diffusion 2.1 model, and PixArt-Sigma Nitro, distilled from the high-resolution PixArt-Sigma model. The release is meant to show that AMD Instinct MI250 accelerators are ready for model training and to establish a foundation for future research. Unlike their teacher models, which require many forward passes to create an image, these two models generate images in a single forward pass, reducing model FLOPs by more than 90% with only a small decrease in image quality.

Our approach to distilling these models combines a number of cutting-edge techniques and closely resembles Latent Adversarial Diffusion Distillation, the method used to produce the well-known Stable Diffusion 3 Turbo model.

We are also making the code and models available to the open-source community. Because the original authors did not release a working implementation, we think this release will help advance further research on adversarial diffusion distillation.

Deep Dive into Technology

Foundational Models

The Stable Diffusion family of open-source models is a popular choice for image generation tasks. These models use a UNet architecture as the diffusion backbone and a CLIP model as the text encoder. We used Stable Diffusion 2.1 as the base model for our UNet-based single-step model.

The PixArt family of models also achieves impressive image generation quality, particularly at higher resolutions. PixArt models are built on a Diffusion Transformer (DiT), which scales well and provides greater conditioning flexibility. To improve text comprehension, the models also make use of the larger T5 text encoder. We used PixArt-Sigma as the base model for our higher-resolution, transformer-based single-step model.

Latent Adversarial Diffusion Distillation

Adversarial training is a well-studied and successful method for training GAN image generators. It jointly trains two models: a discriminator that judges whether a sample is real or generated, and a generator that tries to produce samples the discriminator cannot tell apart from real data.

Training a large model from scratch in an adversarial way is difficult because the process is unstable and prone to mode collapse. Nonetheless, using a discriminator to fine-tune a pre-trained diffusion model has been shown to work well, particularly when distilling a diffusion model into a few-step or even single-step GAN model.

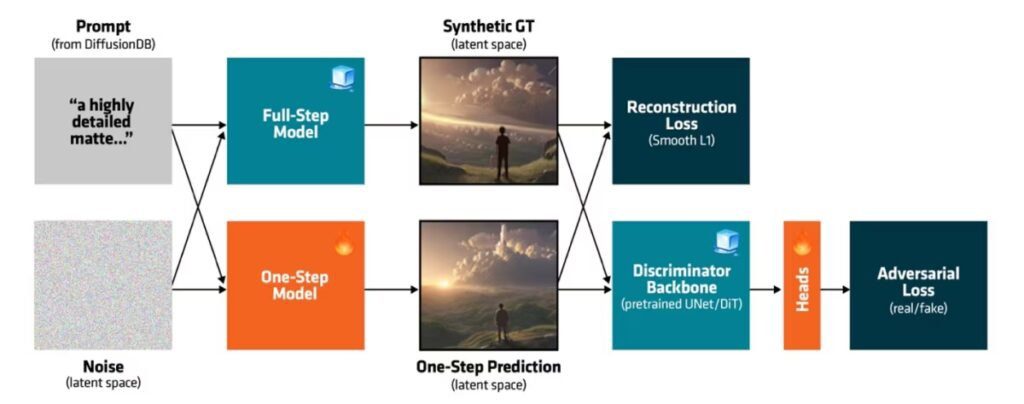

To encourage the outputs of the single-step model to follow a distribution close to that of the base model, we added an adversarial loss. We initialized the discriminator with the structure and weights of the base model's UNet or DiT backbone, kept that backbone frozen, and attached learnable heads to its intermediate outputs. This allowed us to efficiently reuse the features the backbone had learned during pre-training.
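The text does not say which form of adversarial loss is used. As an illustration only, the sketch below uses the hinge loss, a common choice in GAN training, with plain Python lists standing in for the logits produced by the discriminator heads. In this setup the "real" samples would be latents generated by the base model and the "fake" samples would be outputs of the single-step generator. The function names and example values are hypothetical.

```python
def hinge_d_loss(real_logits, fake_logits):
    """Discriminator hinge loss: push real logits above +1 and fake logits below -1."""
    real_term = sum(max(0.0, 1.0 - r) for r in real_logits) / len(real_logits)
    fake_term = sum(max(0.0, 1.0 + f) for f in fake_logits) / len(fake_logits)
    return real_term + fake_term


def hinge_g_loss(fake_logits):
    """Generator loss: raise the discriminator's logits on generated samples."""
    return -sum(fake_logits) / len(fake_logits)


# Made-up logits, as if produced by the learnable heads on the frozen backbone.
real_logits = [1.5, 0.2]    # scores for base-model latents
fake_logits = [-2.0, 0.5]   # scores for single-step generator outputs

print("discriminator loss:", hinge_d_loss(real_logits, fake_logits))
print("generator loss:", hinge_g_loss(fake_logits))
```

Only the heads would receive gradients from the discriminator loss, since the backbone is frozen; the generator is updated through the generator loss.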

Another advantage of this approach is that both the discriminator and the generator operate in latent space rather than pixel space. This removes the need to decode the latents with the VAE decoder and drastically lowers the memory needed to apply the adversarial loss.
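As a rough illustration of why latent space is cheaper, the sketch below counts the values per image in pixel space and in latent space. It assumes a 512×512 RGB image (the resolution is an assumption, not stated in the text) and the 8× spatial downsampling and 4 latent channels of the Stable Diffusion VAE. It compares tensor sizes only; the memory saved by not running the VAE decoder and not storing its activations comes on top of this.

```python
def tensor_elems(channels: int, height: int, width: int) -> int:
    """Number of values in a single (channels, height, width) tensor."""
    return channels * height * width


IMAGE_SIZE = 512      # assumed image resolution
VAE_DOWNSCALE = 8     # spatial downsampling factor of the Stable Diffusion VAE
LATENT_CHANNELS = 4   # latent channels of the Stable Diffusion VAE

pixel_elems = tensor_elems(3, IMAGE_SIZE, IMAGE_SIZE)
latent_side = IMAGE_SIZE // VAE_DOWNSCALE
latent_elems = tensor_elems(LATENT_CHANNELS, latent_side, latent_side)

print("pixel-space values per image: ", pixel_elems)
print("latent-space values per image:", latent_elems)
print("ratio:", pixel_elems // latent_elems)
```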

The distillation pipeline is depicted in Figure 2 and includes the creation of synthetic data as well as combined adversarial training of the model and a latent-space discriminator.

Synthetic Data

The goal is to make the distilled model's outputs match the base model's outputs as closely as possible. We therefore generated synthetic data using the base model directly as the ground truth, rather than fine-tuning on an external dataset. We sampled 1M prompts from DiffusionDB, a large collection of real prompts written by users of image generation applications, and used them to generate 1M synthetic images with the base models we wanted to distill.

Reconstruction Losses and the Noise-Image Paired Dataset

The adversarial objective brings the distribution of the distilled model's outputs closer to that of the base model. To help the generator learn a better mapping from the noise distribution to the image distribution, we also distilled the models with an additional reconstruction loss computed on noise-image pairs. Specifically, given a target latent image and the initial noise that the base model used to generate it, we trained the model to reconstruct the target latent image from that noise. We found that the additional reconstruction loss makes the distillation process more stable.

We investigated a number of reconstruction losses, including basic L1 and L2 losses, Laplacian Pyramid loss, SSIM loss in latent space, and the LPIPS perceptual loss in pixel space. We also re-implemented LatentLPIPS, a latent-space perceptual loss introduced in a previous paper. Although we saw slight gains in image quality with LatentLPIPS, the benefits of these perceptual losses were not large enough to justify the additional computation. We therefore kept things simple and chose Smooth L1 as the reconstruction loss.
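For reference, the Smooth L1 loss is quadratic for small errors and linear for large ones. The sketch below is a pure-Python version over flat lists that stand in for latent tensors; it follows the same piecewise definition as PyTorch's `torch.nn.functional.smooth_l1_loss`. The threshold `beta=1.0` and the example values are assumptions, since the text does not state the setting that was used.

```python
def smooth_l1(pred, target, beta=1.0):
    """Mean Smooth L1 loss between two equal-length sequences of floats."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    total = 0.0
    for p, t in zip(pred, target):
        diff = abs(p - t)
        if diff < beta:
            total += 0.5 * diff * diff / beta   # quadratic near zero
        else:
            total += diff - 0.5 * beta          # linear for large errors
    return total / len(pred)


# Hypothetical values: the generator's output for a given noise sample,
# and the latent that the base model produced from that same noise.
generated_latent = [0.0, 2.0, -1.0]
target_latent = [0.5, 0.0, -1.0]

print("reconstruction loss:", smooth_l1(generated_latent, target_latent))
```

Compared with plain L2, the linear tail makes the loss less sensitive to a few large per-element errors.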

Zero Terminal SNR

According to “Common Diffusion Noise Schedules and Sample Steps are Flawed,” common diffusion noise schedulers are flawed in that they leave a non-zero signal-to-noise ratio (SNR) even at the very last timestep (t=999). This leaks image information into the final noised sample and creates a discrepancy between the noise levels seen during training and inference. The problem is especially severe for single-step generation, where the model takes just one step and has no opportunity for iterative refinement. To guarantee that the image is generated from pure noise during both training and inference, we changed the noise scheduler to enforce zero terminal SNR.
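The rescaling proposed in the cited paper can be sketched as follows. This is a minimal pure-Python version, assuming the scaled-linear beta schedule commonly used with Stable Diffusion (beta from 0.00085 to 0.012 over 1000 steps). The schedule values and all function names are assumptions for illustration, not details taken from the AMD training code.

```python
import math


def scaled_linear_betas(num_steps=1000, beta_start=0.00085, beta_end=0.012):
    """Betas that are linear in sqrt space (assumed Stable Diffusion schedule)."""
    s, e = math.sqrt(beta_start), math.sqrt(beta_end)
    return [(s + (e - s) * i / (num_steps - 1)) ** 2 for i in range(num_steps)]


def alphas_cumprod(betas):
    """Cumulative product of (1 - beta_t), i.e. alpha_bar_t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def rescale_zero_terminal_snr(betas):
    """Shift and scale sqrt(alpha_bar) so that its last value becomes exactly zero."""
    sqrt_ab = [math.sqrt(a) for a in alphas_cumprod(betas)]
    first, last = sqrt_ab[0], sqrt_ab[-1]
    # Shift so the final value is zero, then scale so the first value is preserved.
    sqrt_ab = [(x - last) * first / (first - last) for x in sqrt_ab]
    ab = [x * x for x in sqrt_ab]
    # Convert alpha_bar back to per-step alphas, then to betas.
    alphas = [ab[0]] + [ab[i] / ab[i - 1] for i in range(1, len(ab))]
    return [1.0 - a for a in alphas]


def snr(alpha_bar):
    """Signal-to-noise ratio for a given alpha_bar (requires alpha_bar < 1)."""
    return alpha_bar / (1.0 - alpha_bar)


betas = scaled_linear_betas()
fixed_betas = rescale_zero_terminal_snr(betas)

print("terminal SNR before:", snr(alphas_cumprod(betas)[-1]))
print("terminal SNR after: ", snr(alphas_cumprod(fixed_betas)[-1]))
```

After rescaling, the final beta equals 1, so the sample at the last timestep contains no signal and the single step really does start from pure noise.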

Implementation Details

Our distillation pipeline is implemented in PyTorch, which works out of the box on AMD Instinct accelerators. We used the Hugging Face Accelerate library to access advanced training features such as gradient accumulation, Fully Sharded Data Parallel (FSDP), and mixed-precision training. For ease of use, the resulting model checkpoints are compatible with standard Diffusers pipelines.

Because our distillation process takes place entirely in the latent space of the diffusion model, we precompute the latent representations of the generated images and the text embeddings of the prompts before training, so that neither the VAE nor the text encoder has to run during training. This significantly increases training throughput, and the preprocessing cost is amortized over multiple experiments.
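The precompute-once pattern described here can be sketched as below. The encoders are stand-ins that only count how often they are called; in a real pipeline they would be the VAE encoder and the text encoder. The cache format (a pickle file), the function names, and the sample data are all hypothetical choices made for this illustration.

```python
import pickle
import tempfile
from pathlib import Path


def precompute(samples, encode_image, encode_text, cache_path):
    """Run the frozen encoders once and store their outputs on disk."""
    records = [
        {"latent": encode_image(s["image"]), "text_emb": encode_text(s["prompt"])}
        for s in samples
    ]
    Path(cache_path).write_bytes(pickle.dumps(records))
    return len(records)


def load_cached(cache_path):
    """Training-time loader: reads stored features and never calls an encoder."""
    return pickle.loads(Path(cache_path).read_bytes())


# Stand-in encoders (hypothetical) that record how often they run.
calls = {"image": 0, "text": 0}


def fake_vae_encode(image):
    calls["image"] += 1
    return [float(v) / 255.0 for v in image]


def fake_text_encode(prompt):
    calls["text"] += 1
    return [float(len(word)) for word in prompt.split()]


samples = [
    {"image": [0, 128, 255], "prompt": "a futuristic lighthouse"},
    {"image": [255, 255, 0], "prompt": "a suede toy owl"},
]

with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "latents.pkl"
    precompute(samples, fake_vae_encode, fake_text_encode, cache)
    first_trial = load_cached(cache)    # first training run
    second_trial = load_cached(cache)   # a later run reuses the same cache

print("encoder calls:", calls)
```

Each encoder runs once per sample no matter how many training runs read the cache, which is how the preprocessing cost gets spread across experiments. For the noise-image paired dataset, the initial noise would be stored alongside each latent in the same way.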

Findings

Visual Comparison

To let readers compare the AMD Nitro models with their full-step base models, we provide visual results in this blog. All images were generated on an AMD Instinct MI250 accelerator with ROCm software 6.1.3.

Prompt

During the day, when the moon is full, a tiny river flows through the town, and there is an old stone mountain pine forest with a lighthouse built in the midst of the mountains. ambient lighting, concept art, dynamic volumetric lighting, octane, raytrace, cinematic, high quality, high resolution, complex, hyper-detailed, and smooth.

Prompt

Studio Ghibli, Makoto Shinkai, Artgerm, Wlop, Greg Rutkowski, and others created this incredibly detailed matte painting of a man on a hill watching a rocket launch in the distance. It features volumetric lighting, octane render, 4K resolution, and is trending on Artstation. It is a masterpiece that combines hyperrealism, intricate details, cinematic lighting, and depth of field.

Prompt

Landscape vista photography, landscape veduta picture & tdraw, futuristic lighthouse, flash light, hyper-realistic, epic composition, cinematic, intricate landscape painting produced in Enscape, Miyazaki, 4K meticulous post-processing, and Unreal Engineered.

Prompt

Extremely realistic, focused, and detailed photograph of a sailing ship with dramatic light, a pale morning, cinematic lighting, low angle, trending on Artstation, 4K, unreal engine 5, studio Ghibli, and complex artwork by John William Turner.

Prompt

This adorable suede toy owl has detailed features, is geometrically exact, has relief on its skin and plastic on its body, and is cinematic.

Prompt

Studio Ghibli, Makoto Shinkai, Artgerm, Wlop, Greg Rutkowski, and others created this incredibly detailed matte painting of a man on a hill watching a rocket launch in the distance. It features volumetric lighting, octane render, 4K resolution, and is trending on Artstation. It is a masterpiece that combines hyperrealism, intricate details, cinematic lighting, and depth of field.

Prompt

A strikingly gorgeous eagle surrounded by vector flowers, with long, glossy, wavy hair, a polished, extremely detailed vector floral illustration fused with hyper realism, muted pastel colours, vector floral details in the background, muter colours, hyper detailed, extremely intricate, overwhelming realism in a complex scene with a magical fantasy atmosphere, and no watermark or signature.

Conclusion

We created customized single-step diffusion models to demonstrate the potential of AMD Instinct accelerators for model training and inference, and to lay the groundwork for future research. These models deliver performance comparable to full-step diffusion models while being lightweight enough to run well on data center systems as well as edge devices such as AI-enabled PCs and laptops. Crucially, AMD is making the models and code available to the open-source community to help more developers accelerate their innovation through the AMD open software ecosystem. We anticipate that this will help advance generative AI.