Amazon Bedrock now offers new APIs to improve RAG apps

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API. It also provides a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI. With Amazon Bedrock Knowledge Bases, a fully managed capability, developers can build generative AI applications that are highly accurate, low-latency, secure, and customizable.

Amazon Bedrock Knowledge Bases uses Retrieval Augmented Generation (RAG) to connect FMs to an organization’s internal data, which helps them deliver more accurate, relevant, and tailored responses.

This post describes two announcements related to Amazon Bedrock Knowledge Bases:

- Support for custom connectors and streaming data ingestion.

- Reranking model support.

Support for custom connectors and streaming data ingestion

Amazon Bedrock Knowledge Bases now supports custom connectors and streaming data ingestion. Developers no longer need to run a full sync with the data source periodically or after every change. They can now ingest, update, or delete data directly with a single API call, efficiently and cost-effectively. Customers are increasingly building RAG-based generative AI applications for use cases such as enterprise search and chatbots.
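Here is a minimal sketch of single-call ingestion. It builds an `IngestKnowledgeBaseDocuments` request for a knowledge base backed by a custom data source and passes the text inline. The sketch assumes a boto3 `bedrock-agent` client and placeholder knowledge base and data source IDs. The request field names reflect our reading of the boto3 reference and should be checked against the current API documentation. We also assume that re-ingesting a document with the same custom ID updates it. The helper functions are hypothetical names chosen for illustration.

```python
from typing import Any, Dict


def build_inline_text_document(doc_id: str, text: str) -> Dict[str, Any]:
    """One document for a CUSTOM data source, with its text passed inline."""
    return {
        "content": {
            "dataSourceType": "CUSTOM",
            "custom": {
                # Your own stable ID; used later to check status or delete.
                "customDocumentIdentifier": {"id": doc_id},
                "sourceType": "IN_LINE",
                "inlineContent": {
                    "type": "TEXT",
                    "textContent": {"data": text},
                },
            },
        }
    }


def build_ingest_request(kb_id: str, data_source_id: str,
                         docs: Dict[str, str]) -> Dict[str, Any]:
    """Keyword arguments for IngestKnowledgeBaseDocuments.

    Field names are assumed from the boto3 reference; verify before use.
    """
    return {
        "knowledgeBaseId": kb_id,
        "dataSourceId": data_source_id,
        "documents": [build_inline_text_document(i, t) for i, t in docs.items()],
    }


def ingest_texts(client: Any, kb_id: str, data_source_id: str,
                 docs: Dict[str, str]) -> Dict[str, Any]:
    """Send all documents in one API call, without a full data source sync.

    `client` is assumed to be boto3.client("bedrock-agent").
    """
    return client.ingest_knowledge_base_documents(
        **build_ingest_request(kb_id, data_source_id, docs)
    )
```

For example, `ingest_texts(boto3.client("bedrock-agent"), "KB_ID", "DS_ID", {"news-123": "Breaking: ..."})` would push a single news item as it arrives. The request builder is kept separate from the network call so the payload can be inspected or logged before it is sent.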

However, to ensure that end users always have access to the most recent information, customers must keep the data in their knowledge bases up to date. Until now, data synchronization has been a time-consuming process that requires a full sync every time data is added or removed. Customers also face challenges integrating data from unsupported sources, such as Quip or Google Drive, into their knowledge bases. Typically, they must first move the data to a supported source, such as Amazon Simple Storage Service (Amazon S3), and then start the ingestion process to make it available in Amazon Bedrock Knowledge Bases.

This extra step adds significant cost and delays the point at which the data can be queried. Streaming data such as news feeds or Internet of Things (IoT) sensor data must also be stored in a supported data source before ingestion. As a result, customers who rely on it face delays in real-time availability. As data volumes grow, these delays and inefficiencies can become major operational bottlenecks and drive up costs.

To keep knowledge bases current and available for real-time querying, customers need a more cost-effective and efficient way to ingest and manage data from multiple sources. With support for custom connectors and streaming data ingestion, customers can now add documents, check their status, and delete them through direct APIs. They no longer have to list and sync the entire dataset.
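Status checks and deletions can be sketched as follows. The sketch assumes a boto3 `bedrock-agent` client, documents in a custom data source identified by their custom IDs, and hypothetical helper names. `GetKnowledgeBaseDocuments` reports each document's ingestion status (for example `INDEXED` or `FAILED`), and `DeleteKnowledgeBaseDocuments` removes documents by identifier. The request and response field names below reflect our reading of the boto3 reference and should be verified against the current API documentation.

```python
from typing import Any, Dict, Iterable, List


def custom_identifiers(doc_ids: Iterable[str]) -> List[Dict[str, Any]]:
    """Identifiers for documents in a CUSTOM data source (assumed shape)."""
    return [{"dataSourceType": "CUSTOM", "custom": {"id": d}} for d in doc_ids]


def summarize_statuses(response: Dict[str, Any]) -> Dict[str, str]:
    """Map each custom document ID to its reported ingestion status."""
    return {
        detail["identifier"]["custom"]["id"]: detail["status"]
        for detail in response.get("documentDetails", [])
    }


def document_statuses(client: Any, kb_id: str, data_source_id: str,
                      doc_ids: Iterable[str]) -> Dict[str, str]:
    """Check ingestion status via GetKnowledgeBaseDocuments.

    `client` is assumed to be boto3.client("bedrock-agent").
    """
    response = client.get_knowledge_base_documents(
        knowledgeBaseId=kb_id,
        dataSourceId=data_source_id,
        documentIdentifiers=custom_identifiers(doc_ids),
    )
    return summarize_statuses(response)


def delete_documents(client: Any, kb_id: str, data_source_id: str,
                     doc_ids: Iterable[str]) -> Dict[str, Any]:
    """Remove documents via DeleteKnowledgeBaseDocuments, with no full re-sync."""
    return client.delete_knowledge_base_documents(
        knowledgeBaseId=kb_id,
        dataSourceId=data_source_id,
        documentIdentifiers=custom_identifiers(doc_ids),
    )
```

Because ingestion is asynchronous, a caller would typically poll `document_statuses` until each ID reaches a terminal state before relying on it in queries.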

Now available

Support for custom connectors and streaming data ingestion is now available in all AWS Regions where Amazon Bedrock Knowledge Bases is available. Check the Region list for details and future updates.

Reranking model support

Amazon Bedrock also introduces the new Rerank API. It lets developers use reranking models to improve the relevance and accuracy of responses, which improves the performance of their RAG-based applications. Semantic search, powered by vector embeddings, embeds documents and queries into a high-dimensional vector space in which texts with related meanings lie close together. Because it compares meanings rather than words, semantic search can return relevant items even when they share no words with the query.
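To make the idea of "nearby in vector space" concrete, the toy example below ranks documents by cosine similarity between a query vector and document vectors. The three-dimensional vectors are made-up values standing in for the output of a real embedding model, which would produce vectors with many more dimensions. In a RAG application, the vector store performs this search for you.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vec: Vector, corpus: Dict[str, Vector],
                    k: int) -> List[Tuple[str, float]]:
    """Return the k documents whose vectors are closest to the query vector."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings"; a real model would generate these from text.
corpus = {
    "car-repair": [0.9, 0.1, 0.0],
    "automobile-maintenance": [0.85, 0.15, 0.05],
    "banana-bread": [0.0, 0.2, 0.95],
}
query = [0.88, 0.12, 0.02]  # imagine this embeds "fixing my vehicle"
top = semantic_search(query, corpus, k=2)
```

"fixing my vehicle" shares no words with "car-repair", yet the two vectors point in almost the same direction, so it ranks first. That is the property that lets semantic search match meaning rather than keywords.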

RAG applications use semantic search to retrieve a set of relevant documents from the vector store. How relevant those documents are to the user’s query is critical to producing accurate responses.

However, semantic search is limited in its ability to rank the most suitable documents by user preferences or query context. This is especially true when the user’s query is complex, ambiguous, or carries nuanced context. As a result, it may retrieve documents that are only marginally relevant to the query. This in turn can lead to sources being improperly cited and attributed, which undermines the credibility and transparency of the RAG-based application.

To overcome these limitations, future RAG systems should prioritize robust ranking algorithms that better capture user intent and context. Improving how source credibility is assessed and how sources are cited is also crucial to ensuring that generated answers are reliable and transparent.

Advanced reranking models address these issues by prioritizing the knowledge base content that is most relevant to a query and any additional context. This ensures that foundation models receive the most relevant material and produce more accurate, contextually appropriate responses. Because reranking prioritizes what is passed to the generation model, it may also reduce the cost of generating responses.
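Reranking follows a two-stage pattern. A first-stage search returns candidate passages, a reranker scores each (query, passage) pair, and only the top `top_n` passages are passed to the generation model. Passing fewer, better passages is also where the cost saving comes from. The word-overlap `toy_score` function below is a hypothetical stand-in that lets the sketch run offline. A real reranking model scores relevance with a learned model, not by counting shared words.

```python
from typing import Callable, List


def rerank(query: str, candidates: List[str],
           score: Callable[[str, str], float], top_n: int) -> List[str]:
    """Second stage: re-score first-stage candidates and keep only the best."""
    ranked = sorted(candidates, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_n]


def toy_score(query: str, doc: str) -> float:
    """Hypothetical stand-in for a reranking model: share of query terms in doc."""
    q_terms = set(query.lower().split())
    d_terms = set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


# Candidates as a first-stage semantic search might return them.
candidates = [
    "Our refund policy allows returns within 30 days.",
    "Shipping takes 5 business days.",
    "Refunds for digital items are not available.",
]
shortlist = rerank("refund policy for digital items", candidates, toy_score, top_n=2)
```

Only the two passages in `shortlist` would be sent to the generation model, and the passage that directly answers the question now comes first.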

Now available

The Rerank API in Amazon Bedrock is now available in the following AWS Regions: US West (Oregon), Canada (Central), Europe (Frankfurt), and Asia Pacific (Tokyo). You can also use the Rerank API on its own to rerank documents, even if you are not using Amazon Bedrock Knowledge Bases. To learn more about Amazon Bedrock Knowledge Bases, visit the Amazon Bedrock product page.
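Calling the Rerank API directly, outside of a knowledge base, might look like the sketch below. It assumes a boto3 `bedrock-agent-runtime` client, and the request shape reflects our reading of the boto3 `rerank` reference. The model ARN is a placeholder assumption, so check which reranking models are offered in your Region. The helper names are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Placeholder ARN (assumption); look up the reranking models in your Region.
RERANK_MODEL_ARN = "arn:aws:bedrock:us-west-2::foundation-model/amazon.rerank-v1:0"


def build_rerank_request(query: str, documents: List[str], model_arn: str,
                         top_n: int) -> Dict[str, Any]:
    """Keyword arguments for the Rerank API (field names assumed)."""
    return {
        "queries": [{"type": "TEXT", "textQuery": {"text": query}}],
        "sources": [
            {
                "type": "INLINE",
                "inlineDocumentSource": {"type": "TEXT", "textDocument": {"text": doc}},
            }
            for doc in documents
        ],
        "rerankingConfiguration": {
            "type": "BEDROCK_RERANKING_MODEL",
            "bedrockRerankingConfiguration": {
                "numberOfResults": top_n,
                "modelConfiguration": {"modelArn": model_arn},
            },
        },
    }


def reorder(documents: List[str], response: Dict[str, Any]) -> List[Tuple[str, float]]:
    """Map results (indexes into `documents`) back to text, best score first."""
    pairs = [(documents[r["index"]], r["relevanceScore"]) for r in response["results"]]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


def rerank_documents(client: Any, query: str, documents: List[str],
                     model_arn: str = RERANK_MODEL_ARN,
                     top_n: int = 3) -> List[Tuple[str, float]]:
    """`client` is assumed to be boto3.client("bedrock-agent-runtime")."""
    response = client.rerank(**build_rerank_request(query, documents, model_arn, top_n))
    return reorder(documents, response)
```

The documents can come from any retriever, such as your own vector database or a keyword search engine, which is what makes the API usable without a knowledge base.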

Amazon Bedrock’s new RAG evaluation and LLM-as-a-judge capabilities

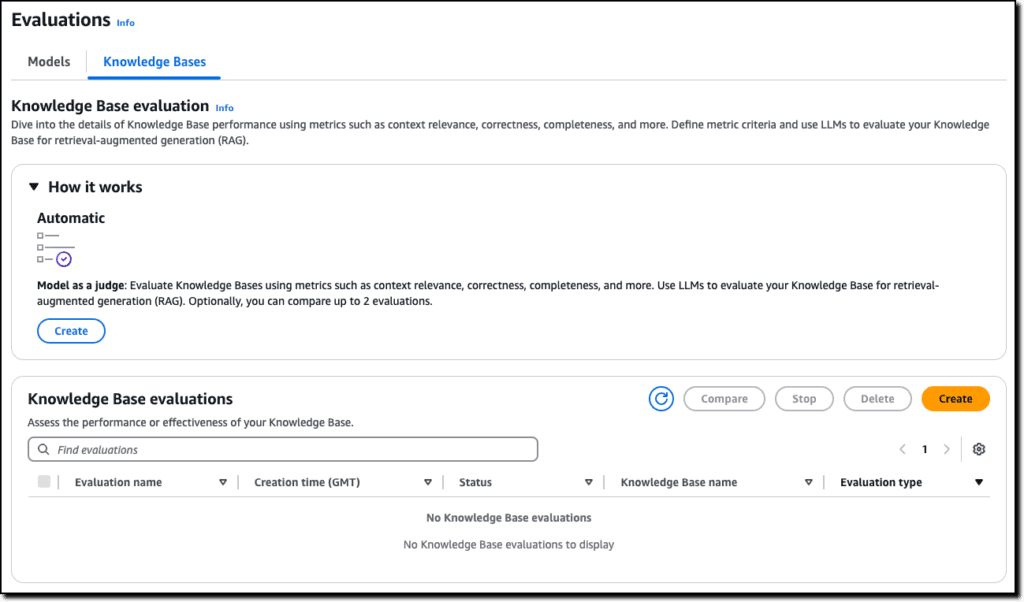

Amazon Bedrock Knowledge Bases now supports RAG evaluation. You can run an automated knowledge base evaluation to assess and optimize Retrieval Augmented Generation (RAG) applications. The evaluation uses a large language model (LLM) to compute the metrics. With RAG evaluations, you can compare different configurations and tune your settings to get the results you need for your use case.
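A RAG evaluation job reads a prompt dataset, typically a JSON Lines file stored in Amazon S3. The helper below writes question and reference-answer pairs in the `conversationTurns` layout that, to our understanding, the documentation describes for knowledge base evaluations. Treat this as a sketch and verify the field names against the current documentation before uploading. The function names are hypothetical.

```python
import json
from typing import Iterable, Tuple


def rag_eval_record(question: str, reference_answer: str) -> str:
    """One JSONL line with a single conversation turn (assumed layout)."""
    record = {
        "conversationTurns": [
            {
                "prompt": {"content": [{"text": question}]},
                # Ground-truth answer the judge can compare against.
                "referenceResponses": [{"content": [{"text": reference_answer}]}],
            }
        ]
    }
    return json.dumps(record)


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Join records into JSON Lines text, ready to upload to Amazon S3."""
    return "\n".join(rag_eval_record(q, a) for q, a in pairs)
```

Keeping each record on its own line lets you add or drop test questions without touching the rest of the file when comparing knowledge base configurations.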

With LLM-as-a-judge now part of Amazon Bedrock Model Evaluation, you can test and evaluate other models with human-like quality at a fraction of the cost and time of running human evaluations.
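For LLM-as-a-judge model evaluation, the custom prompt dataset is a JSON Lines file. The sketch below assumes the `prompt`, `referenceResponse`, and `category` keys used by Amazon Bedrock model evaluation prompt datasets, where only `prompt` is required. Treat the key names as an assumption to confirm in the documentation. The function name is hypothetical.

```python
import json
from typing import Dict, Optional


def judge_dataset_line(prompt: str, reference: Optional[str] = None,
                       category: Optional[str] = None) -> str:
    """One JSONL line for a model evaluation prompt dataset (assumed keys)."""
    record: Dict[str, str] = {"prompt": prompt}  # required
    if reference is not None:
        record["referenceResponse"] = reference  # optional ground truth
    if category is not None:
        record["category"] = category  # optional; assumed to group scores by category
    return json.dumps(record)
```

Leaving out `referenceResponse` still lets a judge grade criteria such as helpfulness or harmfulness. Metrics like correctness are easier to interpret when a reference answer is supplied.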

These new capabilities ease the transition to production by providing fast, automated evaluation of AI-powered applications, which shortens feedback loops and speeds up improvements. The evaluations cover multiple quality dimensions, including correctness, helpfulness, and responsible AI criteria such as answer refusal and harmfulness.

To keep results easy to interpret, each score comes with a natural language explanation, both in the output and in the console. Scores are also normalized to a range of 0 to 1. The rubrics are published in full in the documentation, along with the judge prompts, so that non-scientists can understand how scores are derived.

Things to know

These new evaluation capabilities are available in preview in the following AWS Regions:

- RAG evaluation: US East (N. Virginia), Asia Pacific (Mumbai, Sydney, Tokyo), Canada (Central), Europe (Frankfurt, Ireland, London, Paris), and South America (São Paulo).

- LLM-as-a-judge: US East (N. Virginia), Asia Pacific (Mumbai, Seoul, Sydney, Tokyo), Canada (Central), Europe (Frankfurt, Ireland, London, Paris, Zurich), and South America (São Paulo).

Note that the evaluator models available to you depend on the Region.

Model inference is charged at standard Amazon Bedrock pricing, and the evaluation jobs themselves incur no additional charges. Both the evaluator models and the models being evaluated are billed at their normal on-demand or provisioned throughput pricing. The judge prompt templates count toward input tokens and are published in the AWS documentation for transparency.
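Both the evaluated model and the evaluator (judge) model are billed at standard rates, so a rough cost estimate is simple arithmetic. The sketch below rests on two assumptions. First, the evaluated model makes one generation call per record, and the judge makes one call per record per metric. Second, the judge's input consists of the judge prompt template plus the prompt and response being graded. The per-1,000-token prices are placeholders, not actual Amazon Bedrock prices.

```python
from dataclasses import dataclass


@dataclass
class Price:
    """Placeholder on-demand prices in USD per 1,000 tokens (not real rates)."""
    input_per_1k: float
    output_per_1k: float


def call_cost(price: Price, input_tokens: int, output_tokens: int) -> float:
    """Cost of one model invocation at the given per-1,000-token prices."""
    return (input_tokens / 1000) * price.input_per_1k + (output_tokens / 1000) * price.output_per_1k


def estimate_eval_cost(records: int, metrics: int, gen_in: int, gen_out: int,
                       judge_template: int, judge_out: int,
                       generator: Price, judge: Price) -> float:
    # Evaluated model: assumed one call per record.
    generation = records * call_cost(generator, gen_in, gen_out)
    # Judge: assumed one call per record per metric. Its input is the judge
    # prompt template plus the prompt and the response being graded.
    judge_in = judge_template + gen_in + gen_out
    judging = records * metrics * call_cost(judge, judge_in, judge_out)
    # The evaluation job itself adds no fee, so inference is the whole bill.
    return generation + judging
```

For instance, 10 records scored on 2 metrics, with 1,000 tokens for each of the prompt, response, judge template, and judge output, and both models priced at 1.0 (input) and 2.0 (output) per 1,000 tokens, comes to 30.0 for generation plus 100.0 for judging. Substituting real prices and token counts measured on a sample of your dataset gives an order-of-magnitude estimate.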

The evaluation service is initially optimized for English-language content, although the underlying models can work with content in the other languages they support.