Build Your Own Personalized Chatbot. Quickly and Easily Train Large Language Models on Intel Processors. Fine-tuned on 52K self-generated instruction-following examples, the LLaMA-based Alpaca 7B model shows instruction-following behavior comparable to that of the text-davinci-003 model.

Large language models (LLMs) have drawn a lot of attention recently for their remarkable performance in dialogue agents like ChatGPT, GPT-4, and Gemini. However, LLMs are constrained by the substantial time and cost needed to train or fine-tune them, a consequence of their large model sizes and datasets.

In this post, we will show you how to quickly train and optimize a custom chatbot on readily available hardware. The chatbot is developed on 4th Generation Intel Xeon Scalable processors using a systematic approach that produces a domain-specific dataset and an optimized fine-tuning code base.

Alpaca 7B Model Overview

The current Alpaca 7B model is fine-tuned from a 7B LLaMA model on 52K instruction-following examples generated with the techniques from the Self-Instruct paper, with some modifications that are discussed in the following section. In a preliminary human evaluation, the Alpaca authors found that the Alpaca 7B model behaves similarly to the text-davinci-003 model on the Self-Instruct instruction-following evaluation suite.

Alpaca is still at an early stage of development, and many issues remain to be resolved. Crucially, the Alpaca 7B model has not yet been fine-tuned to be safe and harmless. Users are therefore advised to exercise caution when interacting with Alpaca and to report any concerning behavior, which helps improve the model’s safety and ethical considerations.

The initial release includes the dataset, the training recipe, and the data generation procedure. The Alpaca authors plan to release the model weights if the LLaMA creators grant permission. For the time being, they have chosen to host a live demo, both to help readers better understand Alpaca’s capabilities and limitations and to let them evaluate Alpaca’s performance with a broader audience.

Objective and Method

Stanford Alpaca is an instruction-following language model fine-tuned from Meta’s LLaMA model. This project inspired us to develop an improved process for building a custom, domain-specific chatbot. Although a number of language models are available, some of which perform better than others, we chose Alpaca because it is an open model.

The chatbot’s workflow consists of four main steps: guided seed generation, free (non-guided) seed generation, sample generation, and fine-tuning (Figure 1).

Before walking you through these steps, we want to introduce a prompt template that is useful for seed task generation.

We added a new requirement to the template, which states that “the generated task instructions should be related to issues.” This helps produce seed tasks related to the specified domain. We also use both guided and free (non-guided) seed task generation to produce a more diverse set of seed tasks.

Guided seed task generation uses Alpaca’s existing seed tasks. For each seed task, we combine it with the content of the domain prompt template and feed the result into an existing dialogue agent. We expect this to generate a corresponding number of domain seed tasks.
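The guided step can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the code shipped in Intel Extension for Transformers: the template wording, the `{domain}` and `{seed_task}` placeholders, the `build_guided_prompts` helper, and the example seed tasks are all hypothetical, and the call to the dialogue agent is left out.

```python
# Hypothetical domain prompt template. The exact wording used by the authors
# is not given in this post; requirement 2 plays the role of the added
# domain requirement, with {domain} as an assumed placeholder.
DOMAIN_PROMPT_TEMPLATE = (
    "You are asked to come up with a set of diverse task instructions.\n"
    "Requirements:\n"
    "1. The instructions should be in English.\n"
    "2. The generated task instructions should be related to {domain} issues.\n"
    "\n"
    "Example task:\n"
    "{seed_task}\n"
)


def build_guided_prompts(seed_tasks, domain):
    """Combine the domain template with each existing seed task.

    Returns one prompt per seed task, so the dialogue agent is expected
    to produce a corresponding number of domain seed tasks.
    """
    return [
        DOMAIN_PROMPT_TEMPLATE.format(domain=domain, seed_task=task)
        for task in seed_tasks
    ]


# Made-up seed tasks standing in for Alpaca's existing ones.
seeds = ["Explain how to reset a password.", "Summarize the given article."]
prompts = build_guided_prompts(seeds, domain="Intel")
print(len(prompts))
print(prompts[0])
```

Each resulting prompt would then be sent to the dialogue agent of your choice; how that request is made depends on the agent and is outside this sketch.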

With these seed tasks, we again use the existing dialogue agent to generate the instruction samples. Because the domain prompt template is used, the output follows the required format, with “instruction,” “input,” and “output” fields.

You may have noticed the resemblance between a domain seed task and an instruction sample. Think of them as a ChatGPT prompt and the output that results from it, respectively: the former shapes the latter.
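To make the three-field format concrete, here is a small sketch that checks a generated sample before it is added to the dataset. The post only names the three fields; the JSON encoding, the `parse_instruction_sample` helper, and the example content are assumptions made for illustration.

```python
import json

REQUIRED_FIELDS = ("instruction", "input", "output")


def parse_instruction_sample(raw):
    """Parse one JSON-encoded sample and check that it has all three fields."""
    sample = json.loads(raw)
    missing = [field for field in REQUIRED_FIELDS if field not in sample]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return sample


# Made-up agent output, for illustration only.
raw_output = json.dumps({
    "instruction": "Answer the customer's question about the product.",
    "input": "Where can I find the product documentation?",
    "output": "Product documentation is available on the vendor's support site.",
})

sample = parse_instruction_sample(raw_output)
print(sorted(sample))
```

Rejecting malformed samples early keeps incomplete records out of the fine-tuning set.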

Getting Your Custom Chatbot Started

Rather than fine-tuning all of the LLM’s billions of parameters, we fine-tune it efficiently using the Low-Rank Adaptation (LoRA) technique. LoRA freezes the pretrained model weights and injects trainable rank decomposition matrices into each layer of the transformer architecture, which significantly reduces the number of trainable parameters for downstream tasks.
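A quick back-of-the-envelope calculation shows where the savings come from. For a single weight matrix W of shape d_out x d_in, LoRA trains two small matrices B (d_out x r) and A (r x d_in) and uses W + BA as the effective weight. The sizes below are illustrative only and are not the configuration used in this post; `lora_param_counts` is a hypothetical helper.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix.

    Full fine-tuning updates every entry of the d_out x d_in matrix W.
    LoRA freezes W and trains B (d_out x rank) and A (rank x d_in),
    so the effective weight is W + B @ A.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


# Illustrative sizes only: a 4096 x 4096 projection with rank 8.
full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, full // lora)
```

With these example sizes, the LoRA matrices hold 65,536 trainable values against 16,777,216 for the full matrix, a 256-fold reduction for that layer.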

In addition to parameter-efficient fine-tuning, you can accelerate the fine-tuning process with hardware and software acceleration. One example of hardware acceleration is the Intel Advanced Matrix Extensions (Intel AMX-BF16) instructions, which are available on 4th Generation Intel Xeon Scalable processors and are designed specifically to speed up AI workloads. On the software side, the optimizations in Hugging Face transformers, PyTorch, and Intel Extension for PyTorch also speed up performance compared with unoptimized versions of these frameworks and packages.

You can also enable concatenation of instruction samples to speed up fine-tuning. The basic idea is to concatenate multiple tokenized sentences into a single, longer, denser sequence that serves as the training sample, instead of using many training samples of varying lengths. This makes better use of the underlying hardware.
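The idea can be sketched in a few lines. This is one common packing variant, not necessarily what the sample code does: separating samples with an end-of-sequence token and dropping the trailing partial chunk are assumptions, and `concatenate_samples` is a hypothetical helper.

```python
def concatenate_samples(tokenized_samples, max_length, eos_token_id):
    """Pack tokenized samples into fixed-length training sequences.

    Each sample is followed by an end-of-sequence token, all tokens are
    joined into one stream, and the stream is cut into chunks of
    max_length. A trailing partial chunk is dropped.
    """
    stream = []
    for ids in tokenized_samples:
        stream.extend(ids)
        stream.append(eos_token_id)
    usable = (len(stream) // max_length) * max_length
    return [stream[i:i + max_length] for i in range(0, usable, max_length)]


# Four short samples of different lengths, using made-up token ids.
samples = [[5, 6, 7], [8, 9], [10, 11, 12, 13], [14]]
packed = concatenate_samples(samples, max_length=4, eos_token_id=0)
print(packed)  # [[5, 6, 7, 0], [8, 9, 0, 10], [11, 12, 13, 0]]
```

Every packed sequence has the same length, so no compute is spent on padding tokens.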

All of the optimizations described above apply to a single compute node. To make use of more compute power, you can also run multinode fine-tuning with distributed data parallelism.
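In data parallelism, every worker holds a full copy of the model and trains on its own slice of the data, with gradients combined across workers at each step. The sketch below shows only the data-splitting part, using a hypothetical `shard_for_rank` helper and a simple round-robin assignment. Real frameworks also handle shuffling, uneven shard sizes, and gradient synchronization.

```python
def shard_for_rank(num_samples, world_size, rank):
    """Return the sample indices handled by one worker.

    Indices are assigned round-robin, so each worker sees a disjoint
    subset of the data and shard sizes differ by at most one.
    """
    return list(range(rank, num_samples, world_size))


# 10 samples split across 4 hypothetical workers.
shards = [shard_for_rank(10, world_size=4, rank=r) for r in range(4)]
print(shards)  # [[0, 4, 8], [1, 5, 9], [2, 6], [3, 7]]
```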

Now let’s start training a domain chatbot:

- Take a look at the Intel Extension for Transformers sample code.

- Install the software packages listed in the requirements file.

- Download the pretrained models. FLAN-T5 is available for download from Hugging Face.

- For LLaMA, you can request access directly from Meta or use an alternative version from Hugging Face.

Findings

To evaluate the fine-tuning, we used 52,000 general instruction samples from Alpaca together with the 2,000 domain instruction samples we generated, on two well-known LLMs (LLaMA-7B and FLAN-T5 XL). With the software optimization techniques described above, we observed speedups of up to 25x on 4th Generation Intel Xeon Scalable processors.

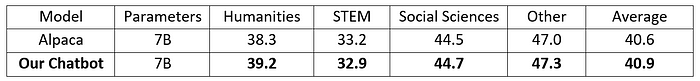

To gauge the knowledge the model acquired during pretraining, we used the new Massive Multitask Language Understanding (MMLU) benchmark, which evaluates models exclusively in zero-shot and few-shot settings. As Table 1 shows, our chatbot performs better overall and on the majority of the domain subtasks.

Our MMLU results are comparable to those of GPT-3 (175B), achieved with a model that is 25 times smaller in parameter count. We also invite you to test this recipe with other open language models, using Intel’s software optimizations and hardware acceleration.

In addition to the MMLU benchmark, we also evaluated qualitative conversations, such as:

- Input prompt: Where can I find product details for Intel microchips?

- Alpaca’s response: Intel.com is the website where you can get product details on Intel microchips. Information on their motherboards, graphics cards, processors, and other goods can be found there. Product details, guides, and other materials are also available.

- The chatbot’s response: Intel Microchips’ product details are available on their website, Intel Support.

Summary

We have made the domain-specific dataset and source code available in Intel Extension for Transformers. We invite you to develop your own chatbot on Intel CPUs and to explore other Intel AI tools and optimizations as part of your AI workflows.

Next, we plan to enable quantization and compression techniques to speed up chatbot inference on Intel processors. Give Intel Extension for Transformers a star to keep up with the latest optimizations.