Replication pipelines can break in the complex, constantly changing world of data replication. Working out why and when a failure occurred can be difficult, and restarting replication while minimising data integrity problems involves a number of manual steps.

Cloud Datastream

When a database fails over or the network suffers an extended outage, Datastream’s new stream recovery capability lets you resume data replication quickly, with little to no data loss.

Datastream’s unique approach

Streaming data from relational databases

Datastream reads every change (insert, update, and delete) from your MySQL, PostgreSQL, AlloyDB, SQL Server, and Oracle databases and delivers it to BigQuery, Cloud SQL, Cloud Storage, and Spanner. It is agentless and Google-native, and it reliably streams every event in real time. Datastream processes more than 500 billion events every month.

Reliable pipelines with advanced recovery

Unexpected disruptions can be costly. Datastream’s robust stream recovery minimises data loss and downtime, so you can keep critical business processes running and keep making decisions based on continuous data streams.

Schema drift resolution

When source schemas change, Datastream resolves schema drift quickly and seamlessly. With every schema change, Datastream rotates files, creating a new file in the destination bucket. Original source data types are available through an API call to an up-to-date, versioned schema registry.
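
The file-rotation behaviour can be pictured with a small, self-contained Python sketch. It is not Datastream’s implementation: the `SchemaAwareWriter` class, the `orders/v<N>/part-0000.jsonl` naming scheme, and the in-memory registry are all hypothetical. Each time the incoming schema differs from the current one, the writer records a new schema version and starts a new file, so every file holds rows of exactly one schema version.

```python
from typing import Any, Dict, List, Optional, Tuple


class SchemaAwareWriter:
    """Hypothetical destination writer that rotates files on every schema change
    and keeps a versioned schema registry (an in-memory dict, for illustration)."""

    def __init__(self, table: str) -> None:
        self.table = table
        self.registry: Dict[int, Dict[str, str]] = {}      # version -> {column: source type}
        self.files: Dict[str, List[Dict[str, Any]]] = {}   # file name -> rows
        self._current_key: Optional[Tuple[Tuple[str, str], ...]] = None
        self._current_file: Optional[str] = None

    def write(self, row: Dict[str, Any], schema: Dict[str, str]) -> str:
        """Append a row; open a new file first if the schema has changed."""
        key = tuple(sorted(schema.items()))
        if key != self._current_key:
            version = len(self.registry) + 1
            self.registry[version] = dict(schema)  # keep the original source types
            self._current_key = key
            self._current_file = f"{self.table}/v{version}/part-0000.jsonl"
            self.files[self._current_file] = []
        self.files[self._current_file].append(row)
        return self._current_file

    def schema_for(self, version: int) -> Dict[str, str]:
        """Look up a schema version, standing in for a schema-registry API call."""
        return self.registry[version]


writer = SchemaAwareWriter("orders")
writer.write({"id": 1}, {"id": "INT"})
writer.write({"id": 2}, {"id": "INT"})
# A new column appears in the source, so the writer rotates to a new file.
latest = writer.write({"id": 3, "note": "gift"}, {"id": "INT", "note": "VARCHAR(255)"})
print(latest)             # orders/v2/part-0000.jsonl
print(len(writer.files))  # 2
```

Keeping one schema version per file means a consumer never has to guess which columns a given file contains; it only needs the version recorded in the registry.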

Safe by design

Datastream offers a variety of private, secure connectivity options to protect data while it is in transit, and your data is encrypted both in transit and at rest as it streams.

Google Datastream

Change data capture (CDC) integrates data and lets you act on it by reading change events (inserts, updates, and deletes) from source databases and writing them to a data destination. Datastream supports change streams from source databases such as Oracle and MySQL into BigQuery, Cloud SQL, Cloud Storage, and Spanner, enabling real-time analytics, database replication, and other use cases.
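
To make the CDC pattern concrete, the following Python sketch replays a list of change events onto a destination table keyed by primary key. It is a toy model, not how Datastream writes to BigQuery or any other destination; the `ChangeEvent` type and its integer `position` field are assumptions made for illustration. Events are applied in log order so that a later update or delete always wins.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Literal, Optional

Op = Literal["INSERT", "UPDATE", "DELETE"]


@dataclass(frozen=True)
class ChangeEvent:
    position: int                          # hypothetical, monotonically increasing log position
    op: Op
    key: Any                               # primary key of the affected row
    row: Optional[Dict[str, Any]] = None   # full row image for INSERT/UPDATE


def apply_changes(
    target: Dict[Any, Dict[str, Any]], events: List[ChangeEvent]
) -> Dict[Any, Dict[str, Any]]:
    """Replay change events, in log order, onto a destination keyed by primary key."""
    for ev in sorted(events, key=lambda e: e.position):
        if ev.op in ("INSERT", "UPDATE"):
            if ev.row is None:
                raise ValueError(f"{ev.op} at position {ev.position} has no row image")
            target[ev.key] = dict(ev.row)
        elif ev.op == "DELETE":
            target.pop(ev.key, None)
        else:
            raise ValueError(f"unknown operation: {ev.op}")
    return target


events = [
    ChangeEvent(1, "INSERT", 42, {"id": 42, "amount": 100}),
    ChangeEvent(2, "UPDATE", 42, {"id": 42, "amount": 120}),
    ChangeEvent(3, "INSERT", 7, {"id": 7, "amount": 5}),
    ChangeEvent(4, "DELETE", 7),
]
result = apply_changes({}, events)
print(result)  # {42: {'id': 42, 'amount': 120}}
```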

Consider a financial institution that uses Datastream to replicate transaction data from its operational database to BigQuery for analytics. A hardware problem causes the primary database instance to fail over unexpectedly to a replica. Because the original source is no longer available, the Datastream replication pipeline breaks. Stream recovery lets replication continue from the failover database instance, ensuring that no transaction data is lost.

Now consider an online retailer that uses Datastream to replicate customer feedback and then runs sentiment analysis on it with BigQuery ML. An extended network outage breaks the connection to the source database, and by the time connectivity is restored, some of the changes are no longer available on the database server. In this scenario, stream recovery lets the user quickly resume replication from the first available log position. Although some feedback may be missed, the retailer values having the most recent data for ongoing sentiment analysis and trend detection.

Benefits of stream recovery

Stream recovery offers several benefits, including:

Reduced data loss

Recover your stream and limit data loss after accidental deletion of log files, database instance failovers, and similar events.

Reduced downtime

Minimise downtime by quickly restoring your stream and resuming continuous CDC ingestion.

Recovery made easier

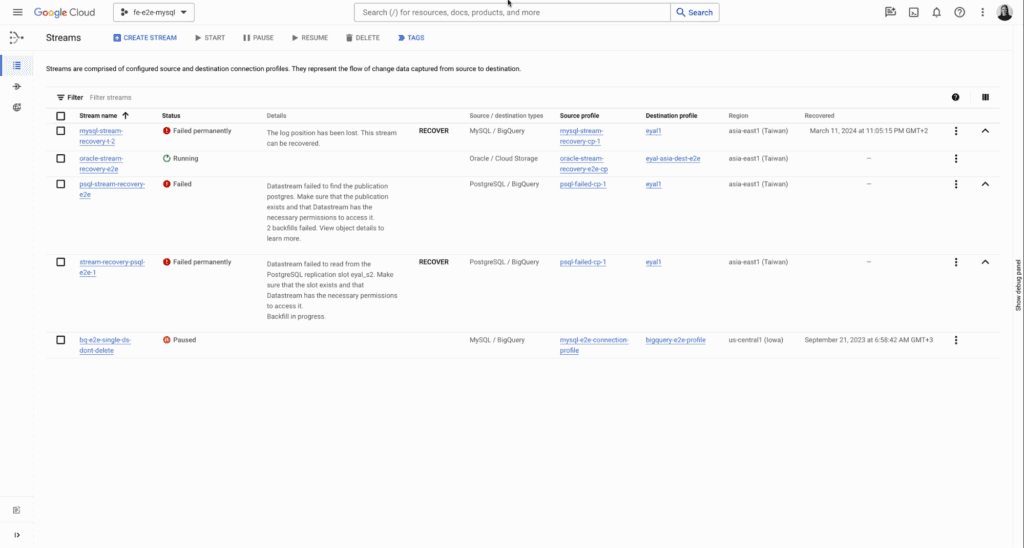

Recover your stream easily through an intuitive user interface.

Using stream recovery

Stream recovery offers several options, depending on the specific failure scenario and on whether recent log files are still available. For MySQL and Oracle sources, you can retry from the current log position, skip the current position and stream from the next available position, or skip the current position and stream from the most recent position. To ensure that no data is lost or duplicated in the destination, you can also specify the exact log position from which the stream should resume, such as a MySQL binary log file and position or an Oracle System Change Number (SCN).
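
The recovery options can be modelled as a choice of where the stream reads next. In this hypothetical Python sketch, log positions are plain integers and the source is assumed to retain only the range from `earliest_available` to `latest`; real binary log coordinates and SCNs are more involved, and the `Strategy` names are illustrative rather than Datastream API values.

```python
from enum import Enum
from typing import Optional


class Strategy(Enum):
    RETRY_CURRENT = "retry from the current position"
    NEXT_AVAILABLE = "skip to the next available position"
    MOST_RECENT = "skip to the most recent position"
    SPECIFIC = "resume from a user-specified position"


def resume_position(
    strategy: Strategy,
    current: int,
    earliest_available: int,
    latest: int,
    specific: Optional[int] = None,
) -> int:
    """Return the position a recovered stream would read next.

    `current` is where the stream failed; the source is assumed to retain
    only positions in [earliest_available, latest].
    """
    def retained(pos: int) -> bool:
        return earliest_available <= pos <= latest

    if strategy is Strategy.RETRY_CURRENT:
        # Nothing is skipped, but this only works if the position still exists.
        if not retained(current):
            raise ValueError(f"position {current} has been purged; retry is impossible")
        return current
    if strategy is Strategy.NEXT_AVAILABLE:
        # Skip the failing position; if a range was purged, jump to the oldest retained one.
        return max(current + 1, earliest_available)
    if strategy is Strategy.MOST_RECENT:
        # Skip everything up to now and stream only the newest changes.
        return latest
    if strategy is Strategy.SPECIFIC:
        if specific is None or not retained(specific):
            raise ValueError("a specific position must be given and still be retained")
        return specific
    raise ValueError(f"unsupported strategy: {strategy}")


# The stream failed at position 50, but the source now keeps only positions 100-200.
for s in (Strategy.NEXT_AVAILABLE, Strategy.MOST_RECENT):
    print(s.name, resume_position(s, current=50, earliest_available=100, latest=200))
# NEXT_AVAILABLE 100
# MOST_RECENT 200
```

Retrying preserves every change but depends on the source still holding the failed position; the skip options accept some missed changes in exchange for a running stream, which is the trade-off the retailer example makes.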

For PostgreSQL sources, you can create a new replication slot in your PostgreSQL database and then instruct Datastream to resume streaming from the new slot.

Starting a stream from a specific position

Beyond stream recovery, there are other situations in which you might need to start or resume a stream from a specific log position: for example, when the source database is upgraded or migrated, or when historical data already exists in the destination and you want to merge CDC from the point in time where the historical data ends. In these cases, you can use the stream recovery API to set a starting position before you start the stream.
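
The historical-data case comes down to one rule: apply only changes that come after the point where the historical data ends. The Python sketch below assumes integer positions and a `(position, op, key, row)` tuple layout, both hypothetical. It shows why the start position matters: replaying an event older than the export would overwrite newer historical data with a stale value.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

# Hypothetical event layout: (position, op, primary key, row image or None).
Event = Tuple[int, str, Any, Optional[Dict[str, Any]]]


def merge_cdc_from(
    snapshot: Dict[Any, Dict[str, Any]],
    events: Iterable[Event],
    start_position: int,
) -> Dict[Any, Dict[str, Any]]:
    """Apply CDC events at or after start_position on top of historical data."""
    table = {key: dict(row) for key, row in snapshot.items()}  # leave the input untouched
    for position, op, key, row in sorted(events, key=lambda e: e[0]):
        if position < start_position:
            continue  # already reflected in the historical data
        if op == "DELETE":
            table.pop(key, None)
        elif op in ("INSERT", "UPDATE") and row is not None:
            table[key] = dict(row)
        else:
            raise ValueError(f"malformed event at position {position}")
    return table


# Historical data was exported as of position 10, so CDC starts at 11.
history = {1: {"id": 1, "status": "shipped"}}
events = [
    (5, "UPDATE", 1, {"id": 1, "status": "pending"}),  # older than the export
    (11, "UPDATE", 1, {"id": 1, "status": "delivered"}),
    (12, "INSERT", 2, {"id": 2, "status": "pending"}),
]
merged = merge_cdc_from(history, events, start_position=11)
print(merged[1]["status"])  # delivered
```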

Overview of stream recovery

A running stream can encounter unrecoverable errors that cause it to be permanently marked as FAILED. These errors stop the stream and can result in data loss.

Instead of recreating the stream and backfilling historical data, you can recover a permanently failed stream by instructing it to ignore the error and continue reading ongoing events. Resetting replication to start reading from a new replication position revives a permanently failed stream.
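
The failed-and-recovered lifecycle can be illustrated with a toy state machine. The `ToyStream` class below is hypothetical and deliberately simplified (integer positions, a dict standing in for the source log, a missing key meaning a purged position); it is not the Datastream API. Reaching a purged position marks the stream FAILED and stops it, and `recover()` resets the replication position so that reading continues without recreating the stream or backfilling.

```python
from enum import Enum
from typing import Dict, List, Optional


class State(Enum):
    RUNNING = "RUNNING"
    FAILED = "FAILED"  # permanent: the stream stays stopped until it is recovered


class ToyStream:
    """Hypothetical CDC stream used only to illustrate recovery."""

    def __init__(self, start: int) -> None:
        self.state = State.RUNNING
        self.position = start  # next log position to read
        self.delivered: List[str] = []
        self.error: Optional[str] = None

    def read(self, log: Dict[int, str], head: int) -> None:
        """Deliver events up to `head`; a purged position fails the stream."""
        while self.state is State.RUNNING and self.position <= head:
            if self.position not in log:
                self.state = State.FAILED
                self.error = f"log position {self.position} is no longer available"
                return
            self.delivered.append(log[self.position])
            self.position += 1

    def recover(self, new_position: int) -> None:
        """Ignore the error and restart reading from a new replication position."""
        if self.state is not State.FAILED:
            raise RuntimeError("only a FAILED stream needs recovery")
        self.position = new_position
        self.error = None
        self.state = State.RUNNING


# The source now retains only positions 5-9, but the stream still expects position 3.
log = {p: f"event-{p}" for p in range(5, 10)}
stream = ToyStream(start=3)
stream.read(log, head=9)
print(stream.state.value, stream.error)  # FAILED log position 3 is no longer available
stream.recover(new_position=min(log))
stream.read(log, head=9)
print(stream.state.value, stream.delivered)
# RUNNING ['event-5', 'event-6', 'event-7', 'event-8', 'event-9']
```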

Get started

Stream recovery is now generally available for all Datastream sources in all Google Cloud regions, through both the Google Cloud console and the API.