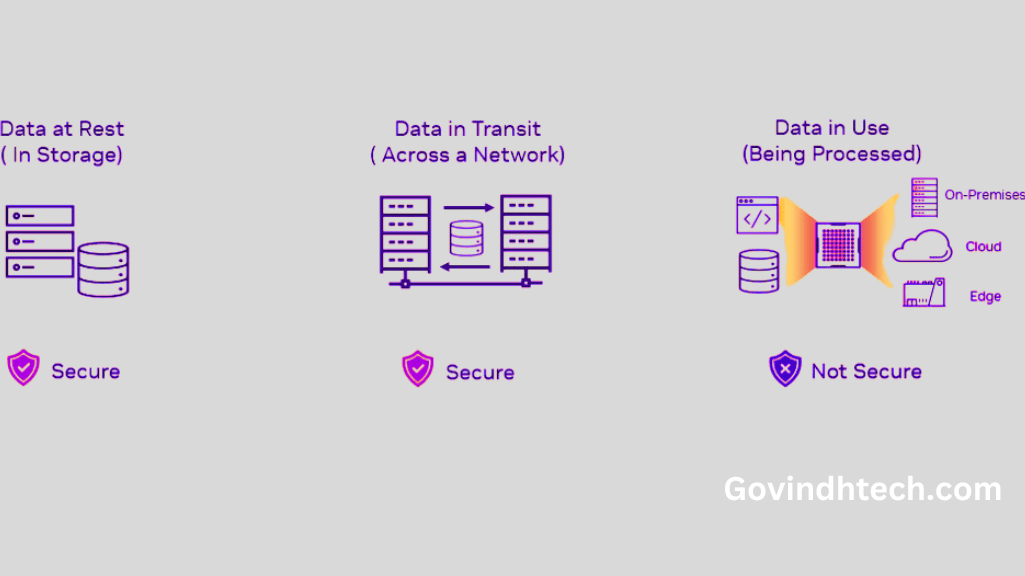

Unauthorized access and data breaches pose significant threats to organizations’ sensitive data and applications. Confidential computing has emerged to address this concern by protecting data and applications while they are in use. It does so by performing computations inside a secure, isolated environment within the processor, known as a trusted execution environment (TEE).

The TEE acts as a locked box, safeguarding data and code within the processor from unauthorized access and tampering. It provides an additional layer of security for organizations that handle sensitive data or intellectual property (IP). By blocking access to data and code from hypervisors, host operating systems, infrastructure owners, and anyone with physical access to the server, confidential computing reduces the attack surface for both internal and external threats. It secures data and IP at the lowest level of the computing stack, ensuring the hardware and firmware used for computing are trustworthy.

Extending the TEE concept to NVIDIA GPUs can significantly enhance the performance of confidential computing for AI applications. This integration enables faster and more efficient processing of sensitive data while maintaining robust security measures.

Secure AI with NVIDIA H100

NVIDIA has introduced confidential computing as a built-in hardware-based security feature in the NVIDIA H100 Tensor Core GPU. This feature enables customers in regulated industries such as healthcare, finance, and the public sector to protect the confidentiality and integrity of sensitive data and AI models in use.

With security measures integrated into the GPU architecture, organizations can build and deploy AI applications using NVIDIA H100 GPUs in on-premises, cloud, or edge environments. The confidentiality and integrity of both customer data and AI models are protected from unauthorized viewing or modification during execution. This provides peace of mind for customers, ensuring the protection of sensitive data and valuable AI intellectual property.

Confidential Computing on NVIDIA H100

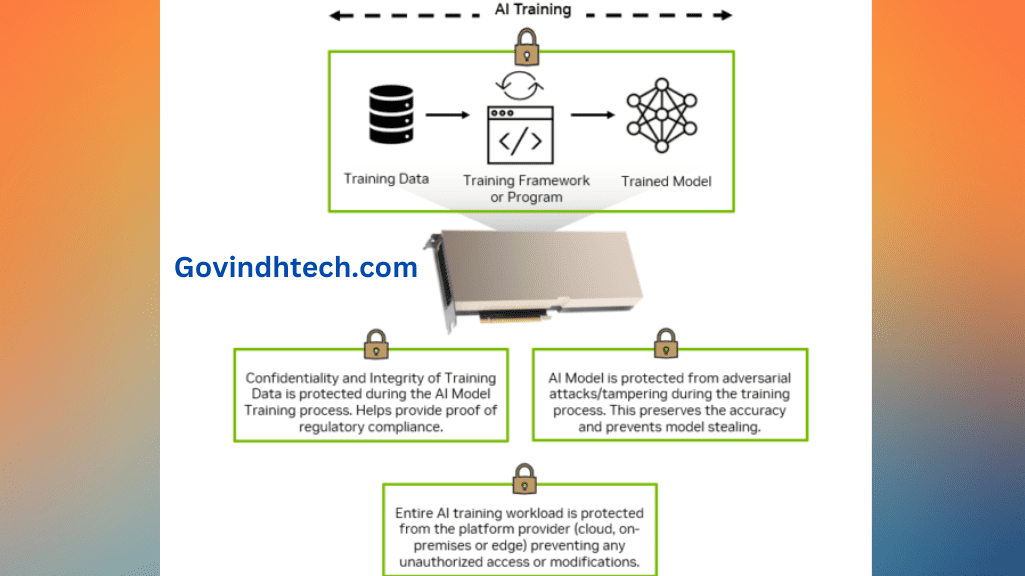

- Confidential AI Training:

  - Protecting sensitive and regulated data during AI model training.

  - Safeguarding personal health information (PHI), personally identifiable information (PII), and proprietary data.

  - Preventing unauthorized access or modifications during on-premises or hosted training processes.

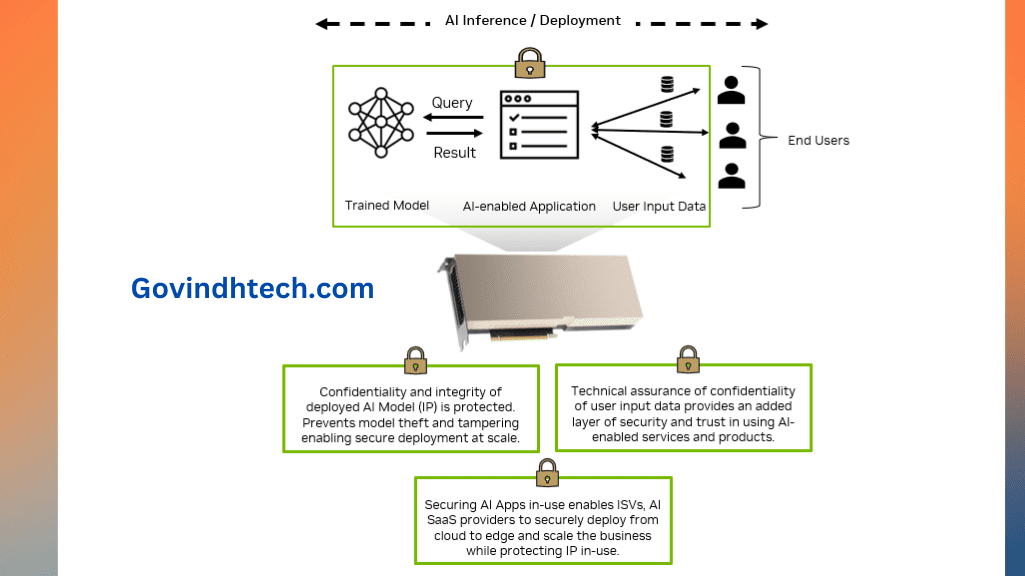

- Confidential AI Inference:

  - Ensuring privacy and regulatory compliance by protecting end-user inputs during AI model inference.

  - Securing valuable AI models from unauthorized viewing, modification, or theft.

  - Building trust with end users and assuring enterprises of AI model protection during deployment.

- AI IP Protection for ISVs and Enterprises:

  - Safeguarding proprietary AI models developed by independent software vendors (ISVs) for specific use cases.

  - Protecting AI models from tampering or theft when deployed in customer data centers or cloud environments.

  - Increasing customer trust and reducing risks associated with third-party application usage.

- Confidential Federated Learning:

  - Enabling collaboration between multiple data owners without compromising data confidentiality and integrity.

  - Leveraging secure multi-party computing for federated learning use cases.

  - Protecting local data and AI models from unauthorized access or modification during collaboration and aggregation.
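To make the aggregation step in federated learning concrete, here is a minimal sketch of weighted federated averaging in plain Python. It is an illustration only, not NVIDIA's implementation: the function name `federated_average` and the flat-list weight format are assumptions made for this example. The code performs no protection itself; in a confidential deployment, a step like this would presumably run inside the TEE so that individual client updates are not exposed to the host.

```python
def federated_average(client_weights, client_sizes):
    """Average client model weights, weighted by each client's sample count.

    client_weights: list of equal-length lists of floats (one list per client).
    client_sizes:   list of positive sample counts, one per client.
    Hypothetical helper for illustration; not part of any NVIDIA library.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share one weight shape")
        # Each client contributes in proportion to its share of the data.
        for i, value in enumerate(weights):
            averaged[i] += value * size / total
    return averaged


if __name__ == "__main__":
    # Two clients; the second holds three times as much data as the first.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Only the aggregated result leaves the function, which matches the goal stated above: data owners collaborate without sharing their local data with each other.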

NVIDIA Platforms for Accelerated Confidential Computing On-Premises

To use confidential computing on NVIDIA H100 GPUs, the system needs a CPU that supports a virtual machine (VM)-based TEE technology, such as AMD SEV-SNP or Intel TDX. Extending the VM-based TEE from the supported CPU to the H100 GPU enables full encryption of the VM memory without any code changes to the application.
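As a rough first check of the CPU prerequisite on Linux, the sketch below scans `/proc/cpuinfo` for feature flags associated with these technologies. The flag names (`sev_snp`, `tdx_guest`, `tdx_host_platform`) are assumptions based on what recent Linux kernels report and may differ or be absent on older kernels; the helper `detect_cpu_tee` is hypothetical. A missing flag does not prove the hardware lacks support, and a present flag does not prove the feature is enabled in firmware.

```python
# Assumed mapping from Linux cpuinfo flags to TEE technologies.
# Flag names depend on the kernel version and are not guaranteed.
TEE_FLAGS = {
    "sev_snp": "AMD SEV-SNP",
    "tdx_guest": "Intel TDX (guest)",
    "tdx_host_platform": "Intel TDX (host)",
}


def detect_cpu_tee(cpuinfo_text):
    """Return a sorted list of TEE technologies advertised in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":
            continue
        for flag in value.split():
            if flag in TEE_FLAGS:
                found.add(TEE_FLAGS[flag])
    return sorted(found)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            detected = detect_cpu_tee(handle.read())
        print(detected if detected else "no VM-based TEE flag found")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

Treat the result as a hint only; the platform vendor's documentation remains the authoritative source for whether a given system supports confidential computing.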

Leading OEM partners are now shipping accelerated platforms compatible with confidential computing, powered by NVIDIA H100 Tensor Core GPUs. These platforms combine NVIDIA H100 PCIe Tensor Core GPUs with AMD Milan or AMD Genoa CPUs supporting AMD SEV-SNP technology.

Partners such as ASRock Rack, ASUS, Cisco, Dell Technologies, GIGABYTE, Hewlett Packard Enterprise, Lenovo, Supermicro, and Tyan are delivering the first wave of NVIDIA platforms. These platforms enable enterprises to secure their data, AI models, and applications on-premises within their data centers.

NVIDIA’s confidential computing software stack, to be released this summer, will initially support single-GPU configurations and subsequently extend to multi-GPU and Multi-Instance GPU (MIG) setups.

With accelerated confidential computing on NVIDIA H100 GPUs, organizations can strengthen security, privacy, and regulatory compliance while keeping their AI workloads performant and scalable.