PaliGemma 2 mix: A vision-language model for multiple tasks

Google introduced PaliGemma 2, an upgraded vision-language model in the Gemma family, in December 2024. The release included pretrained checkpoints in three sizes (3B, 10B, and 28B parameters). These checkpoints can be easily fine-tuned on a wide range of vision-language tasks and domains, including image segmentation, short video captioning, scientific question answering, and text-related tasks, with high performance.

The big news is that PaliGemma 2 mix checkpoints are now available. PaliGemma 2 mix models are fine-tuned on a mix of tasks, so you can explore the model’s capabilities directly and use it out of the box for common use cases.

What’s new in PaliGemma 2 mix?

One model for multiple tasks: PaliGemma 2 mix can handle short and long captioning, optical character recognition (OCR), image question answering, object detection, and segmentation.

Optical character recognition (OCR): The model can extract text from images. For instance, given the input “ocr”, it can read text such as “WARNING DANGEROUS RIP CURRENT” from an image.

Image captioning: The model can generate captions for images. For instance, given the input “caption en”, it can produce captions such as “A cow standing on a beach next to a warning sign” or “a cow standing on a beach next to a sign that says warning dangerous rip current”.

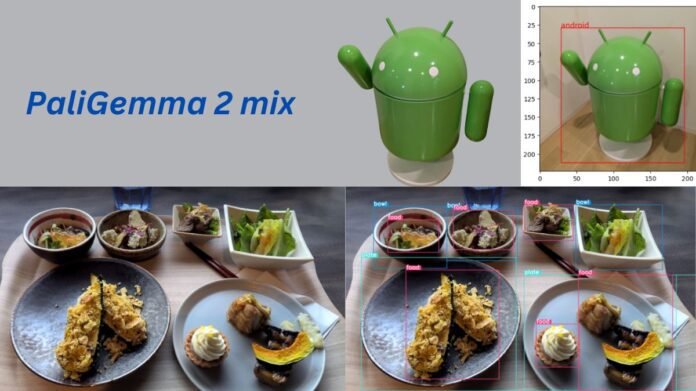

Object detection: PaliGemma 2 mix can locate objects in images. For instance, given the input “detect android” or “detect cow”, the model finds those objects in the image. It can also detect several objects at once with an input such as “detect chair ; table” or “detect food ; plate ; bowl”.

Detection

- Task: Detection (PaliGemma-2-3b-mix-224)

- Input: “detect android\n”

Result
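
For detection prompts, the model returns its answer as text rather than as a drawn overlay. The sketch below shows one way to turn such a string into pixel-space boxes. It assumes, and this is not stated in the article, that each object is encoded as four `<locNNNN>` tokens in the order y_min, x_min, y_max, x_max, each on a 0 to 1023 grid, followed by the label, with objects separated by ";". The function name `parse_detections` and the sample string are hypothetical.

```python
import re

# Assumed output format (not taken from the article): four <locNNNN> tokens
# in the order y_min, x_min, y_max, x_max on a 0..1023 grid, then the label.
# Multiple objects are assumed to be separated by ";".
_DETECTION = re.compile(
    r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^;<]+)"
)


def parse_detections(text, image_width, image_height):
    """Convert a detection string into a list of labelled pixel boxes."""
    boxes = []
    for match in _DETECTION.finditer(text):
        # Normalise each coordinate from the 1024-bin grid to the 0..1 range.
        y_min, x_min, y_max, x_max = (int(v) / 1024 for v in match.groups()[:4])
        boxes.append({
            "label": match.group(5).strip(),
            # Boxes are returned as (left, top, right, bottom) in pixels.
            "box": (
                round(x_min * image_width),
                round(y_min * image_height),
                round(x_max * image_width),
                round(y_max * image_height),
            ),
        })
    return boxes


# Hypothetical model output, for illustration only.
sample = (
    "<loc0256><loc0128><loc0768><loc0512> android ; "
    "<loc0000><loc0512><loc0512><loc1023> cow"
)
detections = parse_detections(sample, image_width=640, image_height=480)
```

Returning boxes as (left, top, right, bottom) matches what most drawing libraries expect, so the result can be passed to a rectangle-drawing call with little extra work.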

Image segmentation: The model can segment images by identifying and separating distinct regions or objects. For instance, given the input “segment cat” or “segment cow”, it segments those objects in the image.

Question answering: PaliGemma 2 mix can answer questions about images. For instance, given the input “answer en where is the cow standing?”, the model can respond “beach”.

Developer-friendly sizes: Multiple model sizes (3B, 10B, and 28B parameters) and resolutions (224px and 448px) let you pick the model that best fits your needs.
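
Three sizes and two resolutions give six possible combinations. The sketch below enumerates them as checkpoint identifiers. The `google/paligemma2-{size}-mix-{resolution}` naming pattern is an assumption based on how the checkpoints appear to be named on Hugging Face, so check the model hub for the exact identifiers. The helper `mix_checkpoint_id` is hypothetical.

```python
from itertools import product

# Sizes and resolutions as listed in the article.
SIZES = ("3b", "10b", "28b")
RESOLUTIONS = (224, 448)


def mix_checkpoint_id(size, resolution):
    """Build a checkpoint identifier for one size/resolution pair.

    The "google/paligemma2-<size>-mix-<resolution>" pattern is an assumed
    naming convention, not something the article confirms.
    """
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}, expected one of {SIZES}")
    if resolution not in RESOLUTIONS:
        raise ValueError(
            f"unknown resolution {resolution!r}, expected one of {RESOLUTIONS}"
        )
    return f"google/paligemma2-{size}-mix-{resolution}"


# Every size/resolution combination, smallest model first.
ALL_MIX_CHECKPOINTS = [
    mix_checkpoint_id(size, resolution)
    for size, resolution in product(SIZES, RESOLUTIONS)
]
```

As a rough guide, a smaller model at 224px is the cheaper starting point, while the 448px variants are likely to help more on tasks that depend on fine detail, such as reading small text.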

Use your preferred framework: Work with the frameworks and tools you already know, such as Hugging Face Transformers, Keras, PyTorch, JAX, and Gemma.cpp.
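
As one illustration, here is a minimal sketch of running a mix checkpoint with Hugging Face Transformers. It assumes that `torch`, `transformers`, and `Pillow` are installed, that you have accepted the model licence on Hugging Face, and that the checkpoint identifier `google/paligemma2-3b-mix-224` is correct. The function name `run_paligemma` and its parameters are hypothetical, and the other frameworks listed above have their own loading APIs.

```python
def run_paligemma(prompt, image_path,
                  model_id="google/paligemma2-3b-mix-224",
                  max_new_tokens=64):
    """Run one prompt against one image and return the generated text.

    The default model_id is an assumption; substitute the checkpoint you
    actually downloaded.
    """
    # Imported lazily so this file can be loaded without the heavy dependencies.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_id)
    # Loaded in default precision to keep the sketch simple; a lower-precision
    # dtype would reduce memory use but needs matching input casts.
    model = PaliGemmaForConditionalGeneration.from_pretrained(model_id)
    model.eval()

    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=prompt, images=image, return_tensors="pt")
    inputs = inputs.to(model.device)
    prompt_length = inputs["input_ids"].shape[-1]

    with torch.inference_mode():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )

    # Drop the echoed prompt tokens and decode only the newly generated part.
    return processor.decode(
        output_ids[0][prompt_length:], skip_special_tokens=True
    )
```

A call such as `run_paligemma("caption en", "cow_on_beach.png")` would then return a caption string, where `cow_on_beach.png` is a placeholder for your own image file.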

Limitations:

PaliGemma 2 mix works well across a variety of tasks, but for the best results on a specific task or domain you should fine-tune PaliGemma 2 yourself.

If you were already using the original PaliGemma mix checkpoints, you can upgrade directly to PaliGemma 2 without making any changes. The prompt determines which task the model performs. The official documentation describes the prompt syntax for each task, and the technical report gives more detail on how PaliGemma 2 was developed.
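
Because the task is selected entirely by the prompt, a small helper can keep prompt strings consistent. The sketch below covers only the prompt forms quoted in this article (“ocr”, “caption en”, “detect …”, “segment …”, “answer en …”). The function `build_prompt` and its parameters are hypothetical, and the official documentation remains the reference for the full syntax.

```python
def build_prompt(task, *, target=None, question=None, lang="en"):
    """Build a prompt string for one of the tasks shown in the article."""
    if task == "ocr":
        return "ocr"
    if task == "caption":
        return f"caption {lang}"
    if task == "detect":
        if not target:
            raise ValueError("detect needs at least one object name")
        # A single name or a list of names; several names are joined by " ; ".
        names = [target] if isinstance(target, str) else list(target)
        return "detect " + " ; ".join(names)
    if task == "segment":
        if not isinstance(target, str) or not target:
            raise ValueError("segment needs one object name")
        return f"segment {target}"
    if task == "answer":
        if not question:
            raise ValueError("answer needs a question")
        return f"answer {lang} {question}"
    raise ValueError(f"unsupported task: {task!r}")


# Prompts matching the examples in the article.
prompts = [
    build_prompt("ocr"),
    build_prompt("caption"),
    build_prompt("detect", target=["chair", "table"]),
    build_prompt("segment", target="cow"),
    build_prompt("answer", question="where is the cow standing?"),
]
```

The helper does not append the trailing newline shown in the detection example input, since whether the caller or the framework adds it depends on the tooling you use.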

Get started

Ready to explore what PaliGemma 2 mix can do? Here are several ways to try the mix model:

- Try it in a few clicks: Use the Hugging Face demo to explore what the mix model can do right away.

- Download the models: Get the mix model weights on Hugging Face and Kaggle.

- Learn how to run the model: Try the Keras inference notebook locally or directly in Google Colab.

- Deploy and tune in a few clicks: Use PaliGemma 2 mix directly in Vertex Model Garden.

Although PaliGemma 2 mix performs well on a variety of tasks, you will get the best results by fine-tuning PaliGemma 2 on your specific task or domain.

Summary

Google has released PaliGemma 2 mix, a set of checkpoints for PaliGemma 2, its upgraded vision-language model in the Gemma family. Because these models are fine-tuned on a mix of tasks, they can handle captioning, OCR, object detection, and more out of the box. PaliGemma 2 mix comes in several sizes and resolutions and works with popular frameworks such as Keras and Hugging Face Transformers.

Developers can download the weights, explore the model’s capabilities, and learn how to run the model through several platforms. Although PaliGemma 2 mix performs well, fine-tuning the pretrained PaliGemma 2 checkpoints is recommended for the best results on a specific task or domain. The release is a step towards vision-language models that are more adaptable and easier for developers to use.