
Linear Algebra for Machine learning

Machine learning and mathematics are closely related. Mathematics helps you choose the best machine learning algorithm by weighing factors such as training time, complexity, and the number of features, and every algorithm is built on mathematical ideas. Linear algebra is the branch of mathematics that studies the vectors, matrices, planes, mappings, and lines involved in linear transformations.

Linear algebra grew out of methods for identifying the unknowns in systems of linear equations and solving them quickly, and it has since become a crucial area of mathematics for data analysis. It is also one of the most essential components of machine learning applications, and learning it is a necessary first step into data science and machine learning.

In machine learning, linear algebra is a fundamental building block that allows algorithms to operate on a wide range of datasets.

Linear algebra concepts are widely used to build machine learning algorithms. Although linear algebra appears in nearly every area of machine learning, it is particularly important for the following:

- Optimization of data.

- Singular Value Decomposition (SVD), matrix operations, covariance matrices, loss functions, regularization, and support vector machine classification are among its applications.

- Implementing linear regression.

In addition to the applications listed above, linear algebra is employed in neural networks and data science.

Linear algebra and other basic mathematical concepts are the foundation of Machine Learning and Deep Learning systems. To learn and understand Machine Learning or Data Science, you need to be knowledgeable about linear algebra and optimization theory.

Note: Although linear algebra is a necessary mathematical foundation for machine learning, you do not need to master it. A solid working understanding of the subject is sufficient for machine learning.

What is the purpose of learning linear algebra before machine learning?

In machine learning, linear algebra is like the flour used in a bakery. Just as flour is the base of a cake, linear algebra is the foundation of every machine learning model. A cake also needs other ingredients, such as baking soda, cream, sugar, and eggs. Likewise, machine learning needs additional ideas such as vector calculus, probability, and optimization theory. Machine learning combines all of these mathematical ideas to build a good model.

Some advantages of studying linear algebra before machine learning are listed below:

- Better graphic experience.

- Improved statistics.

- Developing improved machine learning algorithms.

- Estimating the Machine Learning prediction.

- Easy to learn.

Better graphic experience:

Linear algebra enables improved graphics processing in machine learning, including work with images, audio, video, and edge detection, so you can work with the many graphical representations that machine learning programs support. In addition, machine learning classifiers are trained on portions of the given dataset according to their categories, and training aims to reduce their errors on that data.

Linear algebra also helps compute solutions for large, complex datasets through a family of methods known as matrix decomposition techniques.

Improved statistics:

In machine learning, statistics is a key tool for organizing and integrating data, and linear algebra makes statistical concepts easier to understand. Advanced statistical ideas can be expressed using linear algebraic methods, procedures, and notation.
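As a small example of a statistical concept written in linear algebra notation, the sample covariance matrix of a data matrix with n rows can be computed as C = Xcᵀ Xc / (n - 1), where Xc is the data with each column's mean subtracted. The sketch below assumes NumPy (the article names no library) and uses made-up data; it computes C by hand and checks it against `np.cov`.

```python
import numpy as np

# Made-up data: 5 observations (rows) of 2 variables (columns)
X = np.array([[2.0, 1.0],
              [4.0, 3.0],
              [6.0, 5.0],
              [8.0, 7.0],
              [10.0, 9.0]])

# Centre each column, then form the sample covariance matrix C = Xc^T Xc / (n - 1)
Xc = X - X.mean(axis=0)
C = Xc.T @ Xc / (X.shape[0] - 1)

# NumPy's built-in gives the same result (rowvar=False: columns are the variables)
print(np.allclose(C, np.cov(X, rowvar=False)))  # True
```

Both columns rise together in steps of 2, so every entry of C here is 10.0.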

Developing improved machine learning algorithms:

Linear algebra also helps in developing better supervised and unsupervised machine learning algorithms.

The following are a few supervised learning algorithms that can be made with Linear Algebra:

- Logistic Regression

- Decision trees

- Linear regression

- Support vector machines (SVM)

Additionally, linear algebra can be used to create the following list of unsupervised learning algorithms:

- Singular Value Decomposition (SVD)

- Clustering

- Principal Component Analysis (PCA)

With linear algebra, you can also tune the various parameters of a live project yourself and build the in-depth expertise needed to deliver results with greater accuracy and precision.

Estimating the Machine Learning prediction:

Working on a machine learning project requires a broad perspective and the ability to bring in other viewpoints, so you need to deepen your understanding of machine learning concepts. Linear algebra gives you a place to start: configuring graphs and visualizations, adjusting parameters for different machine learning algorithms, or tackling concepts that others may find hard to grasp.

Easy to learn:

Linear algebra is one of the most important and most approachable areas of mathematics. It comes into play whenever advanced mathematics and its applications are needed.

Minimum Linear Algebra for Machine Learning

Notation:

Linear algebra notation lets you read the descriptions of algorithms in books, articles, and websites and understand how they work. Even if you end up implementing an algorithm with for-loops rather than matrix operations, knowing the notation lets you piece together what the algorithm does.
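To make the link between notation and code concrete, the sketch below computes a linear model's predictions ŷ = Xw twice: once with explicit for-loops and once with a single matrix product. It assumes NumPy, and the data and weights are made-up values for illustration.

```python
import numpy as np

# Made-up design matrix X (3 samples x 2 features) and weight vector w
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
w = np.array([0.5, -1.0])

# Loop version: y_hat[i] = sum over j of X[i, j] * w[j]
y_loop = np.zeros(X.shape[0])
for i in range(X.shape[0]):
    for j in range(X.shape[1]):
        y_loop[i] += X[i, j] * w[j]

# Matrix version: the same computation written as y_hat = X w
y_matrix = X @ w

print(y_loop)    # [-1.5 -2.5 -3.5]
print(y_matrix)  # [-1.5 -2.5 -3.5]
```

Both versions give the same result; the matrix form is shorter, matches the notation on paper, and is typically much faster on large arrays.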

Operations:

Working with vectors and matrices at a higher level of abstraction clarifies ideas and improves your coding, your descriptions, and even your thinking. You need to learn the fundamental operations of linear algebra, such as addition, multiplication, inversion, and transposing matrices and vectors.
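The fundamental operations listed above map directly onto array operations. The sketch below assumes NumPy; the matrices are arbitrary small examples chosen so the results are easy to check by hand.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
B = np.array([[1.0, 0.0],
              [2.0, 1.0]])
v = np.array([1.0, 2.0])

S = A + B                  # element-wise addition
P = A @ B                  # matrix multiplication
Av = A @ v                 # matrix-vector product
At = A.T                   # transpose
A_inv = np.linalg.inv(A)   # inverse (A must be square and non-singular)

# A times its inverse should give the identity matrix (up to rounding)
print(np.allclose(A @ A_inv, np.eye(2)))  # True
```

In practice, when the goal is to solve A x = b, `np.linalg.solve(A, b)` is usually preferred over forming the inverse explicitly, because it is faster and numerically more stable.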

Matrix Factorization:

Matrix factorization, and in particular matrix decomposition techniques such as SVD and QR, is one of the most frequently recommended topics in linear algebra.
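Both factorizations named above are available in NumPy's `linalg` module (assumed here as the library). The sketch factors an arbitrary 4x3 matrix and checks that each factorization multiplies back to the original.

```python
import numpy as np

# An arbitrary 4x3 matrix used only for illustration
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0],
              [7.0, 8.0, 10.0],
              [1.0, 0.0, 1.0]])

# QR decomposition: A = Q R, where Q has orthonormal columns and R is upper triangular
Q, R = np.linalg.qr(A)

# SVD: A = U diag(s) Vt, with the singular values s sorted in descending order
U, s, Vt = np.linalg.svd(A, full_matrices=False)

print(np.allclose(Q @ R, A))                # True
print(np.allclose(U @ np.diag(s) @ Vt, A))  # True
print(np.allclose(Q.T @ Q, np.eye(3)))      # True: Q's columns are orthonormal
```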

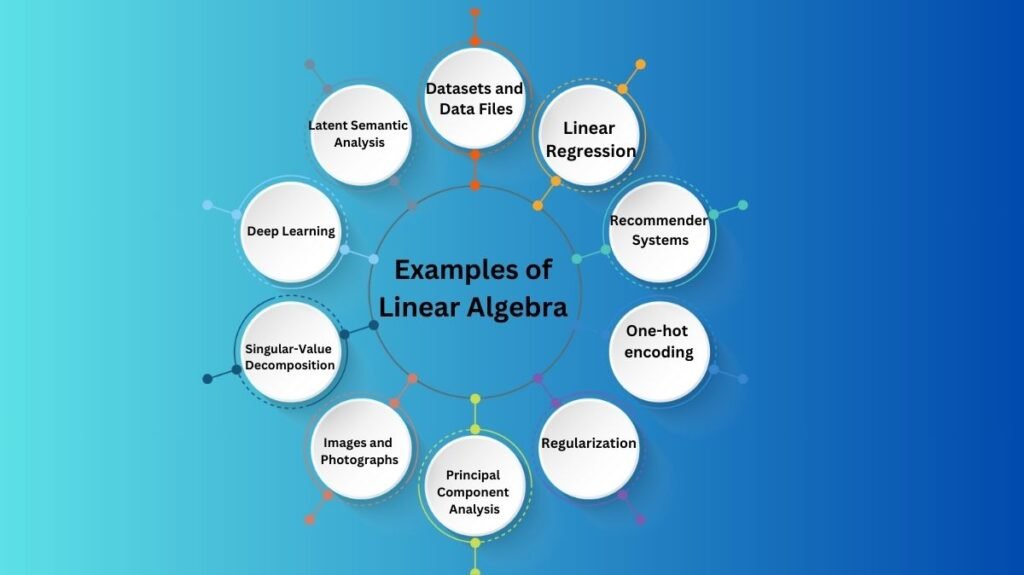

Machine Learning Examples of Linear Algebra

- Datasets and Data Files:

Every machine learning project works with a dataset, which is used to fit the machine learning model.

Every dataset has a table-like structure of rows and columns, where each row represents an observation and each column represents a feature (variable). The dataset is handled as a matrix, one of the most important data structures in linear algebra.

When the dataset is split into inputs and outputs for a supervised learning model, the inputs form a matrix (X) and the outputs form a vector (y). The vector is another crucial concept in linear algebra.
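The split into a matrix X and a vector y can be sketched with array slicing. This assumes NumPy and a hypothetical toy dataset whose last column is the target.

```python
import numpy as np

# Hypothetical toy dataset: each row is an observation, the last column is the target
data = np.array([
    [5.1, 3.5, 1.4, 0.0],
    [4.9, 3.0, 1.4, 0.0],
    [6.2, 3.4, 5.4, 1.0],
])

X = data[:, :-1]  # input features: a matrix of shape (n_samples, n_features)
y = data[:, -1]   # output target: a vector of shape (n_samples,)

print(X.shape, y.shape)  # (3, 3) (3,)
```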

- Images and Photographs:

Computer vision applications of machine learning work with images and photographs. An image is an example of a matrix from linear algebra: it is a table of pixel values with one row per unit of height and one column per unit of width (a color image has one such table per color channel).

Other image manipulations, such as cropping, scaling, and resizing, are also carried out using linear algebra notation and operations.
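The sketch below treats a tiny synthetic grayscale image as a matrix and performs cropping, a simple resize, and intensity scaling as matrix operations. It assumes NumPy; the 4x4 image is made up, and the nearest-neighbour resize is just one simple resizing method, not the only one.

```python
import numpy as np

# A synthetic 4x4 grayscale "image": each entry is a pixel intensity in [0, 255]
img = (np.arange(16).reshape(4, 4) * 16).astype(np.uint8)

print(img.shape)  # (4, 4): height x width

# Cropping is slicing out a sub-matrix (rows 1-2, columns 1-2)
crop = img[1:3, 1:3]

# Nearest-neighbour 2x resize: repeat every row and every column twice
upscaled = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

# Scaling intensities (here, darkening by half) is scalar multiplication
darker = (img.astype(np.float64) * 0.5).astype(np.uint8)
```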

- One Hot Encoding

Machine learning often involves categorical data. One-hot encoding is a common method for encoding categorical variables so that they are simpler and easier to work with.

In a table that represents a variable with one-hot encoding, each row is one example from the dataset and each column is one category. Each row is encoded as a binary vector containing zeros and a single one. This is an example of sparse representation, a subfield of linear algebra.
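A minimal sketch of one-hot encoding in plain Python, using a hypothetical "color" column. Each example becomes a binary vector with a single 1, so most entries are zero, which is why such data is a natural fit for sparse representations.

```python
# Hypothetical categorical column
colors = ["red", "green", "blue", "green"]

# Fix an order for the categories; each category gets its own column
categories = sorted(set(colors))  # ['blue', 'green', 'red']
index = {c: i for i, c in enumerate(categories)}

# One row per example, one column per category, exactly one 1 per row
one_hot = [[1 if index[c] == j else 0 for j in range(len(categories))] for c in colors]

for c, row in zip(colors, one_hot):
    print(c, row)
# red [0, 0, 1]
# green [0, 1, 0]
# blue [1, 0, 0]
# green [0, 1, 0]
```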

- Linear Regression

Linear regression is a common machine learning technique borrowed from statistics. It models the relationship between input and output variables and is used in machine learning to predict numerical values. Linear regression problems are most often solved with least squares optimization through matrix factorization techniques; two frequently used ones are LU decomposition and singular-value decomposition (SVD).
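The sketch below solves a least squares linear regression on synthetic, noise-free data generated from y = 2 + 3x, so the recovered intercept and slope should be 2 and 3. It assumes NumPy: `np.linalg.lstsq` solves the problem with an SVD-based LAPACK routine, and the second computation writes out the SVD solution w = V diag(1/s) Uᵀ y explicitly.

```python
import numpy as np

# Synthetic data from y = 2 + 3x (no noise, so the fit is exact)
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 + 3.0 * x

# Design matrix: a column of ones (intercept) next to the feature column
X = np.column_stack([np.ones_like(x), x])

# Least squares: minimize ||X w - y||^2
w, residuals, rank, sv = np.linalg.lstsq(X, y, rcond=None)

# The same solution written out with the SVD: w = V diag(1/s) U^T y
U, s, Vt = np.linalg.svd(X, full_matrices=False)
w_svd = Vt.T @ ((U.T @ y) / s)

print(w)      # approximately [2. 3.]
print(w_svd)  # approximately [2. 3.]
```

The explicit SVD version assumes X has full column rank (no zero singular values); `lstsq` also handles rank-deficient cases.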

- Regularization

In machine learning, we often look for the simplest model that achieves the best result on a given problem. Simpler models tend to generalize better from specific examples to unseen data, and they are often associated with smaller coefficient values.

Regularization is a technique for reducing the size of a model's coefficients while the model is being fit to the data. L1 and L2 regularization are the most frequently used methods. Both are based directly on vector norms from linear algebra, which measure the length or magnitude of the coefficients treated as a vector.
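The sketch below computes the L1 and L2 norms of a coefficient vector and adds them as a penalty to a mean-squared-error loss. It assumes NumPy; `penalized_loss` and the strength `lam` are hypothetical names for illustration. Note that L2 (ridge) regularization conventionally penalizes the squared L2 norm, which is what the function uses.

```python
import numpy as np

# Hypothetical model coefficients
w = np.array([3.0, -4.0, 0.0])

l1 = np.linalg.norm(w, ord=1)  # |3| + |-4| + |0| = 7.0
l2 = np.linalg.norm(w, ord=2)  # sqrt(9 + 16 + 0) = 5.0

def penalized_loss(X, y, w, lam, kind="l2"):
    """Hypothetical helper: mean squared error plus an L1 or squared-L2 penalty."""
    mse = np.mean((X @ w - y) ** 2)
    penalty = np.sum(np.abs(w)) if kind == "l1" else np.sum(w ** 2)
    return mse + lam * penalty
```

A larger `lam` pushes the optimizer toward smaller coefficients; the L1 penalty also tends to drive some coefficients exactly to zero.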

- Principal Component Analysis

Datasets often have many features, sometimes thousands, and fitting a model to so many columns is one of the more challenging tasks in machine learning. Moreover, a model built with irrelevant features is often less accurate than one built only with relevant features. Dimensionality reduction refers to the family of machine learning techniques that automatically reduce the number of columns in a dataset. Principal Component Analysis (PCA) is the most widely used of these techniques. It projects high-dimensional data onto fewer dimensions for visualization and model training, and it relies on matrix factorization from linear algebra.
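A minimal PCA sketch, assuming NumPy and computing the principal components from the SVD of the centred data matrix (one standard way to do it). The `pca` helper is a hypothetical name, and the data is synthetic: its third feature is nearly a copy of the first, so two components should capture almost all of the variance.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 100 samples, 3 features; feature 2 is feature 0 plus tiny noise
base = rng.normal(size=(100, 2))
X = np.column_stack([base[:, 0], base[:, 1], base[:, 0] + 0.01 * rng.normal(size=100)])

def pca(X, n_components):
    """Hypothetical helper: project X onto its top principal components via the SVD."""
    Xc = X - X.mean(axis=0)                  # PCA works on centred columns
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]           # principal directions, one per row
    explained_var = s**2 / (X.shape[0] - 1)  # variance along every direction
    return Xc @ components.T, components, explained_var

Z, components, explained_var = pca(X, n_components=2)
print(Z.shape)  # (100, 2): 3 columns reduced to 2
print(explained_var / explained_var.sum())  # the last share is close to zero
```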

- Singular-Value Decomposition

A common method for reducing dimensionality is singular-value decomposition, or SVD for short.

It is a matrix factorization method from linear algebra and is widely applied in many fields, including noise reduction, feature selection, and visualization.
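To illustrate the noise-reduction use, the sketch below keeps only the top k singular values and vectors (a truncated SVD), which gives the best rank-k approximation of a matrix in the Frobenius norm. It assumes NumPy; `truncated_svd` is a hypothetical helper, and the data is a synthetic rank-1 signal plus small random noise.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic rank-1 "signal" matrix plus small random noise
u = rng.normal(size=(50, 1))
v = rng.normal(size=(1, 20))
signal = u @ v
noisy = signal + 0.05 * rng.normal(size=signal.shape)

def truncated_svd(A, k):
    """Hypothetical helper: best rank-k approximation of A from its top k singular triplets."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

denoised = truncated_svd(noisy, k=1)

# Dropping the small singular components typically moves the estimate closer to the signal
err_noisy = np.linalg.norm(noisy - signal)
err_denoised = np.linalg.norm(denoised - signal)
print(err_noisy, err_denoised)
```

Here k = 1 is chosen because the synthetic signal is known to be rank 1; on real data, k is usually picked by inspecting how quickly the singular values decay.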

- Latent Semantic Analysis

A branch of machine learning called natural language processing, or NLP, deals with spoken and written language.

NLP represents text documents as large matrices based on word occurrence. For instance, the matrix columns may hold the known vocabulary terms, while the rows hold sentences, paragraphs, pages, or other units of text, and each cell holds the count or frequency of a word's occurrences. Because most words do not appear in any given piece of text, the text is represented as a sparse matrix. Applying a matrix factorization such as the singular-value decomposition to this sparse matrix distills it to its most relevant structure, which makes the documents much easier to compare, query, and use as the basis for a supervised machine learning model.

This form of data preparation is called Latent Semantic Analysis (LSA), also known as Latent Semantic Indexing (LSI).
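A toy LSA pipeline can be sketched in two steps: build a document-term count matrix, then keep the top k singular vectors from its SVD so each document becomes a short "topic" vector that can be compared with cosine similarity. This assumes NumPy and a hypothetical three-document corpus; for simplicity the matrix is stored densely, whereas real pipelines typically use sparse storage and often TF-IDF weighting instead of raw counts.

```python
import numpy as np

# Hypothetical toy corpus
docs = [
    "cats purr and cats sleep",
    "dogs bark and dogs sleep",
    "cats and dogs are pets",
]

# Vocabulary terms are the columns; each document is a row of word counts
vocab = sorted({word for doc in docs for word in doc.split()})
col = {word: j for j, word in enumerate(vocab)}
counts = np.zeros((len(docs), len(vocab)))
for i, doc in enumerate(docs):
    for word in doc.split():
        counts[i, col[word]] += 1

# LSA step: a truncated SVD keeps k latent dimensions ("topics")
k = 2
U, s, Vt = np.linalg.svd(counts, full_matrices=False)
doc_topics = U[:, :k] * s[:k]  # each document as a k-dimensional vector

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print(cosine(doc_topics[0], doc_topics[1]))
```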

- Recommender System

Recommender systems are an area of machine learning concerned with predictive modeling for product recommendations. Examples include recommending books online based on a customer's past purchases, and recommending movies and TV shows, such as those found on Amazon and Netflix.

Linear algebra is the primary foundation of recommender systems. For example, distance metrics such as Euclidean distance, or dot products, can be used to measure how similar sparse customer behavior vectors are.

Recommender systems use a variety of matrix factorization techniques, including singular-value decomposition, to query, search, and compare user data.
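The similarity measures mentioned above can be sketched on hypothetical customer rating vectors (one entry per item, 0 meaning no interaction). This assumes NumPy; cosine similarity is the dot product normalized by the vectors' lengths.

```python
import numpy as np

# Hypothetical rating vectors over 5 items (0 = no interaction)
alice = np.array([5.0, 0.0, 3.0, 0.0, 1.0])
bob = np.array([4.0, 0.0, 3.0, 0.0, 0.0])
carol = np.array([0.0, 5.0, 0.0, 4.0, 0.0])

def euclidean(a, b):
    """Euclidean distance: smaller means more similar."""
    return float(np.linalg.norm(a - b))

def cosine_similarity(a, b):
    """Normalized dot product: larger means more similar."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print(euclidean(alice, bob), euclidean(alice, carol))
print(cosine_similarity(alice, bob), cosine_similarity(alice, carol))  # alice and carol share no items, so 0.0
```

Under both measures, Bob is the closer neighbour to Alice, so items Bob liked would be reasonable candidates to recommend to her.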

- Deep Learning

Artificial Neural Networks (ANNs) are nonlinear machine learning algorithms, loosely inspired by how the brain works, that pass information from one layer to the next.

These neural networks are the subject of deep learning, which uses faster and more advanced technology to train larger networks on massive datasets. Deep learning methods achieve strong results on a variety of difficult tasks, including speech recognition and machine translation. At its core, running a neural network means multiplying and adding linear algebra data structures. Deep learning algorithms use vectors, matrices, and tensors (arrays with more than two dimensions) to hold inputs and coefficients.
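The "multiply and add" view of a neural network can be sketched as a forward pass through two dense layers. This assumes NumPy; the layer sizes are illustrative, and the weights are random (untrained), so the outputs are meaningless except for their shapes.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    """Element-wise nonlinearity: max(z, 0)."""
    return np.maximum(z, 0.0)

# Illustrative sizes: a batch of 4 inputs with 3 features, 5 hidden units, 2 outputs
X = rng.normal(size=(4, 3))
W1, b1 = rng.normal(size=(3, 5)), np.zeros(5)
W2, b2 = rng.normal(size=(5, 2)), np.zeros(2)

# Each layer is a matrix multiplication plus a bias vector; the hidden layer adds a nonlinearity
H = relu(X @ W1 + b1)  # shape (4, 5)
out = H @ W2 + b2      # shape (4, 2)

print(H.shape, out.shape)  # (4, 5) (4, 2)

# A batch of RGB images is naturally a 4-D tensor: (batch, height, width, channels)
images = np.zeros((8, 32, 32, 3))
print(images.ndim)  # 4
```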

Conclusion

In this topic, we have covered the role and significance of linear algebra in machine learning. Learning the fundamentals of linear algebra is crucial for anyone interested in machine learning, both to understand how ML algorithms work and to choose the most appropriate method for a given task.