Contents

One of the most important components of any technology or application these days is data. In machine learning initiatives, data is also essential. Since various data sets are needed for each machine learning project, data preparation can be the most important step in the process.

The following phase of the ML lifecycle involves data preparation. To get insights or generate predictions, the data is first gathered from multiple sources, then the junk data is cleaned and converted into real-time machine learning projects. Furthermore, machine learning aids in the identification of patterns in data, allowing for exact predictions, the construction of data sets, and the proper transformation of data.This topic, “Understanding Data Preparation in Machine Learning” will go over several data preparation strategies, including data pre-processing, data splitting, and other related topics. Let’s begin with a brief overview of data preparation in machine learning.

What is Data Preparation?

In machine learning projects, data preparation is the process of gathering, integrating, cleaning, and converting unprocessed data in order to make exact predictions.

Data preparation can alternatively be called “data wrangling,” “data cleaning,” “data pre-processing,” or “feature engineering.” It is the next phase of the machine learning process, following data collection.

Data preparation is specific to the data, project objectives, and methods to be utilized in data modeling procedures.

Prerequisites of Data Preparation

Everyone must investigate a few critical activities while working with data during the data preparation stage. They are as follows:

Data Cleaning:Identification of errors and their rectification or improvement are part of this work.

Feature Selection:We must determine which input data variables are most crucial or pertinent to the model.

Data Transforms: Data transformation is the process of transforming unprocessed data into a format that is appropriate for the model.

Feature Engineering: Feature engineering is the process of creating new variables from an already accessible dataset.

Dimensionality Reduction:During the dimensionality reduction process, higher dimensions are converted into lower dimension features without damaging the data.

Data Preparation in Machine Learning

The process of cleaning and modifying raw data so that machine learning algorithms can produce accurate predictions is known as data preparation. Even while data preparation is thought to be the most difficult step in machine learning, it actually makes the process simpler in real-time applications.

The following problems have been reported during the machine learning data preparation step:

Missing data: Most databases contain incomplete records or missing data. Sometimes records have blank cells, values (like NULL or N/A), or a specific character (like a question mark) in place of the necessary data.

Outliers or Anomalies: When ML algorithms receive data from unidentified sources, they are sensitive to the distribution and range of values. These variables can degrade the model’s performance as well as the machine learning training system as a whole. Therefore, it is crucial to identify these anomalies or outliers using methods like visualization.

Unstructured data format:It is necessary to extract data from multiple sources and convert it into a different format. So, always seek advice from subject-matter experts or import data from reliable sources before launching an ML project.

Limited Features:It is required to import data from numerous sources for feature enrichment or to build multiple features in datasets because data from a single source can only include restricted features.

Understanding feature engineering:By adding new content to the ML models, features engineering improves model performance and prediction accuracy.

Why is Data Preparation important?

A particular data format is needed for every machine learning project. To do this, datasets must be thoroughly prepared before being used in projects. Predictions can occasionally be less accurate or inaccurate due to missing or incomplete information in data sets. Additionally, some data sets have less business context and are clean but not sufficiently structured, such as aggregated or pivoted. Therefore, data preparation is required to change raw data after it has been collected from multiple data sources.

The following are some significant advantages of data preparation in machine learning:

- In many analytics processes, it aids in producing accurate prediction results.

- It greatly lowers the likelihood of errors and assists in identifying problems or faults in the data.

- It improves one’s ability to make decisions.

- It lowers the whole project cost (including the cost of data maintenance and analysis).

- Duplicate content can be eliminated to make it valuable for several uses.

- As a result, the model operates better.

Steps in Data Preparation Process

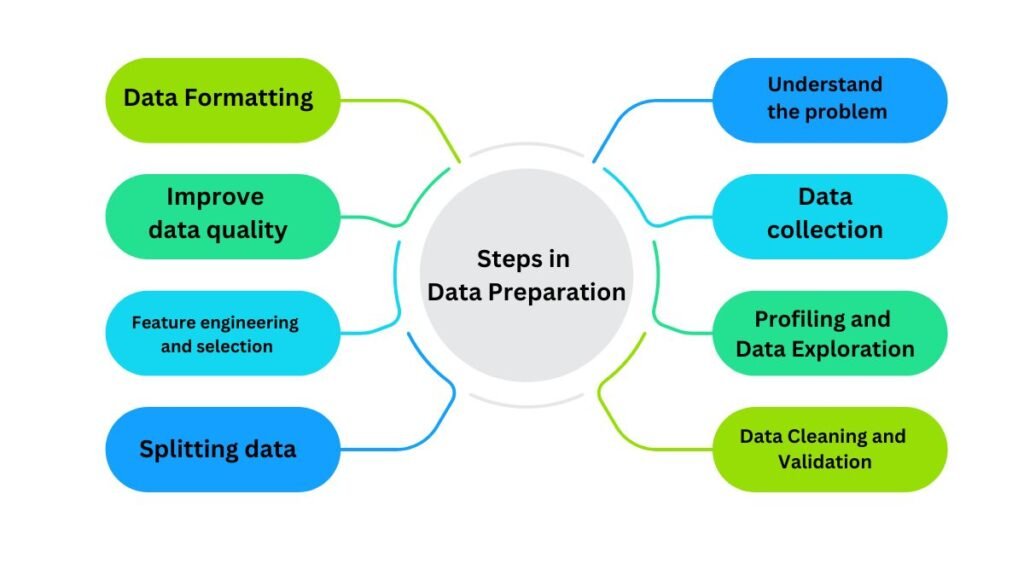

One of the most important phases in the development of a machine learning project is data preparation, which calls for a certain set of actions including several tasks. Various machine learning experts and professionals have recommended the following crucial phases for the data preparation process in machine learning:

1.Understand the problem: This is one of the crucial phases in preparing data for a machine learning model, where we need to understand the actual problem and attempt to resolve it. We need comprehensive knowledge on every topic, including what to do and how to accomplish it, in order to create a better model. Additionally, it works incredibly well to keep clients without putting in a lot of work.

2.Data collection: The most common step in the data preparation process is undoubtedly data collection, when data scientists must gather information from a variety of possible sources. These data sources could come from third-party providers or from within the company. It is advantageous to collect data in order to lessen and mitigate bias in the machine learning model; therefore, always assess data before collecting it and make sure that the data set was gathered from a variety of individuals, places, and viewpoints.

Through data collection, the following frequent issues can be resolved:

- It is useful to identify the correct attributes in the string for the .csv file format.

- It is used to convert highly nested data structures in files like XML or JSON into tabular format.

- It is useful for easier scanning and pattern recognition in data collections.

- Data collection is a practical step in machine learning that entails acquiring relevant data from external repositories.

3.Profiling and Data Exploration:After studying and gathering data from multiple sources, it is time to investigate patterns, outliers, exceptions, erroneous, inconsistent, missing, or skewed data, and so on. Although source data will yield all model results, it does not contain any hidden biases. Data exploration aids in the identification of problems such as collinearity, which indicates the need for data set standardization and other data transformations.

4.Data Cleaning and Validation: Inconsistencies, outliers, anomalies insufficient data, and other issues can be detected and resolved using data cleaning and validation approaches. Clean data allows for the discovery of useful patterns and information in data while excluding irrelevant material in datasets. It is critical to create high-quality models, and missing or incomplete data is one of the most obvious examples of inadequate data. Missing data always decreases model prediction accuracy and performance, thus data must be cleaned and validated using various imputation procedures to fill unfilled fields with statistically relevant substitutes.

5.Data Formatting: After cleaning and validating the data, the next step is to determine whether it is correctly formatted or not. Incorrect data formatting can aid in the development of a high-quality model.Because data comes from a variety of sources and is occasionally updated manually, there is a significant risk of discrepancies in the data format. For example, suppose you obtained data from two sources and one of them updated the product’s pricing to USD10.50 while the other updated it to $10.50. Similarly, there could be errors in their spelling, abbreviation, etc. This type of data generation results in inaccurate forecasts. To reduce these problems, format your data in an inconsistent manner utilizing input formatting standards.

6.Improve data quality: Quality is an important element in developing high-quality models. Quality data contributes to fewer errors, missing data, extreme values, and outliers in datasets. We may comprehend it using an example like, One dataset contains columns with First Name and Last NAME, while another contains a column called as a customer that combines First and Last Name. In such circumstances, clever machine learning algorithms must be able to match these columns and join the dataset to provide a single perspective of the consumer.

7.Feature engineering and selection: Feature engineering is the process of choosing, changing, and transforming raw data into important features or the most relevant variables in supervised machine learning.Feature engineering allows you to construct a better predictive model that generates right forecasts.

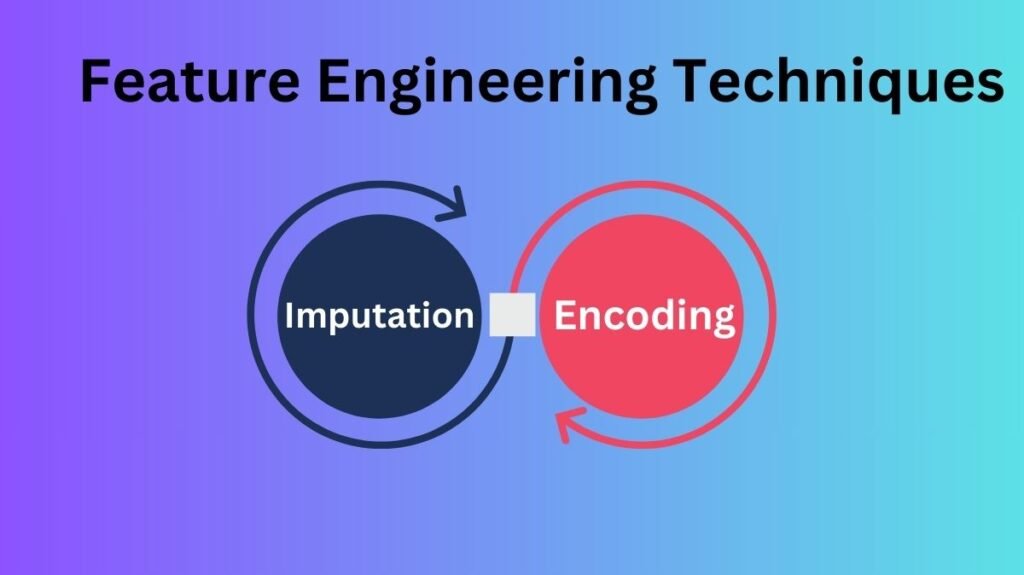

For example, data can be broken down into several pieces to collect more particular information, such as examining marketing success by day of the week rather than month or year.In this scenario, separating the day as a distinct categorical value from the data (for example, “Mon; 07.12.2021”) may provide the algorithm with more relevant information. Machine learning employs a variety of feature engineering techniques, including:

- Imputation: The process of filling in missing fields in datasets is known as feature imputation. It is crucial since missing data in the dataset prevents the majority of machine learning models from functioning. However, methods like single value imputation, multiple value imputation, K-Nearest neighbor, row deletion, etc., can help lessen the issue of missing values.

- Encoding: The process of converting string values into numeric form is known as feature encoding. Since all ML models require all values to be in numeric representation, this is crucial. Label encoding and One Hot Encoding (sometimes called get_dummies) are examples of feature encoding.

8.Splitting data: The final stage after feature engineering and selection is to divide your data into training and evaluation sets. Additionally, to guarantee appropriate testing, always use non-overlapping subsets of your data for the training and evaluation sets.

Conclusion

One important component of creating top-notch machine learning models is data preparation. Data preparation enables us to sample and implement machine learning models by cleaning, organizing, combining, and exploring data. It’s crucial because the majority of machine learning algorithms require numerical data in order to minimize errors and statistical noise. The significance of data preparation in developing machine learning and predictive modeling projects, among other things, has been covered in this topic.