What is Regression Analysis?

Regression analysis is a statistical technique that models the relationship between a dependent (target) variable and one or more independent (predictor) variables. More specifically, it helps us understand how the value of the dependent variable changes when one independent variable varies while the other independent variables are held fixed. It predicts continuous, real-valued quantities such as temperature, age, income, and price.

Regression is a supervised learning technique that quantifies the relationship between variables and predicts a continuous output variable from one or more predictor variables. Its main uses include prediction, forecasting, time series modeling, and investigating cause-and-effect relationships between variables.

Here are a few examples of regression:

- Rainfall forecasting based on temperature and other variables

- Finding market trends

- Forecasting traffic accidents caused by reckless driving

The following terms are associated with regression analysis:

Dependent Variable: The dependent variable is the quantity we want to predict or understand. It is also called the target variable.

Independent Variable: Independent variables, also called predictors, are the factors that influence the dependent variable or are used to predict its value.

Outliers: An outlier is an observation whose value is exceptionally high or low compared with the other observed values. Outliers can distort the fitted model, so they should be identified and handled before the results are trusted.

Multicollinearity: Multicollinearity occurs when the independent variables are highly correlated with one another. It should be reduced where possible because it makes it hard to rank which variable has the most influence on the target.

Underfitting and Overfitting: Overfitting occurs when the model performs well on the training dataset but poorly on the testing dataset. Underfitting occurs when the model performs poorly even on the training data.

Why do we use Regression Analysis?

As previously stated, regression analysis predicts a continuous variable. Many real-world scenarios require predictions about the future, such as weather conditions, sales projections, and marketing trends. Such cases call for a technique that produces reliable numerical predictions, and regression analysis, a statistical approach used in machine learning and data science, is one such technique.

Here are a few more justifications for doing regression analysis:

- Regression estimates the relationship between the target and the independent variables.

- It is employed to identify data trends.

- It aids in the prediction of continuous or real values.

- Regression analysis helps us identify the most and least important factors and how each factor influences the target.

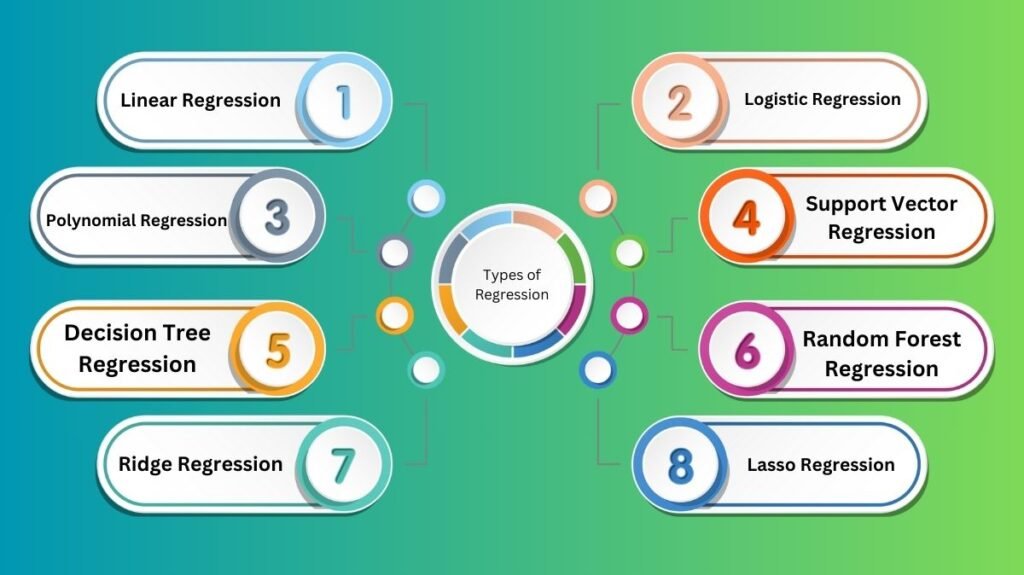

Types of Regression

Several types of regression are used in data science and machine learning. Each type suits different settings, but at their heart all regression methods examine the effect of the independent variables on the dependent variable. Here, we will cover the key types listed below:

- Linear Regression

- Logistic Regression

- Polynomial Regression

- Support Vector Regression

- Decision Tree Regression

- Random Forest Regression

- Ridge Regression

- Lasso Regression

Linear Regression:

- Linear regression is a statistical regression method used for predictive analysis.

- It is one of the simplest regression techniques and models the relationship between continuous variables.

- It is used to solve regression problems in machine learning.

- As the name suggests, linear regression models a linear relationship between the independent variable (X-axis) and the dependent variable (Y-axis).

- With a single input variable (x), the method is called simple linear regression. With several input variables, it is called multiple linear regression.
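As a rough illustration of simple linear regression, the sketch below fits a straight line by ordinary least squares using only the Python standard library. The function name `fit_simple_linear` and the sample data are made up for this example; least squares is assumed as the fitting criterion, which the text above does not specify.

```python
def fit_simple_linear(xs, ys):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data that lies exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_simple_linear(xs, ys)
prediction = slope * 5.0 + intercept  # predict y for an unseen x = 5
```

With several input variables, the same idea generalizes to multiple linear regression, where one weight is fitted per input.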

Logistic Regression:

- Logistic regression is another supervised learning technique, used to solve classification problems. In classification problems, the dependent variable takes discrete or binary values, such as 0 or 1.

- For example, logistic regression works with categorical targets such as 0 or 1, Yes or No, True or False, and Spam or Not Spam.

- It is a predictive analysis technique based on the concept of probability.

- Logistic regression counts as a type of regression because it fits a linear combination of the inputs, but it is used differently from linear regression: its output is a class probability rather than a continuous value.

- Logistic regression uses the logistic function, also called the sigmoid, to map the linear combination of inputs to a value between 0 and 1. The data is modeled using this sigmoid function, and the output is interpreted as the probability of the positive class.
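To make the role of the sigmoid concrete, here is a minimal sketch of how a logistic regression model with one input could turn a linear score into a probability and then into a class label. The weight, bias, and 0.5 threshold are hypothetical values chosen for illustration, not fitted parameters.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, weight, bias):
    """Probability of the positive class for a single input x."""
    return sigmoid(weight * x + bias)


def predict_label(x, weight, bias, threshold=0.5):
    """Convert the probability into a 0/1 label using a decision threshold."""
    return 1 if predict_proba(x, weight, bias) >= threshold else 0


# Hypothetical parameters, assumed to have been learned elsewhere
weight, bias = 1.5, -3.0

p_low = predict_proba(0.0, weight, bias)   # linear score -3, probability near 0
p_mid = predict_proba(2.0, weight, bias)   # linear score 0, probability exactly 0.5
p_high = predict_proba(4.0, weight, bias)  # linear score 3, probability near 1
labels = [predict_label(x, weight, bias) for x in (0.0, 2.0, 4.0)]
```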

Polynomial Regression:

- Polynomial regression is a regression method that models a non-linear dataset using a linear model.

- It is similar to multiple linear regression, except that it fits a non-linear curve between x and the corresponding values of y.

- Suppose the datapoints in a dataset do not follow a straight line. Linear regression will not fit those datapoints well, so polynomial regression is needed.

- Polynomial regression transforms the original features into polynomial features of a chosen degree and then fits a linear model to them. The resulting best-fit line is a polynomial curve through the data points.

Note: Polynomial regression differs from multiple linear regression in that it uses a single variable raised to different degrees rather than several variables of the same degree.
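The two steps described above, expanding x into polynomial features and then fitting a linear model, can be sketched as follows. This is an illustrative standard-library version that solves the least-squares normal equations with a small Gaussian elimination routine; the helper names and the sample data are assumptions made for this example.

```python
def polynomial_features(x, degree):
    """Expand a single value x into [1, x, x^2, ..., x^degree]."""
    return [x ** d for d in range(degree + 1)]


def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (m[r][n] - tail) / m[r][r]
    return solution


def fit_polynomial(xs, ys, degree):
    """Least-squares fit of a linear model on the polynomial features."""
    rows = [polynomial_features(x, degree) for x in xs]
    k = degree + 1
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(rows, ys)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated from y = 1 + 2x + 3x^2
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [1 + 2 * x + 3 * x * x for x in xs]

coeffs = fit_polynomial(xs, ys, degree=2)  # approximately [1, 2, 3]
```

The model stays linear in its coefficients even though the fitted curve is not a straight line in x, which is why a linear solver is enough.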

Support Vector Regression:

- The support vector machine is a supervised learning technique that can be applied to both regression and classification problems. When applied to regression problems, it is called Support Vector Regression (SVR).

- Support Vector Regression works with continuous variables.

A few terms that are utilized in Support Vector Regression are listed below:

Kernel: A function that maps lower-dimensional data into a higher-dimensional space.

Hyperplane: In SVM, the hyperplane is the line that separates two classes. In SVR, it is the line used to predict the continuous variable, and it covers the majority of datapoints.

Boundary line: The boundary lines are the two lines drawn on either side of the hyperplane that create a margin for the datapoints.

Support vectors: In SVM, support vectors are the datapoints nearest to the hyperplane. In SVR, they are the datapoints that lie on or outside the boundary lines and therefore determine the fit.

In SVR, the goal is to find the hyperplane whose margin covers the greatest number of datapoints. In other words, SVR tries to keep as many datapoints as possible within the boundary lines, with the hyperplane serving as the best-fit line.
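One common way to express "datapoints within the boundary lines" is the epsilon-insensitive loss, where epsilon is the distance from the hyperplane to each boundary line. The sketch below assumes that standard formulation, which the text does not spell out, and uses a hypothetical linear hyperplane and made-up points. Errors smaller than epsilon cost nothing, while points outside the margin are penalized by how far outside they fall.

```python
def predict(x, weight, bias):
    """Value of the hyperplane (here a straight line) at x."""
    return weight * x + bias


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the margin, linear in the distance beyond it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# Hypothetical hyperplane y = 2x + 1 with boundary lines 0.5 above and below it
weight, bias, epsilon = 2.0, 1.0, 0.5

# (x, y) pairs: the first two fall inside the margin, the third lies outside
points = [(1.0, 3.2), (2.0, 5.0), (3.0, 8.0)]

losses = [
    epsilon_insensitive_loss(y, predict(x, weight, bias), epsilon)
    for x, y in points
]
```

Only the third point contributes to the loss, so only points like it would influence where the hyperplane ends up.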

Decision Tree Regression:

- A decision tree is a supervised learning approach that can be applied to both regression and classification problems.

- It can handle both categorical and numerical data.

- Decision tree regression builds a tree-like structure in which each internal node represents a “test” on an attribute, each branch represents an outcome of that test, and each leaf node represents the final decision or predicted value.

- A decision tree is built starting from the root node, or parent node, which holds the whole dataset. The root splits into left and right child nodes, which are subsets of the dataset. Each child node is split further into its own children and so becomes the parent node of those nodes.
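The splitting step can be illustrated with a single split on one numerical feature. The sketch below assumes the split is chosen to minimize the total squared error of the two child nodes, a common criterion for regression trees that the text does not name, and predicts the mean target of whichever child a new point falls into. The function names and data are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def sum_squared_error(values):
    """Squared error of predicting the mean for every value in a node."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(xs, ys):
    """Return (threshold, left_mean, right_mean) with the lowest total squared error.

    Assumes xs contains at least two distinct values.
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]    # left child: x <= threshold
        right = [y for _, y in pairs[i:]]   # right child: x > threshold
        error = sum_squared_error(left) + sum_squared_error(right)
        if best is None or error < best[0]:
            best = (error, threshold, mean(left), mean(right))
    return best[1:]


# Hypothetical dataset with two clearly separated groups
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 5.0, 5.0, 20.0, 20.0, 20.0]

threshold, left_mean, right_mean = best_split(xs, ys)
prediction = left_mean if 2.5 <= threshold else right_mean  # predict for x = 2.5
```

A full tree would apply `best_split` again to each child node until a stopping rule, such as a maximum depth, is reached.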

Random Forest Regression:

- Random Forest, one of the most powerful supervised learning algorithms, is capable of performing regression and classification tasks.

- Random Forest regression is an ensemble learning technique that combines several decision trees and predicts the final output by averaging the output of each tree.

- Random Forest uses an ensemble technique called Bagging, or Bootstrap Aggregation, in which the individual decision trees are built independently of one another and can run in parallel.

- Because each tree is trained on a random subset of the dataset, Random Forest regression helps reduce overfitting in the model.
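The bagging idea can be sketched with very small trees. In the example below, each "tree" is a one-split stump trained on a bootstrap sample (rows drawn with replacement), and the ensemble prediction is the average over all stumps. This is a simplified illustration rather than a full Random Forest: real implementations grow deeper trees and also sample features at random. All names, the seed, and the data are assumptions for this example.

```python
import random


def fit_stump(xs, ys):
    """Fit a one-split regression tree and return it as a predict function."""
    pairs = sorted(zip(xs, ys))
    overall = sum(ys) / len(ys)
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        error = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or error < best[0]:
            best = (error, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    if best is None:  # the sample contained a single distinct x value
        return lambda x: overall
    _, threshold, lm, rm = best
    return lambda x: lm if x <= threshold else rm


def fit_bagged_stumps(xs, ys, n_trees, seed):
    """Train each stump independently on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return trees


def predict(trees, x):
    """Ensemble prediction: the average of the individual tree outputs."""
    return sum(tree(x) for tree in trees) / len(trees)


# Hypothetical noisy data with a low group and a high group
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 6.0, 5.5, 20.0, 21.0, 19.5]

trees = fit_bagged_stumps(xs, ys, n_trees=25, seed=0)
low = predict(trees, 2.0)
high = predict(trees, 11.0)
```

Because the stumps do not depend on one another, the loop in `fit_bagged_stumps` could be run in parallel without changing the result.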

Ridge Regression:

- Ridge regression is a more robust variant of linear regression that introduces a small amount of bias in order to obtain better long-term predictions.

- The amount of bias added to the model is called the Ridge Regression penalty. This penalty term is computed by multiplying lambda by the sum of the squared weights of the individual features.

- When the independent variables are highly collinear, ordinary linear or polynomial regression gives unstable estimates; Ridge regression can be used to address this.

- Ridge regression is a regularization method used to reduce the complexity of the model. It is also called L2 regularization.

- It also helps solve problems in which there are more parameters than samples.
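For a single feature and no intercept, the ridge solution has a simple closed form, which makes the effect of the penalty easy to see. The sketch below assumes the objective sum((y - w*x)^2) + lambda * w^2; the function names and data are hypothetical.

```python
def ridge_penalty(weights, lam):
    """L2 penalty: lambda times the sum of squared weights."""
    return lam * sum(w ** 2 for w in weights)


def fit_ridge_1d(xs, ys, lam):
    """Weight w minimizing sum((y - w*x)^2) + lam * w^2 (single feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical data lying exactly on y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = fit_ridge_1d(xs, ys, lam=0.0)    # no penalty: ordinary least squares
w_ridge = fit_ridge_1d(xs, ys, lam=14.0)   # penalty shrinks the weight toward 0
w_strong = fit_ridge_1d(xs, ys, lam=100.0) # stronger penalty: smaller, but never exactly 0
penalty = ridge_penalty([w_ridge], lam=14.0)
```

Adding lambda to the denominator is also what keeps the solution well defined when the features alone do not pin down a unique weight.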

Lasso Regression:

- Lasso regression is another regularization method used to reduce the complexity of the model.

- It is similar to Ridge Regression, except that the penalty term contains the absolute values of the weights rather than their squares.

- Because it uses absolute values, it can shrink a slope to exactly 0, whereas Ridge Regression can only shrink it close to 0.

- L1 regularization is another name for it.
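The way an absolute-value penalty drives a weight to exactly zero can be seen in the single-feature case, where the solution is given by soft-thresholding. The sketch below assumes the objective sum((y - w*x)^2) + lambda * |w| with no intercept; the function names and data are hypothetical.

```python
def soft_threshold(value, threshold):
    """Shrink value toward 0 by threshold, returning exactly 0 inside the band."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def fit_lasso_1d(xs, ys, lam):
    """Weight w minimizing sum((y - w*x)^2) + lam * |w| (single feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam / 2.0) / sxx


# Hypothetical data lying exactly on y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = fit_lasso_1d(xs, ys, lam=0.0)     # no penalty: ordinary least squares
w_lasso = fit_lasso_1d(xs, ys, lam=28.0)    # moderate penalty shrinks the weight
w_zero = fit_lasso_1d(xs, ys, lam=100.0)    # strong penalty removes the feature entirely
```

A weight of exactly zero means the feature drops out of the model, which is why Lasso is often used for feature selection.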