An Introduction to the Bootstrap Method

The bootstrap method is a powerful resampling approach commonly used in machine learning and statistics. It enables practitioners to estimate the sampling distribution of a statistic by repeatedly sampling with replacement from the observed data. Bootstrap methods, introduced by Bradley Efron in 1979, have become essential in a variety of applications, including model validation, variance estimation, and ensemble learning approaches like bagging.

In this post, we will look at the notion of bootstrapping, its usefulness in machine learning, its main variants, its applications, and a practical implementation in Python.

What is the Bootstrap Method?

The Bootstrap Method, also known as Bootstrapping, is a statistical resampling strategy that involves repeatedly sampling from a given data set to generate many simulated samples. According to statistician Jim Frost, this procedure is used to calculate standard errors and confidence intervals, as well as to test hypotheses. In practice, bootstrapping draws observations with replacement, many times over, from a data set that stands in for the population. It can be used to estimate summary statistics such as the mean and variance, and it is commonly used in machine learning to evaluate model performance on unseen data.

This method is valuable as an alternative to traditional approaches, such as classical hypothesis testing, because it is simple to carry out while avoiding some of their disadvantages.

In statistical inference, the central quantities are the sampling distribution and the standard error of the statistic of interest. In the traditional, large-sample approach, a sample of size n is drawn from the population, sample statistics are calculated, and conclusions about the population parameters are inferred from them. In practice, however, only a single sample is available, so bootstrapping is a viable strategy for drawing conclusions from the data at hand.

Bootstrap Resampling Method

The bootstrap resampling method is a statistical approach that estimates a statistic’s sampling distribution by resampling from the original dataset with replacement. For a dataset of size 𝑛, numerous bootstrap samples of the same size 𝑛 are drawn, and the statistic of interest (e.g. mean, median) is computed on each. The resulting estimates have a distribution that approximates the true sampling distribution, allowing for confidence interval calculation and hypothesis testing. Bootstrap is widely used in machine learning, econometrics, and bioinformatics because of its simplicity and robustness, particularly when typical parametric assumptions fail.

How Does Bootstrapping Work?

Bootstrapping requires the following steps:

- Generate a bootstrap sample from a dataset of size n by picking n observations at random with replacement.

- Calculate the desired statistic (mean, standard deviation, model accuracy, etc.) using the resampled dataset.

- Repeat the first two steps many times (usually 1,000 or more).

- Examine the distribution of the calculated statistics to estimate properties of the original statistic, such as its standard error or confidence interval.
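The steps above can be sketched as a small reusable function. This is a minimal illustration rather than a reference implementation: the function name `bootstrap_statistic`, its parameters, and the sample values are all made up for this example.

```python
import numpy as np

def bootstrap_statistic(data, stat_fn, n_iterations=1000, seed=0):
    """Return the bootstrap distribution of stat_fn over resamples of data.

    Hypothetical helper written for this post, not a library function.
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = len(data)
    estimates = np.empty(n_iterations)
    for i in range(n_iterations):
        # Draw n observations at random, with replacement
        resample = rng.choice(data, size=n, replace=True)
        # Compute the statistic on the resampled data
        estimates[i] = stat_fn(resample)
    # The caller examines this distribution (spread, percentiles, ...)
    return estimates

values = np.array([2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 4.9])  # made-up data
medians = bootstrap_statistic(values, np.median)
print("Bootstrap standard error of the median:", medians.std(ddof=1))
```

Passing the statistic as a function keeps the resampling loop unchanged whether the quantity of interest is a mean, a median, or a model metric.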

Why Is Bootstrapping Important in Machine Learning?

Bootstrapping is important in machine learning for a variety of reasons.

Estimating the Sampling Distribution: When theoretical distributions are difficult to calculate, bootstrapping offers an empirical approximation.

Model Evaluation: Bootstrapping is used in resampling-based validation approaches, such as the 0.632 bootstrap method, to estimate model performance.

Ensemble Learning: Bootstrapping provides the foundation for bagging (Bootstrap Aggregating), which improves model robustness.

Variance Reduction: Bootstrapping helps quantify model variance and build confidence intervals, and averaging over resamples (as in bagging) reduces that variance.

Types of Bootstrap Methods

There are several varieties of bootstrapping strategies, each tailored to a certain use case.

Basic (non-parametric) Bootstrap

The typical bootstrap method involves drawing samples with replacement and computing the statistic of interest numerous times to estimate confidence intervals or variance.

Parametric Bootstrap

Instead of resampling from the actual data, the parametric bootstrap fits an assumed probability distribution to the data and generates synthetic samples from the fitted distribution. This method is effective when the form of the underlying distribution is known but the sample size is small.
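As a rough sketch of the parametric variant, the example below assumes the data are normally distributed, fits the mean and standard deviation, and simulates new datasets from that fitted normal. The normal model, the sample size of 15, and all variable names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
observed = rng.normal(loc=50, scale=10, size=15)  # small illustrative sample

# Fit the assumed model (here: a normal distribution) to the observed data
mu_hat = observed.mean()
sigma_hat = observed.std(ddof=1)

n_iterations = 2000
# Simulate synthetic datasets from the fitted distribution, one per row
synthetic = rng.normal(loc=mu_hat, scale=sigma_hat,
                       size=(n_iterations, observed.size))
# Recompute the statistic of interest (the sample mean) on each dataset
param_means = synthetic.mean(axis=1)

lower, upper = np.percentile(param_means, [2.5, 97.5])
print(f"Parametric bootstrap 95% CI for the mean: ({lower:.2f}, {upper:.2f})")
```

If the assumed distribution is a poor fit, the resulting interval inherits that error, which is the main trade-off against the non-parametric version.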

Block Bootstrap

The block bootstrap is used for correlated data such as time series. Contiguous blocks of data are resampled rather than individual data points, in order to preserve the dependence structure within each block.
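One common form is the moving block bootstrap, sketched below under some assumptions: the helper name `moving_block_bootstrap`, the block length of 10, and the simulated AR(1) series are illustrative choices, and choosing a good block length in practice is a separate question not covered here.

```python
import numpy as np

def moving_block_bootstrap(series, block_length, rng):
    """Resample a 1-D series by concatenating randomly chosen contiguous blocks.

    Hypothetical helper written for this post.
    """
    series = np.asarray(series)
    n = len(series)
    n_blocks = int(np.ceil(n / block_length))
    # A block may start anywhere that leaves room for a full block
    starts = rng.integers(0, n - block_length + 1, size=n_blocks)
    blocks = [series[s:s + block_length] for s in starts]
    # Trim so the resample has the same length as the original series
    return np.concatenate(blocks)[:n]

rng = np.random.default_rng(0)
# Illustrative autocorrelated series: an AR(1) process with coefficient 0.8
noise = rng.normal(size=200)
series = np.empty(200)
series[0] = noise[0]
for t in range(1, 200):
    series[t] = 0.8 * series[t - 1] + noise[t]

block_means = np.array([
    moving_block_bootstrap(series, block_length=10, rng=rng).mean()
    for _ in range(1000)
])
print("Block bootstrap standard error of the mean:", block_means.std(ddof=1))
```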

Bayesian Bootstrap

This variant assigns random weights (typically drawn from a Dirichlet distribution) to the data points instead of resampling them, making it better suited for Bayesian inference applications.
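A minimal sketch of the weighting idea, assuming flat Dirichlet weights and the mean as the statistic of interest; the simulated data and variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.normal(loc=50, scale=10, size=100)  # illustrative sample

n_draws = 2000
# Each row is one set of weights from a flat Dirichlet distribution:
# the weights are non-negative and sum to 1
weights = rng.dirichlet(np.ones(data.size), size=n_draws)
# Weighted mean of the data under each set of weights
posterior_means = weights @ data

lower, upper = np.percentile(posterior_means, [2.5, 97.5])
print(f"95% credible interval for the mean: ({lower:.2f}, {upper:.2f})")
```

Because every observation receives a positive weight in every draw, no data point is ever dropped entirely, unlike in ordinary resampling.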

0.632 Bootstrap Method

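A technique for assessing model performance that corrects for the optimism of the training error by taking a weighted combination of the training error and the error on observations left out of each bootstrap sample.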
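The 0.632 estimate combines the two errors as 0.368 × training error + 0.632 × out-of-bag error. The sketch below is a simplified illustration: it uses a cubic polynomial fit as a stand-in model, made-up data, and averages the out-of-bag error per bootstrap sample rather than per observation. The helper `fit_predict` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
# Illustrative data: a noisy straight line
x = rng.uniform(0, 10, size=40)
y = 2.0 * x + 1.0 + rng.normal(scale=2.0, size=40)

def fit_predict(x_train, y_train, x_new, degree=3):
    """Fit a polynomial by least squares and predict at x_new."""
    coefs = np.polyfit(x_train, y_train, degree)
    return np.polyval(coefs, x_new)

# Training (resubstitution) error: fit and evaluate on the full dataset
train_err = np.mean((y - fit_predict(x, y, x)) ** 2)

n = len(x)
oob_errs = []
for _ in range(200):
    idx = rng.integers(0, n, size=n)        # bootstrap sample indices
    oob = np.setdiff1d(np.arange(n), idx)   # observations not drawn
    if oob.size == 0:
        continue                            # nothing left to test on
    pred = fit_predict(x[idx], y[idx], x[oob])
    oob_errs.append(np.mean((y[oob] - pred) ** 2))
oob_err = np.mean(oob_errs)

# Weighted combination of the optimistic and pessimistic estimates
err_632 = 0.368 * train_err + 0.632 * oob_err
print(f"train MSE={train_err:.2f}, OOB MSE={oob_err:.2f}, 0.632 MSE={err_632:.2f}")
```

The 0.632 weight reflects the fact that a bootstrap sample of size n contains, on average, about 63.2% of the distinct original observations.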

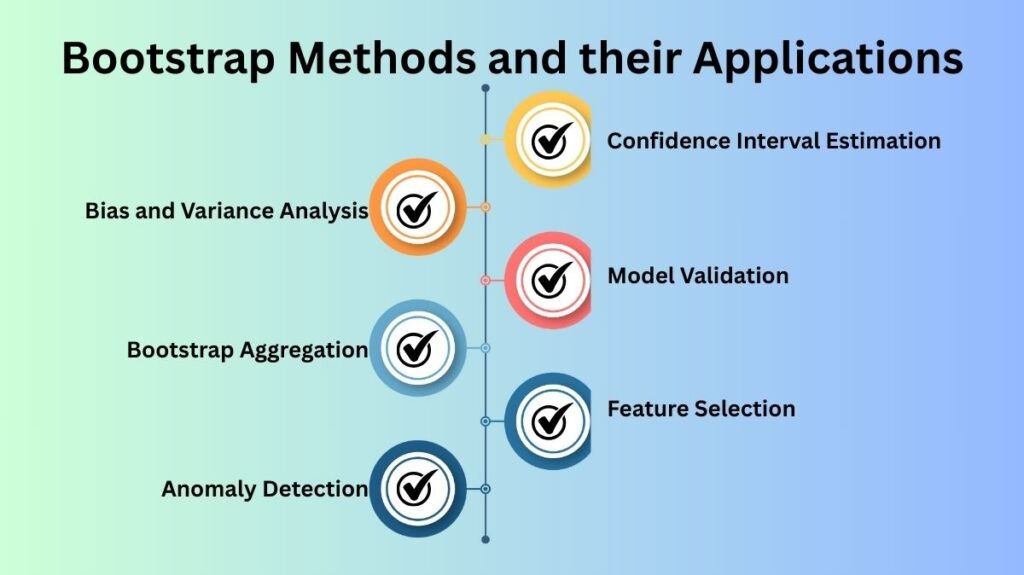

Bootstrap Methods and their Applications

Confidence Interval Estimation: When theoretical calculations are too complicated or inapplicable, bootstrapping is frequently employed to create confidence intervals for estimated statistics.

Bias and Variance Analysis: Bootstrapping helps analyze the bias-variance trade-off in machine learning models by refitting the model on numerous resamples and observing how its predictions vary.

Model Validation: In addition to cross-validation, bootstrap approaches aid in the evaluation of model performance on small datasets.

Bagging (Bootstrap Aggregation): Bagging is an ensemble learning technique that employs several bootstrap samples to train models independently before aggregating their predictions. The Random Forest Algorithm is the most well-known application, which generates several decision trees from bootstrapped datasets.

Feature Selection: Bootstrapping helps assess feature relevance by examining how frequently a feature is selected by models fitted to resampled datasets, which improves model interpretability.

Anomaly Detection: Outlier detection approaches use bootstrapping to determine the variability of observations and distinguish between normal and anomalous data points.
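To make the bagging application above more concrete, here is a small sketch using NumPy only. It trains polynomial regressors (a stand-in for decision trees, chosen to keep the example dependency-free) on bootstrap samples and averages their predictions. The data, polynomial degree, and number of models are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Illustrative data: a noisy sine curve
x = np.sort(rng.uniform(0, 1, size=60))
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.3, size=60)
x_grid = np.linspace(0.05, 0.95, 50)
truth = np.sin(2 * np.pi * x_grid)

n_models = 100
predictions = np.empty((n_models, x_grid.size))
for m in range(n_models):
    # Train each model on its own bootstrap sample of the training data
    idx = rng.integers(0, x.size, size=x.size)
    coefs = np.polyfit(x[idx], y[idx], 5)
    predictions[m] = np.polyval(coefs, x_grid)

# Aggregate: average the individual predictions
bagged = predictions.mean(axis=0)

individual_mse = np.mean((predictions - truth) ** 2, axis=1).mean()
bagged_mse = np.mean((bagged - truth) ** 2)
print(f"Average single-model MSE: {individual_mse:.4f}")
print(f"Bagged MSE: {bagged_mse:.4f}")
```

For squared error, the averaged prediction is never worse than the average of the individual models' errors, which is the variance-reduction effect that bagging relies on.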

How to Implement the Bootstrap Method in Python

Here’s a simple example demonstrating how bootstrapping can be used to estimate the mean and confidence interval of a dataset.

import numpy as np
import matplotlib.pyplot as plt

# Generate sample data
np.random.seed(42)
data = np.random.normal(loc=50, scale=10, size=100)

# Number of bootstrap samples
n_iterations = 1000
sample_size = len(data)
bootstrap_means = []

# Bootstrap resampling: draw with replacement and record each resample's mean
for _ in range(n_iterations):
    sample = np.random.choice(data, size=sample_size, replace=True)
    bootstrap_means.append(np.mean(sample))

# Compute the 95% percentile confidence interval
lower_bound = np.percentile(bootstrap_means, 2.5)
upper_bound = np.percentile(bootstrap_means, 97.5)

print(f"Estimated Mean: {np.mean(bootstrap_means):.2f}")
print(f"95% Confidence Interval: ({lower_bound:.2f}, {upper_bound:.2f})")

# Plot bootstrap distribution
plt.hist(bootstrap_means, bins=30, alpha=0.6, color='b', edgecolor='k')
plt.axvline(lower_bound, color='red', linestyle='dashed', label='95% CI Lower Bound')
plt.axvline(upper_bound, color='green', linestyle='dashed', label='95% CI Upper Bound')
plt.axvline(np.mean(bootstrap_means), color='black', linestyle='solid', label='Mean')
plt.legend()
plt.xlabel('Bootstrap Mean')
plt.ylabel('Frequency')
plt.title('Bootstrap Mean Distribution')
plt.show()

Bootstrapping Method Advantages and Disadvantages

Advantages:

- Simple to implement and understand.

- Can be applied to small samples, where large-sample formulas are unreliable.

- Makes no assumptions about normality or other distributional forms.

- Provides robust variance and confidence interval estimates.

Disadvantages:

- Computationally expensive, particularly for huge datasets.

- May not work well with severely skewed or dependent data.

- Assumes the sample accurately represents the total population.

Conclusion

The bootstrap technique is a critical statistical tool in machine learning, offering a robust way to quantify uncertainty, evaluate models, and enhance predictive accuracy. Bootstrapping remains an important approach in the data scientist’s toolset, whether for model validation, ensemble learning, or feature selection. Practitioners who understand and apply bootstrap resampling well can draw more trustworthy conclusions and develop stronger machine learning models.