Data Science K-Means Clustering

Grouping data with unsupervised machine learning approaches such as K-Means clustering is popular. Its principal uses are exploratory data analysis and pattern detection. K-Means clustering groups data points according to their similarity, separating the data into discrete groups, or clusters. Each cluster is represented by the mean (centroid) of its points. This article explains K-Means clustering, how it operates, and how it is used in data science.

Overview of Clustering

Clustering groups objects so that members of the same cluster are more similar to one another than to members of other clusters. It is a key technique in unsupervised machine learning, where the model receives input data without output labels. Supervised learning trains the model on labeled data, whereas clustering relies on the similarity between objects to uncover structure in the data.

K-Means, a simple clustering technique, has worked well in many real-world applications.

K-Means Clustering: What is it?

K-Means clustering iteratively divides data points into a preset number of clusters, denoted as “K.” The method aims to reduce the variance inside each cluster while maximizing the variation across clusters. The basic idea of K-Means is to assign each data point to the cluster whose centroid (mean) is closest. The algorithm iterates through a series of steps until the clusters stabilize, that is, until it converges.
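The objective described above is commonly written as the within-cluster sum of squares. The notation is introduced here for illustration only and does not come from the original text: $C_k$ is the set of points in cluster $k$, $\mu_k$ is its centroid, and $x_i$ is a data point.

```latex
J = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2,
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
```

Because the total variance of a dataset is fixed, lowering $J$ (the spread inside clusters) necessarily raises the spread between cluster centroids, which is why the two goals coincide.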

Steps of K-Means Clustering

The following phases are part of the straightforward, iterative process that the K-Means clustering method uses:

Setting up

First, the method chooses “K” starting centroids at random from the dataset. The integer “K” indicates how many clusters the algorithm will produce. It is crucial to remember that the value of “K” is a user-defined parameter that has a big influence on the clustering outcomes.
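A minimal sketch of this initialization in plain Python is shown below. The function name `init_centroids`, the `seed` parameter, and the sample points are assumptions made for illustration; they are not part of any particular library.

```python
import random

def init_centroids(points, k, seed=None):
    """Pick k data points at random to serve as starting centroids."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    rng = random.Random(seed)
    # sample() draws without replacement, so no position in the
    # dataset is chosen twice
    return [tuple(p) for p in rng.sample(points, k)]

# Hypothetical 2-D data, used only to exercise the function
points = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 9.5), (1.0, 0.5)]
centroids = init_centroids(points, k=2, seed=0)
print(centroids)
```

Passing a fixed `seed` makes the random choice repeatable, which helps when comparing runs.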

Step of Assignment

Each data point is assigned to its closest centroid. Proximity between a data point and a centroid is measured with Euclidean distance. Once every data point has been assigned, the cluster centroids are recalculated in the update step.
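The assignment step can be sketched in a few lines of plain Python. The helper name `assign_points` and the sample data are hypothetical; `math.dist` computes the Euclidean distance between two points.

```python
import math

def assign_points(points, centroids):
    """Return, for each point, the index of its nearest centroid."""
    labels = []
    for p in points:
        # Euclidean distance from this point to every centroid
        distances = [math.dist(p, c) for c in centroids]
        labels.append(distances.index(min(distances)))
    return labels

points = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 9.5)]
centroids = [(1.0, 1.0), (9.0, 9.0)]
labels = assign_points(points, centroids)
print(labels)  # [0, 0, 1, 1]
```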

Step of Update

The centroids of the clusters are recalculated after the data points have been allocated to clusters. The average of every data point in the cluster is the new centroid. In the following iteration, the cluster’s center will be this revised centroid.
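A sketch of the update step follows, under one stated assumption: a cluster that receives no points keeps its previous centroid. The source does not say how empty clusters are handled, and other conventions exist. The name `update_centroids` is hypothetical.

```python
def update_centroids(points, labels, centroids):
    """Recompute each centroid as the mean of the points assigned to it."""
    new_centroids = []
    for j, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == j]
        if not members:
            # Assumption: an empty cluster keeps its previous centroid
            new_centroids.append(old)
            continue
        # zip(*members) groups the coordinates by dimension
        mean = tuple(sum(coord) / len(members) for coord in zip(*members))
        new_centroids.append(mean)
    return new_centroids

points = [(1.0, 1.0), (2.0, 3.0), (8.0, 8.0), (10.0, 10.0)]
labels = [0, 0, 1, 1]
updated = update_centroids(points, labels, [(0.0, 0.0), (5.0, 5.0)])
print(updated)  # [(1.5, 2.0), (9.0, 9.0)]
```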

Repeat Steps 2 and 3

Steps two and three (assignment and update) are repeated until the centroids stop changing or the algorithm reaches a set maximum number of iterations. To put it another way, the algorithm has converged when the centroids no longer move appreciably and the assignment of data points to clusters stays constant.
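Putting the steps together, the whole loop can be sketched as below. This is an illustrative plain-Python version, not a reference implementation: the names `kmeans`, `max_iter`, and `tol` are assumptions, empty clusters are assumed to keep their previous centroid, and the sample data is made up.

```python
import math
import random

def kmeans(points, k, max_iter=100, tol=1e-9, seed=None):
    """Plain K-Means sketch: returns (centroids, labels)."""
    rng = random.Random(seed)
    # Step 1: choose k starting centroids at random from the data
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = []
    for _ in range(max_iter):
        # Step 2: assign each point to its nearest centroid
        labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Step 3: move each centroid to the mean of its points
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(c) / len(members) for c in zip(*members))
                )
            else:
                # Assumption: an empty cluster keeps its old centroid
                new_centroids.append(centroids[j])
        # Stop once no centroid moved by more than tol
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    return centroids, labels

# Two well-separated groups of hypothetical 2-D points
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = kmeans(points, k=2, seed=42)
print(centroids, labels)
```

On this small dataset the first three points end up sharing one label and the last three the other. Which group is numbered 0 depends on the random starting centroids.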

K-Means Clustering Benefits

Because of its many benefits, K-Means clustering is one of the most often used clustering methods.

Efficiency and Simplicity: The algorithm is simple to comprehend and use. It is frequently used in practice because it is computationally efficient, especially for huge datasets.

Scalability: K-Means is appropriate for big data applications because the cost of each iteration grows only linearly with the number of data points, clusters, and dimensions.

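Versatility: Standard K-Means works on numerical data, because it relies on means and Euclidean distance. Categorical and mixed-type data are usually handled by closely related variants instead, such as K-Modes, K-Prototypes, or K-Medoids paired with a distance measure like Gower’s distance.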

Clearly Defined Outcomes: K-Means generates distinct clusters, with every data point falling into a single cluster.

Drawbacks of K-Means Clustering

Notwithstanding its widespread use, K-Means clustering has a number of drawbacks:

Sensitive to Initial Centroids: The algorithm’s result depends on the initial centroids selected, so different initializations can produce different outcomes. For this reason, K-Means is frequently run several times with different initializations, and the best clustering is kept.
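The multiple-run strategy can be sketched as follows. This is one possible approach under stated assumptions: "best" is taken to mean the lowest sum of squared distances from each point to its centroid (often called inertia), and the names `kmeans`, `inertia`, `best_of_n`, and `n_init` as well as the sample points are hypothetical.

```python
import math
import random

def kmeans(points, k, rng, max_iter=100):
    """Compact K-Means sketch returning (centroids, labels)."""
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = []
    for _ in range(max_iter):
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                  for p in points]
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new_centroids.append(
                tuple(sum(c) / len(members) for c in zip(*members))
                if members else centroids[j]  # empty cluster: keep old centroid
            )
        if new_centroids == centroids:
            break  # nothing moved, so the run has converged
        centroids = new_centroids
    return centroids, labels

def inertia(points, centroids, labels):
    """Sum of squared distances from each point to its assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2
               for p, lab in zip(points, labels))

def best_of_n(points, k, n_init=10, seed=0):
    """Run K-Means n_init times and keep the run with the lowest inertia."""
    rng = random.Random(seed)
    runs = [kmeans(points, k, rng) for _ in range(n_init)]
    return min(runs, key=lambda run: inertia(points, *run))

points = [(1.0, 1.0), (1.2, 0.8), (5.0, 5.0), (5.2, 4.9),
          (9.0, 1.0), (9.1, 1.3)]
best_centroids, best_labels = best_of_n(points, k=3)
print(inertia(points, best_centroids, best_labels))
```

Keeping the lowest-inertia run does not guarantee the globally best clustering, but it makes a poor result from an unlucky start less likely.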

Selecting K: One major disadvantage is the requirement to predetermine the number of clusters K, particularly in cases where the right value of K is unknown. Poor cluster assignments may result from an incorrect choice of K.

Assumes Spherical Clusters: K-Means makes the assumption that the clusters are spherical and uniformly sized, which isn’t always the case in real-world data. When the clusters have varying sizes, densities, or shapes, it performs poorly.

Noise and Outliers: Because the mean is impacted by extreme values, K-Means is susceptible to outliers. Poor clustering might arise from outliers that skew the centroids.
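A small arithmetic example shows how strongly a single extreme value can pull a mean, and therefore a centroid. The numbers are made up for illustration.

```python
from statistics import mean

cluster = [1.0, 2.0, 3.0]
with_outlier = cluster + [100.0]

print(mean(cluster))       # 2.0
print(mean(with_outlier))  # 26.5
```

With the outlier included, the mean sits far from every one of the original three values, so a centroid computed this way no longer represents the bulk of the cluster.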

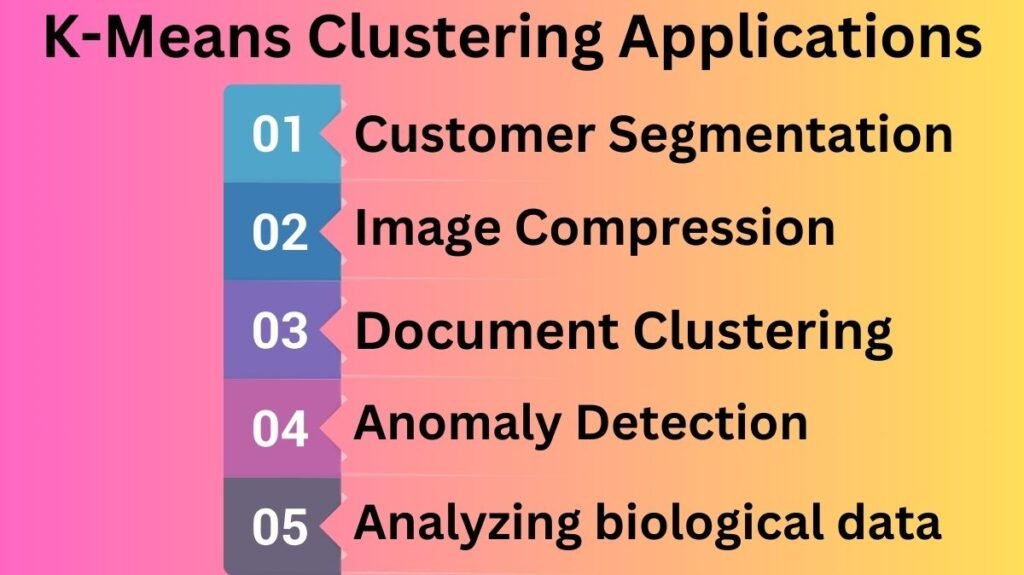

Applications of K-Means Clustering

Many firms and sectors employ K-Means clustering, including:

Customer Segmentation: Marketers can use K-Means to segment customers by browsing history, demographics, or shopping patterns. Segmentation supports personalized recommendations and targeted marketing.

Image Compression: Image compression uses K-Means to reduce the number of unique colors in an image by replacing each pixel’s color with its cluster centroid. This reduces image size with only a modest loss of quality.

Document Clustering: In text mining, K-Means can cluster documents by topic. Summarization, recommendation systems, and information retrieval all benefit from meaningful grouping of large document sets.

Anomaly Detection: K-Means can identify unusual user behavior or network traffic patterns that may indicate fraud or other security threats.

Biological Data Analysis: K-Means can cluster gene expression data to help researchers locate genes with similar expression patterns.
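As one concrete illustration of the anomaly detection use mentioned above, a common approach is to flag points that lie far from every centroid. The sketch below assumes the centroids have already been found by K-Means; the function name `flag_anomalies`, the `threshold` value, and the data are hypothetical.

```python
import math

def flag_anomalies(points, centroids, threshold):
    """Return points whose distance to the nearest centroid exceeds threshold."""
    return [
        p for p in points
        if min(math.dist(p, c) for c in centroids) > threshold
    ]

# Assumed output of an earlier K-Means run
centroids = [(1.0, 1.0), (9.0, 9.0)]
points = [(1.2, 0.9), (8.8, 9.1), (5.0, 5.0)]
anomalies = flag_anomalies(points, centroids, threshold=2.0)
print(anomalies)  # [(5.0, 5.0)]
```

The threshold is application-specific and would normally be chosen by looking at the distribution of distances in known-normal data.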

Conclusion

K-Means clustering is a popular, simple, and effective unsupervised learning method. Despite its limitations, it can uncover structure and patterns in data, especially when the number of clusters is known in advance. Its many commercial applications make K-Means clustering popular among data scientists and machine learning professionals. Knowing how K-Means works, choosing a suitable value of K, and working around its limitations will help you analyze data.