
Artificial Neural Networks in Data Science

Artificial Neural Networks (ANNs) are among the most effective techniques in data science and underpin machine learning, pattern recognition, and deep learning. Inspired by the brain, ANNs are computational models that process data through nonlinear transformations. This article discusses the structure, function, and applications of ANNs and their influence on data science.

What Are Artificial Neural Networks?

Artificial Neural Networks are loosely modeled on the neural networks of the human brain, in which neurons carry electrical signals between brain regions. An ANN consists of layers of nodes (artificial neurons) that process input data and pass the result on to the next layer.

ANNs are machine learning models that learn from data to discover patterns and make predictions, improving with experience. During learning, the network adjusts the weights of its connections based on feedback about its errors, gradually optimizing its output.

Artificial Neural Network Architecture

An artificial neural network has three main kinds of layers:

Input Layer: The input layer receives the data the network will process. Each neuron in this layer represents a feature of the dataset; in an image classification task, for example, each input neuron might correspond to one pixel.

Hidden Layers: The hidden layers perform most of the computation. Each one applies weights to its inputs and passes the result through an activation function. Adding hidden layers increases the model's complexity and lets the network build progressively more abstract representations of the input, which is how it captures complicated patterns.

Output Layer: The output layer produces the final result of the network's computations. In classification, it typically has one neuron per class; in regression, it normally has a single neuron representing the predicted value.

Each connection between neurons has a weight that governs its strength. These weights are adjusted during training to reduce the network's prediction error.
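To make the layer structure concrete, here is a minimal sketch of a forward pass through a tiny fully connected network, written in plain Python so it runs without extra libraries. The layer sizes (3 inputs, 2 hidden neurons, 1 output), the weight and bias values, and the helper names `dense` and `forward` are all hypothetical; the weights are arbitrary placeholders rather than trained values.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: weights[j][i] connects input i to neuron j."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical network: 3 input features -> 2 hidden neurons -> 1 output neuron.
# These weights are placeholders, not learned parameters.
W_hidden = [[0.2, -0.5, 0.1],
            [0.4, 0.3, -0.2]]
b_hidden = [0.0, 0.1]
W_out = [[0.7, -0.6]]
b_out = [0.05]

def forward(x):
    hidden = dense(x, W_hidden, b_hidden, relu)   # hidden layer
    return dense(hidden, W_out, b_out, sigmoid)   # output layer (one neuron)

print(forward([1.0, 2.0, 3.0]))
```

Training would change only `W_hidden`, `b_hidden`, `W_out`, and `b_out`; the structure of the computation stays the same.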

Activation Functions

An activation function determines a neuron's output, that is, whether and how strongly it is activated, from its weighted input. These functions introduce non-linearity, which lets the network model complicated patterns. Popular activation functions include:

Sigmoid: Outputs values between 0 and 1, so it is commonly used in the output layer for binary classification.

ReLU (Rectified Linear Unit): Outputs the input value if it is positive and zero otherwise. It is a common default because it makes deep networks efficient to train.

Tanh (Hyperbolic Tangent): Outputs values between -1 and 1. Because its output is centered on zero and can be negative, it is often used in hidden layers.

Softmax: Used in the output layer for multi-class classification. It converts raw scores (logits) into probabilities that sum to 1.
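The four activation functions above can be sketched in a few lines of Python. This is an illustrative implementation, not any particular library's API. The softmax subtracts the largest logit before exponentiating, a common numerical-stability trick (an addition not described in the text) that avoids overflow without changing the result.

```python
import math

def sigmoid(x):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    # Passes positive values through unchanged and maps everything else to zero
    return x if x > 0 else 0.0

def tanh(x):
    # Zero-centered output in the range (-1, 1)
    return math.tanh(x)

def softmax(logits):
    # Shifting every logit by the same constant leaves the result unchanged,
    # so subtracting the maximum is safe and keeps math.exp from overflowing
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs], sum(probs))
```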

Network Training

A neural network is trained by adjusting its weights to reduce prediction error. The process usually involves the following steps:

Forward Propagation: Input data is passed through the network layer by layer to compute the output.

Loss Function: The loss function measures the difference between the predicted output and the target. Mean squared error is a common loss function for regression, and cross-entropy loss is common for classification.

Backpropagation: The error is propagated backward through the network to compute the gradient of the loss with respect to each weight. Gradient descent then uses these gradients to adjust the weights in the direction that reduces the loss.

Optimization: Variants of gradient descent such as stochastic gradient descent (SGD) and Adam are used to minimize the loss function efficiently. The learning rate, a hyperparameter, controls how large each weight update is during training.
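The four training steps can be traced in the simplest possible case: a single sigmoid neuron learning the logical OR function with stochastic gradient descent. With only one neuron, backpropagation reduces to a single application of the chain rule. The toy dataset, learning rate, epoch count, and function names are illustrative assumptions, and Adam and other optimizers are not shown.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mse(y_pred, y_true):
    # Mean squared error, the usual regression loss
    return sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / len(y_true)

def binary_cross_entropy(y_pred, y_true, eps=1e-12):
    # Cross-entropy for 0/1 labels; eps guards against log(0)
    return -sum(t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
                for p, t in zip(y_pred, y_true)) / len(y_true)

# Hypothetical toy dataset: the logical OR function
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [0.0, 1.0, 1.0, 1.0]

def predict(w, b, x):
    # Forward propagation through a single sigmoid neuron
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(X, Y):           # stochastic: one example per update
            p = predict(w, b, x)         # forward pass
            # Backward pass: for sigmoid + cross-entropy, dLoss/dz simplifies to (p - y),
            # so the chain rule gives dLoss/dw_i = (p - y) * x_i and dLoss/db = (p - y)
            grad_z = p - y
            # Gradient descent step, scaled by the learning rate
            w = [wi - learning_rate * grad_z * xi for wi, xi in zip(w, x)]
            b -= learning_rate * grad_z
    return w, b

w, b = train()
preds = [predict(w, b, x) for x in X]
print("cross-entropy:", binary_cross_entropy(preds, Y))
print("mse:", mse(preds, Y))
print([round(p) for p in preds])
```

In a network with hidden layers, the same chain rule is applied repeatedly, layer by layer from the output back to the input.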

Training neural networks, especially deep learning models, demands large datasets and considerable computing power. Techniques such as batch processing, data augmentation, and regularization improve training efficiency and reduce overfitting.
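Batch processing and regularization can be illustrated together with mini-batch gradient descent on a one-parameter linear model whose loss includes an L2 (weight decay) penalty. The synthetic data, the batch size, the penalty strength `lam`, and the helper names are hypothetical choices for this sketch; data augmentation is not shown.

```python
import random

def minibatches(data, batch_size, rng):
    """Shuffle the data, then yield it in chunks of batch_size (the last may be smaller)."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]

def l2_penalty(weights, lam):
    # Regularization term added to the loss: lam * sum of squared weights
    return lam * sum(w * w for w in weights)

def sgd_step_with_l2(weights, grads, learning_rate, lam):
    # The penalty's gradient is 2 * lam * w, which shrinks every weight toward zero
    return [w - learning_rate * (g + 2 * lam * w) for w, g in zip(weights, grads)]

# Hypothetical data: y = 3x plus a little Gaussian noise, for x in [-1, 1]
rng = random.Random(42)
data = [(k / 10, 3 * k / 10 + rng.gauss(0, 0.05)) for k in range(-10, 11)]

w = [0.0]   # single weight of the model y_hat = w * x
lam = 0.01
for epoch in range(200):
    for batch in minibatches(data, batch_size=4, rng=rng):
        # Gradient of the batch's mean squared error with respect to w
        g = sum(2 * (w[0] * x - y) * x for x, y in batch) / len(batch)
        w = sgd_step_with_l2(w, [g], learning_rate=0.1, lam=lam)

loss = sum((w[0] * x - y) ** 2 for x, y in data) / len(data) + l2_penalty(w, lam)
print("learned slope:", w[0], "regularized loss:", loss)
```

Because the L2 term pulls the weight toward zero, the learned slope should end up slightly below the underlying value of 3; larger values of `lam` shrink it further.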

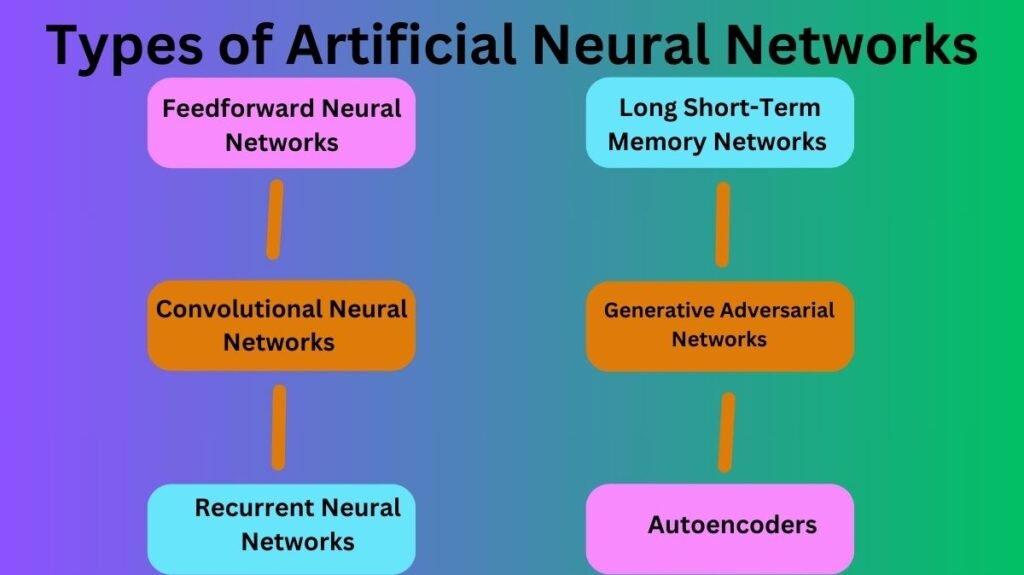

Types of Artificial Neural Networks

Different ANN architectures are tailored to different purposes. Some popular types are:

Feedforward Neural Networks (FNNs): The simplest type of neural network, in which information flows in one direction only, from input to output, with no loops. FNNs are popular for straightforward classification and regression problems.

Convolutional Neural Networks (CNNs): CNNs are designed for image and video data. Their convolutional layers apply filters to the input to extract features such as edges, textures, and shapes. CNNs excel at object recognition, image classification, and facial recognition.

Recurrent Neural Networks (RNNs): RNNs are used for sequence-based applications such as time series forecasting, natural language processing (NLP), and speech recognition. They suit sequential data better than feedforward networks because their loops let information from earlier steps persist.

Long Short-Term Memory Networks (LSTMs): LSTMs are a type of RNN designed to mitigate the vanishing gradient problem that arises when training on long sequences. Their ability to capture long-term dependencies makes them useful for machine translation and text generation.

Generative Adversarial Networks (GANs): A GAN consists of two networks, a generator and a discriminator, that compete with each other. The generator produces synthetic data, while the discriminator tries to distinguish real data from the generator's output. GANs are used to generate realistic images, videos, and other synthetic data.

Autoencoders: Autoencoders are unsupervised neural networks used for dimensionality reduction and feature learning. An encoder compresses the input into a latent representation, and a decoder reconstructs the input from it. Autoencoders are used for data compression, anomaly detection, and image denoising.
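The defining operations of two of these architectures fit in a short sketch: the sliding-filter convolution at the heart of a CNN layer, and the recurrent update that lets an RNN carry information across time steps. Both are stripped down for illustration (one hand-picked kernel, a scalar hidden state, arbitrary weights, no training), and the function names are hypothetical. LSTMs, GANs, and autoencoders build on similar pieces but are not shown.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2-D convolution (strictly cross-correlation, as most CNN libraries compute it)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A hand-picked vertical-edge filter; in a real CNN the kernel values are learned
edge_kernel = [[1, 0, -1],
               [1, 0, -1],
               [1, 0, -1]]
# A tiny 4x6 "image": dark left half, bright right half
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
print(conv2d(image, edge_kernel))

def rnn_forward(sequence, w_x, w_h, b):
    """Vanilla RNN with a scalar hidden state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)   # the loop carries h from one step to the next
        states.append(h)
    return states

# Arbitrary illustrative weights
print(rnn_forward([1.0, 0.0, 0.0], w_x=0.5, w_h=0.9, b=0.0))
```

In the convolution output, the large-magnitude values mark the positions where the image changes from dark to bright. In the RNN output, the hidden state stays nonzero after the input drops to zero, which is the persistence that loops give RNNs.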

Neural Network Applications

Artificial Neural Networks are used throughout data science and in many other fields. Some significant application areas are:

- CNNs excel at visual analysis. Recognizing patterns in images is essential for facial recognition, object detection, and autonomous vehicles.

- RNNs, LSTMs, and transformers power sentiment analysis, machine translation, chatbots, and text summarization. These models interpret and generate human language, which makes them essential for voice assistants and social media analysis.

- Many businesses use ANNs to forecast stock prices, customer behavior, and equipment failures, and these forecasts inform business decisions.

- In medical image analysis, neural networks can detect cancers, fractures, and other conditions. In personalized medicine, they use patient data to predict treatment outcomes.

- In finance, ANNs are used for algorithmic trading, fraud detection, credit scoring, and risk management. By analyzing vast amounts of financial data, they help banks and other financial institutions make better investment decisions.

- ANNs have been used to build intelligent agents that play video and board games. DeepMind's AlphaGo, which defeated world-class professional Go players, is built on neural networks.

Challenges and Prospects

Despite their progress, artificial neural networks face several challenges. They require large datasets and substantial computational resources, which smaller organisations may struggle to obtain. In addition, ANNs are often “black box” models whose decisions are hard to interpret, and this lack of transparency is a concern in critical applications such as healthcare and banking.

Future research aims to develop explainable AI methods, make neural network training more efficient, and build models that need less data. Emerging approaches include transfer learning, which reuses a model trained on one task as the starting point for another, and few-shot learning, which trains models from only a handful of examples.

Conclusion

Artificial Neural Networks have transformed data science by making complex problems tractable. Across image and speech recognition, healthcare, and finance, ANNs have demonstrated their adaptability and potential. As research advances and more intelligent systems are developed, they will continue to shape technology and data science.