OpenAI introduces PaperBench, a benchmark that measures how well AI agents can replicate the latest AI research. Agents have to replicate 20 ICML 2024 Spotlight and Oral papers from scratch. This means they have to understand what each paper contributes, develop a codebase, and run the experiments successfully.

PaperBench is a benchmark that evaluates AI agents’ capacity to reproduce modern AI research. It is designed to test how well AI systems can understand and reproduce real-world machine learning research. It involves giving AI agents research papers, asking them to create software that repeats the experiments, and then automatically evaluating their attempts against detailed grading rubrics.

The results indicate that the most powerful AI models still lag well behind human researchers in this complex task. By providing a way to measure AI’s research capabilities, PaperBench aims to drive progress in the field and help us better understand the potential and limitations of increasingly autonomous AI systems. It’s a critical step towards developing AI that can assist and accelerate scientific discovery.

PaperBench is a test for Artificial Intelligence (AI) systems, specifically designed to see how well they can understand and repeat actual AI research. Imagine giving an AI a scientific paper and asking it to not just read it, but to actually recreate the experiments and get the same results. That’s essentially what PaperBench is all about.

The Main Goal: Testing AI’s Research Abilities

The idea of PaperBench is to assess how capable autonomous AI agents are at real-world research and development in the field of machine learning. The creators (OpenAI) of PaperBench believe that if AI can successfully repeat existing research, it could speed up the progress of machine learning and help with important areas like AI safety.

Example: You could ask a student to read a research paper and then run the same experiment in a lab to see how good they are at science. PaperBench does the same thing, but for AI.

Why is this important?

As AI systems get smarter, it’s interesting to see how they handle difficult jobs that usually need human expertise, like research. Being able to measure this ability accurately is important for understanding the strengths and weaknesses of advanced AI. In the field of machine learning research, PaperBench offers a way to do just that.

How PaperBench Works: The Process

The process of using PaperBench to test an AI agent involves several key steps:

- Choosing the Research Papers: The creators (OpenAI) of PaperBench selected 20 recent, high-quality research papers presented at a machine learning conference (ICML 2024). These papers cover a range of topics within machine learning, like deep learning and probabilistic methods. The selection criteria ensured that the papers involved considerable experimental work that would need real effort to repeat, and that the experiments could be run on a single machine. They avoided papers that were purely theoretical or focused only on introducing new software without experimental results.

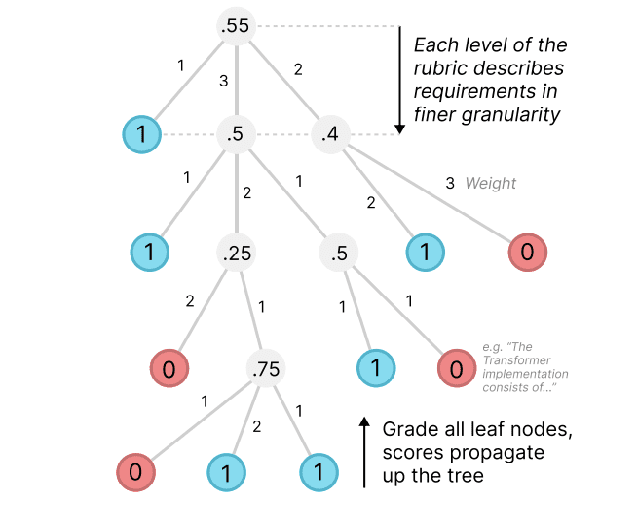

- Creating Detailed Rubrics: For each of the 20 research papers, the PaperBench team created a very detailed grading guide, called a “rubric”. A rubric is a multi-level checklist that breaks down every aspect of the research paper that needs to be replicated. These rubrics are organized like a tree, with broad goals at the top that are broken down into increasingly specific sub-tasks.

- For example, a top-level goal might be “Reproduce the main experimental results,” which could then be broken down into sub-tasks like “Implement the Transformer model,” “Run the model on the specified dataset,” and “Generate the key performance metrics”.

- Collaboration with Original Authors: To make sure the rubrics were correct and truly captured the spirit of the research, the PaperBench team worked closely with the original authors of each paper. The authors reviewed the rubrics, provided feedback, and clarified any ambiguities in their papers. This collaboration was important to define what a successful reproduction would look like.

- Hierarchical Structure and Weighting: The rubrics are organized hierarchically, meaning that larger tasks are divided into smaller, more manageable sub-tasks. This allows for a very detailed evaluation of the AI’s progress. Each of the most specific tasks at the bottom of the tree (called “leaf nodes”) has a clear “pass” or “fail” criterion. Additionally, each task is assigned a “weight” to reflect its importance to the overall research contribution. This ensures that the AI gets more credit for repeating the important parts of the paper.

- The AI Agent’s Task: When an AI agent is evaluated using PaperBench, it is given the research paper and any clarifications from the original authors. The AI’s task is to create a complete software codebase from scratch that can reproduce the experimental results (the data-driven findings) reported in the paper.

- The reproduce.sh Script: A critical part of the AI’s submission is a special script called reproduce.sh. This script acts as the entry point for running all the code needed to replicate the paper’s results. The idea is that someone should be able to simply run this script and see whether it produces the same outcomes as described in the research paper.

- Running the Code and Checking the Results: After the AI submits its codebase and the reproduce.sh script, the PaperBench system tries to run this script. The goal is to check whether executing the AI’s code can actually reproduce the key findings, like the numbers in tables and the patterns in graphs presented in the original research paper.

- Automated Grading with an AI Judge: Because the rubrics are so detailed, it would take humans a very long time to grade each AI’s attempt. To solve this, PaperBench uses another AI system, an LLM-based judge called SimpleJudge, to automatically evaluate the AI’s submission against the rubric.

- How the Judge Works: The judge is given the research paper, the rubric for that paper, and the relevant files from the AI’s submission (including the code, the reproduce.sh script, and any output generated by running the script). For each specific criterion in the rubric, the judge looks at the materials and decides whether the AI has met the requirements. It basically goes through the checklist in the rubric, assigning a “pass” (1) or “fail” (0) score to each of the most detailed tasks (the leaf nodes).

- Calculating the Overall Score: Once the detailed tasks in the rubric have been graded by the AI judge, the scores are aggregated based on the hierarchical structure and the weights assigned to each task. The scores from the lower-level tasks are averaged (taking the weights into account) to get scores for the higher-level tasks, all the way up to the root of the rubric tree. The final score at the root represents the AI’s overall “Replication Score” for that research paper.

- JudgeEval: The PaperBench team also created a distinct evaluation for the judges themselves, called “JudgeEval”. This involves having humans manually grade some AI replication attempts and then comparing those human grades to the grades given by the automated judge. This helps to understand how accurate and reliable the automated grading process is and to improve it over time.
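The weighted aggregation described in the steps above can be illustrated with a short sketch. This is not PaperBench's actual implementation: the `RubricNode` class, the `replication_score` function, and the example weights are all hypothetical, chosen only to show how pass/fail grades on leaf nodes could roll up into a single score at the root.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RubricNode:
    """One node of a hypothetical rubric tree (illustrative names only)."""
    requirement: str
    weight: float = 1.0
    passed: Optional[bool] = None  # set by the judge, on leaf nodes only
    children: List["RubricNode"] = field(default_factory=list)


def replication_score(node: RubricNode) -> float:
    """Score in [0, 1]: a leaf is 0 or 1, a parent is the
    weighted average of its children's scores."""
    if not node.children:
        return 1.0 if node.passed else 0.0
    total_weight = sum(child.weight for child in node.children)
    if total_weight == 0:
        return 0.0
    weighted = sum(child.weight * replication_score(child)
                   for child in node.children)
    return weighted / total_weight


# Example rubric using the sub-tasks named earlier; the weights are made up.
rubric = RubricNode(
    "Reproduce the main experimental results",
    children=[
        RubricNode("Implement the Transformer model", weight=2.0, passed=True),
        RubricNode("Run the model on the specified dataset", weight=1.0, passed=True),
        RubricNode("Generate the key performance metrics", weight=1.0, passed=False),
    ],
)

print(f"Replication Score: {replication_score(rubric):.2f}")
```

With these assumed weights, the two passed tasks contribute 3 of the 4 weight units, so the root score is 0.75. Because the function is recursive, the same logic applies to deeper trees where a child is itself a group of sub-tasks.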

Components of PaperBench

- The Set of 20 Research Papers: These form the basis of the challenge for the AI agents.

- The Author-Approved Rubrics: Hierarchical checklists that define what constitutes a successful replication for each paper.

- The Automated LLM-Based Judge (SimpleJudge): This AI system automatically evaluates the AI agents’ submissions against the rubrics.

- The reproduce.sh Script: This is the standard way for the AI to package its work and for the PaperBench system to execute the replication attempt.

- JudgeEval: This separate benchmark helps to assess the accuracy and reliability of the automated judges.
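To make the idea behind JudgeEval more concrete, here is a minimal sketch of how an automated judge's pass/fail grades could be compared with human grades on the same rubric items. The article does not say which agreement metric is used, so accuracy, precision, recall, and F1 are assumptions here, as are the function name `judge_agreement` and the sample grades.

```python
from typing import Dict, Sequence


def judge_agreement(human: Sequence[int], judge: Sequence[int]) -> Dict[str, float]:
    """Compare binary grades (1 = pass, 0 = fail), treating the
    human grades as ground truth. Metric choice is an assumption."""
    if len(human) != len(judge):
        raise ValueError("grade lists must have the same length")
    if not human:
        raise ValueError("grade lists must not be empty")

    pairs = list(zip(human, judge))
    tp = sum(1 for h, j in pairs if h == 1 and j == 1)
    fp = sum(1 for h, j in pairs if h == 0 and j == 1)
    fn = sum(1 for h, j in pairs if h == 1 and j == 0)
    tn = sum(1 for h, j in pairs if h == 0 and j == 0)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up grades for eight rubric leaf nodes.
human_grades = [1, 0, 1, 1, 0, 0, 1, 0]
judge_grades = [1, 0, 0, 1, 0, 1, 1, 0]

for name, value in judge_agreement(human_grades, judge_grades).items():
    print(f"{name}: {value:.2f}")
```

In this toy example the judge disagrees with the humans on two of eight items (one missed pass and one false pass), so all four metrics come out to 0.75.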

PaperBench Code-Dev: A Simpler Version

The creators of PaperBench also introduced a version called “PaperBench Code-Dev”. This version simplifies the evaluation process by concentrating only on the AI’s ability to develop the code needed for replication, without actually running the code or checking if the results match the paper. In PaperBench Code-Dev, the automated judge only looks at the “Code Development” parts of the rubric. This makes the evaluation cheaper and faster because it doesn’t require the computational resources to run potentially complex experiments. However, it’s considered a less complete evaluation because it doesn’t verify if the code actually works.
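One way to picture the Code-Dev variant is as the same rubric tree with everything except the “Code Development” items pruned away before scoring. The sketch below assumes this pruning approach; the `Node` class, the helper functions, the weights, and the category labels other than “Code Development” (here “Execution” and “Result Match”) are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical rubric node; 'category' is only set on leaves."""
    requirement: str
    weight: float = 1.0
    category: Optional[str] = None  # e.g. "Code Development" (others assumed)
    passed: Optional[bool] = None
    children: List["Node"] = field(default_factory=list)


def prune_to_code_dev(node: Node) -> Optional[Node]:
    """Return a copy of the tree keeping only 'Code Development' leaves;
    subtrees with no such leaves are dropped."""
    if not node.children:
        return node if node.category == "Code Development" else None
    kept = [p for p in (prune_to_code_dev(c) for c in node.children)
            if p is not None]
    if not kept:
        return None
    return Node(node.requirement, node.weight, node.category, node.passed, kept)


def score(node: Node) -> float:
    """Weighted average of children; leaves score 1.0 (pass) or 0.0 (fail)."""
    if not node.children:
        return 1.0 if node.passed else 0.0
    total = sum(c.weight for c in node.children)
    return sum(c.weight * score(c) for c in node.children) / total


full_rubric = Node("Reproduce the paper", children=[
    Node("Implement the Transformer model", 2.0, "Code Development", True),
    Node("Implement the training loop", 1.0, "Code Development", False),
    Node("Run reproduce.sh end to end", 1.0, "Execution", False),
    Node("Match the reported accuracy", 2.0, "Result Match", False),
])

code_dev_rubric = prune_to_code_dev(full_rubric)
print(f"Full rubric score: {score(full_rubric):.2f}")
print(f"Code-Dev score:    {score(code_dev_rubric):.2f}")
```

In this made-up case the submission scores higher on the pruned rubric than on the full one, because writing plausible code earns credit even when the experiments were never run or the results do not match.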

How AI Agents Perform

The PaperBench team evaluated several frontier AI models on the benchmark. The best-performing model they tested, called Claude 3.5 Sonnet, achieved an average replication score of only 21.0% on the full PaperBench. They also tested other models like o1, o3-mini, GPT-4o, and Gemini 2.0 Flash, and found that none of them could consistently and fully replicate the research papers. The results suggest that even the most advanced AI systems still have a long way to go in terms of independently conducting and replicating complex machine learning research.

On the simpler PaperBench Code-Dev, the o1 model achieved a higher score of 43.4%, indicating that AI can be more successful at just developing the code compared to fully reproducing the results.

Comparison with Human Researchers

To see how AI performance compares to human capabilities, the PaperBench team also recruited experienced machine learning PhD students to attempt to replicate a subset of the papers. These human experts, working for 48 hours, achieved an average score of 41.4% on the same subset of papers, outperforming the best AI model (which scored 26.6% on that subset). This highlights that even though AI is making rapid progress, human researchers still significantly outperform current AI systems in the complex task of replicating cutting-edge research.

Challenges and Future Directions

Developing PaperBench and evaluating AI agents exposed some challenges and potential areas for future work:

- Rubric Design and Creation: Creating the detailed rubrics is a very time-consuming and labour-intensive process, needing a deep understanding of the research papers and careful collaboration with the original authors. Future work could explore ways to automate or improve the rubric creation process.

- Improving Automated Judges: While the LLM-based judge is crucial for scalability, its accuracy and reliability are still areas for improvement. More research is needed to develop better automated evaluation methods for complex, open-ended tasks.

- Dealing with Under-Specification: Research papers often have some level of under-specification, meaning they don’t provide every single detail needed for perfect replication. The PaperBench team addressed this by working with authors to create addenda with clarifications. However, this remains a challenge in accurately evaluating replication.

- Specification Gaming: AI agents might find ways to “game” the evaluation, meaning they might produce submissions that score well on the rubric without truly replicating the research in the intended way. Future work could explore methods to mitigate this risk.

Significance and Impact

PaperBench is a significant step towards creating rigorous and comprehensive evaluations of AI’s research capabilities in machine learning. By providing a standardized benchmark and a detailed evaluation framework, it allows researchers to:

- Measure Progress: Track the advancements of AI systems in performing complex research tasks over time.

- Identify Strengths and Weaknesses: Understand which aspects of research AI models excel at and where they still struggle.

- Guide Future Research: Inform the development of AI systems with improved research and problem-solving abilities.

- Assess Autonomy: Gauge the level of autonomy and expertise that AI systems are achieving in a demanding real-world domain.

Limitations

While PaperBench is a valuable tool, it’s important to note some of its limitations. It focuses on the ability to replicate empirical research in machine learning, meaning research that involves experiments and data. It might not fully capture other aspects of research, such as generating original ideas, formulating new theories, or writing insightful analyses. Additionally, the benchmark relies heavily on the quality of the rubrics and the accuracy of the automated judge, which are still areas of ongoing development.
