AMD and ONNX with TurnkeyML

Driven by generative AI models that can produce text and synthesize images, machine learning and artificial intelligence are constantly evolving. New models keep pushing the limits of what is possible in this fast-moving field. Exciting as this is, it poses a major challenge for model repositories trying to stay current. The core problem is maintaining and consuming these models: as the field advances, models that were state-of-the-art can quickly become outdated because repositories cannot keep pace with newer, more efficient architectures. The difficulty is compounded by the large and growing combination of hardware backends, software stacks, and model precisions, each of which adds to the complexity of managing models.

The ONNX ecosystem found that a more dynamic, automated approach is needed to continuously integrate these new state-of-the-art model architectures. Making the newest models easily accessible and optimized for a range of platforms and applications is essential if the community is to realize the full promise of these advances.

Introducing TurnkeyML:

To address these challenges, AMD, in partnership with the ONNX community, is pleased to introduce TurnkeyML, an open-source toolchain designed to improve how AI models are prepared for inference. With TurnkeyML, you can quickly and easily integrate open-source PyTorch models, optimize them, and run them on a variety of hardware targets, with all of this functionality remaining transparent to the user.

TurnkeyML’s key strength is that it automates the import and pre-processing of open-source AI models, which saves a great deal of time and effort when integrating models into the ONNX model repository. Users can also adjust the model processing parameters, such as the target opset version, the data type precision, and the model optimizations to apply.
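To make these parameters concrete, here is a minimal Python sketch of how such options might be bundled and validated before processing begins. The class name, field names, allowed precisions, the `"fuse_ops"` optimization name, and the default opset of 17 are all assumptions chosen for illustration, not TurnkeyML’s actual interface.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical option set for illustration only; TurnkeyML's real parameter
# names and accepted values may differ.
ALLOWED_PRECISIONS = ("fp32", "fp16", "int8")


@dataclass(frozen=True)
class ProcessingOptions:
    """Settings a user might adjust when preparing a model for ONNX."""

    opset: int = 17                      # target ONNX opset version (arbitrary default)
    precision: str = "fp32"              # data type precision
    optimizations: Tuple[str, ...] = ()  # names of graph optimizations to apply

    def __post_init__(self) -> None:
        # Reject invalid settings before any export or optimization work starts.
        if self.opset < 1:
            raise ValueError(f"opset must be a positive integer, got {self.opset}")
        if self.precision not in ALLOWED_PRECISIONS:
            raise ValueError(
                f"precision must be one of {ALLOWED_PRECISIONS}, got {self.precision!r}"
            )


default_options = ProcessingOptions()
custom_options = ProcessingOptions(opset=18, precision="fp16", optimizations=("fuse_ops",))
print(default_options)
print(custom_options)
```

Freezing the dataclass prevents a set of options from changing partway through processing, which helps keep repeated runs consistent.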

TurnkeyML Architecture:

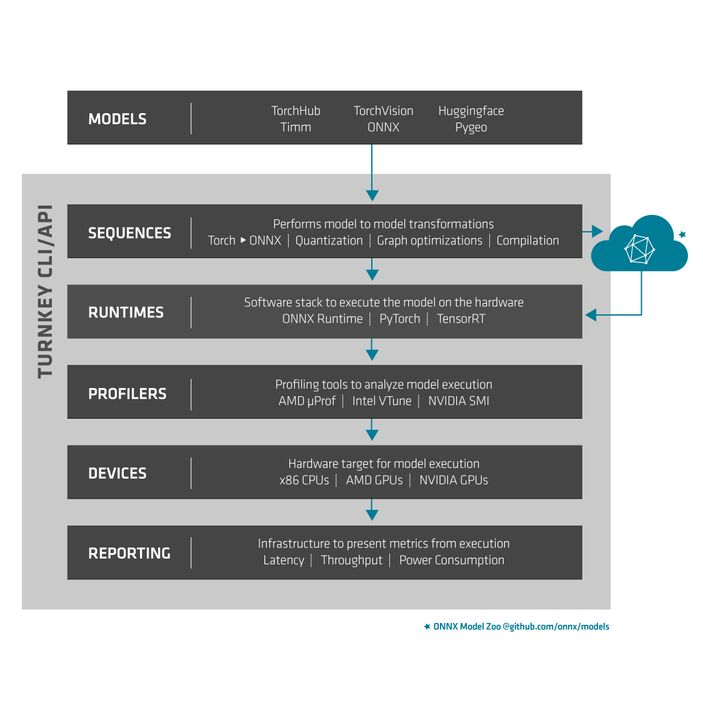

TurnkeyML can be used through an API or a CLI to suit different user preferences and automation needs. Its architecture comprises several key components, each playing a specialized role in model deployment and optimization:

Sequences: Model-to-model transformations are carried out by sequences, the fundamental building blocks of the TurnkeyML stack. Examples of such transformations include applying model quantization techniques, exporting a PyTorch model to the ONNX format with torch.onnx.export, and optimizing graphs with ONNX Runtime to improve model performance.
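The model-to-model idea can be illustrated with a small pipeline in which every stage takes a model and returns a transformed one. In this sketch a model is just a dictionary, and the stage functions and `run_sequence` are hypothetical stand-ins: they do not call torch.onnx.export or ONNX Runtime, and they are not TurnkeyML’s own sequence implementation.

```python
from typing import Callable, List

# A "model" here is a plain dict standing in for a real model object; each
# stage takes a model and returns a new, transformed model (model-to-model).
Model = dict
Stage = Callable[[Model], Model]


def export_to_onnx(model: Model) -> Model:
    # Stand-in for an export step such as torch.onnx.export.
    return {**model, "format": "onnx"}


def optimize_graph(model: Model) -> Model:
    # Stand-in for graph optimization, e.g. with ONNX Runtime.
    return {**model, "optimized": True}


def quantize(model: Model) -> Model:
    # Stand-in for a quantization pass that lowers precision.
    return {**model, "precision": "int8"}


def run_sequence(model: Model, stages: List[Stage]) -> Model:
    """Apply each stage in order, feeding one stage's output into the next."""
    for stage in stages:
        model = stage(model)
    return model


source = {"name": "resnet50", "format": "pytorch", "precision": "fp32"}
result = run_sequence(source, [export_to_onnx, optimize_graph, quantize])
print(result)
```

Because each stage returns a new dictionary instead of mutating its input, the original `source` is left untouched and stages can be reordered or swapped independently.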

Runtimes: Also known as the execution layer, a runtime is the software that executes a given model on a particular piece of hardware. Examples include runtimes that offer cross-platform compatibility and support various hardware backends, such as ONNX Runtime with its ROCm execution provider for AMD GPUs, as well as high-performance frameworks such as TensorRT™ for NVIDIA GPUs.
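One way to picture a runtime is as an object with a common `run` method, so the rest of the toolchain does not need to know which backend sits underneath. The `Runtime` and `PythonCPURuntime` classes below are hypothetical; a real runtime would wrap something like an ONNX Runtime `InferenceSession` configured with an execution provider, whereas this stdlib-only sketch simply calls a Python function.

```python
import time
from abc import ABC, abstractmethod
from typing import Any, Callable, List


class Runtime(ABC):
    """Hypothetical execution-layer interface: runs a model on one target."""

    @abstractmethod
    def run(self, model: Callable[[Any], Any], inputs: Any) -> Any:
        """Execute `model` on `inputs` and return its outputs."""

    def time_runs(self, model: Callable[[Any], Any], inputs: Any,
                  iterations: int = 10) -> List[float]:
        """Execute the model repeatedly and return per-run latencies in ms."""
        latencies = []
        for _ in range(iterations):
            start = time.perf_counter()
            self.run(model, inputs)
            latencies.append((time.perf_counter() - start) * 1000.0)
        return latencies


class PythonCPURuntime(Runtime):
    """Toy runtime that simply calls the model on the host CPU."""

    def run(self, model, inputs):
        return model(inputs)


def toy_model(xs):
    # Stands in for a compiled model: doubles every input value.
    return [2 * x for x in xs]


runtime = PythonCPURuntime()
print(runtime.run(toy_model, [1, 2, 3]))
print(runtime.time_runs(toy_model, [1, 2, 3], iterations=5))
```

Supporting another backend in this design means writing one more subclass that implements `run`; the timing logic in the base class is shared unchanged.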

Profilers: TurnkeyML integrates with a number of profiling tools so that users can assess and improve model performance while gaining insight into how efficiently their model executes. Users can choose from a variety of profilers, such as Intel’s VTune Profiler for comprehensive CPU profiling and performance monitoring, or NVIDIA’s System Management Interface (SMI) for GPU data.
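Profilers fit naturally as hooks wrapped around model execution. As a hedged, stdlib-only illustration, the context manager below records wall-clock time and peak Python heap usage with `tracemalloc`. The names `python_profiler` and `toy_inference` are hypothetical, and this is not how TurnkeyML integrates VTune or the NVIDIA SMI, which measure hardware-level behavior.

```python
import time
import tracemalloc
from contextlib import contextmanager


@contextmanager
def python_profiler(report: dict):
    """Hypothetical profiler hook: records wall time and peak Python heap use.

    tracemalloc only sees allocations made through the Python interpreter,
    so this is far narrower than a hardware profiler.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        yield report
    finally:
        report["wall_time_ms"] = (time.perf_counter() - start) * 1000.0
        _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
        report["peak_python_bytes"] = peak
        tracemalloc.stop()


def toy_inference(n: int) -> int:
    # Allocates a list so the memory tracker has something to observe.
    data = [i * i for i in range(n)]
    return sum(data)


stats = {}
with python_profiler(stats):
    total = toy_inference(100_000)
print(total, stats)
```

Because the profiler only wraps the call, a different profiler could be substituted without changing the code being measured.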

Devices: TurnkeyML is engineered to be device-agnostic, targeting a broad spectrum of hardware platforms. Whether models run on x86 CPUs, take advantage of the parallel computing capabilities of GPUs, or are deployed to custom accelerators, TurnkeyML offers flexibility in hardware choice. Adding new hardware targets to TurnkeyML is straightforward.

Reporting: The final component is TurnkeyML’s reporting infrastructure. It collects and presents performance metrics from model execution, such as mean latency (ms) and throughput (inferences per second, IPS). This reporting lets users visualize performance statistics, gain insight into efficiency, and make well-informed decisions about model deployment.
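The two headline metrics are related by simple arithmetic. Assuming inferences run one after another, throughput in inferences per second equals the batch size times 1000 divided by the mean latency in milliseconds. The minimal sketch below shows that calculation; the function name `summarize` and the sample latencies are made up for illustration.

```python
from statistics import mean


def summarize(latencies_ms, batch_size: int = 1) -> dict:
    """Turn per-run latencies into mean latency and throughput.

    Assumes runs execute sequentially, so throughput in inferences per
    second (IPS) is batch_size * 1000 / mean latency in milliseconds.
    """
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    mean_ms = mean(latencies_ms)
    return {
        "mean_latency_ms": mean_ms,
        "throughput_ips": batch_size * 1000.0 / mean_ms,
    }


# Made-up sample latencies, purely for illustration.
report = summarize([4.0, 5.0, 6.0])
print(report)  # {'mean_latency_ms': 5.0, 'throughput_ips': 200.0}

batched = summarize([10.0, 10.0], batch_size=8)
print(batched["throughput_ips"])  # 800.0
```

If a backend overlaps several inferences concurrently, this formula no longer holds, and throughput would have to be measured directly as completed inferences over elapsed time.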

TurnkeyML’s modular, plug-and-play architecture makes it simple to add, remove, or replace any component without laborious integration work. Thanks to this modularity, developers can easily adapt the toolchain to their own requirements by integrating additional models, introducing new model-to-model transformations, or targeting new hardware accelerators.
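Plug-and-play designs like this are commonly built on a registry: components are looked up by name, so adding, replacing, or removing one does not touch the rest of the code. The following is a generic sketch of that pattern, using hardware targets as the example; `DEVICES`, `register_device`, and the target names are hypothetical and not part of TurnkeyML’s API.

```python
from typing import Callable, Dict

# Hypothetical component registry; names and structure are illustrative only.
DEVICES: Dict[str, Callable[[], str]] = {}


def register_device(name: str):
    """Decorator that adds (or replaces) a hardware target under `name`."""
    def decorator(factory: Callable[[], str]) -> Callable[[], str]:
        DEVICES[name] = factory
        return factory
    return decorator


@register_device("x86")
def x86_target() -> str:
    return "running on x86 CPU"


@register_device("gpu")
def gpu_target() -> str:
    return "running on GPU"


# Adding a new accelerator requires no changes to existing code.
@register_device("custom_accel")
def custom_accel_target() -> str:
    return "running on custom accelerator"


# Replacing a component: re-registering a name overrides the old entry.
@register_device("gpu")
def gpu_target_v2() -> str:
    return "running on GPU (new backend)"


# Removing a component is just deleting its entry.
del DEVICES["custom_accel"]

print(sorted(DEVICES))   # ['gpu', 'x86']
print(DEVICES["gpu"]())  # running on GPU (new backend)
```

The same pattern applies equally to stages, runtimes, or profilers: only the registry lookup ties the pieces together.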

TurnkeyML’s systematic reproducibility brings important advantages for AI development:

Reduces User Error: Automating the process reduces human error, improving consistency and reliability when handling models.

Standardizes Model Sources: It ensures that models are consumed and processed consistently, which makes comparison and evaluation simpler.

Standardizes Testing Environments: Consistent test environments enable fair and precise benchmarking across many models.
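A common way to achieve this kind of reproducibility is to identify every build by a deterministic fingerprint of its inputs, so that identical model sources and settings always map to the same identifier and results can be compared directly. The sketch below hashes a model source and its options with SHA-256; `build_fingerprint` is a hypothetical helper and not TurnkeyML’s actual mechanism.

```python
import hashlib
import json


def build_fingerprint(model_source: str, options: dict) -> str:
    """Derive a stable ID from a model's source and its processing options.

    Serializing with sorted keys means the same inputs always yield the same
    hash, regardless of the order in which options were specified.
    """
    payload = json.dumps({"source": model_source, "options": options}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


a = build_fingerprint("resnet50.py", {"opset": 17, "precision": "fp16"})
b = build_fingerprint("resnet50.py", {"precision": "fp16", "opset": 17})
c = build_fingerprint("resnet50.py", {"opset": 18, "precision": "fp16"})
print(a == b, a == c)  # True False
```

Any change to the source or to a single option produces a different fingerprint, which makes it easy to tell whether two benchmark results were produced under the same conditions.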