Ironwood, Google’s seventh-generation Tensor Processing Unit (TPU), is Google’s most performant and scalable custom AI accelerator to date, and the first TPU designed specifically for inference.

For more than a decade, TPUs have powered Google’s most demanding AI training and serving workloads, and Cloud customers can now do the same. Ironwood is the most powerful, capable, and energy-efficient TPU yet, and it is purpose-built to power thinking, inferential AI models at scale.

Ironwood marks a significant shift in the development of AI and the infrastructure that powers it: a move from responsive AI models that provide real-time information for people to interpret, to models that proactively generate insights and interpretation on their own. Google calls this the “age of inference,” in which AI agents proactively retrieve and generate data to collaboratively deliver insights and answers, not just data.

Ironwood is built to handle the massive computational and communication demands of this next phase of generative AI. It scales up to 9,216 liquid-cooled chips linked by a breakthrough Inter-Chip Interconnect (ICI) network, in a configuration spanning nearly 10 MW. It is one of several new components of the Google Cloud AI Hypercomputer architecture, which co-optimizes hardware and software for the most demanding AI workloads. With Google’s own Pathways software stack, developers can easily and reliably harness the combined computing power of tens of thousands of Ironwood TPUs.
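The pod size and power figure above allow a quick back-of-envelope calculation of what they imply per chip. This is a rough sketch under stated assumptions: it treats the “nearly 10 MW” figure as exactly 10 MW for the whole 9,216-chip pod, so the per-chip share includes everything the pod spans (cooling, networking) and is not a chip TDP. It also reuses the 4,614 TFLOPs per-chip peak quoted later in this article; the function names are hypothetical.

```python
# Back-of-envelope power arithmetic for a full Ironwood pod.
# Assumption: ~10 MW is the power envelope of the whole 9,216-chip pod,
# not a per-chip rating, and is treated here as exactly 10 MW.
POD_CHIPS = 9_216
POD_POWER_W = 10e6            # "nearly 10 MW", rounded to 10 MW
PEAK_TFLOPS_PER_CHIP = 4_614  # per-chip peak quoted in the article


def watts_per_chip(pod_power_w: float, chips: int) -> float:
    """Average share of the pod power budget attributable to one chip."""
    return pod_power_w / chips


def pod_tflops_per_watt(chips: int, tflops_per_chip: float, pod_power_w: float) -> float:
    """Peak pod compute divided by pod power (an optimistic upper bound)."""
    return chips * tflops_per_chip / pod_power_w


if __name__ == "__main__":
    share = watts_per_chip(POD_POWER_W, POD_CHIPS)
    eff = pod_tflops_per_watt(POD_CHIPS, PEAK_TFLOPS_PER_CHIP, POD_POWER_W)
    print(f"~{share:.0f} W of the pod budget per chip")
    print(f"~{eff:.2f} peak TFLOPs per watt at pod level")
```

Because real workloads rarely sustain peak FLOPs, the TFLOPs-per-watt figure is a ceiling, not an expected operating point.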

Powering the age of inference with Ironwood

Ironwood is designed to manage the complex computation and communication demands of “thinking models,” which include Large Language Models (LLMs), Mixture of Experts (MoEs), and advanced reasoning tasks. These models require massive parallel processing and efficient memory access. Ironwood is built to carry out massive tensor manipulations while minimizing on-chip data movement and latency. At the frontier, the computation demands of thinking models extend well beyond the capacity of any single chip, so Google designed Ironwood TPUs with a low-latency, high-bandwidth ICI network that supports coordinated, synchronous communication at full TPU pod scale.
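When a model is split across many chips, each step typically ends with chips combining partial results, and a classic collective for that kind of synchronous exchange is the all-reduce. The sketch below simulates a ring all-reduce over a list of per-chip buffers in plain Python. It is a generic textbook algorithm chosen for illustration; it is not a description of how Ironwood’s ICI, its topology, or Google’s software actually implement collectives.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Sum equal-length vectors across n simulated chips with a ring all-reduce.

    Each vector is split into n chunks. In the reduce-scatter phase, every
    chip i sends one partially summed chunk to chip (i + 1) % n per step;
    after n - 1 steps each chip owns one fully summed chunk. The all-gather
    phase then circulates the finished chunks for another n - 1 steps.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    data = [list(b) for b in buffers]  # work on copies of the inputs
    # Chunk c covers data[i][start[c]:start[c + 1]].
    start = [c * length // n for c in range(n + 1)]

    # Phase 1: reduce-scatter.
    for step in range(n - 1):
        # Snapshot every send first so all chips exchange "simultaneously".
        sends = []
        for i in range(n):
            c = (i - step) % n
            sends.append((c, data[i][start[c]:start[c + 1]]))
        for i, (c, chunk) in enumerate(sends):
            dst = (i + 1) % n
            for k, v in enumerate(chunk):
                data[dst][start[c] + k] += v

    # Now chip i holds the complete sum for chunk (i + 1) % n.
    # Phase 2: all-gather.
    for step in range(n - 1):
        sends = []
        for i in range(n):
            c = (i + 1 - step) % n
            sends.append((c, data[i][start[c]:start[c + 1]]))
        for i, (c, chunk) in enumerate(sends):
            dst = (i + 1) % n
            data[dst][start[c]:start[c + 1]] = chunk
    return data


if __name__ == "__main__":
    chips = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
    print(ring_all_reduce(chips))
```

The ring variant is a common design choice because each chip only talks to its neighbor and sends about 2(n - 1)/n of its buffer in total, regardless of how many chips take part.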

Ironwood is available to Google Cloud customers in two sizes, based on the demands of their AI workloads: a 256-chip configuration and a 9,216-chip configuration.
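The aggregate compute of each configuration follows directly from the per-chip peak of 4,614 TFLOPs quoted for Ironwood, and the same arithmetic reproduces the 42.5 Exaflops pod figure and the comparison with El Capitan’s 1.7 Exaflops. The article does not state the numeric precision behind either number, so this minimal sketch treats the ratio as a comparison of quoted peak figures only.

```python
PEAK_TFLOPS_PER_CHIP = 4_614  # per-chip peak quoted for Ironwood
EL_CAPITAN_EXAFLOPS = 1.7     # figure quoted for El Capitan
POD_SIZES = (256, 9_216)      # the two configurations offered


def pod_exaflops(chips: int, tflops_per_chip: float = PEAK_TFLOPS_PER_CHIP) -> float:
    """Aggregate peak compute of a pod, converted from TFLOPs to exaflops."""
    return chips * tflops_per_chip / 1e6  # 1 exaflop = 1e6 TFLOPs


for chips in POD_SIZES:
    ef = pod_exaflops(chips)
    ratio = ef / EL_CAPITAN_EXAFLOPS
    print(f"{chips:>5} chips: {ef:6.2f} EF, {ratio:5.1f}x El Capitan")
```

The 9,216-chip result comes out to about 42.5 EF, or roughly 25 times the El Capitan figure, consistent with the “more than 24 times” claim.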

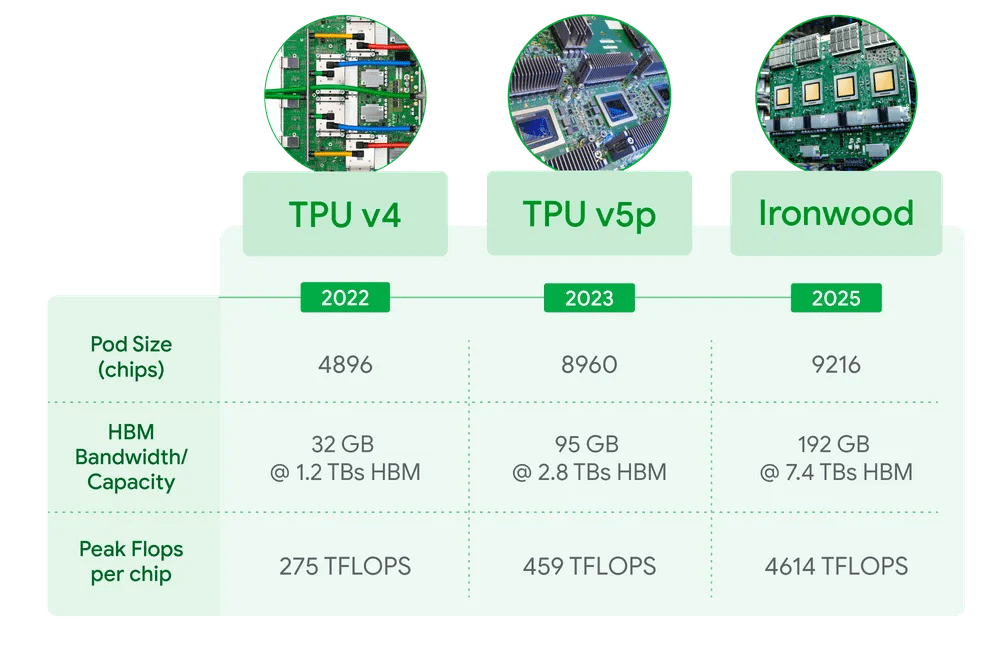

- When scaled to 9,216 chips per pod, for a total of 42.5 Exaflops, Ironwood delivers more than 24 times the compute power of El Capitan, the world’s largest supercomputer, which offers 1.7 Exaflops. Ironwood provides the massive parallel processing power needed for the most demanding AI workloads, such as very large dense LLMs or MoE models with thinking capabilities, for both training and inference. Each chip delivers a peak compute of 4,614 TFLOPs. To sustain peak performance at this scale, Ironwood’s memory and network architecture ensures that the right data is always available.

- Ironwood also features an enhanced SparseCore, a specialized accelerator for processing the ultra-large embeddings common in advanced ranking and recommendation workloads. Expanded SparseCore support lets Ironwood accelerate a wider range of workloads, extending beyond traditional AI domains into scientific and financial ones.

- Pathways, Google’s own machine learning runtime developed by Google DeepMind, enables efficient distributed computing across multiple TPU chips. Pathways on Google Cloud makes it straightforward to move beyond a single Ironwood pod, allowing hundreds of thousands of Ironwood chips to be composed together to rapidly push the boundaries of AI computation.
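To make “ultra-large embeddings” concrete: ranking and recommendation models map sparse IDs (users, items, query terms) to rows of very large tables and combine the handful of rows each example touches. The sketch below shows that gather-then-reduce access pattern, often called an embedding bag, in plain Python. The function name and the sum/mean combiners are illustrative assumptions; the sketch says nothing about how SparseCore itself is programmed.

```python
from typing import List, Sequence


def embedding_bag(table: Sequence[Sequence[float]], ids: Sequence[int],
                  combiner: str = "sum") -> List[float]:
    """Gather the rows named by `ids` and combine them into one vector.

    Only a few rows of a potentially huge table are read per example, which
    makes the workload dominated by scattered memory lookups, not dense math.
    """
    if not ids:
        raise ValueError("need at least one id")
    dim = len(table[0])
    out = [0.0] * dim
    for i in ids:
        row = table[i]  # random-access gather of one embedding row
        for d in range(dim):
            out[d] += row[d]
    if combiner == "mean":
        out = [v / len(ids) for v in out]
    elif combiner != "sum":
        raise ValueError(f"unknown combiner: {combiner}")
    return out


if __name__ == "__main__":
    # Tiny stand-in table: row r is [r, 2r]. Real tables can hold
    # millions of rows, which is what makes dedicated hardware attractive.
    table = [[float(r), 2.0 * r] for r in range(10)]
    print(embedding_bag(table, [1, 3]))          # sum of rows 1 and 3
    print(embedding_bag(table, [1, 3], "mean"))  # their average
```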

Ironwood key features

Google Cloud is the only hyperscaler with more than a decade of experience delivering AI compute to support cutting-edge research, seamlessly integrated into planetary-scale services used by billions of people every day through Gmail, Search, and more. All of this expertise is at the core of Ironwood’s capabilities. Key features include:

- Significant performance gains with a focus on power efficiency, allowing AI workloads to run more cost-effectively. Ironwood’s performance per watt is twice that of Trillium, Google’s sixth-generation TPU announced last year. At a time when available power is one of the constraints on delivering AI capabilities, this provides significantly more capacity per watt for customer workloads. Advanced liquid cooling and an optimized chip design can reliably sustain up to twice the performance of standard air cooling, even under continuous, heavy AI workloads. Ironwood is also about 30 times more power-efficient than the first Cloud TPU from 2018.

- Substantially more High Bandwidth Memory (HBM) capacity. With 192 GB per chip, six times that of Trillium, Ironwood can hold larger models and datasets, reducing the need for frequent data transfers and improving performance.

- Dramatically improved HBM bandwidth, reaching 7.2 TBps per chip, 4.5 times that of Trillium. This high bandwidth ensures rapid data access, which is crucial for the memory-intensive workloads common in modern AI.

- Enhanced Inter-Chip Interconnect (ICI) bandwidth. This has been increased to 1.2 Tbps bidirectional, 1.5 times that of Trillium, enabling faster communication between chips and efficient distributed training and inference at scale.
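The memory figures above interact with the compute figure in a way a short calculation makes clear. This sketch assumes decimal units (1 GB = 1e9 bytes, 1 TBps = 1e12 bytes/s) and uses a simple roofline model, a standard simplification that assumes peak rates and perfect overlap; the article does not say which numeric precision the 4,614 TFLOPs peak refers to. It also backs out the Trillium values implied by the quoted ratios; those are derived from the ratios, not quoted specifications.

```python
HBM_BYTES = 192e9            # 192 GB of HBM per chip
HBM_BW_BYTES_PER_S = 7.2e12  # 7.2 TBps of HBM bandwidth per chip
PEAK_FLOPS = 4_614e12        # 4,614 TFLOPs peak per chip


def hbm_sweep_ms(hbm_bytes: float = HBM_BYTES, bw: float = HBM_BW_BYTES_PER_S) -> float:
    """Time to read the entire HBM once at full bandwidth, in milliseconds."""
    return hbm_bytes / bw * 1e3


def ridge_intensity(peak_flops: float = PEAK_FLOPS, bw: float = HBM_BW_BYTES_PER_S) -> float:
    """FLOPs per byte a kernel needs before it stops being bandwidth-bound
    (the 'ridge point' of a simple roofline model)."""
    return peak_flops / bw


def implied_trillium(value: float, ratio: float) -> float:
    """Back out the Trillium figure implied by an 'N times Trillium' claim."""
    return value / ratio


if __name__ == "__main__":
    print(f"Full HBM sweep: {hbm_sweep_ms():.1f} ms")
    print(f"Roofline ridge point: {ridge_intensity():.0f} FLOPs per byte")
    print(f"Implied Trillium HBM: {implied_trillium(192, 6):.0f} GB, "
          f"{implied_trillium(7.2, 4.5):.1f} TBps")
```

A kernel that performs fewer than roughly 640 FLOPs per byte it reads from HBM is limited by memory bandwidth, not compute, which is why the bandwidth increase matters as much as the capacity increase for memory-intensive inference.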


Ironwood solves the AI demands of tomorrow

Ironwood’s gains in compute power, memory capacity, ICI networking, and reliability make it a unique breakthrough for the age of inference. With these advances and nearly twice the power efficiency, Google’s most demanding customers can run training and serving workloads with the highest performance and lowest latency while keeping pace with exponential growth in computing demand. Leading models such as Gemini 2.5 and the Nobel Prize-winning AlphaFold already run on TPUs. Google says it looks forward to seeing what AI breakthroughs its developers and Google Cloud customers will create when Ironwood becomes available later this year.
