Increase PyTorch’s Inference and Training Speed with Intel Advanced Matrix Extensions (Intel AMX)

PyTorch is a deep learning framework built on the Torch library and used primarily for computer vision and natural language processing applications. It was created by Meta and is now part of the Linux Foundation. Intel works with the open source PyTorch project to optimize the framework for Intel architectures. The newest features and optimizations are released first in the Intel Extension for PyTorch and are later upstreamed into stock PyTorch.

This article introduces Intel Advanced Matrix Extensions (Intel AMX), the built-in AI accelerator engine in 4th Gen Intel Xeon processors, and shows how to use it with PyTorch to speed up AI training and inference.

Intel AMX in 4th Gen Intel Xeon Scalable processors

4th Gen Intel Xeon processors are optimized for total cost of ownership and are designed to deliver better security, power-efficient processing, and higher performance. For AI workloads, they extend what CPUs can do.

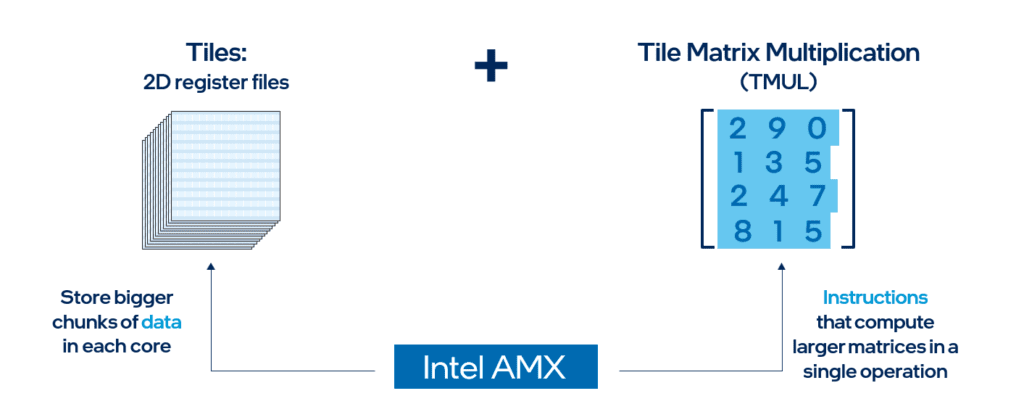

Every core of a 4th Gen Intel Xeon processor includes an integrated accelerator, Intel AMX, that speeds up deep learning training and inference workloads. The Intel AMX architecture has two main components:

- Tiles: eight new two-dimensional registers, each 1 KB in size, that hold large chunks of data.

- TMUL (Tile Matrix Multiply): instructions that operate on the tiles to perform the matrix-multiply calculations used in AI.
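To make the 1 KB figure concrete: each tile holds at most 16 rows of 64 bytes. The plain-Python sketch below works out the largest operand shapes for one BF16 tile multiply (the TDPBF16PS instruction, which multiplies BF16 tiles A and B and accumulates the result into an FP32 tile C) and models the math as C += A · B. It is an illustration only: the helper names are hypothetical, and the real hardware also expects B in a pair-interleaved layout that this sketch ignores.

```python
# Maximum tile shape: 16 rows x 64 bytes = 1 KB per tile register.
MAX_ROWS = 16
ROW_BYTES = 64


def tile_bytes(rows: int, cols: int, elem_bytes: int) -> int:
    """Storage needed by a rows x cols tile of elem_bytes-wide elements."""
    return rows * cols * elem_bytes


# Largest shapes for one BF16 tile multiply:
# A is M x K (BF16), B is K x N (BF16), C is M x N (FP32 accumulators).
M = MAX_ROWS          # 16 rows in A and C
K = ROW_BYTES // 2    # 32 two-byte BF16 values fill one 64-byte row of A
N = ROW_BYTES // 4    # 16 four-byte FP32 values fill one 64-byte row of C


def tile_matmul_reference(a, b, c):
    """Plain-Python model of C += A @ B on nested lists (not the real instruction)."""
    for m in range(len(a)):
        for n in range(len(c[0])):
            c[m][n] += sum(a[m][k] * b[k][n] for k in range(len(b)))
    return c


if __name__ == "__main__":
    print("A:", tile_bytes(M, K, 2), "bytes")  # 16 x 32 BF16
    print("B:", tile_bytes(K, N, 2), "bytes")  # 32 x 16 BF16
    print("C:", tile_bytes(M, N, 4), "bytes")  # 16 x 16 FP32
    print("FLOPs per tile multiply:", 2 * M * N * K)
```

All three operands fill exactly one 1 KB tile, and a single instruction performs 2 × 16 × 16 × 32 = 16,384 floating-point operations, which is what "computing larger matrices in a single operation" means in practice.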

Put simply, Intel AMX stores larger chunks of data in each core and computes larger matrices in a single operation. Intel AMX supports only the BF16 and INT8 data types; FP32 work continues to run on Intel Advanced Vector Extensions 512 (Intel AVX-512) instructions, as it did on 3rd Gen Intel Xeon processors. Intel AMX accelerates deep learning tasks such as image detection, natural language processing, and recommender systems.

Traditional machine learning workloads on tabular data continue to use the existing Intel AVX-512 instructions. 4th Gen Intel Xeon processors switch seamlessly between Intel AMX and Intel AVX-512 so that code runs on the most efficient instruction set, which is especially useful for deep learning workloads that use mixed precision.

What is Mixed Precision Learning?

Mixed precision learning is a method of training large neural networks in which the model's values are held in data types of different precision (most often 16-bit and 32-bit floating point): lower precision where it is safe, full precision where accuracy requires it. This lets the network run faster and use less memory.

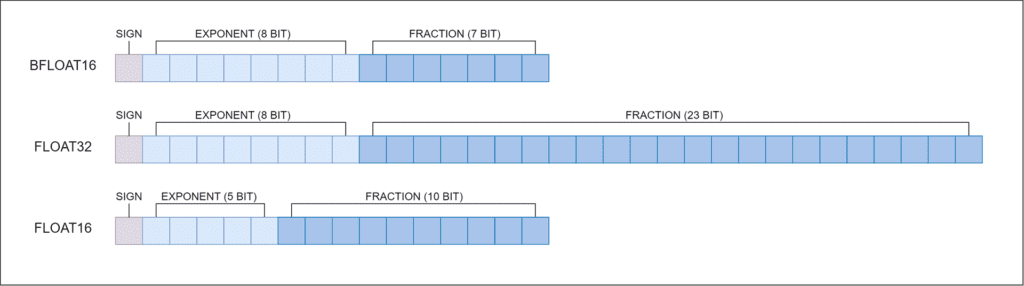

Most models today use the single-precision floating-point (FP32) data type, which takes 32 bits of memory. Two lower-precision data types need only 16 bits each: float16 and bfloat16 (BF16). Because BF16 keeps the same 8-bit exponent as FP32, it covers approximately the same dynamic range as 32-bit floating-point numbers while using only 16 bits of memory. The BF16 format is:

- 1 bit – sign,

- 8 bits – exponent,

- 7 bits – fraction.

The image above illustrates the differences between float16, float32, and bfloat16.
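The bit layout above can be explored in plain Python. This sketch converts an FP32 value to BF16 by keeping its upper 16 bits, with round-to-nearest-even applied to the discarded half; that rounding rule is a common conversion scheme and is an assumption here, not a description of what any particular library or instruction does. The helper names are hypothetical, and NaN handling is deliberately omitted.

```python
import struct


def fp32_bits(x: float) -> int:
    """Raw 32-bit pattern of x after rounding it to FP32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bf16_bits(x: float) -> int:
    """Round an FP32 value to a 16-bit BF16 pattern (round-to-nearest-even; NaN handling omitted)."""
    bits = fp32_bits(x)
    # Add just under half of the dropped range, plus 1 if the kept part is odd, so ties round to even.
    bits += 0x7FFF + ((bits >> 16) & 1)
    return (bits >> 16) & 0xFFFF


def bf16_to_float(b: int) -> float:
    """Widen BF16 back to FP32 by appending 16 zero bits of fraction."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


def bf16_fields(b: int) -> tuple[int, int, int]:
    """Split a BF16 pattern into (sign, exponent, fraction): 1, 8 and 7 bits."""
    return (b >> 15) & 0x1, (b >> 7) & 0xFF, b & 0x7F


if __name__ == "__main__":
    for value in (1.0, -2.5, 3.14159, 1e38):
        b = to_bf16_bits(value)
        print(f"{value!r:>8} -> 0x{b:04X} fields={bf16_fields(b)} back={bf16_to_float(b)!r}")
```

For example, to_bf16_bits(3.14159) gives 0x4049, which widens back to 3.140625: BF16 keeps roughly two to three significant decimal digits, while a value as large as 1e38 (far beyond the float16 maximum of 65504) still fits.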

Intel Extension for PyTorch

The Intel Extension for PyTorch adds the newest features and optimizations to PyTorch for an extra performance boost on Intel hardware. Most of these features are expected to be incorporated into future releases of stock PyTorch. The extension is available on its own or as part of the Intel AI Analytics Toolkit. (To install the extension, refer to the installation instructions.)

The extension can be loaded as a Python module or linked as a C++ library. Python users can enable it dynamically by importing intel_extension_for_pytorch.

- The CPU tutorial covers the Intel Extension for PyTorch for Intel CPUs in full. The source code is in the master branch.

- The GPU tutorial covers the Intel Extension for PyTorch for Intel GPUs in full. The source code is in the xpu-master branch.

How to Enable Intel AMX bfloat16 Mixed Precision Learning on a PyTorch Model

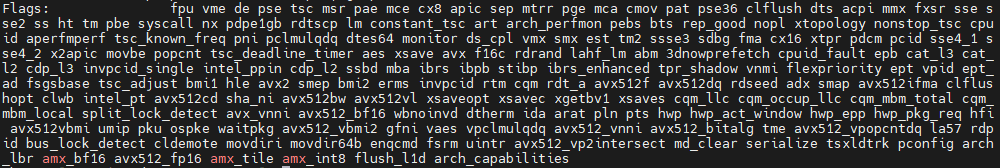

The first step is to verify that Intel AMX is enabled on your hardware. Run the following command in a bash terminal:

cat /proc/cpuinfo

Alternatively, you can use:

lscpu | grep amx

In the flags section, look for amx_bf16 and amx_int8. If you don't see them, upgrade to Linux kernel 5.17 or later. On a system with Intel AMX support, both flags appear in the output.
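The same check can be scripted, which is roughly what the code samples later in this article do before choosing a run case. This is a minimal sketch assuming a Linux system where /proc/cpuinfo exists (elsewhere it falls back to empty text). The function names are hypothetical, and amx_tile, a third AMX flag the kernel reports, is included as an addition to the two flags named above.

```python
from pathlib import Path

AMX_FLAGS = ("amx_bf16", "amx_int8", "amx_tile")


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags listed on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":  # exact match skips lines such as 'vmx flags'
            return set(value.split())
    return set()


def amx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Map each AMX flag to whether the CPU reports it."""
    flags = cpu_flags(cpuinfo_text)
    return {flag: flag in flags for flag in AMX_FLAGS}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else ""
    print(amx_support(text))
```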

The Intel Extension for PyTorch automatically detects the hardware and dispatches code to the best available instruction set at runtime. It does this much like the Intel oneAPI Deep Neural Network Library (oneDNN), a cross-platform, open source library that provides optimized implementations of deep learning building blocks to improve the performance of existing frameworks.

Intel AMX, the newest available instruction set architecture (ISA), is used by default, so you do not need to set the ONEDNN_MAX_CPU_ISA environment variable at runtime. The oneDNN documentation on CPU Dispatcher Control lists all of the available settings.
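Setting the variable is only needed to cap the ISA, as the code samples in the next section do when comparing Intel AMX with Intel AVX-512. Since oneDNN picks up this setting when it first initializes, changing it later in the same process may have no effect; a safe approach is to launch each run case in its own Python process. The sketch below assumes that approach. The run-case keys and helper names are hypothetical; the ISA values are the ones this article uses.

```python
import os
import subprocess
import sys

# ISA caps named in this article; DEFAULT lets oneDNN use the newest ISA (Intel AMX on 4th Gen Xeon).
MAX_ISA = {
    "amx": "DEFAULT",
    "avx512_bf16": "AVX512_CORE_BF16",
    "avx512_vnni": "AVX512_CORE_VNNI",
}


def env_for(run_case: str) -> dict[str, str]:
    """Copy of the current environment with ONEDNN_MAX_CPU_ISA set for one run case."""
    env = dict(os.environ)
    env["ONEDNN_MAX_CPU_ISA"] = MAX_ISA[run_case]
    return env


def run_with_isa(run_case: str, args: list[str]) -> int:
    """Run `python <args>` in a fresh process so oneDNN sees the ISA cap from the start."""
    completed = subprocess.run([sys.executable, *args], env=env_for(run_case), check=False)
    return completed.returncode
```

For example, run_with_isa("avx512_bf16", ["train.py"]) would run a hypothetical train.py with Intel AMX disabled, while the "amx" case leaves oneDNN free to pick Intel AMX.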

Steps to enable Intel AMX BF16 on PyTorch:

Enabling it takes only a few lines of code: import the Intel Extension for PyTorch module, pass the torch.bfloat16 data type to its optimize() function, and run the model under torch.cpu.amp.autocast(). The ResNet50 training and inference examples that follow apply these steps.

Passing torch.bfloat16 to optimize() converts the model parameters to BF16, and torch.cpu.amp.autocast() runs the operations in mixed precision, in this case BF16.
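The two steps can be sketched in a training loop as follows, assuming PyTorch is installed. To stay small and self-contained, the sketch uses a tiny stand-in classifier and random CIFAR10-shaped tensors instead of ResNet50 and the real dataset; build_bf16_training and train_step are hypothetical names, not part of either library. It uses torch.autocast(device_type="cpu", dtype=torch.bfloat16), the device-generic spelling of torch.cpu.amp.autocast(). If intel_extension_for_pytorch is not installed, it skips optimize() and falls back to stock PyTorch autocast.

```python
import torch
import torch.nn as nn

try:
    # Optional: adds Intel-specific optimizations; the sketch still runs without it.
    import intel_extension_for_pytorch as ipex
except ImportError:
    ipex = None


def build_bf16_training(model: nn.Module, lr: float = 0.01):
    """Return (model, optimizer, loss_fn) prepared for BF16 mixed-precision training."""
    model.train()  # optimize() with an optimizer expects a model in training mode
    optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)
    if ipex is not None:
        # Step 1: pass torch.bfloat16 to optimize(); with an optimizer it returns both objects.
        model, optimizer = ipex.optimize(model, optimizer=optimizer, dtype=torch.bfloat16)
    return model, optimizer, nn.CrossEntropyLoss()


def train_step(model, optimizer, loss_fn, data, target) -> float:
    """Run one optimization step and return the loss as a Python float."""
    optimizer.zero_grad()
    # Step 2: autocast runs eligible ops (e.g. linear, conv) in BF16 and keeps the rest in FP32.
    with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
        output = model(data)
        loss = loss_fn(output, target)
    loss.backward()
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    # Hypothetical stand-in for ResNet50 on CIFAR10: a tiny classifier and one random batch.
    net = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
    net, opt, criterion = build_bf16_training(net)
    images = torch.randn(8, 3, 32, 32)
    labels = torch.randint(0, 10, (8,))
    print(f"loss: {train_step(net, opt, criterion, images, labels):.4f}")
```

Because BF16 keeps FP32's exponent range, the sketch does not use a gradient scaler, which float16 training usually needs.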

Training and Inference Optimizations with Intel AMX

Training

This code sample shows how to train a ResNet50 model on the CIFAR10 dataset with the Intel Extension for PyTorch, and demonstrates the performance advantage of Intel AMX BF16 over FP32.

The code sample implements the following steps:

- Check the cpuinfo flags to confirm that the hardware supports Intel AMX.

- Load the CIFAR10 dataset.

- Set the ONEDNN_MAX_CPU_ISA environment variable:

  - DEFAULT when using Intel AMX

  - AVX512_CORE_BF16 when using Intel AVX-512

- Instantiate the ResNet50 model, then apply the Intel Extension for PyTorch optimize() method to the model and the chosen training optimizer.

- Train the model in each of the following run cases, using mixed precision where appropriate, and record the training time:

  - FP32 (baseline)

  - BF16 with Intel AVX-512

  - BF16 with Intel AMX

- Compare the training times and compute the speedup of each run case relative to the FP32 baseline.
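The timing and comparison steps can be sketched in plain Python. In this sketch, time_run and speedups are hypothetical helpers, and the untimed warm-up calls are an assumption: the first iterations often include one-time setup, such as kernel generation, that would otherwise skew the comparison.

```python
import time
from typing import Callable


def time_run(step: Callable[[], object], iterations: int = 10, warmup: int = 2) -> float:
    """Mean wall-clock seconds per call of `step`, measured after `warmup` untimed calls."""
    for _ in range(warmup):
        step()  # first calls may include one-time setup, so they are not timed
    start = time.perf_counter()
    for _ in range(iterations):
        step()
    return (time.perf_counter() - start) / iterations


def speedups(times: dict[str, float], baseline: str = "fp32") -> dict[str, float]:
    """Speedup of each run case relative to the baseline: baseline time / case time."""
    base = times[baseline]
    return {case: base / seconds for case, seconds in times.items()}
```

Each run case would pass its own step function (for example, one training iteration under the matching precision) to time_run, then hand the collected times to speedups; values above 1.0 mean that run case is faster than FP32.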

Try the code sample in a Linux environment, or on a 4th Gen Intel Xeon Scalable processor through the Developer Clouds for Accelerated Computing.

Inference

This code sample shows how to run inference on ResNet50 and BERT models with the Intel Extension for PyTorch, and demonstrates the performance advantage of Intel AMX BF16 and INT8 over FP32. It also compares Intel AMX INT8 with Intel AVX-512 Vector Neural Network Instructions (VNNI) INT8, the previous instruction set for INT8 operations.

The code sample implements the following steps:

- Check the cpuinfo flags to confirm that the hardware supports Intel AMX.

- Load the ResNet50 or BERT model.

- Set the ONEDNN_MAX_CPU_ISA environment variable:

  - DEFAULT when using Intel AMX

  - AVX512_CORE_VNNI when using Intel AVX-512

- Run inference on the model in each of the following run cases, using mixed precision where necessary, and record the inference time. For the INT8 run cases, the original FP32 model is quantized with the quantization feature of the Intel Extension for PyTorch. All models are then JIT-traced with TorchScript to benefit from graph optimizations, which is helpful when deploying models in production.

  - FP32 (baseline)

  - BF16

  - INT8 with Intel AVX-512 VNNI

  - INT8 with Intel AMX

- Compare the inference times and compute the speedup of each run case relative to the FP32 baseline.
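The BF16 inference run case can be sketched as follows, assuming PyTorch is installed. As in training, a tiny hypothetical classifier stands in for ResNet50 or BERT, and prepare_bf16_inference and predict are illustrative names. The sketch covers only the BF16 path: it does not show the INT8 quantization or the TorchScript tracing that the code sample also performs. If intel_extension_for_pytorch is missing, it falls back to stock PyTorch autocast.

```python
import torch
import torch.nn as nn

try:
    import intel_extension_for_pytorch as ipex
except ImportError:
    ipex = None


def prepare_bf16_inference(model: nn.Module) -> nn.Module:
    """Switch to eval mode and, if the extension is present, convert weights to BF16."""
    model.eval()
    if ipex is not None:
        # Without an optimizer argument, optimize() returns only the model.
        model = ipex.optimize(model, dtype=torch.bfloat16)
    return model


@torch.no_grad()
def predict(model: nn.Module, batch: torch.Tensor) -> torch.Tensor:
    """Return predicted class indices, running eligible ops in BF16 via autocast."""
    with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
        logits = model(batch)
    return logits.float().argmax(dim=1)


if __name__ == "__main__":
    net = prepare_bf16_inference(nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)))
    print(predict(net, torch.randn(4, 3, 32, 32)))
```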

What’s Next?

Get started with the Intel Extension for PyTorch today to accelerate PyTorch training and inference on 4th Gen Intel Xeon Scalable processors with Intel AMX.

To help you plan, develop, deploy, and scale your AI solutions, we also encourage you to explore Intel's other AI/ML framework optimizations and end-to-end portfolio of tools and integrate them into your AI workflow. You can also learn about oneAPI, the unified, open, standards-based programming model that forms the foundation of Intel's AI Software Portfolio.