Arize, Vertex AI API: Evaluation workflows to accelerate generative app development and AI ROI

Gemini 1.5 Pro, available through the Vertex AI API, is a cutting-edge large language model (LLM) with multimodal capabilities that enterprise teams can integrate across a wide range of applications and use cases. Its potential to transform business operations is substantial, from improving data analysis and decision-making to automating complex processes and enhancing customer interactions.

By using the Vertex AI API for Gemini, enterprise AI teams can:

- Develop more quickly: Use sophisticated natural language processing and generation to speed up code development, debugging, and documentation.

- Improve customer experiences: Deploy advanced chatbots and virtual assistants that can understand and respond to customer inquiries in a variety of ways.

- Enhance data analysis: Process and interpret different data formats, such as text, images, and audio, for more thorough and insightful analysis.

- Enhance decision-making: Use sophisticated reasoning capabilities to produce data-driven insights and recommendations that support strategic decisions.

- Encourage innovation: Use Vertex AI’s generative capabilities to explore new avenues for research, product development, and creative work.

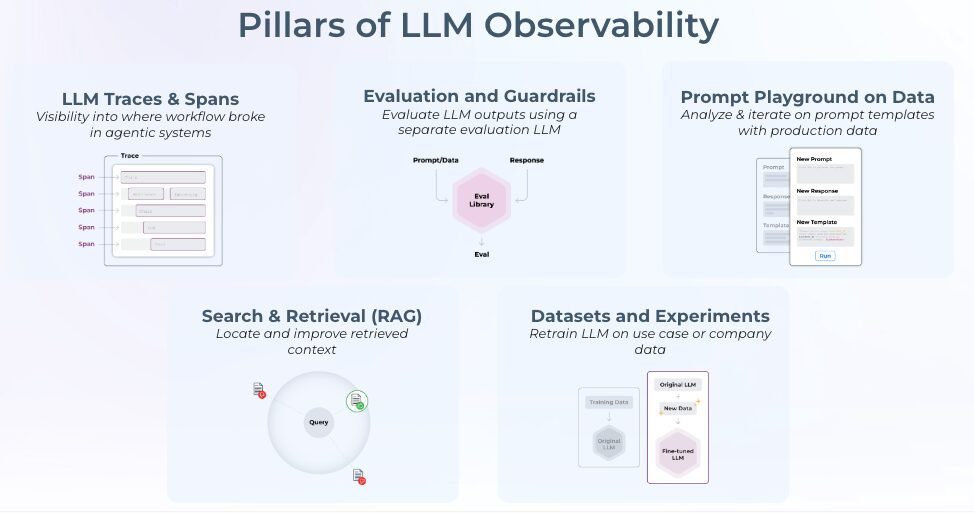

When building generative apps, teams using the Vertex AI API benefit from putting a telemetry system in place, namely AI observability and LLM evaluation, to validate performance and speed up the iteration cycle. When AI teams use Arize AI alongside their Google AI tools, they can:

- Continuously evaluate and monitor the performance of generative apps as input data changes and new use cases emerge. This helps ensure application stability and lets you address issues promptly both during development and after deployment.

- Accelerate development cycles: Use pre-production app evaluations and workflows to test and compare the results of multiple prompt iterations.

- Put guardrails in place: Systematically test the app’s responses to a wide range of inputs and edge cases to make sure outputs fall within acceptable bounds.

- Improve dynamic data curation: Automatically identify difficult or ambiguous examples for further analysis and fine-tuning, and flag low-performing sessions for review.

- Use Arize’s open-source evaluation solution consistently from development to deployment, then move to an enterprise-ready platform when apps are ready for production.

Solutions to common challenges that AI engineering teams face

Working with hundreds of AI engineering teams to build and deploy generative-powered applications has surfaced a common set of challenges:

- Small changes can cause performance regressions: Even slight modifications to prompts or the underlying data can cause unanticipated degradation, and these regressions are hard to predict or locate.

- Identifying edge cases, underrepresented scenarios, or high-impact failure modes requires sophisticated data mining techniques to extract useful subsets of data for testing and development.

- Poor LLM responses can significantly affect a business: A single factually incorrect or inappropriate response can lead to legal problems, loss of trust, or financial liability.

Engineering teams can address these challenges head-on with Arize’s AI observability and evaluation platform, laying the groundwork for online production observability while the app is still in development. Let’s take a closer look at the specific applications and integration strategies for Arize and Vertex AI, and at how an enterprise AI engineering team can use the two products together to build better AI.

Use LLM tracing in development to increase visibility

Arize’s LLM tracing features make it easier to design and troubleshoot applications by giving visibility into every call in an LLM-powered system. This is particularly important for systems built on orchestration or agentic frameworks, which can hide a large number of distributed system calls that are nearly impossible to debug without programmatic tracing.

With LLM tracing, teams can fully understand how the Vertex AI API serving Gemini 1.5 Pro handles input data across every application layer: query, retriever, embedding, LLM call, synthesis, and so on. Traces are available from the session level down to a specific span, such as the retrieval of a single document, so AI engineers can identify the source of an issue and see how it propagates through the system’s layers.

LLM tracing also exposes basic telemetry data, such as token usage and latency in system stages and Vertex AI API calls. This makes it possible to locate inefficiencies and bottlenecks for further application performance optimization. Instrumenting an app with Arize tracing takes only a few lines of code; traces are collected automatically from more than a dozen frameworks, including OpenAI, DSPy, LlamaIndex, and LangChain, or they can be configured manually using the OpenTelemetry Trace API.
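To make the idea of nested spans concrete, here is a minimal sketch that uses only the Python standard library. It is not the Arize or OpenTelemetry API: the `Span` and `Tracer` classes and the attribute names (`session_id`, `documents_returned`, `token_count`) are hypothetical stand-ins that only illustrate how each layer of a request can record its parent, its latency, and its own metadata.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """One step of a request (hypothetical stand-in for a real tracing span)."""
    name: str
    parent: Optional[str]
    attributes: dict = field(default_factory=dict)
    latency_s: float = 0.0


class Tracer:
    """Collects finished spans in memory; a real tracer would export them."""

    def __init__(self) -> None:
        self.spans = []   # finished spans, in completion order
        self._stack = []  # spans that are currently open

    @contextmanager
    def span(self, name: str, **attributes):
        parent = self._stack[-1].name if self._stack else None
        current = Span(name=name, parent=parent, attributes=dict(attributes))
        self._stack.append(current)
        start = time.perf_counter()
        try:
            yield current
        finally:
            current.latency_s = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(current)


tracer = Tracer()

with tracer.span("query", session_id="demo-session"):
    with tracer.span("retriever") as s:
        s.attributes["documents_returned"] = 3
    with tracer.span("llm_call") as s:
        # In a real app the token count would come from the model response.
        s.attributes["token_count"] = 128

for s in tracer.spans:
    print(f"{s.name:<10} parent={s.parent} latency={s.latency_s:.6f}s {s.attributes}")
```

Child spans finish before their parent, so the `query` span is recorded last and its latency covers both the retriever and the LLM call. That nesting is what lets an engineer drill from a whole session down to a single slow step.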

Replay and fix Vertex AI problems in the prompt + data playground

Troubleshooting issues and doing prompt engineering with your own application data can greatly improve the outputs of LLM-powered apps. Arize’s prompt + data playground gives developers an interactive environment for optimizing prompts used with the Vertex AI API for Gemini, using data from app development.

The playground can import trace data so developers can investigate the effects of changing prompt templates, input variables, and model parameters. With Arize’s workflows, developers can take a prompt from an app trace of interest and replay it directly in the platform. This is a practical way to iterate quickly and test different prompt configurations as new use cases are rolled out, or as the Vertex AI API serving Gemini 1.5 Pro encounters them after apps go live.
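The replay idea can be sketched in a few lines of plain Python. This is an illustration only, not Arize’s playground API: the `recorded_call` structure and the `replay` helper are hypothetical, and the sketch stops at building the new request rather than sending it to a model.

```python
from string import Template

# A recorded call, as it might be pulled from a trace (hypothetical fields).
recorded_call = {
    "template": (
        "Answer the question using the context.\n"
        "Context: $context\n"
        "Question: $question"
    ),
    "variables": {
        "context": "Returns are accepted within 30 days.",
        "question": "Can I return my order?",
    },
    "parameters": {"temperature": 0.7},
}


def replay(call, template=None, **parameter_overrides):
    """Rebuild a request from a recorded call, optionally swapping the
    prompt template or overriding model parameters."""
    prompt = Template(template or call["template"]).substitute(call["variables"])
    parameters = {**call["parameters"], **parameter_overrides}
    return {"prompt": prompt, "parameters": parameters}


# Same input variables, new template, lower temperature.
variant = replay(
    recorded_call,
    template="Q: $question\nContext: $context",
    temperature=0.0,
)
print(variant["prompt"])
print(variant["parameters"])
```

Keeping the recorded variables fixed while changing one thing at a time (the template or a parameter) makes it easier to attribute a change in output to a specific edit.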

Validate performance with online LLM evaluation

Once tracing is in place, Arize helps developers validate performance with a systematic approach to LLM evaluation. The Arize evaluation library is a collection of pre-tested evaluation frameworks for rating the quality of LLM outputs on specific tasks, including hallucination, relevance, Q&A on retrieved data, code generation, user frustration, summarization, and many more.

In a process known as online LLM as a judge, Google customers can automate and scale evaluation by using the Vertex AI API serving Gemini models. Developers select the Vertex AI API serving Gemini as the platform’s evaluator and define the evaluation criteria in a prompt template in Arize. While the LLM application is running, the model scores, or evaluates, the system’s outputs against those criteria.

The Vertex AI API serving Gemini can also be used to explain the evaluations it produces. It is often hard to understand why an LLM responds in a particular way; explanations expose the reasoning and can further improve the accuracy of subsequent evaluations.
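The LLM-as-a-judge pattern described above can be sketched as follows. Everything here is an assumption made for illustration: the wording of `EVAL_TEMPLATE`, the `judge` helper, and the label set are hypothetical, and `fake_model` is a stub standing in for a Gemini call through the Vertex AI API so the example runs offline.

```python
from typing import Callable

# Hypothetical evaluation template; real templates would be tuned and tested.
EVAL_TEMPLATE = (
    "You are evaluating whether an answer is supported by the reference text.\n"
    "Reference: {reference}\n"
    "Answer: {answer}\n"
    'Respond with one word, "factual" or "hallucinated", '
    "followed by a short explanation."
)

VALID_LABELS = {"factual", "hallucinated"}


def judge(reference: str, answer: str, call_model: Callable[[str], str]) -> dict:
    """Ask an evaluator model to label an answer and explain its verdict."""
    prompt = EVAL_TEMPLATE.format(reference=reference, answer=answer)
    raw = call_model(prompt).strip()
    # The first word is treated as the label, the rest as the explanation.
    label, _, explanation = raw.partition(" ")
    label = label.strip('.,:"').lower()
    if label not in VALID_LABELS:
        label = "unparseable"
    return {"label": label, "explanation": explanation.strip()}


def fake_model(prompt: str) -> str:
    """Stub evaluator so the sketch runs without network access."""
    return "hallucinated: the reference does not mention free shipping."


result = judge(
    reference="Returns are accepted within 30 days.",
    answer="Shipping is always free.",
    call_model=fake_model,
)
print(result)
```

Constraining the evaluator to a small set of labels keeps scores easy to aggregate, while the free-text explanation preserves the reasoning for later review. Outputs that do not match a known label are kept as `unparseable` rather than guessed at.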

Using evaluations during active development is highly beneficial because it gives teams an early performance benchmark on which to base later iterations and fine-tuning.

Assemble dynamic datasets for testing

Developers can use Arize’s dynamic dataset curation feature to collect examples of interest, such as high-quality evaluations or edge cases where the LLM performs poorly, and then use them to run experiments and track improvements to their prompts, LLM, or other parts of the application.

By combining offline and online data streams with Vertex AI Vector Search, developers can use AI to find data points similar to the ones of interest and curate them into a dataset that evolves as the application runs. As traces are collected to continuously validate performance, developers can use Arize to automate online tasks that identify examples of interest. Additional examples can be added manually or through annotation and tagging driven by the Vertex AI API for Gemini.
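The similarity step can be illustrated with a brute-force sketch in plain Python. It does not call Vertex AI Vector Search, which would handle this at scale; the toy three-dimensional embeddings, the `find_similar` helper, and the 0.9 threshold are all assumptions chosen for readability.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_similar(seed, candidates, threshold=0.9):
    """Return candidate texts whose embedding is close to the seed,
    most similar first."""
    scored = [(cosine_similarity(seed, emb), text) for text, emb in candidates.items()]
    return [text for score, text in sorted(scored, reverse=True) if score >= threshold]


# Toy embeddings; a real system would get these from an embedding model.
trace_embeddings = {
    "Where is my refund?": [0.9, 0.1, 0.0],
    "I still have not received my money back": [0.8, 0.2, 0.1],
    "What are your opening hours?": [0.0, 0.1, 0.9],
}

# Embedding of an example a developer flagged as interesting.
seed_embedding = [1.0, 0.0, 0.0]

curated = find_similar(seed_embedding, trace_embeddings, threshold=0.9)
print(curated)
```

Running the same search as new traces arrive is one way a dataset can keep growing around a flagged example without manual searching.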

Once a dataset is established, it can be used for experimentation. Arize gives developers workflows to test new versions of the Vertex AI API serving Gemini against specific use cases, or to A/B test changes to prompt templates and prompt variables. Finding the best configuration to balance model performance and efficiency requires systematic experimentation, especially in production settings where response times are critical.
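A prompt-template A/B test over a fixed dataset might look like the sketch below. The dataset rows, the two templates, the exact-match scorer, and the `stub_model` function are all hypothetical; the stub replaces a real model call so the comparison is deterministic.

```python
from statistics import mean

# A small curated dataset (hypothetical examples with expected answers).
eval_rows = [
    {"question": "2 + 2", "expected": "4"},
    {"question": "3 * 3", "expected": "9"},
    {"question": "10 - 7", "expected": "3"},
]

# Two prompt template variants to compare.
templates = {
    "A": "Compute: {question}",
    "B": "Compute and reply with the number only: {question}",
}

_ANSWERS = {"2 + 2": "4", "3 * 3": "9", "10 - 7": "3"}


def stub_model(prompt: str) -> str:
    """Stub model: answers tersely only when asked for the number only."""
    question = prompt.rsplit(": ", 1)[1]
    value = _ANSWERS[question]
    return value if "number only" in prompt else f"The answer is {value}."


def run_experiment(template, rows, call_model):
    """Score one template on every row with an exact-match evaluator."""
    scores = [
        1.0 if call_model(template.format(question=row["question"])) == row["expected"] else 0.0
        for row in rows
    ]
    return mean(scores)


results = {name: run_experiment(t, eval_rows, stub_model) for name, t in templates.items()}
best = max(results, key=results.get)
print(results, "best:", best)
```

Because both variants run against the same rows with the same scorer, any difference in the scores can be attributed to the template change. In practice the scorer could be an LLM evaluation, and latency could be recorded alongside accuracy.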

Protect your business with Arize and the Vertex AI API serving Gemini

Arize and Google AI work together to protect your AI from negative effects on your customers and business. LLM guardrails are essential for real-time protection against malicious attempts such as jailbreaks, as well as for context management, compliance, and user experience.

Arize guardrails can be configured with custom datasets and a fine-tuned Vertex AI Gemini model for the following detections:

- Embeddings guards: These use your examples of “bad” messages to protect against similar inputs by checking the cosine distance between embeddings. The advantage of this approach is that it can be iterated on continuously as breaks occur, so the guard becomes more sophisticated over time.

- Few-shot LLM prompt: The model uses your few-shot examples to decide whether an input is a “pass” or a “fail.” This is particularly useful when defining a fully customized guardrail.

- LLM evaluations: Use the Vertex AI API serving Gemini to look for triggers such as PII data, user frustration, hallucinations, and so on. This approach is based on LLM evaluations at scale.

If one of these detections is flagged in Arize, a corrective action is taken immediately to prevent your application from producing an unwanted response. Developers can set the remedy to block the response, retry, or fall back to a default answer such as “I cannot answer your query.”
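The embeddings guard and its default-response remedy can be sketched in plain Python as follows. This is not Arize’s guardrail API: the two-dimensional `BAD_EXAMPLES`, the `guard` function, and the 0.2 distance threshold are assumptions made for illustration.

```python
import math

# Embeddings of known "bad" messages (toy values; real ones come from a model).
BAD_EXAMPLES = [[1.0, 0.0], [0.9, 0.3]]

DEFAULT_RESPONSE = "I cannot answer your query."


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0


def guard(input_embedding, generate, threshold=0.2):
    """Return the default response when the input is too close to a known
    bad example; otherwise call the application as usual."""
    closest = min(cosine_distance(input_embedding, bad) for bad in BAD_EXAMPLES)
    if closest < threshold:
        return DEFAULT_RESPONSE
    return generate()


# An input that resembles a known bad example is stopped before generation.
print(guard([0.95, 0.1], generate=lambda: "normal answer"))
# An unrelated input passes through to the application.
print(guard([0.0, 1.0], generate=lambda: "normal answer"))
```

Appending the embedding of a newly discovered bad message to `BAD_EXAMPLES` is all it takes to extend the guard. A retry remedy could be implemented by calling `generate` again with a modified prompt instead of returning the default.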

Your personal Arize AI Copilot, powered by the Vertex AI API serving Gemini 1.5 Pro

To further speed up AI observability and evaluation, developers can use Arize AI Copilot, which is powered by the Vertex AI API serving Gemini. This in-platform assistant streamlines AI teams’ workflows by automating tasks and analysis, reducing team members’ day-to-day operational effort.

Arize Copilot allows engineers to:

- Start AI Search using the Vertex AI API for Gemini: Look for particular examples, such as “angry responses” or “frustrated user inquiries,” to include in a dataset.

- Take action quickly and run analyses: Set up dashboard monitors or ask questions about your models and data.

- Automate the process of creating and defining LLM evaluations.

- Prompt engineering: Ask the Vertex AI API for Gemini to generate prompt playground iterations for you.

Using Arize and Vertex AI to accelerate AI innovation

As businesses push the limits of AI, the integration of Arize AI with the Vertex AI API serving Gemini is a compelling solution for optimizing and protecting generative applications. By combining Google’s sophisticated LLM capabilities with Arize’s observability and evaluation platform, AI teams can speed up development, improve application performance, and help ensure reliability from development to deployment.

Arize AI Copilot’s automated workflows, real-time guardrails, and dynamic dataset curation are just a few examples of how these technologies complement one another to drive innovation and produce meaningful business results. As you continue to design and build AI applications, Arize and the Vertex AI API serving Gemini models provide the infrastructure needed to handle the challenges of modern AI engineering, helping your projects stay efficient, robust, and impactful.

Do you want to further streamline your AI observability? Arize is available on the Google Cloud Marketplace! With this integration, deploying Arize and monitoring the performance of your production models is simpler than ever.