Understanding the Linux Kernel

Running software on a virtual platform requires booting the target system, and how the software is built and packaged affects how that boot works. The Intel Simics Quick-Start Platform has gained a new way to load a Linux kernel binary. Previously, each kernel variant had to be installed into a disk image before the virtual platform booted, which was inconvenient.

This post looks at how a virtual platform system boots Linux and why the new flow helps. The more realistic a virtual platform is, the harder it becomes to “cheat” and offer user conveniences beyond what the hardware provides. The new Quick-Start Platform setup is convenient without changing the virtual platform or the BIOS/UEFI.

Linux? What is it?

A “Linux system” needs three things:

- Linux kernel: the operating system core and any modules compiled into it.

- Root file system: the file system the kernel uses once it is running. Linux needs a file system.

- Kernel command-line parameters: the command line configures the kernel at startup and overrides kernel defaults. It usually specifies the hardware device that holds the kernel’s root file system.
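To make the third item concrete, here is a minimal Python sketch that splits a kernel command line into parameters and reads out the root device. The example string and the device name `/dev/vda1` are assumptions for illustration. Real kernel parsing also handles quoting and repeated parameters, which this sketch ignores.

```python
from typing import Dict, Union


def parse_cmdline(cmdline: str) -> Dict[str, Union[str, bool]]:
    """Split a kernel command line into a dict; bare flags map to True."""
    params: Dict[str, Union[str, bool]] = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        # "root=/dev/vda1" becomes {"root": "/dev/vda1"}, "ro" becomes {"ro": True}
        params[key] = value if sep else True
    return params


# Hypothetical command line; the values are illustrative only.
example = "root=/dev/vda1 console=ttyS0,115200 ro"
params = parse_cmdline(example)
print("root device:", params["root"])
```

`root=`, `console=`, and `ro` are standard kernel parameters. Changing `root=` is how the same kernel is pointed at a different root file system device.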

On real hardware, a bootloader initializes the hardware and starts the kernel with the command-line parameters. Virtual platforms can differ from this process. This blog post covers five ways to start Linux on a virtual platform, from simple to advanced.

Simple Linux Kernel Boot

Simple virtual platforms that do not correspond to any real platform are common. They make it possible to run code for a specific instruction set architecture without buying exotic hardware or modeling a real hardware platform. For example, the Intel Simics Simulator RISC-V simple virtual platform has multiple processor cores and can run Linux.

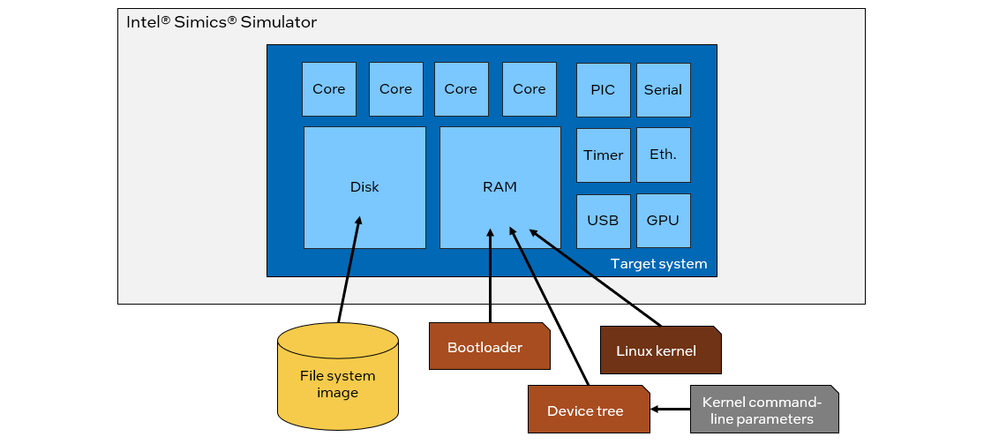

The RISC-V target boots from binary files built with Buildroot. Four files are required: a bootloader, a Linux kernel, a root file system image, and a binary device tree blob. Software development usually changes the Linux kernel and the file system, while the bootloader and device tree are usually reused. This set of files is typical for such platforms.

The simulator startup script loads the bootloader, Linux kernel, and device tree directly into target RAM. The startup script determines the load addresses, and software updates may require changing those addresses.
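The need to revisit load addresses when an image grows can be sketched in Python. This is not the Simics startup script. The RAM base, image sizes, and 4 KiB alignment are hypothetical values, and a real bootloader or kernel may impose stricter placement rules than this sketch models.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Image:
    name: str
    size: int  # size in bytes


def align_up(value: int, alignment: int) -> int:
    """Round value up to the next multiple of alignment."""
    return (value + alignment - 1) // alignment * alignment


def plan_layout(images: List[Image], ram_base: int, ram_size: int,
                alignment: int = 0x1000) -> Dict[str, int]:
    """Place images back to back in RAM, each at an aligned address."""
    layout: Dict[str, int] = {}
    cursor = ram_base
    for image in images:
        cursor = align_up(cursor, alignment)
        end = cursor + image.size
        if end > ram_base + ram_size:
            raise ValueError(f"{image.name} does not fit in RAM")
        layout[image.name] = cursor
        cursor = end
    return layout


# Hypothetical sizes and RAM map, for illustration only.
images = [
    Image("bootloader", 0x2_0000),
    Image("kernel", 0x150_1234),
    Image("device-tree", 0x3000),
]
layout = plan_layout(images, ram_base=0x8000_0000, ram_size=0x1000_0000)
for name, address in layout.items():
    print(f"{name:12s} 0x{address:08x}")
```

If the kernel image grows, every address after it moves, which is why a script with hard-coded addresses has to be updated along with the software.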

The startup script sets register values that give the RISC-V bootloader the addresses of the device tree and the kernel. The kernel command-line parameters are stored in the device tree. To change kernel parameters or move the root file system, developers must change the device tree.
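In a device tree, the kernel command line conventionally lives in the `bootargs` property of the `/chosen` node. The Python sketch below rewrites that property in device tree source text. The fragment and device names are assumptions for illustration, and the result would still have to be recompiled into a binary blob with a device tree compiler.

```python
import re

# Hypothetical device tree source fragment; a real one has many more nodes.
DTS_SOURCE = """/dts-v1/;
/ {
    chosen {
        bootargs = "root=/dev/vda console=ttyS0";
    };
};
"""


def set_bootargs(dts_text: str, new_args: str) -> str:
    """Replace the value of the single bootargs property in DTS text."""
    pattern = re.compile(r'(bootargs\s*=\s*")[^"]*(";)')
    # A function replacement avoids backslash surprises in new_args.
    updated, count = pattern.subn(
        lambda m: m.group(1) + new_args + m.group(2), dts_text)
    if count != 1:
        raise ValueError(f"expected exactly one bootargs property, found {count}")
    return updated


updated = set_bootargs(DTS_SOURCE, "root=/dev/vdb console=ttyS0")
print(updated)
```

Even a one-word change to the command line means editing and rebuilding the device tree, which is the inconvenience the paragraph above describes.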

The root file system is provided as an image on the primary disk. Disks support arbitrarily large file systems and make it simple to change what the system starts.

Loading binaries directly into target memory is a simulator “cheat,” but once the bootloader starts, the handover to the kernel works as it does on real hardware.

The flow has variations. If the target system uses U-Boot, the bootloader’s interactive command-line interface can be used to give the kernel the device tree address.

Unfortunately, this boot flow cannot reboot Linux. The system cannot be reset because the virtual platform startup flow differs from the hardware startup flow. A reset button on the virtual platform cannot be used with this flow.

Direct Linux Kernel Boot

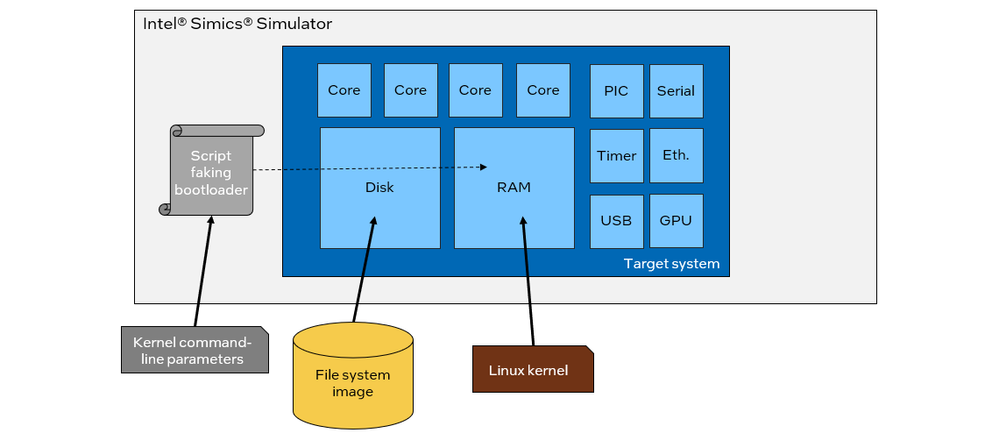

Skipping the bootloader simplifies system startup. A simulator setup script must load the kernel and launch it. The setup must fake the bootloader-to-Linux interface, which means providing all the descriptors in RAM and setting the processor state (stack pointer, memory management units, etc.) to what the operating system expects.

Instead of building and loading a bootloader, a simulator script does the bootloader’s job. Changes to the kernel interface require script updates. A “wide” bootloader-OS interface such as ACPI can make this script complicated. The method also fails for System Management Mode and other operations that depend on the bootloader.

Sometimes the fake bootloader passes kernel command-line parameters.

Experience with the Intel Simics simulator suggests that using a real bootloader is easier.

Booting from Disk

Virtual platforms should boot like real hardware. In this scenario, the real software stack comes pre-packaged and is easy to use. The drawback is that software development on the virtual platform is less convenient.

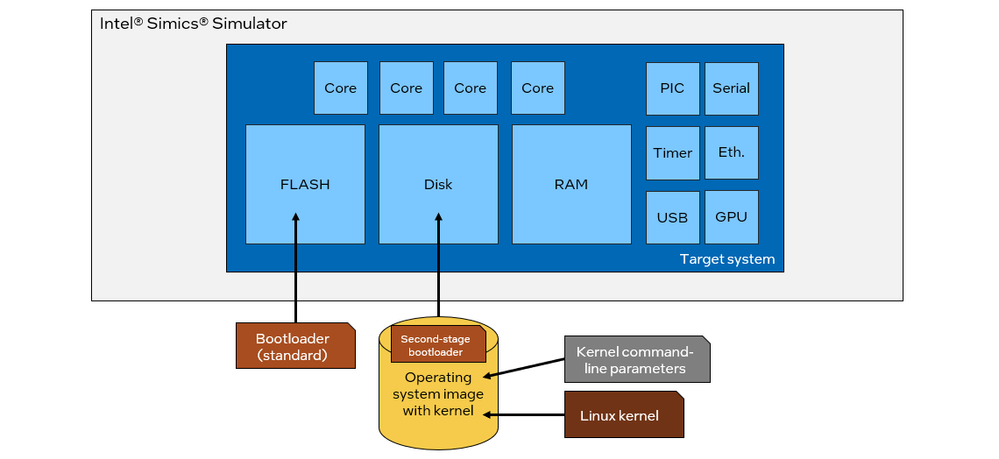

On hardware, the bootloader is stored in FLASH or EEPROM. By providing code at the system “reset vector,” the bootloader runs when the system is reset and starts an operating system from a “disk” (attached over NVMe, SATA, USB, SD card, or other interfaces). On Intel Architecture systems, this workflow starts with UEFI firmware. System designers can also choose coreboot or Slim Bootloader.

An additional boot stage, such as GRUB for Linux or Windows Boot Manager for Windows, may be needed for the bootloader-to-OS handover. Because this is the normal software flow, no simulator setup or scripting is required.

Note that this boot flow requires both a bootloader binary and a disk image. Without a bootloader binary, the platform has no code to execute at reset.

The disk image includes the Linux kernel and its command-line parameters. To change the command-line parameters, start the system, change the saved configuration, and save the updated disk image for the next boot, just like on real hardware.

With this boot method, the virtual platform setup script should not need to mimic user input to control the target system. As on hardware, the bootloader chooses the disk or device to boot from when the modeled platform is reset. How boot devices are selected depends on the target and the bootloader. A typical behavior, as on a PC, is to find and boot the only bootable local device.
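The selection behavior described above can be sketched as a walk over a boot order. This is a simplified Python model, not the logic of any specific firmware, and the device names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BootDevice:
    name: str       # hypothetical label such as "nvme0"
    bootable: bool  # True if the device holds something the firmware can start


def select_boot_device(boot_order: List[BootDevice]) -> Optional[BootDevice]:
    """Return the first bootable device in boot order, or None if there is none."""
    for device in boot_order:
        if device.bootable:
            return device
    return None


# With a single bootable device, the firmware needs no user input.
devices = [
    BootDevice("usb0", bootable=False),
    BootDevice("nvme0", bootable=True),
    BootDevice("sata0", bootable=False),
]
chosen = select_boot_device(devices)
print(chosen.name if chosen else "no bootable device")
```

When more than one device is bootable, the first one in the order wins, so booting from a different device means changing the order or intervening during boot.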

For a single boot, interacting with the virtual target as with a real machine is easiest. Scripting may be needed to boot from another device.

Because they run the real software stack from the real platform, such virtual platforms are ideal for pre-silicon software development and maintenance. The more a virtual platform “cheats,” the less its test results prove.

Linux kernel developers who change the kernel often may dislike this flow. Every newly compiled kernel binary must be integrated into a disk image before testing, which takes time. The new Linux kernel boot flow combines a real virtual platform running a real software stack with easy Linux kernel replacement.

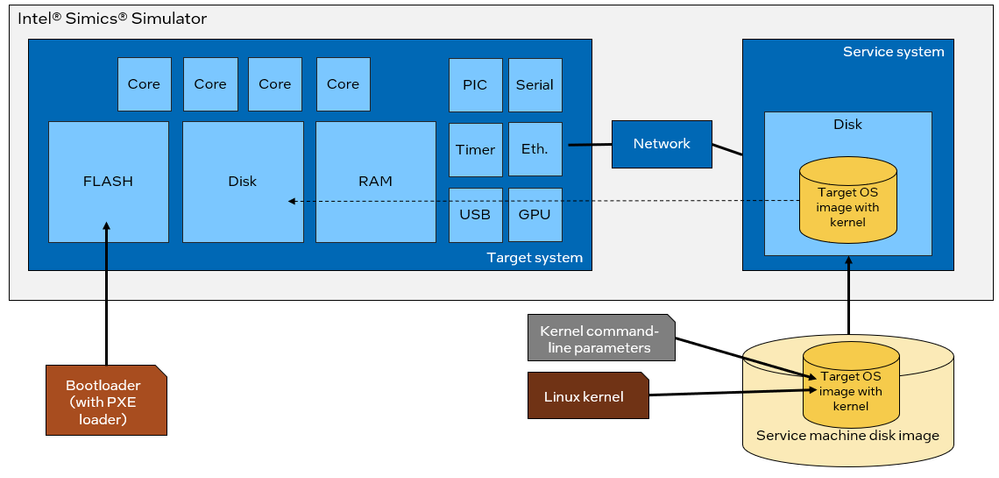

Network Boot

Real systems do not always boot from disks. Systems in data centers and embedded racks often boot over the network. This simplifies deployments, since software upgrades and patches do not require system-by-system updates. Systems boot from disk images on a central server.

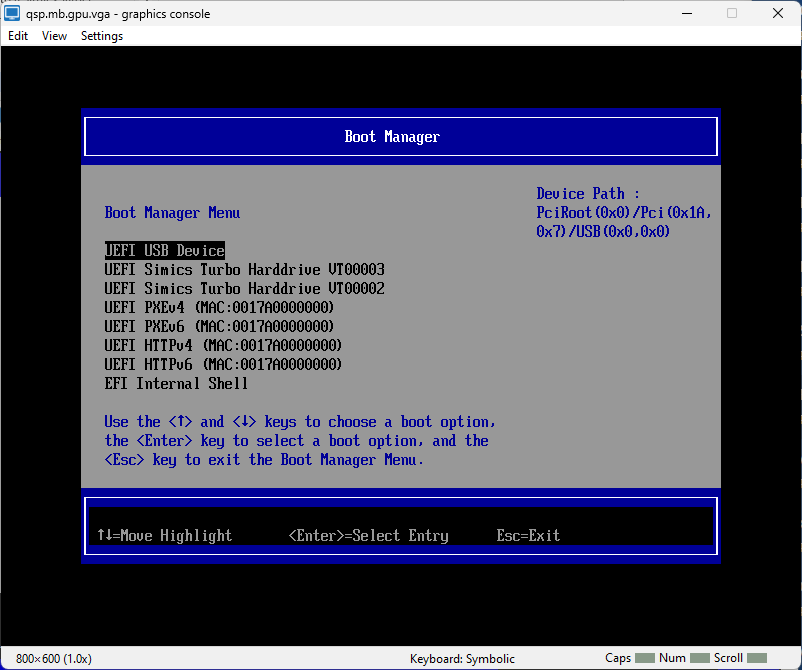

Intel PXE is a standard for network-based booting. Instead of booting locally, PXE acquires the boot image from a network server. A network boot can proceed in stages, gradually loading more capable binaries and disk images.

As with booting from disk, the firmware is loaded from FLASH on the virtual platform.

Setting up a network for a virtual platform is harder. One option is to connect the virtual platform to the lab network of the host machine. On IT-managed networks, this requires a TAP solution to connect the virtual platform to the lab network. PXE booting relies on DHCP, which does not work well across NAT.

The most reliable and easiest solution is to connect one or more service machines to the simulated network. The servers run on a service system whose files include the boot images, providing an alternative to a file system on a local disk.

As with booting from disk, the target system needs a bootloader. It also needs a network adapter.

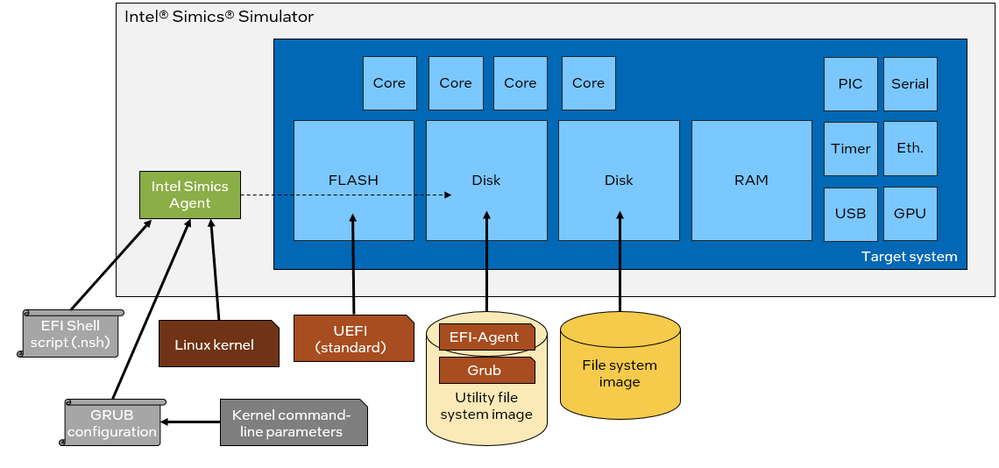

New Linux boot flow

With this background, the new Linux kernel boot flow for Intel Simics simulator UEFI targets can be described. Like a direct kernel boot, this flow starts with a Linux kernel binary, command-line parameters, and a root file system image. The target setup, however, is the standard one: a disk boot using a UEFI bootloader.

The target has two disks. The first is a utility disk with a prebuilt file system that holds the Intel Simics Agent target binary and a GRUB binary. The second disk holds the root file system, as in a disk boot. The utility disk is configured dynamically so that Linux can boot from it. This avoids the problem of placing the kernel in RAM.

The Intel Simics Agent makes this possible. The agent system moves files quickly and reliably from the host into the target system software stack, driven either by the simulator or by target software. The agent uses “magic instructions” to communicate directly between the target software, the simulator, and the host. Networking could also transfer the files, but it would be more fragile and slower.

An Intel Simics simulator startup script automates the boot. The script uses the kernel command-line parameters and the kernel binary name to create a temporary GRUB configuration file. The agent loads an EFI shell script from the host into the target’s UEFI, and the EFI shell runs it.
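Generating a GRUB configuration from a kernel name and command line can be sketched in a few lines of Python. This is not the actual Simics startup script. The function name, kernel path, menu title, and parameter values are hypothetical, and the configuration the real script produces may look different.

```python
import tempfile
from pathlib import Path


def make_grub_config(kernel_path: str, cmdline: str,
                     title: str = "Custom Linux kernel") -> str:
    """Build a one-entry GRUB configuration that boots the given kernel."""
    lines = [
        "set timeout=0",   # boot immediately, without showing a menu
        "set default=0",   # pick the first (and only) menu entry
        f'menuentry "{title}" {{',
        f"    linux {kernel_path} {cmdline}",
        "}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical kernel name and parameters, for illustration only.
config = make_grub_config("/bzImage", "root=/dev/vda1 console=ttyS0")

# Write the configuration to a temporary file, as a startup script might
# do before handing the file to a transfer mechanism.
with tempfile.TemporaryDirectory() as tmp:
    cfg_path = Path(tmp) / "grub.cfg"
    cfg_path.write_text(config)
    written = cfg_path.read_text()

print(written)
```

Because the kernel name and command line are plain function arguments, changing either one only requires regenerating this small file, not rebuilding a disk image.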

The EFI shell script calls the agent system to copy the kernel image and the GRUB configuration file from the host to the utility disk. As the final step, it boots the Linux kernel from the utility disk using GRUB.

This flow speeds up Linux kernel testing and development on virtual platforms. A recompiled kernel can be used with an unmodified Intel Simics virtual platform model (disks can be added because the interfaces are hot-pluggable). No disk image containing the kernel needs to be built. Kernel command-line parameters are easy to change.

The way the root file system is inserted into the platform is flexible. A PCIe-attached virtio block device is the default, but the disk image can also be placed on an NVMe or SATA disk. A virtio file system (virtiofs) PCIe paravirtual device can also provide the root file system. In that case, the file system content is a directory on the host, not an image. The virtiofs daemon needs privileges on the host, which may cause issues. The kernel command line tells the kernel where to find the root file system.

Because a disk image contains all the files UEFI needs to boot, the platform can reboot. On reboot, UEFI locates the bootable utility disk with GRUB, its configuration, and the custom Linux kernel.

All dependencies on the target OS are contained in the EFI shell script. The script can be rewritten to boot other “separable” kernels that live outside the root file system.

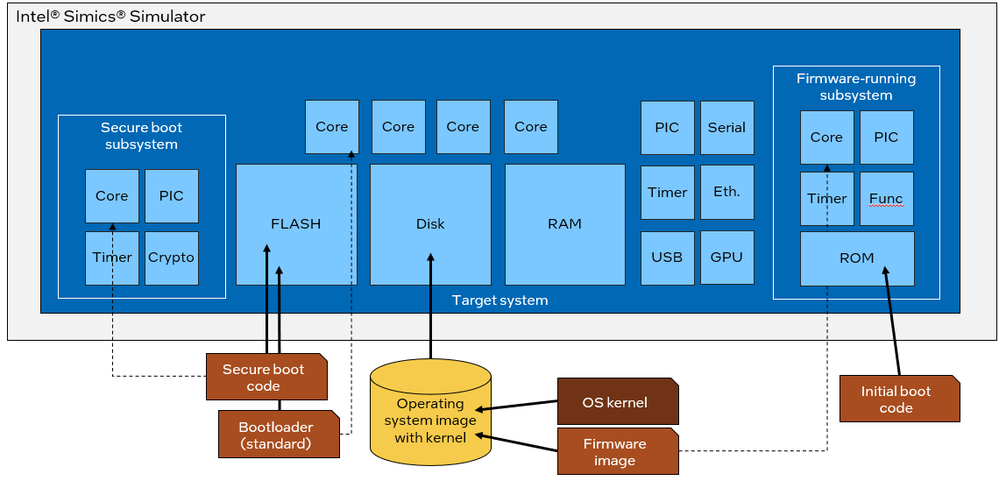

Just Beginning

Although it sounds simple, booting a virtual platform can be done in many intricate ways. The goal is always to create a model that simulates the hardware well enough to test interesting scenarios while remaining convenient for software developers. The right choice depends on the use case and the user.

Some boot flows and models are more complicated than those in this blog post. Few systems start booting with the bootloader on the main processor core. The visible bootloader takes over only after hidden subsystems have completed basic tasks.

Security subsystems with their own processors can start the system early. The boot code for these processors can be stored in the same FLASH as the general bootloader or in local memory.

The main operating system image can also boot programmable subsystems and their firmware. In that case, the subsystem firmware is stored on the OS disk. In most such subsystems, a small ROM holds boot code that works with the operating system driver to load the production firmware.