This article covers what large language models (LLMs) are, how they work, their use cases, advantages, disadvantages, and types.

What are large language models (LLMs)?

A large language model (LLM) is a type of AI program that can recognise and generate text. LLMs are trained on very large data sets, hence the term “large.” They are built on machine learning, specifically on a kind of neural network called a transformer model.

Put simply, an LLM is a computer program that has been given enough examples to recognise and interpret complex data, such as human language. Many LLMs are trained on text gathered from the Internet, amounting to thousands or millions of megabytes. Because the quality of the samples affects how well an LLM learns natural language, its developers may choose a more carefully curated data set.

LLMs use deep learning, a subset of machine learning, to understand how characters, words, and sentences relate to one another. Deep learning involves the probabilistic analysis of unstructured data, which eventually lets the model recognise distinctions between pieces of content without human intervention.

LLMs are then further trained through tuning: they are fine-tuned or prompt-tuned for the specific task the programmer wants them to perform, such as translating text between languages or interpreting questions and generating answers.

How do large language models work?

LLMs take a complex approach that involves several components.

At the foundational layer, an LLM must be trained on a large volume of data, commonly referred to as a corpus and sometimes measured in petabytes. Training has several stages and typically begins with unsupervised learning, in which the model is trained on unlabelled, unstructured data. The advantage of training on unlabelled data is that far more of it is usually available. At this stage, the model starts to infer relationships between different words and concepts.
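As a rough illustration of how relationships can be inferred from unlabelled text, the sketch below counts which word follows which in a tiny made-up corpus. The `bigram_counts` helper and the three sentences are assumptions for illustration only; a real LLM learns far richer statistics with a neural network rather than a lookup table.

```python
from collections import Counter, defaultdict

def bigram_counts(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

# Hypothetical three-sentence corpus; no sentence carries a label.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

counts = bigram_counts(corpus)
print(counts["the"]["cat"])           # how often "cat" follows "the"
print(counts["sat"].most_common(1))   # most frequent word after "sat"
```

Even with no labels, the counts already show that "sat" tends to be followed by "on", which is the kind of connection the paragraph above describes.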

For some LLMs, the next step is training and fine-tuning with a form of self-supervised learning. Here, some labelling of the data has taken place, which helps the model identify different concepts more accurately.
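In self-supervised setups, the labels are commonly derived from the text itself: the model is shown the preceding words and asked to predict the next one. The sketch below shows how such training pairs might be built. The `next_token_pairs` helper and its `context_size` parameter are hypothetical names, and splitting on whitespace is a simplification of real tokenisation.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) training pairs.

    The target is simply the next word in the text, so the label
    comes from the data itself rather than from a human annotator.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

pairs = next_token_pairs("large language models generate text")
for context, target in pairs:
    print(context, "->", target)
```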

The LLM then undergoes deep learning as it passes through the transformer neural network. Using a self-attention mechanism, the transformer architecture lets the LLM understand and recognise the relationships between words and concepts. To determine a relationship, that mechanism assigns a score, commonly called a weight, to a given item, known as a token.
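The scoring step can be sketched with a simplified form of scaled dot-product self-attention. This is an illustration under stated assumptions, not a full transformer layer: the token vectors are made up, and each vector serves directly as its own query, key, and value, whereas real models learn separate projections for each.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Simplified self-attention over a list of token vectors."""
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score the query against every token, scaled by sqrt(dim).
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        row = softmax(scores)
        weights.append(row)
        # Each output is the weighted average of all token vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        )
    return weights, outputs

# Made-up 2-dimensional vectors for three tokens.
tokens = ["river", "bank", "money"]
vectors = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]

weights, outputs = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much weight one token gives to every token in the sequence, including itself; the weights in a row sum to 1.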

Once an LLM has been trained, it provides a foundation on which the AI can be put to practical use. Prompting the LLM with a query triggers model inference, which generates a response. That response might be an answer to a question, newly generated text, a summary of a text, or a sentiment analysis report.
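Inference can be pictured as repeatedly choosing a next token until the response is complete. The sketch below uses greedy decoding over a hand-written probability table. `NEXT_TOKEN_PROBS` and `generate` are hypothetical stand-ins: a real LLM computes these probabilities with a neural network over the whole prompt and often samples rather than always taking the most likely token.

```python
# Hypothetical stand-in for a trained model: for each token, the
# probability of each possible next token.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "data": 0.3},
    "a": {"model": 0.5, "token": 0.5},
    "model": {"answers": 0.8, "<end>": 0.2},
    "data": {"<end>": 1.0},
    "token": {"<end>": 1.0},
    "answers": {"<end>": 1.0},
}

def generate(prompt_token="<start>", max_tokens=10):
    """Greedy decoding: repeatedly append the most probable next token."""
    output = []
    current = prompt_token
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[current]
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        output.append(current)
    return " ".join(output)

print(generate())  # prints "the model answers"
```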

Use Cases of Large Language Models

LLMs have grown in popularity because they apply to a wide range of NLP tasks, including the following:

Text generation: A primary use case is generating text on any topic the LLM has been trained on.

Translation: LLMs trained on multiple languages can commonly translate from one language to another.

Content summarisation: LLMs can summarise blocks or entire pages of text.

Content rewriting: An LLM can also rewrite a passage of text.

Classification and categorisation: An LLM can classify and categorise content.

Sentiment analysis: Most LLMs can be used for sentiment analysis, which helps users understand the intent behind a piece of content or a particular response.

Conversational AI and chatbots: LLMs can hold a conversation with a user in a way that is usually more natural than earlier generations of AI technology.

One of the most common applications of conversational AI is the chatbot, which can take many forms and lets a user interact through a query-and-response model. ChatGPT, developed by OpenAI, is the most widely used LLM-based AI chatbot. At the time of writing, ChatGPT is based on the GPT-3.5 model, although paying subscribers can use the newer GPT-4 LLM.

Advantages of LLMs

LLMs offer organisations and users a number of advantages, including:

Extensibility and adaptability

LLMs can serve as a foundation for customised use cases. Additional training on top of an LLM can produce a model finely tailored to a particular organisation’s needs.

Flexibility

A single LLM can be used for many different tasks and deployments across organisations, users, and applications.

Performance

Current LLMs are generally high-performing and can produce quick, low-latency replies.

Accuracy

As the number of parameters and the amount of training data increase, the transformer model can deliver ever-higher levels of accuracy in an LLM.

Ease of training

Many LLMs are trained on unlabelled data, which helps speed up the training process.

Efficiency

LLMs can save employees time by automating routine tasks.

Disadvantages of LLMs

Despite these advantages, LLMs also have a number of drawbacks and limitations:

Development costs

LLMs generally require large quantities of costly graphics processing unit (GPU) hardware and enormous data sets to run.

Operational costs

After the training and development phase, the cost of running an LLM can be very high for the host organisation.

Bias

Any AI trained on unlabelled data carries a risk of bias, since it is not always clear that known bias has been removed.

Ethical concerns

LLMs can raise privacy concerns and may produce harmful content.

Explainability

Explaining how an LLM arrived at a particular result is difficult, and the reasoning is not always clear to users.

Hallucination

An AI hallucination occurs when an LLM gives a response that is not grounded in its training data.

Complexity

Modern LLMs have billions of parameters and are exceptionally complicated technologies, which makes troubleshooting them especially difficult.

Glitch tokens

A trend that has emerged since 2022 is the use of glitch tokens: maliciously designed prompts that cause an LLM to malfunction.

Security risks

LLMs can be used to make phishing attacks on employees more effective.

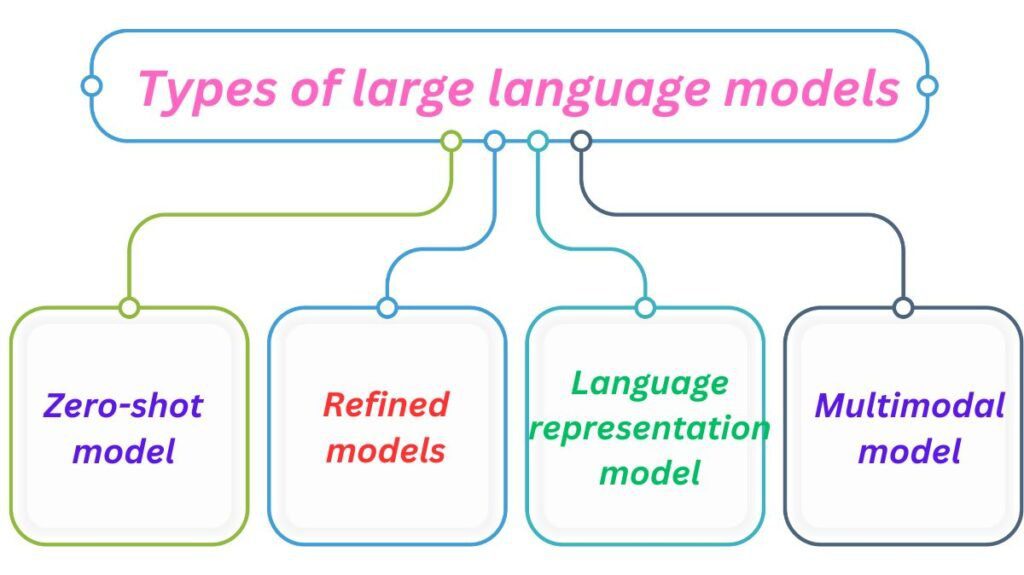

What are the different types of large language models?

The terminology used to describe the various types of large language models is still evolving. Common types include the following:

Zero-shot model

This is a large, generalised model trained on a generic corpus of data. It can give a fairly accurate result for many use cases without additional training. GPT-3 is often considered a zero-shot model.
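In practice, using a model zero-shot usually means describing the task in the prompt without supplying worked examples or doing further training. The sketch below contrasts that with a prompt that does include examples. The `build_prompt` helper and its "Text:/Label:" layout are hypothetical; the code only assembles strings and does not call any model.

```python
def build_prompt(instruction, text, examples=()):
    """Assemble a prompt; with no examples this is a zero-shot prompt."""
    lines = [instruction, ""]
    for example_text, example_label in examples:
        lines += [f"Text: {example_text}", f"Label: {example_label}", ""]
    lines += [f"Text: {text}", "Label:"]
    return "\n".join(lines)

instruction = "Classify the sentiment of the text as positive or negative."

# Zero-shot: the task description alone, with no worked examples.
zero_shot = build_prompt(instruction, "The battery lasts all day.")

# For contrast, the same task with one worked example included.
one_shot = build_prompt(
    instruction,
    "The battery lasts all day.",
    examples=[("The screen cracked in a week.", "negative")],
)

print(zero_shot)
```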

Fine-tuned or domain-specific models

Additional training on top of a zero-shot model such as GPT-3 can produce a fine-tuned, domain-specific model. One example is OpenAI Codex, a domain-specific LLM for programming based on GPT-3.

Language representation model

Google’s BERT (Bidirectional Encoder Representations from Transformers) is an example of a language representation model, which uses deep learning and transformers well suited to NLP.

Multimodal model

LLMs were originally designed for text only, but the multimodal approach lets them handle both text and images. GPT-4 is one example of this kind of model.