Big data professionals are probably already familiar with Apache Hive and the Hive Metastore, which has become the industry standard for managing metadata. Dataproc Metastore is a fully managed Apache Hive metastore (HMS) that runs on Google Cloud. It is serverless, self-healing, autoscaling, and highly available. Together, these properties help you manage your metadata and data lake, and keep that metadata interoperable across the data processing engines and tools you use.

If you are migrating from an on-premises Hadoop environment with several Hive Metastores to Dataproc Metastore on Google Cloud, you may be looking for ways to organise your Dataproc Metastore (DPMS) services efficiently. When designing a DPMS architecture, weigh three key considerations: centralisation vs. decentralisation, single-region vs. multi-region, and persistence vs. federation. These choices strongly affect how manageable, resilient, and scalable your metadata is.

This blog post examines four DPMS deployment patterns:

- A single, centralised multi-regional DPMS

- Per-domain DPMS with centralised metadata federation

- Per-domain DPMS with decentralised metadata federation

- Ephemeral metadata federation

Each pattern has its own benefits. The patterns are presented in order of increasing complexity and maturity, so you can choose the one that best fits your organisation’s DPMS requirements and usage.

Note: For the purposes of this blog post, a domain is a department, business unit, or functional area within your organisation. Each domain may have its own requirements, data processing needs, and approaches to managing metadata.

Let’s examine each of these patterns in more detail.

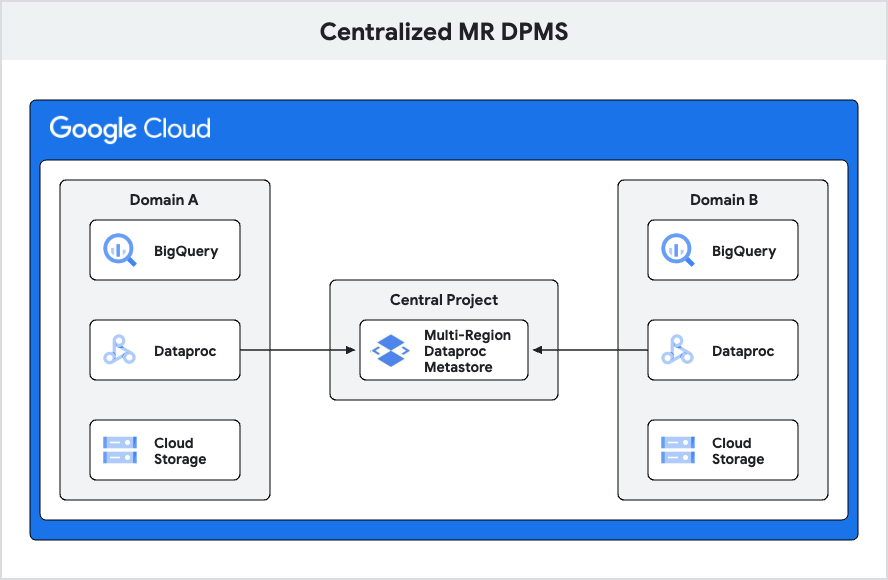

1. Centralised multi-regional Dataproc Metastore

This pattern works well for smaller use cases, where you have few domains and can consolidate all metastores into a single multi-regional (MR) Dataproc Metastore.

In this approach, the metastores from all domains are consolidated into a single multi-regional DPMS deployed in one shared project. Every domain project in the organisation can access metadata from this centralised DPMS. The main goal of this design is to provide a simple, manageable solution for organisations with a small number of domains and a relatively basic use case.

When you create a Dataproc Metastore service, you choose a location: the geographic area where the service permanently resides. The location can be a single region or a multi-region. A multi-region is a large geographic area that spans two or more geographic places and offers higher availability. A multi-regional Dataproc Metastore service spans two distinct regions within its multi-region and uses them to run your workloads. For example, the nam7 multi-region includes the us-central1 and us-east4 regions.
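
As a minimal sketch of what centralisation means in practice, the Python below builds one service resource name in a shared project and points every domain’s Dataproc cluster at it. The project IDs, service ID, and the use of `nam7` as the location ID are hypothetical placeholders. The `metastore_config.dataproc_metastore_service` field is modelled on the Dataproc cluster configuration as we understand it, so check the current API reference before relying on it.

```python
# Minimal sketch: all domain clusters share one central, multi-regional DPMS.
# Hypothetical placeholders: project IDs, service ID, and the location ID.

SHARED_PROJECT = "shared-metadata-project"  # hypothetical central project
LOCATION = "nam7"                           # assumed multi-region location ID
SERVICE_ID = "central-dpms"                 # hypothetical service ID

# Hypothetical domain projects that consume the central metastore.
DOMAIN_PROJECTS = ["sales-domain", "finance-domain", "marketing-domain"]


def metastore_service_name(project: str, location: str, service: str) -> str:
    """Build a Dataproc Metastore service resource name."""
    return f"projects/{project}/locations/{location}/services/{service}"


def cluster_metastore_config(service_name: str) -> dict:
    """Return the metastore section of a Dataproc cluster config (assumed shape)."""
    return {"metastore_config": {"dataproc_metastore_service": service_name}}


CENTRAL_SERVICE = metastore_service_name(SHARED_PROJECT, LOCATION, SERVICE_ID)

# Every domain points at the same service, so there is one metastore to manage.
cluster_configs = {
    project: cluster_metastore_config(CENTRAL_SERVICE) for project in DOMAIN_PROJECTS
}

for project, config in cluster_configs.items():
    print(project, "->", config["metastore_config"]["dataproc_metastore_service"])
```

Because every domain references the same resource name, adding a domain means adding one entry to the list rather than provisioning another metastore.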

Benefits of this layout:

- Consolidating several metastores into a single DPMS reduces the complexity of your data environment and streamlines metadata administration.

- Access control and permissions are easier to manage, because there is only one service to secure.

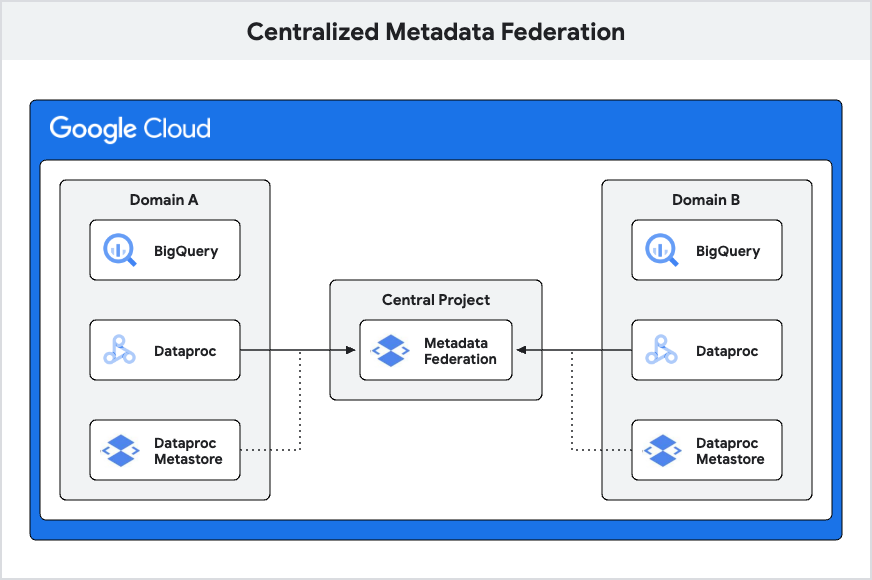

2. Per-domain DPMS with centralised metadata federation

Use this slightly more sophisticated approach when you have several domains, each with its own DPMS, and consolidating them into a single metastore is not practical. Here, a fundamental building block called metadata federation enables collaboration and metadata sharing across domains.

Metadata federation is a service that lets users access metadata from multiple sources through a single endpoint. At the time this blog post was written, supported sources included Dataproc Metastore services, BigQuery datasets, and Dataplex lakes. The federation service exposes this endpoint over gRPC. To serve a request, it checks the configured order of the source metastores to find the required metadata, which simplifies request processing. gRPC is a popular choice for building distributed systems because of its high performance.
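
To make source ordering concrete, here is a simplified, hypothetical model of how a federation endpoint might resolve a database name. It is not the service’s implementation: it assumes that each source has an integer rank, that lower ranks are searched first, and that the first source holding the database answers the request. The class and source names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Source:
    """One federated metadata source (hypothetical model)."""
    name: str
    databases: Set[str] = field(default_factory=set)


class FederationModel:
    """Toy stand-in for a federation endpoint; not the real service."""

    def __init__(self, sources_by_rank: Dict[int, Source]):
        # Assumption: a lower rank is searched earlier.
        self._ordered = [sources_by_rank[r] for r in sorted(sources_by_rank)]

    def resolve(self, database: str) -> Optional[str]:
        """Return the first source (in rank order) that holds `database`."""
        for source in self._ordered:
            if database in source.databases:
                return source.name
        return None  # no federated source knows this database


federation = FederationModel({
    1: Source("sales-dpms", {"orders", "customers"}),
    2: Source("bigquery:sales-project", {"customers", "forecasts"}),
})

print(federation.resolve("customers"))  # sales-dpms (rank 1 is searched first)
print(federation.resolve("forecasts"))  # bigquery:sales-project
print(federation.resolve("payroll"))    # None
```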

To set up federation, create a federation service and specify your metadata sources. The service then exposes all of your metadata through a single gRPC endpoint. In this design, each domain owns and operates its own Dataproc Metastores.

A central project hosts the metastore federation, which combines the BigQuery and DPMS resources from every domain. With this setup, teams can work independently, build their own data pipelines, and access their own metadata, while using the federation service to retrieve metadata and data from other domains as needed.
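
The sketch below assembles a request body for such a central federation from a list of domains, adding each domain’s DPMS and BigQuery project as ranked backends. The shape (`version` plus `backendMetastores` keyed by rank, each entry with `name` and `metastoreType`) is modelled on the Dataproc Metastore Federation REST resource as we understand it. The project IDs, service IDs, location, and Hive version are hypothetical, so verify field names and accepted values against the API reference.

```python
import json
from typing import Dict, List


def central_federation_body(domains: List[Dict[str, str]], hive_version: str) -> dict:
    """Build a federation request body that stitches each domain's DPMS and BigQuery."""
    backends: Dict[str, dict] = {}
    rank = 1
    for domain in domains:
        # Each domain still owns its DPMS; the federation only references it.
        backends[str(rank)] = {
            "name": (f"projects/{domain['project']}/locations/"
                     f"{domain['location']}/services/{domain['service']}"),
            "metastoreType": "DATAPROC_METASTORE",
        }
        # The same domain project's BigQuery datasets, ranked right after its DPMS.
        backends[str(rank + 1)] = {
            "name": f"projects/{domain['project']}",
            "metastoreType": "BIGQUERY",
        }
        rank += 2
    return {"version": hive_version, "backendMetastores": backends}


# Hypothetical domains hosted in their own projects.
DOMAINS = [
    {"project": "sales-domain", "location": "us-central1", "service": "sales-dpms"},
    {"project": "finance-domain", "location": "us-central1", "service": "finance-dpms"},
]

body = central_federation_body(DOMAINS, hive_version="3.1.2")  # assumed Hive version
print(json.dumps(body, indent=2))
```

Ranking each domain’s DPMS directly before its BigQuery project is only one possible ordering; the rank you assign determines which source answers first when names overlap.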

The benefits of this design include:

- Per-domain DPMS: giving each domain its own Dataproc Metastore clearly defines the boundaries of metadata and data access, which simplifies management and access control.

- Centralised metastore federation: users get a single, easily accessible view of metadata from all domains, and with it a complete picture of the ecosystem as a whole.

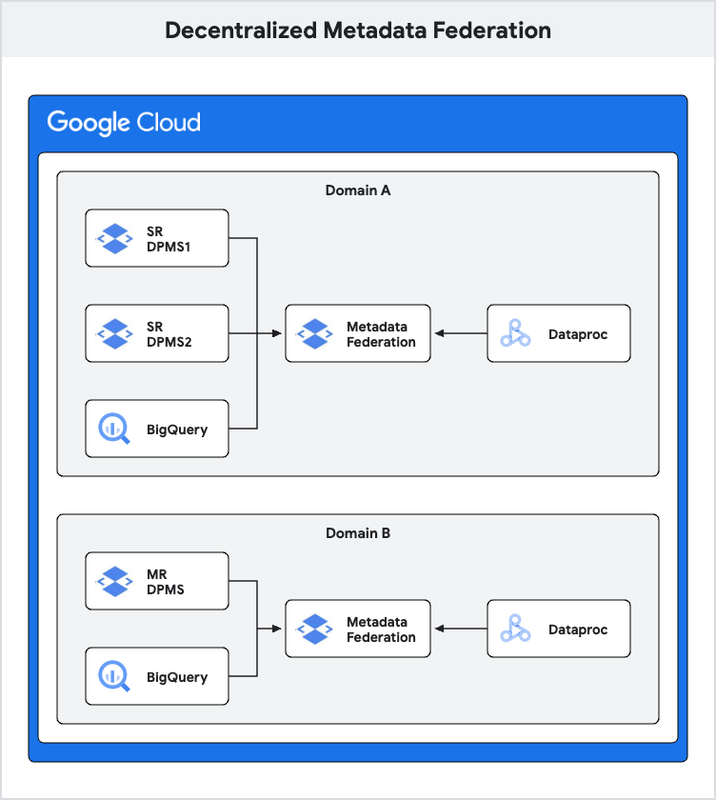

3. Per-domain DPMS with decentralised metadata federation

Use this more sophisticated approach when each domain has several DPMS instances, some single-region and some multi-region. You want each team within a domain to own and administer its own DPMS, but you also want a metadata federation that connects all DPMS instances within the domain so that the domain’s metastores can work together.

In this design, each domain manages its own Dataproc Metastores, which may be several separate DPMS instances or a single consolidated MR DPMS. Within each domain, a metastore federation links one or more DPMS instances with BigQuery and Dataplex lakes. Building on the metadata federation described in the centralised metadata federation section above, this per-domain federation can also include metadata (DPMS, BigQuery, lakes) from other domains as needed.
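
A per-domain federation can be sketched as a ranked list of backends in which the domain’s own sources come first and other domains’ sources are appended only when needed. The ordering policy shown (local DPMS instances, then BigQuery, then Dataplex lakes, then external sources) is a hypothetical choice for illustration, not a documented rule. The `name`/`metastoreType` entry shape is modelled on the federation’s backend configuration as we understand it, and all resource names are placeholders.

```python
from typing import Dict, List, Sequence, Tuple

# (metastore type, resource name); names below are hypothetical.
Source = Tuple[str, str]


def domain_federation_backends(
    local_dpms: Sequence[str],
    bigquery_project: str,
    lakes: Sequence[str],
    external: Sequence[Source] = (),
) -> Dict[str, dict]:
    """Rank the domain's own sources first, then any other domains' sources."""
    ordered: List[Source] = [("DATAPROC_METASTORE", name) for name in local_dpms]
    ordered.append(("BIGQUERY", bigquery_project))
    ordered.extend(("DATAPLEX", lake) for lake in lakes)
    ordered.extend(external)  # cross-domain sources, added only when needed
    return {
        str(rank): {"name": name, "metastoreType": kind}
        for rank, (kind, name) in enumerate(ordered, start=1)
    }


sales_backends = domain_federation_backends(
    local_dpms=[
        "projects/sales-domain/locations/us-central1/services/sales-etl-dpms",
        "projects/sales-domain/locations/us-central1/services/sales-reporting-dpms",
    ],
    bigquery_project="projects/sales-domain",
    lakes=["projects/sales-domain/locations/us-central1/lakes/sales-lake"],
    external=[(
        "DATAPROC_METASTORE",
        "projects/finance-domain/locations/us-central1/services/finance-dpms",
    )],
)

for rank, backend in sales_backends.items():
    print(rank, backend["metastoreType"], backend["name"])
```

Putting local sources at the lowest ranks means a domain’s own metadata takes precedence over anything borrowed from another domain.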

The benefits of this design include:

- An unexpected DPMS failure has far less impact than a failure of a single MR DPMS that serves every domain.

- Federation search latency is minimised, because only the relevant DPMS instances are included, and the order in which they are stitched together sets both the metadata search order and the priority when names collide.

- Namespace collisions are less likely, because the federation includes only local metastores and those required for ETL.
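
The namespace benefit can be illustrated with a small hypothetical helper that reports which database names collide across federated backends and which copies end up hidden. It assumes that the lowest rank wins a collision, and the database names are made up. Comparing a broad federation with one that includes only the needed metastores shows why the narrower one has fewer collisions.

```python
from typing import Dict, List, Set


def shadowed_databases(backends: Dict[int, Set[str]]) -> Dict[str, List[int]]:
    """Map each colliding database name to the ranks whose copy is hidden.

    Assumption: the lowest rank wins a collision, so later copies are shadowed.
    """
    winner: Dict[str, int] = {}
    hidden: Dict[str, List[int]] = {}
    for rank in sorted(backends):
        for database in sorted(backends[rank]):
            if database in winner:
                hidden.setdefault(database, []).append(rank)
            else:
                winner[database] = rank
    return hidden


# Hypothetical database names per ranked backend.
broad = {1: {"orders", "staging"}, 2: {"staging", "customers"}, 3: {"staging", "orders"}}
narrow = {1: {"orders", "staging"}, 2: {"customers"}}

print(shadowed_databases(broad))   # {'staging': [2, 3], 'orders': [3]}
print(shadowed_databases(narrow))  # {}
```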

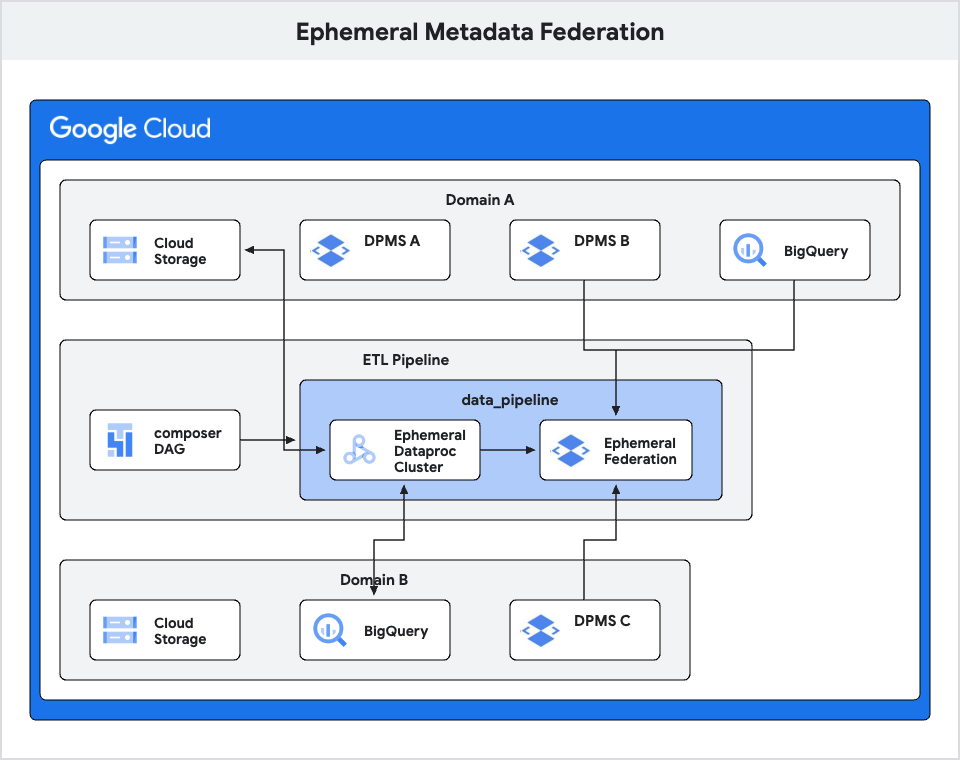

4. Ephemeral metadata federation

Building on the previous pattern, which used metadata federation within a domain, we can extend the idea to ephemeral federation across domains. This design is especially useful when ETL jobs need temporary access to metadata from several DPMS instances in different projects or domains.

This architecture uses ephemeral federation to stitch metastores together dynamically for ETL. When an ETL job needs more metadata than the domain’s own DPMS or BigQuery provides, you can create a temporary federation with DPMS instances from other projects, and the ETL job retrieves the metadata it needs through that federation. As with the previous patterns, this is built on metastore federation.

A major benefit of ephemeral federation is the flexibility to define and stitch together a different set of DPMS instances for each ETL task or workflow. The federation is limited to the metastores that are actually needed, instead of a static, broader federation. The temporary federation configuration can be orchestrated in an Airflow DAG alongside Dataproc cluster creation, so provisioning and tearing down the ephemeral federation for the duration of the ETL tasks can be fully automated.
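
The provision-then-teardown lifecycle can be sketched as a Python context manager. `create_federation` and `delete_federation` are hypothetical callables standing in for whichever client library calls or Airflow tasks you use; they are injected so the example does not assume a specific API. The `finally` block ensures that the temporary federation is removed even when the ETL step fails. The in-memory fakes and the endpoint string are made up so the sketch runs without any cloud calls.

```python
from contextlib import contextmanager
from typing import Callable, Dict, Iterator


@contextmanager
def ephemeral_federation(
    federation_id: str,
    backends: Dict[str, dict],
    create_federation: Callable[[str, Dict[str, dict]], str],
    delete_federation: Callable[[str], None],
) -> Iterator[str]:
    """Create a federation, yield its endpoint, and always delete it afterwards."""
    endpoint = create_federation(federation_id, backends)
    try:
        yield endpoint  # the ETL step runs against this endpoint
    finally:
        delete_federation(federation_id)  # teardown even if the ETL step raises


# In-memory fakes so the pattern can run without any cloud calls (hypothetical).
live_federations: Dict[str, Dict[str, dict]] = {}


def fake_create(federation_id: str, backends: Dict[str, dict]) -> str:
    live_federations[federation_id] = backends
    return f"{federation_id}.example.internal:443"  # made-up endpoint


def fake_delete(federation_id: str) -> None:
    live_federations.pop(federation_id, None)


etl_backends = {
    "1": {"name": "projects/sales-domain/locations/us-central1/services/sales-dpms",
          "metastoreType": "DATAPROC_METASTORE"},
    "2": {"name": "projects/finance-domain/locations/us-central1/services/finance-dpms",
          "metastoreType": "DATAPROC_METASTORE"},
}

with ephemeral_federation("etl-run-001", etl_backends, fake_create, fake_delete) as endpoint:
    active_during_run = "etl-run-001" in live_federations
    print("running ETL against", endpoint)

print(live_federations)  # {} once the ETL step has finished
```

In an Airflow DAG, the same guarantee can be expressed by giving the teardown task `trigger_rule="all_done"`, so that it runs whether the upstream ETL tasks succeed or fail.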

In summary

To align your infrastructure with your organisation’s objectives, you need to understand the advantages and disadvantages of each DPMS deployment pattern. When choosing a design pattern, consider these key factors:

- Assess the complexity of your data environment, including the number of teams and domains and your data processing needs.

- Determine whether your organisation needs cross-domain metadata sharing and collaboration.

- Consider how important data autonomy is and how much control over metadata each domain needs.

- Decide on the right balance between flexibility and simplicity in your metadata management architecture.

By weighing these factors carefully and understanding the trade-offs between the design patterns, you can make an informed choice that supports effective metadata management at scale and strikes the right balance between simplicity, scalability, collaboration, and resilience.