Generating an endless variety of training environments for future general agents

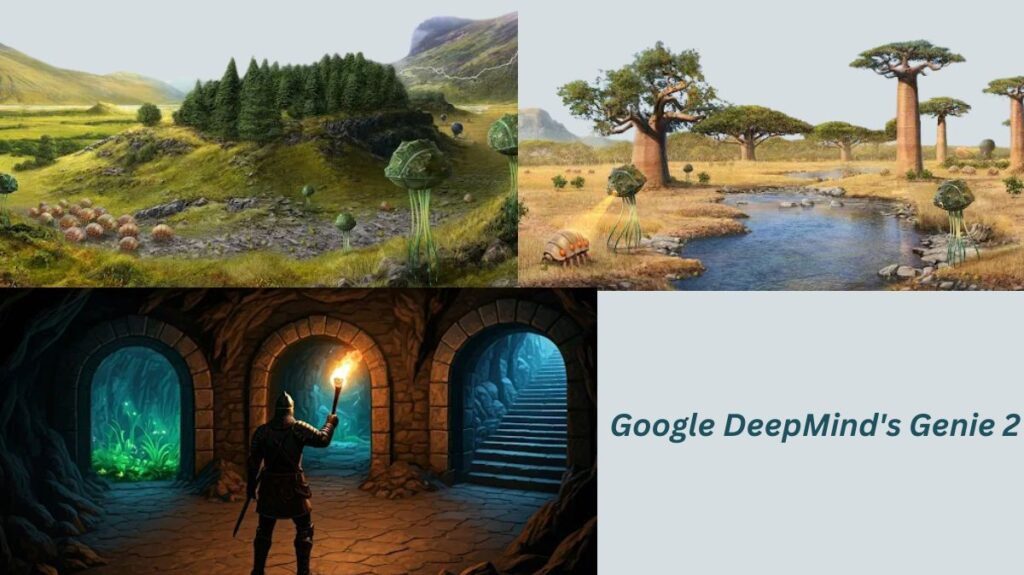

Google DeepMind presents Genie 2, a foundation world model that can generate an endless variety of playable, action-controllable 3D environments for training and evaluating embodied agents. Starting from a single prompt image, the generated world can be played by a human or an AI agent using keyboard and mouse inputs.

Games play a key role in artificial intelligence (AI) research. Their engaging nature, unique mix of challenges, and measurable progress make them ideal environments for safely testing and advancing AI capabilities.

Indeed, games have been central to Google DeepMind’s research since its founding: from early work with Atari games, to breakthroughs such as AlphaGo and AlphaStar, to research on generalist agents in collaboration with game developers. However, training more general embodied agents has historically been held back by the lack of sufficiently rich and diverse training environments.

Google DeepMind shows how Genie 2 could allow future agents to be trained and evaluated in an unlimited number of new environments. The research also opens the door to new creative workflows for prototyping interactive experiences.

Emergent capabilities of a foundation world model

Until now, world models have largely been confined to simulating narrow domains. Genie 1 introduced an approach for generating a diverse range of 2D worlds. Genie 2, which Google DeepMind demonstrates today, is a significant leap in generality: it can generate a wide variety of rich 3D worlds.

As a world model, Genie 2 can simulate virtual worlds, including the consequences of taking any action (such as jumping or swimming). It was trained on a large-scale video dataset and, like other generative models, exhibits a range of emergent capabilities at scale, including object interactions, complex character animation, physics, and the ability to model, and thus predict, the behaviour of other agents.

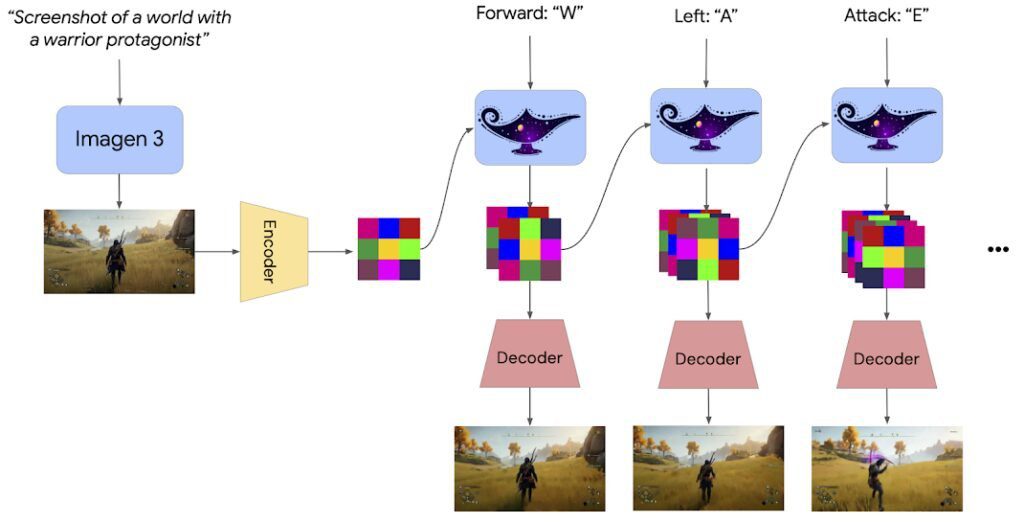

The sample videos show people interacting with Genie 2. In each case, the model is prompted with a single image generated by Imagen 3, GDM’s state-of-the-art text-to-image model. This means anyone can describe a world they want, pick their favourite rendering of that idea, and then step into and interact with the newly created world (or have an AI agent trained or evaluated in it). At each step, a person or agent provides a keyboard and mouse action, and Genie 2 simulates the next observation. Most of the samples shown last 10 to 20 seconds, but Genie 2 can generate consistent worlds for up to a minute.
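The interaction loop described above (one prompt frame, then one action and one simulated observation per step) can be sketched as follows. This is a minimal, hypothetical illustration: `ToyWorldModel`, `predict_next`, `play`, and the WASD key mapping are invented for this example, and a "frame" is reduced to a character’s (x, y) position instead of an image. It shows only the control flow; it is not Genie 2’s interface, which the article does not describe.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a rendered frame: the (x, y) position of the player character.
Frame = Tuple[int, int]

# Hypothetical mapping from keyboard keys to movement; unknown keys do nothing.
KEY_TO_DELTA: Dict[str, Tuple[int, int]] = {"W": (0, 1), "S": (0, -1), "A": (-1, 0), "D": (1, 0)}


class ToyWorldModel:
    """Hypothetical world model that predicts the next frame from the history and an action.

    A learned model would generate an image conditioned on past frames; this toy
    version simply applies the action's movement to the most recent frame.
    """

    def predict_next(self, history: List[Frame], action: str) -> Frame:
        x, y = history[-1]
        dx, dy = KEY_TO_DELTA.get(action, (0, 0))
        return (x + dx, y + dy)


def play(model: ToyWorldModel, prompt_frame: Frame,
         policy: Callable[[List[Frame]], str], num_steps: int) -> List[Frame]:
    """Start from one prompt frame, then alternate: choose an action, simulate the next frame."""
    history = [prompt_frame]
    for _ in range(num_steps):
        action = policy(history)  # a human at the keyboard or an AI agent picks the input
        history.append(model.predict_next(history, action))
    return history


# Usage: a scripted "player" pressing W, W, D and an unmapped key X.
keys = iter(["W", "W", "D", "X"])
trajectory = play(ToyWorldModel(), (0, 0), lambda history: next(keys), 4)
print(trajectory)  # [(0, 0), (0, 1), (0, 2), (1, 2), (1, 2)]
```

The `policy` argument is the only place where a human or an agent enters the loop, which is why the same world model can serve both for play and for agent training or evaluation.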

Action controls

Genie 2 responds intelligently to key presses: it identifies which character the player is controlling and moves that character correctly in response to the input.

Creating counterfactual scenarios

Genie 2 can generate several different trajectories from the same starting frame, which makes it possible to simulate counterfactual experiences for training agents.
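A minimal sketch of this branching, under a deliberately simplified assumption: the hypothetical `predict_next` function stands in for a learned model, and a "frame" is just a character position. Every branch starts from the identical frame and differs only in its action sequence, which is what makes the trajectories counterfactuals of one another. In a real diffusion-based world model, sampling noise would also make branches differ even under identical actions; this sketch leaves that out.

```python
from typing import Dict, List, Sequence, Tuple

Frame = Tuple[int, int]  # hypothetical toy frame: the player's (x, y) position
KEY_TO_DELTA = {"W": (0, 1), "S": (0, -1), "A": (-1, 0), "D": (1, 0)}


def predict_next(history: List[Frame], action: str) -> Frame:
    """Hypothetical stand-in for a learned world model's next-frame prediction."""
    x, y = history[-1]
    dx, dy = KEY_TO_DELTA.get(action, (0, 0))
    return (x + dx, y + dy)


def rollout(start: Frame, actions: Sequence[str]) -> List[Frame]:
    """Simulate one trajectory by feeding actions to the model frame by frame."""
    history = [start]
    for action in actions:
        history.append(predict_next(history, action))
    return history


def counterfactual_rollouts(start: Frame,
                            branches: Dict[str, Sequence[str]]) -> Dict[str, List[Frame]]:
    """Branch several trajectories from the same starting frame, one per action sequence."""
    return {name: rollout(start, actions) for name, actions in branches.items()}


# Usage: "what if the agent had gone left instead of right?"
branches = counterfactual_rollouts((0, 0), {
    "go_left": ["A", "A", "W"],
    "go_right": ["D", "D", "W"],
})
for name, frames in branches.items():
    print(name, frames)
```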

Long-term memory

Genie 2 can remember parts of the world that are no longer in view and render them accurately when they become visible again.

Long video generation with newly generated content

Genie 2 generates new, plausible content on the fly and maintains a consistent world for up to a minute.

Various settings

Genie 2 can generate different perspectives, such as first-person, isometric, and third-person driving views.

Three-dimensional structures

Genie 2 learned to create complex 3D visual scenes.

Object affordances and interactions

Genie 2 models a variety of object interactions, such as popping balloons, opening doors, and shooting explosive barrels.

Animation of characters

Genie 2 learned to animate many kinds of characters performing different activities.

NPCs

Genie 2 models other agents, including complex interactions with them.

Physics

Genie 2 models water effects.

Smoke

Genie 2 models smoke effects.

Gravity

Genie 2 models gravity.

Lighting

Genie 2 models point and directional lighting.

Reflections

Genie 2 simulates coloured lighting, bloom, and reflections.

Playing from real-world images

Genie 2 can also be prompted with real-world photographs, bringing to life scenes such as grass swaying in the wind or water flowing in a river.

Genie 2 makes quick prototyping possible

Genie 2 makes it easy to rapidly prototype diverse interactive experiences, so researchers can quickly experiment with new environments in which to train and test embodied AI agents.

Thanks to Genie 2’s out-of-distribution generalisation, concept art and drawings can be turned into fully interactive environments. This lets artists and designers prototype quickly, which can bootstrap the creative process of environment design and further accelerate research.

AI agents acting in the world model

By using Genie 2 to quickly create rich and diverse environments for AI agents, GDM’s researchers can also generate evaluation tasks that agents have not seen during training.

The SIMA agent is designed to complete tasks in a range of 3D game worlds by following natural-language instructions.

Conversely, SIMA can be used to evaluate Genie 2’s capabilities. Here, SIMA is instructed to look around and explore behind the house, to test whether Genie 2 generates consistent environments.

Although this research is at an early stage, and both agent and environment generation capabilities have substantial room for improvement, Google DeepMind believes Genie 2 is the path to solving a structural problem: training embodied agents safely while achieving the breadth and generality required to progress toward AGI.

Diffusion world model

Genie 2 is an autoregressive latent diffusion model trained on a large video dataset. Video frames are first passed through an autoencoder, and the resulting latent frames are fed into a large transformer dynamics model, which is trained with a causal mask similar to those used by large language models.
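The causal mask is what allows the dynamics model to be trained on whole sequences while each position sees only the past. The sketch below builds such a mask in plain Python and applies it in a softmax over attention scores. It assumes, for illustration only, that each latent frame is a single attention position; how Genie 2 actually splits latent frames into tokens is not described in this article, and `causal_mask` and `masked_softmax` are hypothetical helper names.

```python
import math
from typing import List


def causal_mask(num_frames: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. when j <= i."""
    return [[j <= i for j in range(num_frames)] for i in range(num_frames)]


def masked_softmax(scores: List[float], allowed: List[bool]) -> List[float]:
    """Softmax over the allowed positions; disallowed (future) positions get weight 0."""
    kept = [s for s, ok in zip(scores, allowed) if ok]
    m = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


mask = causal_mask(4)
for row in mask:
    print(["x" if ok else "." for ok in row])  # lower-triangular pattern

# Attention weights for latent frame 1: only frames 0 and 1 are visible,
# so the large scores on future frames 2 and 3 are ignored.
weights = masked_softmax([2.0, 2.0, 5.0, 9.0], mask[1])
print(weights)  # [0.5, 0.5, 0.0, 0.0]
```

Because no position can read ahead, the same trained network can later be run one frame at a time, which is what makes the autoregressive sampling described next possible.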

At inference time, Genie 2 can be sampled autoregressively, frame by frame, conditioned on individual actions and the past latent frames. Classifier-free guidance is used to improve action controllability.
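Classifier-free guidance combines two predictions from the same model, one conditioned on the action and one without it, as guided = uncond + w * (cond - uncond); a scale w > 1 pushes the output further in the direction the action implies. In the standard formulation, the condition is randomly dropped during training so that a single network can provide both predictions. The sketch below applies this rule inside a frame-by-frame autoregressive loop. It is heavily simplified and hypothetical: `toy_denoiser` is a made-up stand-in that returns one prediction per frame and looks only at the previous latent, whereas a real latent diffusion model would condition on past frames and apply guidance at every denoising step.

```python
from typing import List, Optional

Vector = List[float]  # a latent frame, flattened to a short list of floats for illustration


def guided_prediction(pred_uncond: Vector, pred_cond: Vector, guidance_scale: float) -> Vector:
    """Classifier-free guidance: uncond + w * (cond - uncond), applied element-wise.

    w = 0 gives the unconditional prediction, w = 1 the conditional one, and
    w > 1 extrapolates further in the direction the action condition points.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(pred_uncond, pred_cond)]


def toy_denoiser(past_latent: Vector, action: Optional[Vector]) -> Vector:
    """Hypothetical stand-in for the model's prediction of the next latent frame.

    action=None plays the role of the dropped ("null") condition used for the
    unconditional branch.
    """
    if action is None:
        return list(past_latent)  # without an action, assume the scene stays put
    return [p + a for p, a in zip(past_latent, action)]


def sample_frames(first_latent: Vector, actions: List[Vector],
                  guidance_scale: float) -> List[Vector]:
    """Autoregressive sampling: each new latent frame depends on the previous one and one action."""
    latents = [first_latent]
    for action in actions:
        cond = toy_denoiser(latents[-1], action)
        uncond = toy_denoiser(latents[-1], None)
        latents.append(guided_prediction(uncond, cond, guidance_scale))
    return latents


print(sample_frames([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], guidance_scale=2.0))
# [[0.0, 0.0], [2.0, 0.0], [2.0, 2.0]]
```

With `guidance_scale=1.0` the same call returns `[[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]`, the purely conditional result; the larger scale exaggerates each action’s effect, which is the sense in which guidance strengthens action controllability.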

Developing its technologies responsibly

Genie 2 shows how foundation world models can be used to create diverse 3D environments and accelerate agent research. Although this research direction is still at an early stage, GDM is excited to keep improving Genie’s world-generation capabilities in terms of generality and consistency.

As with SIMA, the goal of this research is to build more general AI systems and agents that can understand and safely carry out a wide range of tasks in ways that help people, both online and in the physical world.