What is Reinforcement Learning? And Its Applications


What is Reinforcement Learning?

Reinforcement Learning (RL) is a feedback-based machine learning technique in which an agent learns how to behave in an environment by performing actions and observing their results. Each good action earns the agent positive feedback (a reward), while each bad action earns it negative feedback (a penalty).

Unlike supervised learning, Reinforcement Learning does not use labeled data; the agent learns from this feedback alone.

- RL is used to solve problems where decisions are made sequentially and the goal is long-term, such as game playing and robotics.

- The agent explores the environment on its own. Its goal is to improve its performance by maximizing the total positive reward it receives.

- Reinforcement Learning is a core technique for building AI agents. Because the agent learns from experience, its behavior does not need to be pre-programmed.

- Example: Suppose an AI agent is placed in a maze and must find a diamond. The agent interacts with the environment by performing actions, which change its state and earn it rewards or penalties.

- By taking an action, moving to a new state (or remaining in the same one), and receiving feedback, the agent explores and learns about the environment.

- The agent learns which actions lead to positive feedback (rewards) and which lead to negative feedback (penalties). A reward gives the agent positive points, and a penalty gives it negative points.
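The maze example can be sketched as a small Python program showing the action, state, and reward loop. This is a minimal illustration built on assumptions: the `GridMaze` class, its 3x3 layout, the reward values (+10 for reaching the diamond, -1 for every other move) and the purely random agent are hypothetical choices for this example, not part of any library.

```python
import random


class GridMaze:
    """Hypothetical 3x3 maze: the agent starts at (0, 0), the diamond is at (2, 2)."""

    ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

    def __init__(self, size=3, diamond=(2, 2)):
        self.size = size
        self.diamond = diamond
        self.state = (0, 0)

    def reset(self):
        self.state = (0, 0)
        return self.state

    def step(self, action):
        """Apply an action and return (next_state, reward, done)."""
        d_row, d_col = self.ACTIONS[action]
        row, col = self.state
        new_row, new_col = row + d_row, col + d_col
        # Moving into a wall leaves the agent in the same state.
        if 0 <= new_row < self.size and 0 <= new_col < self.size:
            self.state = (new_row, new_col)
        if self.state == self.diamond:
            return self.state, 10, True   # reward: the diamond was found
        return self.state, -1, False      # penalty: every other move costs a point


def run_random_episode(env, rng, max_steps=100):
    """Let an untrained agent act at random and add up the feedback it receives."""
    state = env.reset()
    total_reward = 0
    for _ in range(max_steps):
        action = rng.choice(list(GridMaze.ACTIONS))
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return state, total_reward


if __name__ == "__main__":
    final_state, score = run_random_episode(GridMaze(), random.Random(0))
    print(final_state, score)
```

A learning agent would use the collected rewards and penalties to prefer actions that reach the diamond in fewer moves; the random agent here only shows the feedback loop itself.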

Terms used in Reinforcement Learning:

- Agent: An entity that can perceive and explore the environment and act upon it.

- Environment: The situation in which the agent is present or by which it is surrounded.

- Action: A move the agent makes within the environment.

- State: The situation the environment returns after each action the agent takes.

- Reward: Feedback returned to the agent by the environment to evaluate the agent’s action.

- Policy: The strategy the agent uses to choose its next action based on its current state.

- Value: The expected long-term return, calculated with a discount factor, as opposed to the short-term reward.

- Q-value: Mostly the same as the value, but it takes one additional parameter, the current action (a).
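To make the value and Q-value terms concrete, here is a small Python sketch. It assumes the standard discounted return, in which a reward received k steps in the future is multiplied by gamma**k for a discount factor gamma between 0 and 1. The value of a state is the expected (average) return over the trajectories that can follow it; the code computes the return of one trajectory. The reward sequence and the Q-table entries are made-up numbers, and the state name `"s1"` is hypothetical.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where a reward k steps in the future is weighted by gamma**k."""
    total = 0.0
    for k, reward in enumerate(rewards):
        total += (gamma ** k) * reward
    return total


# Hypothetical trajectory: three costly moves, then the goal.
rewards = [-1, -1, -1, 10]
immediate = rewards[0]                              # short-term view: only the next reward
long_term = discounted_return(rewards, gamma=0.9)   # long-term, discounted view

# A Q-table keeps one estimate per (state, action) pair: the extra parameter "a".
q_table = {
    ("s1", "down"): 9.0,
    ("s1", "left"): 5.0,
}
best_action = max(["down", "left"], key=lambda a: q_table[("s1", a)])
```

Although the first reward is negative, the discounted long-term return of this trajectory is positive, which is why an agent that maximizes value can accept short-term penalties.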

Important Aspects of Reinforcement Learning:

- In RL, the agent is not told anything about the environment or which actions it should take.

- It is based on a trial-and-error process.

- The agent chooses its next action, and thereby changes its state, based on the feedback from the previous action.

- The agent may receive delayed rewards: the feedback for an action can arrive several steps after the action was taken.

- The environment is stochastic, and the agent must explore it in order to maximize its positive rewards.
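Trial and error in a stochastic environment can be illustrated with an ε-greedy agent on a multi-armed bandit, a common textbook setting chosen here as an assumption. The agent never sees the hidden payout probabilities (0.2, 0.5 and 0.8 below are hypothetical), the exploration rate ε = 0.1 and the step count are assumed values, and the function name `epsilon_greedy_bandit` is illustrative.

```python
import random


def epsilon_greedy_bandit(probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which arm pays best purely by trial and error.

    probs[i] is the hidden chance that arm i pays a reward of 1; the agent
    only ever observes the rewards it receives.
    """
    rng = random.Random(seed)
    counts = [0] * len(probs)
    estimates = [0.0] * len(probs)   # running average reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(probs))                           # explore
        else:
            arm = max(range(len(probs)), key=lambda i: estimates[i])  # exploit
        reward = 1 if rng.random() < probs[arm] else 0                # stochastic feedback
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]     # incremental mean
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
```

Without the occasional random exploration, the agent could lock onto the first arm that happened to pay off and never discover the better one.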

There are three main approaches to implementing reinforcement learning:

Value-based: The value-based approach aims to find the optimal value function, that is, the maximum value achievable at each state under any policy. Here the agent estimates the long-term return it can expect at any state s under a policy π.
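As a rough sketch of a value-based method, the example below uses tabular Q-learning, one well-known value-based algorithm chosen here for illustration, on a hypothetical five-cell corridor whose right-most cell is the goal. The learning rate `alpha`, discount `gamma`, exploration rate `epsilon` and episode count are assumed values. The agent only learns Q-values; its policy is then read off by picking the action with the highest value in each state.

```python
import random

N_STATES = 5          # cells 0..4; cell 4 is the goal (terminal)
ACTIONS = [-1, 1]     # move left or move right


def step(state, action):
    """Deterministic corridor: reward 1 for reaching the goal, 0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward, next_state == N_STATES - 1


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)        # explore
            else:
                action = greedy(q, state, rng)      # exploit current estimates
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted best value of the next state.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
greedy_policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
```

After training, moving right has the higher Q-value in every non-goal cell, so the derived policy walks straight to the goal.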

Policy-based: The policy-based approach searches directly for the policy that yields the maximum future reward, without using a value function. The agent tries to learn a policy under which the action taken at each step helps maximize the future reward.
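For the policy-based approach, the sketch below adjusts a softmax policy directly with the REINFORCE (policy-gradient) update and keeps no value function at all. The two-action problem, its hidden reward probabilities (0.3 and 0.9), the learning rate and the step count are assumptions for illustration; practical policy-gradient methods usually add refinements such as a baseline.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into action probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(reward_probs, steps=3000, lr=0.1, seed=0):
    """Learn the policy parameters directly; no value estimates are kept."""
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_probs)   # policy parameters, one per action
    for _ in range(steps):
        pi = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=pi)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # The gradient of log pi(action) w.r.t. prefs[i] is 1[i == action] - pi[i].
        for i in range(len(prefs)):
            grad_log = (1.0 if i == action else 0.0) - pi[i]
            prefs[i] += lr * reward * grad_log
    return softmax(prefs)


policy = reinforce_bandit([0.3, 0.9])
```

Actions that earn rewards have their probability pushed up, so the policy itself drifts toward the better action.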

Model-based: In the model-based approach, a virtual model of the environment is created, and the agent explores that model to learn about the environment. Because the model representation differs from one environment to another, there is no single solution or algorithm for this approach.

Reinforcement Learning’s Elements

Reinforcement learning has four main elements:

- Policy

- Value

- Reward Signal

- Model of the Environment

Policy: A policy defines how the agent behaves at a given time. It maps the perceived states of the environment to the actions taken in those states. The policy is the core element of RL because it alone determines the agent’s behavior. In some cases it is a simple function or a lookup table; in others it requires general computation, such as a search process.
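The two simple forms of policy mentioned above, a lookup table and a function, can be sketched in Python. The state names, actions, the grid rule inside `policy_function`, and the helper `act` are hypothetical examples rather than a standard interface.

```python
# A policy as a lookup table: each state maps directly to an action.
policy_table = {
    "start": "right",
    "corridor": "right",
    "junction": "down",
}


def policy_function(state):
    """A policy as a function of a hypothetical (row, col) grid position."""
    row, col = state
    # Move right until the last column (index 2) is reached, then move down.
    return "right" if col < 2 else "down"


def act(policy, state):
    """Both forms answer the same question: which action to take in this state?"""
    return policy[state] if isinstance(policy, dict) else policy(state)
```

A table only works when the states can be listed in advance; a function can generalize to states it has never stored.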

Reward Signal: The reward signal defines the goal of the reinforcement learning problem. At each state, the environment immediately sends the agent a signal called a reward. Rewards are determined by the good and bad actions the agent takes. The agent’s main objective is to maximize the total reward it collects for good actions. If an action chosen by the agent leads to a low reward, the reward signal can change the policy so that the agent selects other actions in the future.

Value Function: The value function tells the agent how good a situation and an action are, and how much reward it can expect. A reward is the immediate signal for each good or bad action, whereas the value function indicates which states and actions are desirable in the long run. The value function depends on rewards, because without rewards there could be no value. The purpose of estimating values is to obtain more reward.

Model of the Environment: The model is the last element of reinforcement learning; it mimics the behavior of the environment. Using the model, one can make inferences about how the environment will behave. For example, given a state and an action, a model can predict the next state and the reward.

The model is used for planning: it lets the agent decide on a course of action by considering possible future situations before actually experiencing them. Approaches that solve RL problems with the help of a model are called model-based approaches.
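To illustrate planning with a model, the Python sketch below stores a hypothetical model as a dictionary that, given a state and an action, returns the predicted next state and reward. It then plans with value iteration, one standard planning method chosen here as an assumption, without the agent taking a single real step. The three-state chain (`A`, `B`, `C`), its rewards and the discount factor are made up.

```python
# Hypothetical deterministic model: (state, action) -> (next_state, reward).
MODEL = {
    ("A", "stay"): ("A", 0.0),
    ("A", "go"): ("B", 0.0),
    ("B", "stay"): ("B", 0.0),
    ("B", "go"): ("C", 1.0),
    ("C", "stay"): ("C", 0.0),   # C is absorbing and gives no further reward
    ("C", "go"): ("C", 0.0),
}
STATES = ["A", "B", "C"]
ACTIONS = ["stay", "go"]


def value_iteration(model, gamma=0.9, tol=1e-8):
    """Plan using only the model's predictions, not the real environment."""

    def backup(values, s, a):
        next_state, reward = model[(s, a)]
        return reward + gamma * values[next_state]

    values = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            best = max(backup(values, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    plan = {s: max(ACTIONS, key=lambda a: backup(values, s, a)) for s in STATES}
    return values, plan


values, plan = value_iteration(MODEL)
```

The resulting plan chooses `go` in `A` even though that move gives no immediate reward, because the model predicts it leads to the rewarding transition from `B`.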

Types of Reinforcement Learning

There are two main types of reinforcement learning:

- Positive reinforcement

- Negative reinforcement

Positive reinforcement:

- Positive reinforcement means adding something to increase the likelihood that the expected behavior will occur again. It has a positive effect on the agent’s behavior and strengthens it.

- Positive reinforcement can sustain changes for a long time, but too much of it may lead to an overload of states, which can diminish the results.

Negative reinforcement:

- Negative reinforcement is the opposite of positive reinforcement: it increases the likelihood that a specific behavior will occur again by removing or avoiding a negative condition.

- Depending on the situation and the behavior, it can be more effective than positive reinforcement, but it only reinforces enough to meet the minimum required behavior.

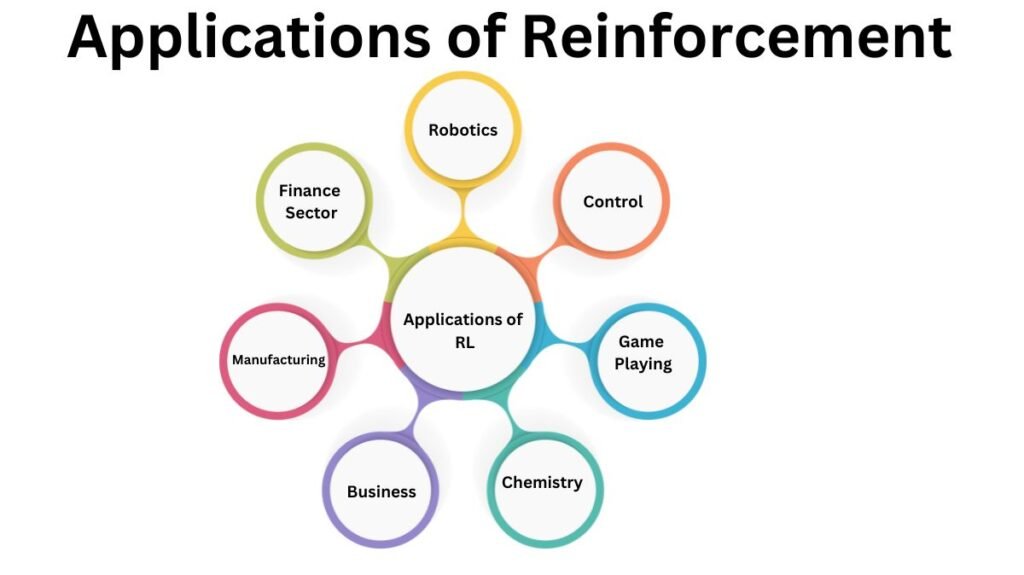

Applications of Reinforcement Learning

Robotics: RL is used in robotics for navigation, soccer, walking, and juggling.

Control: Reinforcement learning is used for control tasks such as piloting helicopters, running factory processes, and admission control in telecommunications.

Game Playing: RL can be used to play games such as tic-tac-toe and chess.

Chemistry: RL can be used to optimize chemical reactions.

Business: RL is now used for business strategy planning.

Manufacturing: In automobile manufacturing, robots use deep reinforcement learning to pick up goods and place them in containers.

Finance Sector: In the finance sector, RL is used to evaluate trading strategies.

Conclusion:

Reinforcement Learning is one of the most interesting and useful areas of Machine Learning. In RL, the agent explores the environment without any human intervention, and RL is a core learning approach in Artificial Intelligence. However, when enough data is available to solve a problem, other ML techniques may be more efficient. The main issue with RL algorithms is that some factors, such as delayed feedback, can slow down learning.