ROCm 6.1.3 Software with AMD Radeon PRO GPUs for LLM inference.

Large Language Models (LLMs) are no longer limited to major businesses operating cloud-based services with specialized IT teams. New open-source LLMs like Meta’s Llama 2 and 3, including the recently released Llama 3.1, combined with the capability of AMD hardware, allow even small organizations to run their own customized AI tools locally on regular desktop workstations, eliminating the need to store sensitive data online.

AMD Radeon PRO W7900

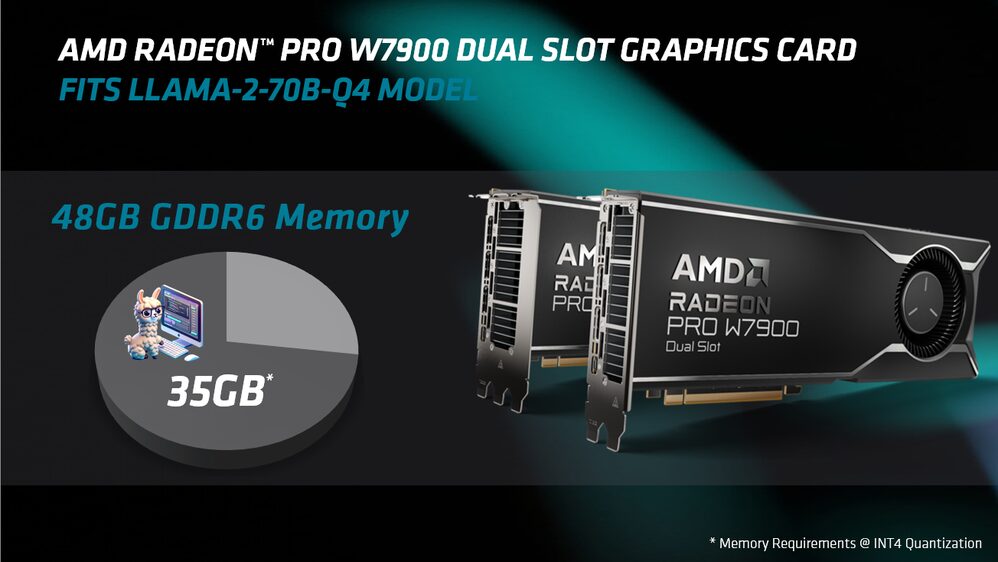

Workstation GPUs like the new AMD Radeon PRO W7900 Dual Slot offer industry-leading performance per dollar with Llama, making it affordable for small businesses to run custom chatbots, retrieve technical documentation, or create personalized sales pitches. The more specialized Code Llama models allow programmers to generate and optimize code for new digital products. These GPUs are equipped with dedicated AI accelerators and enough on-board memory to run even the larger language models.

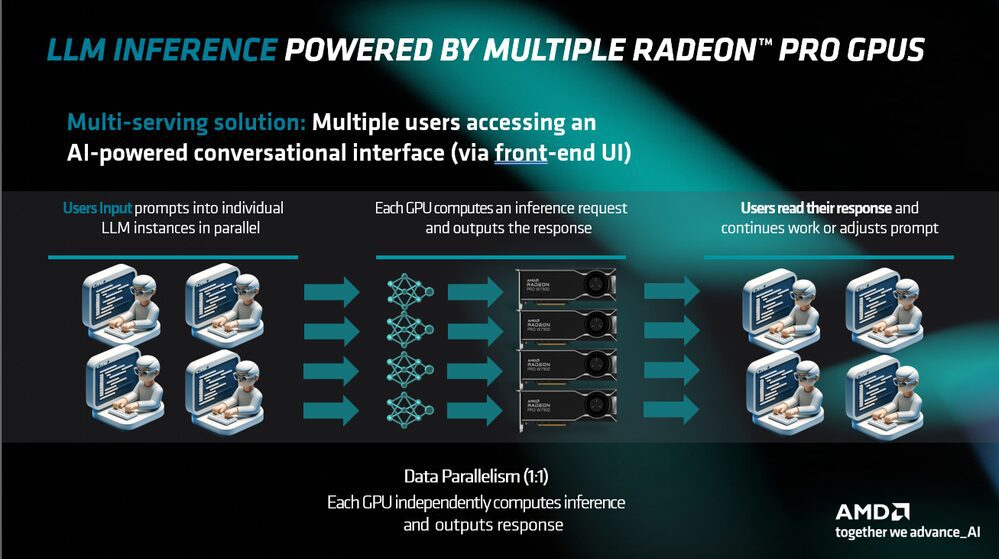

And now that ROCm 6.1.3, the latest release of AMD’s open software stack, lets AI tools run across multiple Radeon PRO GPUs, SMEs and developers can support more users and larger, more complex LLMs than before.

New applications for LLMs in enterprise AI

AI is commonly used in technical domains like data analysis and computer vision, and generative AI tools are being embraced by the design and entertainment industries, but the potential applications of the technology are much more diverse.

With the help of specialized LLMs, such as Meta’s open-source Code Llama, web designers, programmers, and app developers can create functional code in response to straightforward text prompts or debug already-existing code bases. Meanwhile, Llama, the parent model of Code Llama, has a plethora of potential applications for “Enterprise AI,” including product personalization, customer service, and information retrieval.

Although pre-made models are designed to cater to a broad spectrum of users, small and medium-sized enterprises (SMEs) can leverage retrieval-augmented generation (RAG) to integrate their own internal data, such as product documentation or customer records, into existing AI models. This allows for further refinement of the models and produces more accurate AI-generated output that requires less manual editing.
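
The retrieval step of RAG can be sketched in a few lines. This is a minimal illustration, not how any particular product implements it: it ranks documents by simple word overlap (real systems typically use vector embeddings), and the sample documents, function names, and prompt wording are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical internal documents an SME might want the model to draw on.
DOCS = [
    "The X100 router supports firmware updates over USB.",
    "Returns are accepted within 30 days of purchase.",
    "Our support line is open from 9am to 5pm on weekdays.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, document):
    """Count the word tokens shared by the query and the document."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(document))
    return sum(min(n, doc_counts[tok]) for tok, n in query_counts.items())

def retrieve(query, documents, top_k=1):
    """Return the top_k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]

def build_prompt(query, documents, top_k=1):
    """Prepend the retrieved context to the question before it goes to the LLM."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    print(build_prompt("How many days do I have for returns?", DOCS))
```

The model itself is unchanged in this approach; only the prompt is enriched with the company's own data at query time.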

How can small businesses use LLMs?

So how might an SME use a customized Large Language Model? Here are a few examples. Using an LLM tailored to its own internal data:

- A local store could use a chatbot to answer customer queries, even out of hours.

- At a larger store, helpline staff could retrieve customer information more quickly.

- A sales team could use AI features in its CRM system to generate customized client pitches.

- An engineering firm could generate documentation for complex technical products.

- A solicitor could generate first drafts of contracts.

- A doctor could summarize patient calls and record the information in medical records.

- A mortgage broker could fill in application forms using data from clients’ documents.

- A marketing agency could generate specialized copy for blogs and social media posts.

- An app development agency could generate and optimize code for new digital products.

- A web developer could look up syntax documentation and online standards.

That is only a small sample of the potential that exists in enterprise AI.

Why not use the cloud for running LLMs?

While there are many cloud-based choices available from the IT sector to implement AI services, small companies have many reasons to host LLMs locally.

Data safety

Predibase research indicates that the main barrier preventing businesses from using LLMs in production is their apprehension about sharing sensitive data. Using AI models locally on a workstation eliminates the need to transfer private customer information, code, or product documentation to the cloud.

Reduced latency

Running LLMs locally rather than on a remote server reduces latency in use cases where a rapid response is critical, such as running a chatbot or looking up product documentation to give real-time support to customers calling a helpline.

Greater control over mission-critical tasks

By running LLMs locally, technical staff can fix issues or roll out updates immediately, without waiting on a service provider located in a different time zone.

The ability to sandbox test tools

By using a single workstation as a sandbox, IT teams can test and develop new AI tools before rolling them out more widely within a company.

How can small businesses use AMD GPUs to implement LLMs?

Hosting its own custom AI tools need not be complicated or costly for an SME, since applications like LM Studio make it simple to run LLMs on standard Windows desktop and laptop computers. LM Studio is designed to run on AMD GPUs via the HIP runtime API, so it can use the specialized AI Accelerators in modern AMD graphics cards to boost performance, and retrieval-augmented generation can be enabled easily to customize the output.
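
A locally hosted model can then be queried from other software on the same machine. The sketch below assumes LM Studio's local server feature is enabled and reachable at its commonly used default address, `http://localhost:1234/v1`, and that it accepts OpenAI-style chat completion requests. The article does not describe this workflow, so the address, the `local-model` placeholder, and the system prompt are assumptions to adapt to your own setup.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"

def build_chat_request(question, model="local-model", temperature=0.2):
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,  # placeholder name; use the identifier of the loaded model
        "messages": [
            {"role": "system", "content": "You are a helpful assistant for our staff."},
            {"role": "user", "content": question},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(question):
    """Send the question to the local server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(question), timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

if __name__ == "__main__":
    # Requires the local server to be running with a model loaded.
    print(ask("Summarize our returns policy in one sentence."))
```

Because the request never leaves `localhost`, the prompt and any internal data included in it stay on the workstation.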

While consumer GPUs such as the Radeon RX 7900 XTX have enough memory to run smaller models, such as the 7-billion-parameter Llama-2-7B, professional GPUs such as the 32GB Radeon PRO W7800 and 48GB Radeon PRO W7900 have more on-board memory, which allows them to run larger and more accurate models, such as the 30-billion-parameter Llama-2-30B-Q8.
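
A rough rule of thumb shows why on-board memory matters. The sketch below estimates the memory needed for the model weights alone and ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The 24 GB figure for the Radeon RX 7900 XTX is taken from its published specification rather than from this article, and the function names are illustrative.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8

def fits(params_billions, bits_per_weight, vram_gb):
    """True if the weights alone fit in the given amount of GPU memory."""
    return weight_memory_gb(params_billions, bits_per_weight) <= vram_gb

# On-board memory per card, in GB.
CARDS = {
    "Radeon RX 7900 XTX": 24,  # assumption: published spec, not stated in the article
    "Radeon PRO W7800": 32,
    "Radeon PRO W7900": 48,
}

if __name__ == "__main__":
    # A 30-billion-parameter model quantized to 8 bits per weight (Q8).
    for name, vram in CARDS.items():
        verdict = "fits" if fits(30, 8, vram) else "does not fit"
        print(f"{name} ({vram} GB): 30B at 8-bit {verdict} (weights only)")
```

By this estimate, a 7-billion-parameter model needs about 14 GB at 16 bits per weight, while a 30-billion-parameter model at 8 bits needs about 30 GB, which is more than a 24 GB card can hold.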

For more demanding tasks, users can host their own customized LLMs directly. Thanks to ROCm 6.1.3, the latest release of the open-source software stack of which HIP is a part, an IT department within an organization could set up a Linux-based system with four Radeon PRO W7900 cards to handle requests from multiple users at once.
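
One common way to spread a model over several GPUs is to give each card a contiguous block of layers. The sketch below only illustrates that bookkeeping; it is not ROCm's API or any framework's actual scheduler, and the 80-layer model is a hypothetical example.

```python
def partition_layers(num_layers, num_gpus):
    """Assign contiguous layer ranges to GPUs as evenly as possible."""
    base, extra = divmod(num_layers, num_gpus)
    assignment = []
    start = 0
    for gpu in range(num_gpus):
        # The first `extra` GPUs each take one additional layer.
        count = base + (1 if gpu < extra else 0)
        assignment.append(range(start, start + count))
        start += count
    return assignment

if __name__ == "__main__":
    # Hypothetical 80-layer model split over four cards.
    for gpu, layers in enumerate(partition_layers(80, 4)):
        print(f"GPU {gpu}: layers {layers.start} to {layers.stop - 1}")
```

With four 48 GB cards, the combined 192 GB of on-board memory can hold models that would not fit on any single card.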

In testing with Llama 2, the Radeon PRO W7900’s performance-per-dollar surpassed that of the NVIDIA RTX 6000 Ada Generation, the competitor’s current top-of-the-range card, by up to 38%. For SMEs, that makes AMD hardware a cost-effective way to run AI workloads.
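
Performance-per-dollar is simply throughput divided by price, and the advantage is the ratio of the two cards' values. The sketch below shows the arithmetic only. The throughput and price figures are placeholders chosen to make the calculation easy to follow; they are not benchmark results or real prices for any card.

```python
def perf_per_dollar(tokens_per_second, price_usd):
    """Throughput delivered per dollar of purchase price."""
    return tokens_per_second / price_usd

def advantage_percent(card_a, card_b):
    """How much higher card A's performance-per-dollar is than card B's, in percent.

    Each card is a (tokens_per_second, price_usd) tuple.
    """
    ratio = perf_per_dollar(*card_a) / perf_per_dollar(*card_b)
    return (ratio - 1) * 100

if __name__ == "__main__":
    # Placeholder figures for illustration only, not measurements.
    card_a = (50, 2000)  # slower but cheaper
    card_b = (60, 3000)  # faster but more expensive
    print(f"Card A advantage: {advantage_percent(card_a, card_b):.1f}%")
```

Note that a card can lead on performance-per-dollar even when its raw throughput is lower, as the placeholder figures show.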

A new generation of AI solutions for small businesses is powered by AMD GPUs

Now that deploying and customizing LLMs is easier than ever, even small and medium-sized enterprises (SMEs) can run their own AI tools, tailored to a variety of business and coding tasks.

Because of their large on-board memory capacity and specialized AI hardware, professional desktop GPUs like the AMD Radeon PRO W7900 are well suited to running open-source LLMs like Llama 2 and 3 locally, eliminating the need to send sensitive data to the cloud. And because ROCm enables inference to be shared across multiple Radeon PRO GPUs, companies can now host even larger AI models and serve more users at a fraction of the price of competing solutions.