IBM’s Watson Large Speech Model for Phones (IBM LSM)

Most people are now familiar with the term large language models, or LLMs, thanks to generative AI’s remarkable ability to generate text and images and its potential to transform how businesses carry out essential operations. Interacting with AI through a chat interface, or having it carry out specific tasks for you, is more feasible than ever. Enormous effort is going into adopting this technology to improve our everyday lives, both as consumers and as individuals.

But what about the voice domain? Because LLMs have received so much attention as a catalyst for better generative AI chat capabilities, few people are discussing how they can improve voice-based conversational experiences. Rigid conversational experiences (yes, Interactive Voice Response, or IVR) remain the norm in today’s contact centers. Enter Large Speech Models (LSMs). Generative AI has a more talkative cousin to LLMs that offers similar advantages and possibilities, except that users can talk to the assistant over the phone.

Over the last few months, the IBM Watsonx and IBM Research development teams have been working to create a new, cutting-edge Large Speech Model (LSM). LSMs are based on transformer technology and need large volumes of training data and many model parameters to deliver accurate speech recognition. IBM’s LSM is designed with customer care use cases in mind, such as real-time call transcription and self-service phone assistants. It delivers highly accurate transcriptions out of the box, helping to provide a seamless customer experience.

IBM is thrilled to announce the launch of new LSMs in English and Japanese, currently available only in closed beta to Watson Speech to Text and Watsonx Assistant phone customers.

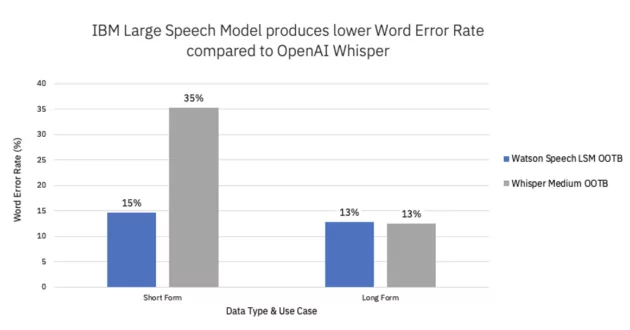

IBM could discuss these models’ virtues endlessly, but ultimately, performance is what matters. According to internal benchmarking, the new LSM outperforms OpenAI’s Whisper model on short-form English use cases, making it IBM’s most accurate speech model to date. When IBM compared the out-of-the-box performance of its English LSM with Whisper across five real customer use cases over the phone, the LSM’s Word Error Rate (WER) was 42% lower than Whisper’s.
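WER is the standard speech-recognition metric: the number of word substitutions (S), deletions (D), and insertions (I) needed to turn a recognizer’s output into a reference transcript, divided by the number of words in the reference (N). The minimal Python sketch below computes it with a word-level edit distance and shows what a "42% lower" WER means as a relative reduction. The function names and sample sentences are hypothetical illustrations, not IBM’s evaluation code or data, and real evaluations usually normalize case and punctuation first, which this sketch does not do.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (S + D + I) / N via a word-level Levenshtein distance.

    Hypothetical helper for illustration; it only splits on whitespace
    and applies no text normalization.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)  # curr[0]: delete all i reference words
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of ref[i-1]
                curr[j - 1] + 1,     # insertion of hyp[j-1]
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer is lower than baseline_wer."""
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    ref = "please reset my account password"
    print(word_error_rate(ref, "please reset my count password"))     # 0.2 (1 substitution / 5 words)
    print(word_error_rate(ref, "please reset account password now"))  # 0.4 (1 deletion + 1 insertion)
    # Hypothetical WERs: 10% for a baseline, 5.8% for a new model
    print(f"{relative_reduction(0.10, 0.058):.0%}")                   # 42%
```

For example, if a baseline model had a WER of 10%, a WER 42% lower would be 5.8%. These figures are hypothetical and only illustrate the arithmetic behind the comparison.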

With one-fifth as many parameters as the Whisper model, IBM’s LSM processes audio ten times faster on the same hardware. When streaming, the LSM finishes processing as soon as the audio ends, whereas Whisper processes audio in blocks (for example, 30 seconds at a time). Consider an audio file shorter than 30 seconds, say 12 seconds: IBM’s LSM finishes processing when the 12 seconds of audio end, while Whisper pads the clip with silence and processes a full 30-second block.
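The 12-second example comes down to simple arithmetic. The Python sketch below is a simplified model, not either product’s implementation: it assumes a fixed 30-second block (as in the example), ignores compute time and any overlap between blocks, and uses hypothetical function names. A block-mode recognizer pads the final block with silence (zero-valued samples), so it always processes a whole number of blocks, while a streaming recognizer processes only the audio it actually receives.

```python
import math

BLOCK_SECONDS = 30.0  # assumed fixed block length, taken from the example in the text


def block_mode_processed_seconds(audio_seconds: float, block_seconds: float = BLOCK_SECONDS) -> float:
    """Audio a block-mode recognizer processes: the clip is padded with
    silence up to a whole number of fixed-length blocks (hypothetical model)."""
    if audio_seconds <= 0:
        return 0.0
    return math.ceil(audio_seconds / block_seconds) * block_seconds


def streaming_processed_seconds(audio_seconds: float) -> float:
    """Audio a streaming recognizer processes: it stops when the audio stops."""
    return max(audio_seconds, 0.0)


def padding_seconds(audio_seconds: float, block_seconds: float = BLOCK_SECONDS) -> float:
    """Silence added to fill out the last block in block mode."""
    return block_mode_processed_seconds(audio_seconds, block_seconds) - streaming_processed_seconds(audio_seconds)


def pad_to_blocks(samples: list[float], sample_rate: int, block_seconds: float = BLOCK_SECONDS) -> list[float]:
    """Append zero-valued (silent) samples so the length is a multiple of one block."""
    block_len = int(sample_rate * block_seconds)
    remainder = len(samples) % block_len
    if remainder == 0:
        return list(samples)
    return list(samples) + [0.0] * (block_len - remainder)


if __name__ == "__main__":
    clip = 12.0  # the 12-second example from the text
    print(block_mode_processed_seconds(clip))  # 30.0
    print(streaming_processed_seconds(clip))   # 12.0
    print(padding_seconds(clip))               # 18.0
```

Under this model the padding overhead is proportionally largest for short clips: a 12-second clip carries 18 seconds of silence, while a 45-second clip is padded to 60 seconds. That is why the streaming behavior matters most for short-form audio.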

These tests show that IBM’s LSM is highly accurate on short-form audio. But there’s more. The chart below shows that the LSM performed comparably to Whisper’s accuracy on long-form use cases (such as call analytics and call summarization).

How do you begin using these models?

After you apply for IBM’s closed beta user program, IBM’s Product Management team will contact you to arrange a call. Because the IBM LSM is in closed beta, some of its features and functionality are still under development.
